Ahmet E. Tekden

Object and Relation Centric Representations for Push Effect Prediction

Feb 03, 2021

Abstract: Pushing is an essential non-prehensile manipulation skill used for tasks ranging from pre-grasp manipulation to scene rearrangement and reasoning about object relations in the scene; pushing actions have therefore been widely studied in robotics. The effective use of pushing actions often requires an understanding of the dynamics of the manipulated objects and adaptation to the discrepancies between prediction and reality. For this reason, effect prediction and parameter estimation with pushing actions have been heavily investigated in the literature. However, current approaches are limited because they either model systems with a fixed number of objects or use image-based representations whose outputs are not very interpretable and quickly accumulate errors. In this paper, we propose a graph neural network based framework for effect prediction and parameter estimation of pushing actions by modeling object relations based on contacts or articulations. Our framework is validated in both real and simulated environments containing multi-part objects of different shapes connected via different types of joints, as well as objects with different masses. Our approach enables the robot to predict and adapt the effect of a pushing action as it observes the scene. Further, we demonstrate 6D effect prediction in the lever-up action in the context of robot-based hard-disk disassembly.

Adaptive Conditional Neural Movement Primitives via Representation Sharing Between Supervised and Reinforcement Learning

Mar 25, 2020

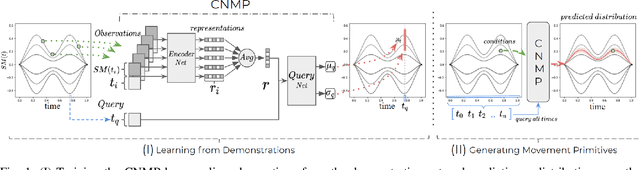

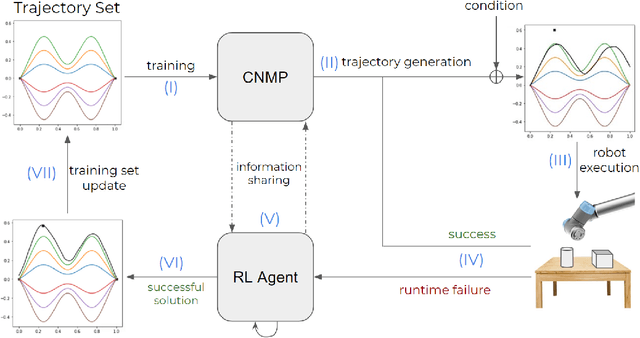

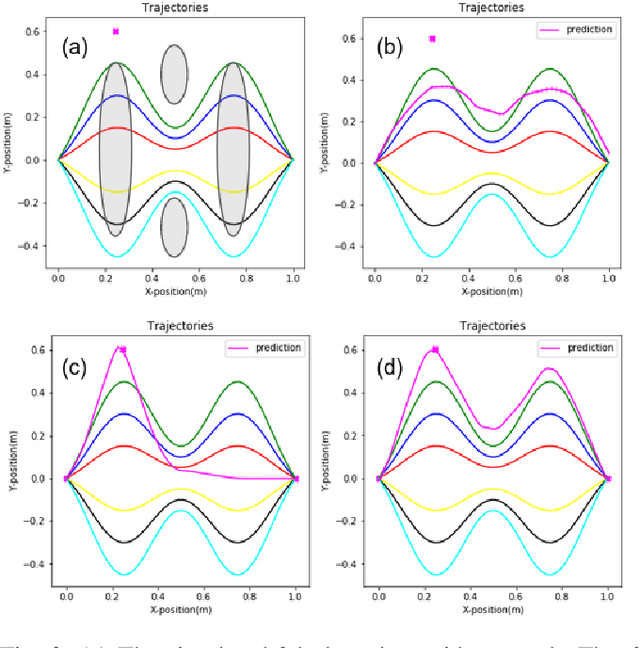

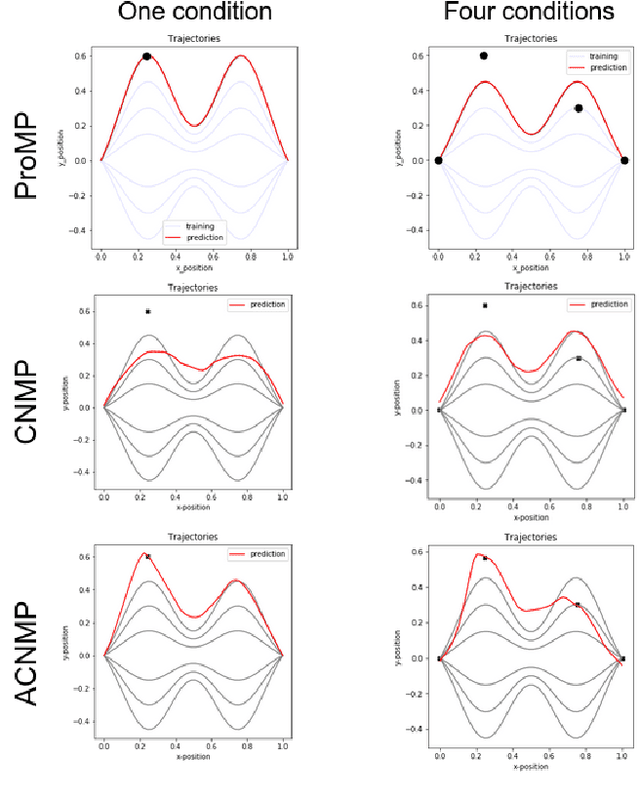

Abstract: Learning by Demonstration provides a sample-efficient way to equip robots with complex sensorimotor skills in a supervised manner. Several movement primitive representations can be used for flexible motor representation and learning. A recent state-of-the-art approach is Conditional Neural Movement Primitives (CNMP), which can learn non-linear relations between environment parameters and complex multi-modal trajectories from a few expert demonstrations by forming powerful latent space representations. In this study, to improve the applicability of CNMP to changing tasks and/or environments, we couple it with a reinforcement learning agent that exploits the representations formed by the original CNMP network and learns to generate synthetic demonstrations for further learning. This enables the CNMP network to generalize to new environments by adapting its internal representations. In the current implementation, the reinforcement learning agent is triggered when a failure in task execution is detected, and the CNMP is trained with the newly discovered demonstration (trajectory), which shares essential characteristics with the original demonstrations due to the representation sharing. As a result, the overall system increases its capacity and handles situations in which the initial CNMP network cannot produce a useful trajectory. To show the validity of our proposed model, we compare our approach with the original CNMP work and other movement primitive approaches. Furthermore, we present experimental results from the implementation of the proposed model on real robotic setups, which indicate the applicability of our approach as an effective adaptive learning by demonstration system.

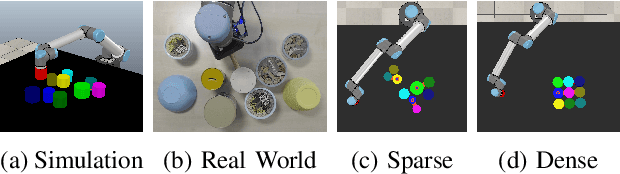

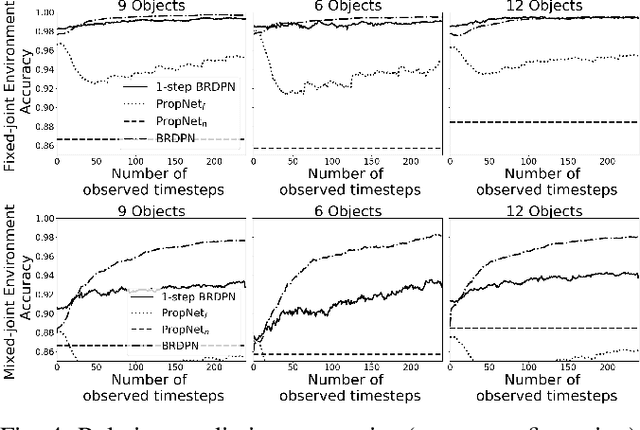

Belief Regulated Dual Propagation Nets for Learning Action Effects on Articulated Multi-Part Objects

Sep 18, 2019

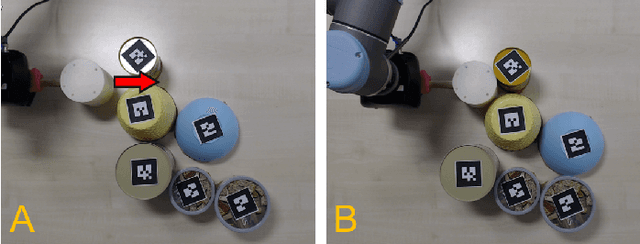

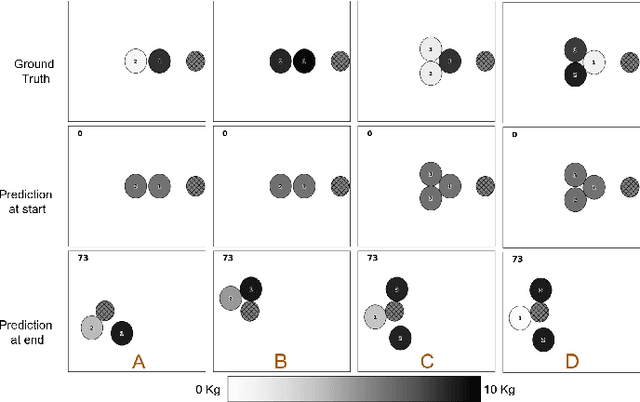

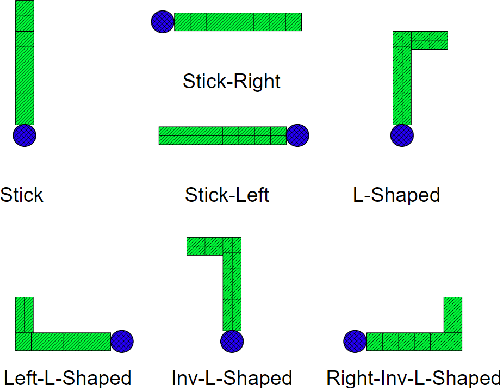

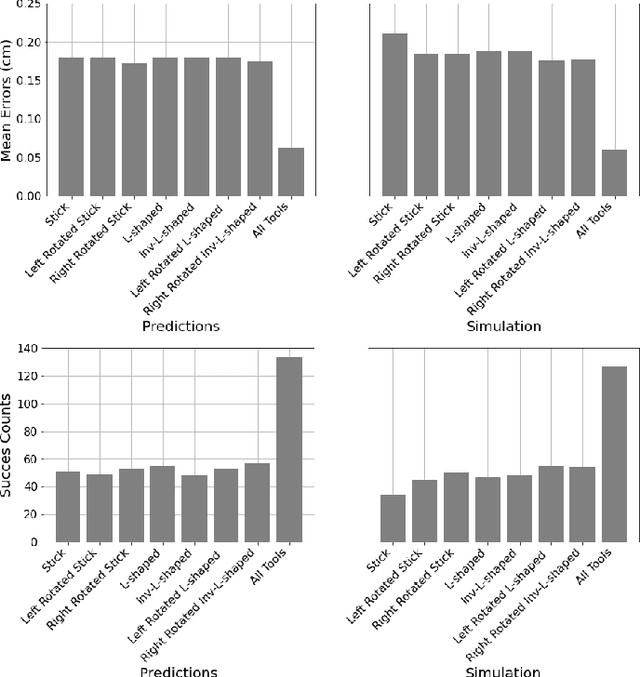

Abstract: In recent years, graph neural networks have been successfully applied for learning the dynamics of complex and partially observable physical systems. However, their use in the robotics domain is, to date, still limited. In this paper, we introduce Belief Regulated Dual Propagation Networks (BRDPN), a general-purpose learnable physics engine, which enables a robot to predict the effects of its actions in scenes containing groups of articulated multi-part objects. Specifically, our framework extends the recently proposed propagation networks (PropNets) and consists of two complementary components, a physics predictor and a belief regulator. While the former predicts the future states of the object(s) manipulated by the robot, the latter constantly corrects the robot's knowledge regarding the objects and their relations. Our results showed that, after being trained in a simulator, the robot could reliably predict the consequences of its actions at the object trajectory level and exploit its own interaction experience to correct its belief about the state of the world, enabling better predictions in partially observable environments. Furthermore, the trained model was transferred to the real world, and its capabilities were verified in correctly predicting trajectories of pushed interacting objects whose joint relations were initially unknown. We compared our BRDPN against the original PropNets and showed that BRDPN can perform consistently well even if the relations between the objects are not explicitly given but instead predicted from observations.
