M. Álvaro Berbís

3D Reconstruction and Alignment by Consumer RGB-D Sensors and Fiducial Planar Markers for Patient Positioning in Radiation Therapy

Mar 22, 2021

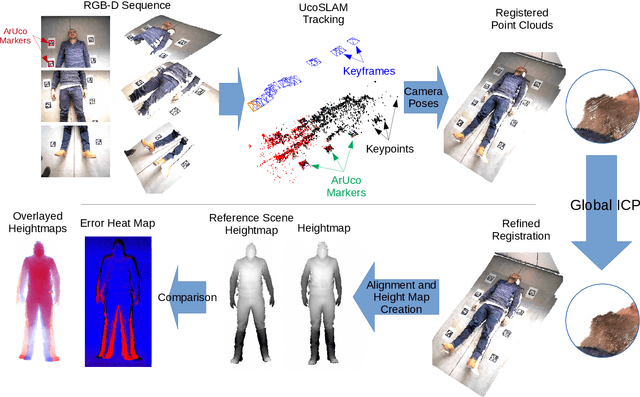

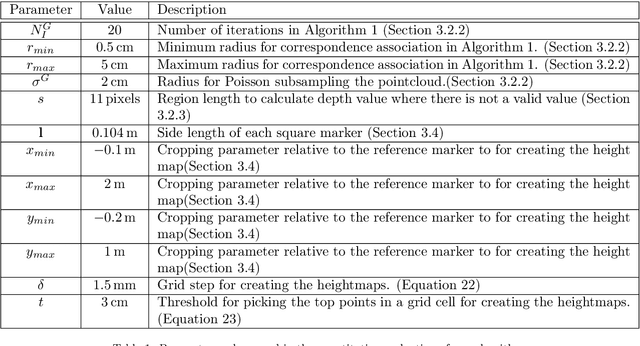

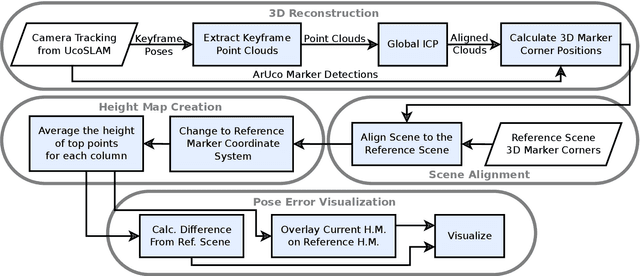

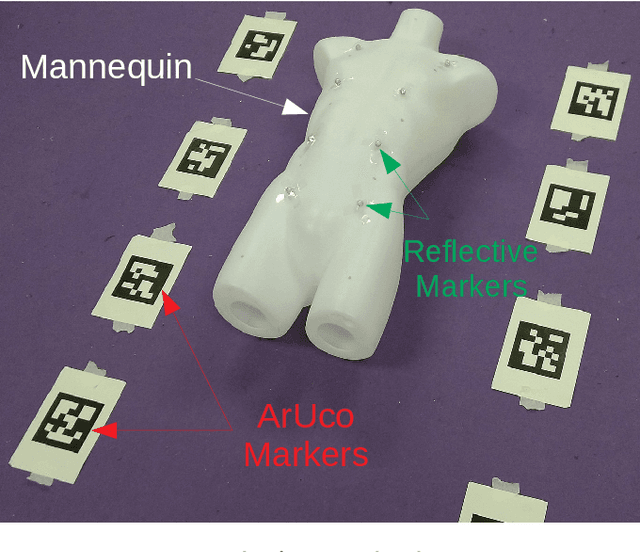

Abstract: BACKGROUND AND OBJECTIVE: Patient positioning is a crucial step in radiation therapy, for which non-invasive methods have been developed based on surface reconstruction using optical 3D imaging. However, most solutions need expensive specialized hardware and a careful calibration procedure that must be repeated over time. This paper proposes a fast and cheap patient positioning method based on inexpensive consumer-level RGB-D sensors. METHODS: The proposed method relies on a 3D reconstruction approach that fuses, in real time, artificial and natural visual landmarks recorded from a hand-held RGB-D sensor. The video sequence is transformed into a set of keyframes with known poses, which are later refined to obtain a realistic 3D reconstruction of the patient. The use of artificial landmarks allows our method to automatically align the reconstruction to a reference one, without the need to calibrate the system with respect to the linear accelerator coordinate system. RESULTS: The experiments conducted show that our method obtains a median error of 1 cm in translation and 1 degree in rotation with respect to the reference pose. Additionally, the proposed method displays, as visual output, the overlaid poses (from the reference and the current scene) and an error map that can be used to correct the patient's current pose to match the reference pose. CONCLUSIONS: A novel approach to obtain 3D body reconstructions for patient positioning without requiring expensive hardware or dedicated graphics cards is proposed. The method can be used to align in real time the patient's current pose to a preview pose, which is a relevant step in radiation therapy.
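As an illustration of the translational and rotational errors reported above, the following minimal sketch computes both between a reference pose and a current pose. It assumes each pose is a 4x4 rigid transform stored as nested lists; the names `pose_error` and `rot_z` are hypothetical helpers introduced here, not taken from the paper, and the paper's actual evaluation protocol may differ.

```python
import math
import statistics

def pose_error(ref, cur):
    """Return (translation_error, rotation_error_deg) between two 4x4 rigid poses.

    Assumption: poses are row-major nested lists whose upper-left 3x3 block is a
    rotation matrix and whose last column holds the translation.
    """
    # Translation error: Euclidean distance between the translation vectors.
    t_err = math.sqrt(sum((ref[i][3] - cur[i][3]) ** 2 for i in range(3)))
    # trace(R_ref^T R_cur) equals the element-wise product sum of both rotations.
    trace = sum(ref[i][j] * cur[i][j] for i in range(3) for j in range(3))
    # Angle of the relative rotation; clamp to guard against rounding.
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return t_err, math.degrees(math.acos(cos_angle))

def rot_z(deg, tx=0.0, ty=0.0, tz=0.0):
    """Hypothetical helper: pose rotated about z by `deg` with a translation."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0.0, tx], [s, c, 0.0, ty], [0.0, 0.0, 1.0, tz], [0.0, 0.0, 0.0, 1.0]]

# Usage: errors of several made-up trials against one reference, then the median.
reference = rot_z(0.0)
trials = [rot_z(0.5, tx=0.005), rot_z(1.0, tx=0.01), rot_z(2.0, tx=0.02)]
errors = [pose_error(reference, t) for t in trials]
median_translation = statistics.median(e[0] for e in errors)
median_rotation = statistics.median(e[1] for e in errors)
```

The trial values are invented for the example; translations are in metres, so the median here corresponds to 1 cm and 1 degree.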

Probabilistic combination of eigenlungs-based classifiers for COVID-19 diagnosis in chest CT images

Mar 04, 2021

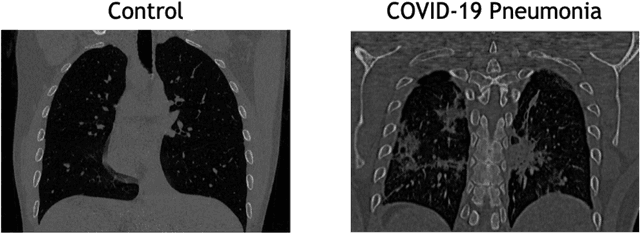

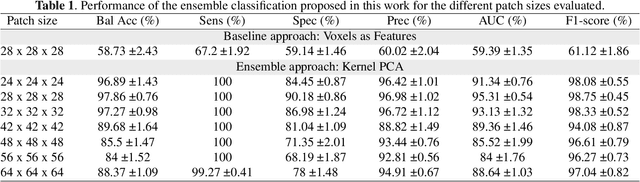

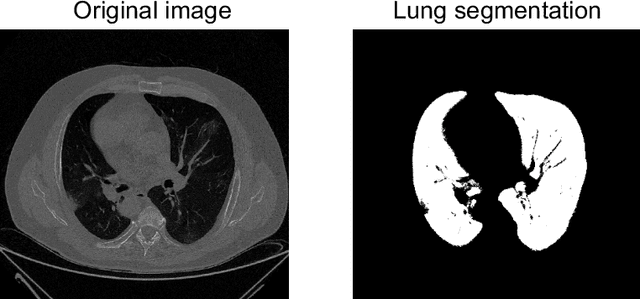

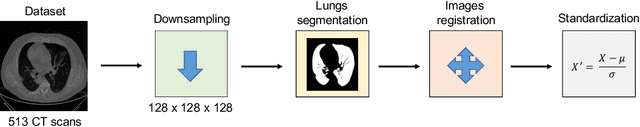

Abstract: The outbreak of the COVID-19 (Coronavirus disease 2019) pandemic has changed the world. According to the World Health Organization (WHO), there have been more than 100 million confirmed cases of COVID-19, including more than 2.4 million deaths. Early detection of the disease is extremely important, and the use of medical imaging such as chest X-ray (CXR) and chest Computed Tomography (CCT) has proved to be an excellent solution. However, this process requires clinicians to perform a manual and time-consuming task, which is not ideal when trying to speed up the diagnosis. In this work, we propose an ensemble classifier based on probabilistic Support Vector Machines (SVM) in order to identify pneumonia patterns while providing information about the reliability of the classification. Specifically, each CCT scan is divided into cubic patches, and the features contained in each of them are extracted by applying kernel PCA. The use of base classifiers within an ensemble allows our system to identify the pneumonia patterns regardless of their size or location. The decisions for the individual patches are then combined into a global one according to the reliability of each individual classification: the lower the uncertainty, the higher the contribution. Performance is evaluated in a real scenario, yielding an accuracy of 97.86%. The high performance obtained and the simplicity of the system (the use of deep learning on CCT images would result in a huge computational cost) demonstrate the applicability of our proposal in a real-world environment.
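The combination rule described above (the lower the uncertainty, the higher the contribution) can be sketched as follows. This is only an illustration under stated assumptions: it takes per-patch pneumonia probabilities as given, measures uncertainty with binary entropy, and weights each patch by one minus its entropy. The abstract does not specify the uncertainty measure or the weighting, so both choices, and the names `binary_entropy` and `combine_patches`, are hypothetical.

```python
import math

def binary_entropy(p):
    """Entropy in bits of a Bernoulli(p) prediction: 0 = certain, 1 = maximally uncertain."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))

def combine_patches(probs):
    """Combine per-patch probabilities into one global probability.

    Assumption: each patch is weighted by (1 - entropy), so confident patches
    dominate and a patch at p = 0.5 contributes nothing.
    """
    weights = [1.0 - binary_entropy(p) for p in probs]
    total = sum(weights)
    if total == 0.0:
        # Every patch is maximally uncertain: fall back to an uninformative 0.5.
        return 0.5
    return sum(w * p for w, p in zip(weights, probs)) / total

# Usage: one confident patch outweighs two uninformative ones.
global_prob = combine_patches([0.99, 0.5, 0.5])
is_pneumonia = global_prob >= 0.5
```

A plain average of the same three values would give about 0.66, whereas the weighted rule follows the single confident patch.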

Joint Scene and Object Tracking for Cost-Effective Augmented Reality Assisted Patient Positioning in Radiation Therapy

Oct 05, 2020

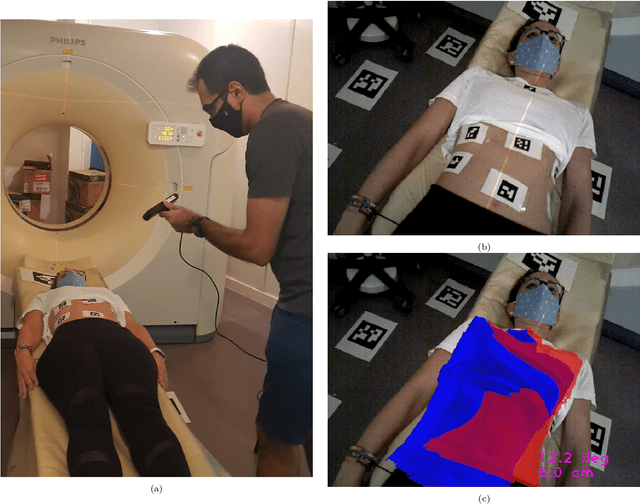

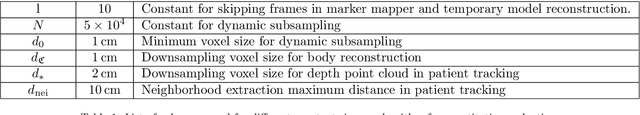

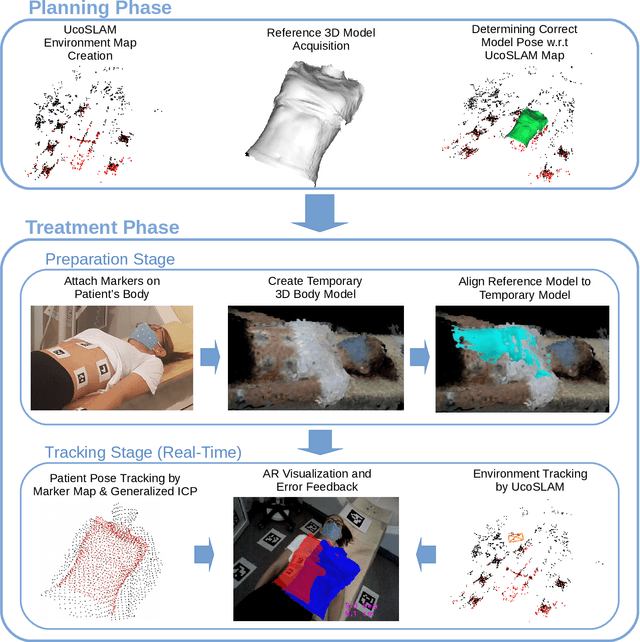

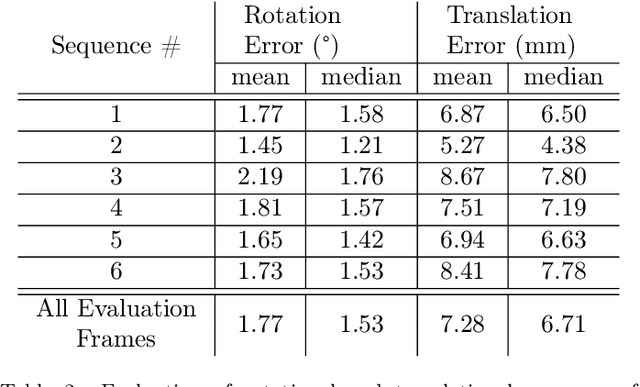

Abstract: Background and Objective: Research in the field of Augmented Reality (AR) for patient positioning in radiation therapy is scarce. We propose an efficient and cost-effective algorithm for tracking the scene and the patient to interactively assist the patient positioning process by providing visual feedback to the operator. Methods: We take advantage of the marker mapper algorithm, combined with other steps including generalized ICP, to track the patient. We track the environment using the UcoSLAM algorithm. The alignment between the 3D reference model and the body marker map is calculated using our efficient body reconstruction algorithm. Results: Our quantitative evaluation shows that we were able to achieve an average rotational error of 1.77 deg and a translational error of 7.28 mm. Our algorithm ran at an average frame rate of 19 fps. Furthermore, the qualitative results demonstrate the usefulness of our algorithm in patient positioning on different human subjects. Conclusion: Since our algorithm achieves a relatively high frame rate and accuracy on a regular laptop without a dedicated GPU, it is a very cost-effective AR-based patient positioning method.
