R. Medina-Carnicer

Simultaneous Multi-View Camera Pose Estimation and Object Tracking with Square Planar Markers

Mar 16, 2021

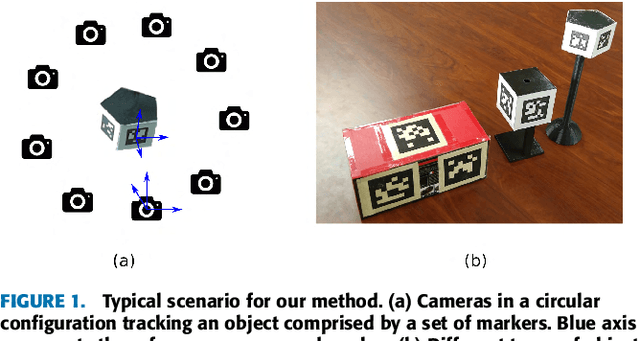

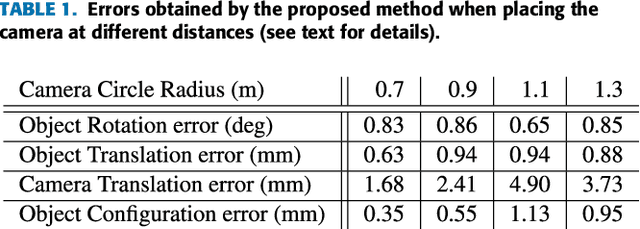

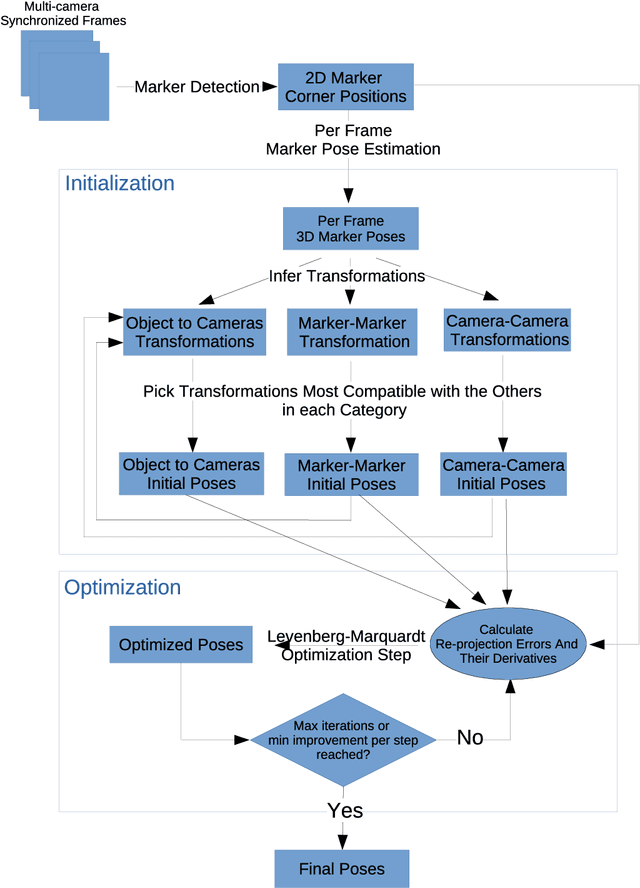

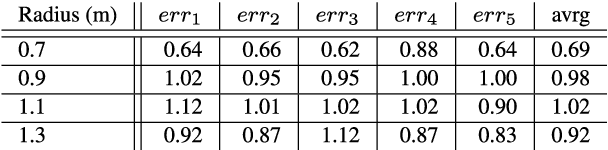

Abstract: Object tracking is a key aspect of many applications, such as augmented reality in medicine (e.g. tracking a surgical instrument) and robotics. Square planar markers have become popular tools for tracking since their pose can be estimated from their four corners. While using a single marker and a single camera limits the working area considerably, using multiple markers attached to an object requires estimating their relative position, which is not trivial when high-accuracy tracking is needed. Likewise, using multiple cameras requires estimating their extrinsic parameters, a tedious process that must be repeated whenever a camera is moved. This work proposes a novel method to solve these problems simultaneously. From a video sequence showing a rigid set of planar markers recorded from multiple cameras, the proposed method automatically obtains the three-dimensional configuration of the markers, the extrinsic parameters of the cameras, and the relative pose between the markers and the cameras at each frame. Our experiments show that the approach estimates these parameters with high accuracy using low-resolution cameras. Once the parameters are obtained, the object can be tracked in real time at a low computational cost. The proposed method is a step forward in the development of cost-effective solutions for object tracking.
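To make the "relative position" of two markers concrete, here is a minimal sketch of how the pose of one marker in another marker's frame follows from their poses in a shared camera frame. It is not the paper's method, which jointly optimizes markers, cameras, and per-frame poses. The function names (`rot_z`, `invert_pose`, `compose`, `relative_pose`) and the rotation-matrix-plus-translation representation are assumptions chosen for illustration.

```python
import math


def rot_z(theta_rad):
    """3x3 rotation about the z axis (hypothetical helper for building test poses)."""
    c, s = math.cos(theta_rad), math.sin(theta_rad)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def invert_pose(R, t):
    """Invert the rigid transform x_out = R * x + t."""
    R_inv = [[R[j][i] for j in range(3)] for i in range(3)]  # transpose of R
    t_inv = [-sum(R_inv[i][k] * t[k] for k in range(3)) for i in range(3)]
    return R_inv, t_inv


def compose(Ra, ta, Rb, tb):
    """Return the transform that applies (Rb, tb) first and (Ra, ta) second."""
    R = [[sum(Ra[i][k] * Rb[k][j] for k in range(3)) for j in range(3)]
         for i in range(3)]
    t = [sum(Ra[i][k] * tb[k] for k in range(3)) + ta[i] for i in range(3)]
    return R, t


def relative_pose(R_c_m1, t_c_m1, R_c_m2, t_c_m2):
    """Pose of marker 2 in marker 1's frame: T_m1_m2 = inv(T_c_m1) * T_c_m2.

    Both inputs are marker-to-camera poses observed in the same frame.
    """
    R_inv, t_inv = invert_pose(R_c_m1, t_c_m1)
    return compose(R_inv, t_inv, R_c_m2, t_c_m2)
```

Because the markers are rigidly attached to the object, this relative pose should be the same in every frame and from every camera. A single-frame estimate like this one is noisy, which is one reason a joint estimate over the whole sequence is attractive.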

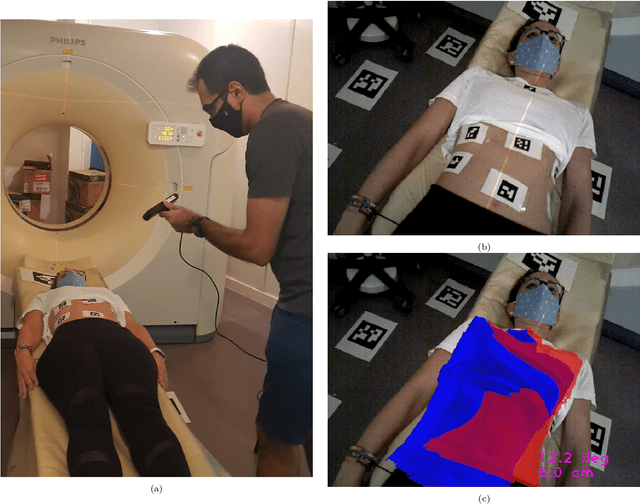

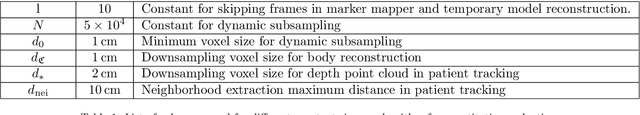

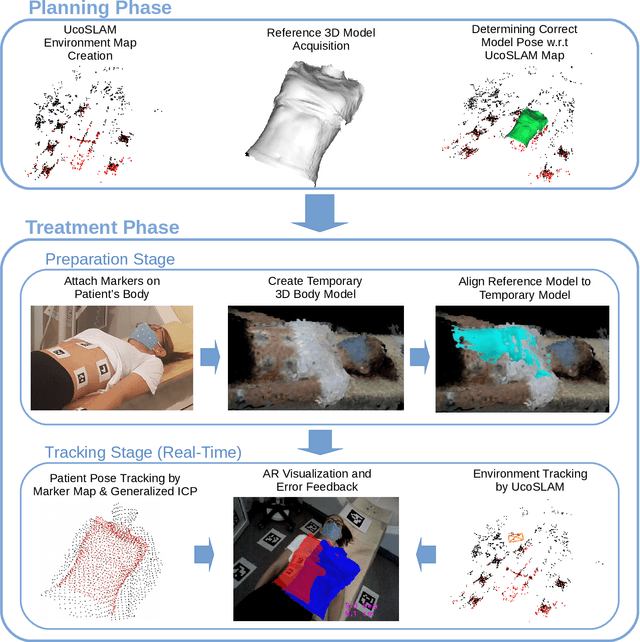

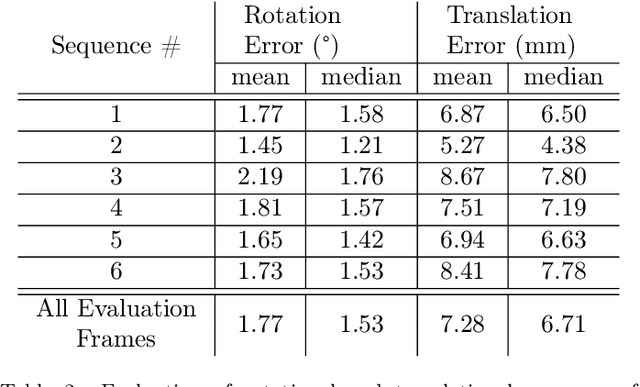

Joint Scene and Object Tracking for Cost-Effective Augmented Reality Assisted Patient Positioning in Radiation Therapy

Oct 05, 2020

Abstract: $\textrm{Background and Objective:}$ Research on Augmented Reality (AR) for patient positioning in radiation therapy is scarce. We propose an efficient and cost-effective algorithm that tracks the scene and the patient to interactively assist the patient positioning process by providing visual feedback to the operator. $\textrm{Methods:}$ We track the patient using the marker mapper algorithm combined with other steps, including generalized ICP. We track the environment using the UcoSLAM algorithm. The alignment between the 3D reference model and the body marker map is calculated with our efficient body reconstruction algorithm. $\textrm{Results:}$ Our quantitative evaluation shows an average rotational error of 1.77 deg and a translational error of 7.28 mm. Our algorithm ran at an average frame rate of 19 fps. Furthermore, the qualitative results demonstrate the usefulness of our algorithm for patient positioning on different human subjects. $\textrm{Conclusion:}$ Since our algorithm achieves a relatively high frame rate and accuracy on a regular laptop without a dedicated GPU, it is a very cost-effective AR-based patient positioning method.
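The abstract reports rotational and translational errors but does not say how they are defined. As a hedged illustration, the sketch below uses one common convention: the rotation error is the angle of the residual rotation between the estimated and reference orientations, and the translation error is the Euclidean distance between positions. The function names and this choice of metric are assumptions, not taken from the paper.

```python
import math


def rotation_error_deg(R_est, R_ref):
    """Angle (degrees) of the residual rotation R_est^T * R_ref.

    Uses trace(R_est^T * R_ref) = sum of elementwise products of the two matrices.
    """
    trace = sum(R_est[k][i] * R_ref[k][i] for k in range(3) for i in range(3))
    # Clamp to [-1, 1] to guard against rounding just outside acos's domain.
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.degrees(math.acos(cos_angle))


def translation_error(t_est, t_ref):
    """Euclidean distance between two positions, in the units of the inputs."""
    return math.dist(t_est, t_ref)
```

With positions expressed in millimetres, `translation_error` returns millimetres directly. Averaging both quantities over all evaluated frames would give summary figures of the same kind as those quoted above.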

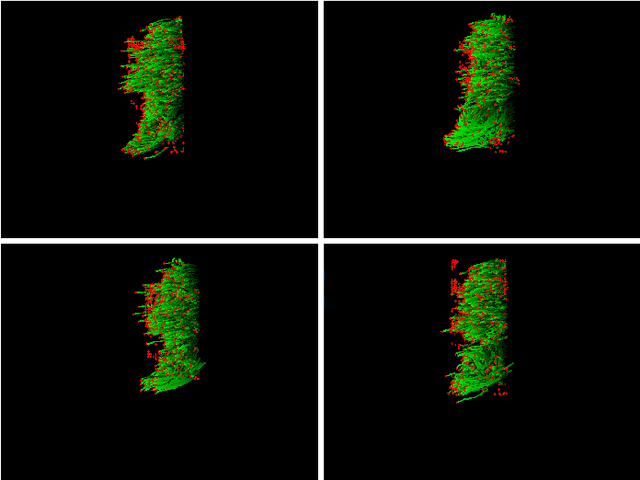

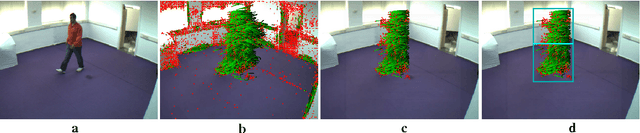

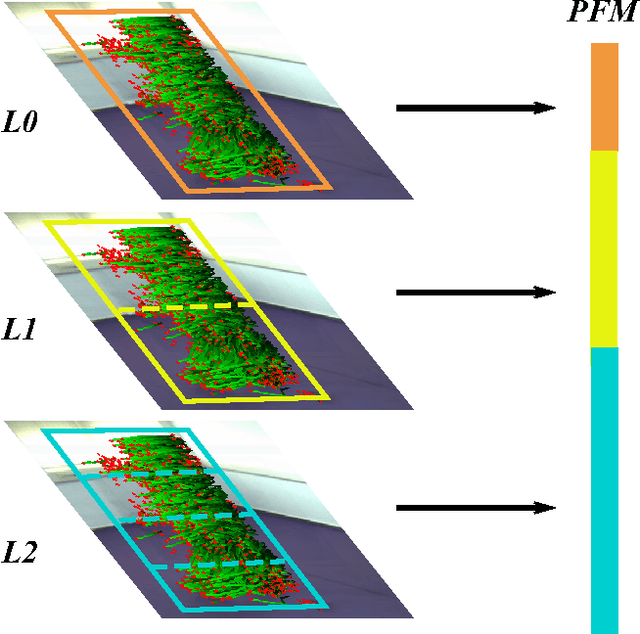

Pyramidal Fisher Motion for Multiview Gait Recognition

Mar 27, 2014

Abstract: The goal of this paper is to identify individuals by analyzing their gait. Instead of using binary silhouettes as input data (as done in many previous works), we propose and evaluate the use of motion descriptors based on densely sampled short-term trajectories. We take advantage of state-of-the-art people detectors to define custom spatial configurations of the descriptors around the target person, thus obtaining a pyramidal representation of the gait motion. The local motion features (described by the Divergence-Curl-Shear descriptor) extracted from the different spatial areas of the person are combined into a single high-level gait descriptor by using the Fisher Vector encoding. The proposed approach, coined Pyramidal Fisher Motion, is experimentally validated on the recent 'AVA Multiview Gait' dataset. The results show that this new approach is promising for the problem of gait recognition.
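The abstract names the Divergence-Curl-Shear descriptor without spelling out the quantities involved. The sketch below computes the three kinematic quantities at a single pixel of a 2D motion field using their standard definitions and central differences. It is an illustration only: the full descriptor is built on trajectories and involves further steps not shown here, and the function name `dcs_at` and the nested-list flow representation are assumptions.

```python
import math


def dcs_at(u, v, y, x):
    """Divergence, curl and shear of the flow (u, v) at interior pixel (y, x).

    u[y][x] and v[y][x] hold the horizontal and vertical motion components.
    Derivatives are central differences with unit pixel spacing.
    """
    du_dx = (u[y][x + 1] - u[y][x - 1]) / 2.0
    du_dy = (u[y + 1][x] - u[y - 1][x]) / 2.0
    dv_dx = (v[y][x + 1] - v[y][x - 1]) / 2.0
    dv_dy = (v[y + 1][x] - v[y - 1][x]) / 2.0

    divergence = du_dx + dv_dy   # local expansion or contraction
    curl = dv_dx - du_dy         # local rotation in the image plane
    hyp1 = du_dx - dv_dy         # first hyperbolic term
    hyp2 = du_dy + dv_dx         # second hyperbolic term
    shear = math.hypot(hyp1, hyp2)
    return divergence, curl, shear
```

As a sanity check, a purely expanding field (`u = x`, `v = y`) has non-zero divergence with zero curl and shear, while a purely rotating field (`u = -y`, `v = x`) has non-zero curl with zero divergence and shear.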
