Andrés Ortiz

EEG Connectivity Analysis Using Denoising Autoencoders for the Detection of Dyslexia

Nov 23, 2023

Abstract:The Temporal Sampling Framework (TSF) theorizes that the characteristic phonological difficulties of dyslexia are caused by atypical oscillatory sampling at one or more temporal rates. The LEEDUCA study conducted a series of electroencephalography (EEG) experiments on children listening to amplitude-modulated (AM) noise at slow-rhythmic prosodic (0.5-1 Hz), syllabic (4-8 Hz) or phonemic (12-40 Hz) rates, aimed at detecting differences in the perception of oscillatory sampling that could be associated with dyslexia. The purpose of this work is to check whether these differences exist and how they relate to children's performance in different language and cognitive tasks commonly used to detect dyslexia. To this end, temporal and spectral inter-channel EEG connectivity was estimated, and a denoising autoencoder (DAE) was trained to learn a low-dimensional representation of the connectivity matrices. This representation was studied via correlation and classification analysis, which showed that dyslexic subjects can be detected with an accuracy higher than 0.8 and a balanced accuracy around 0.7. Some features of the DAE representation were significantly correlated ($p<0.005$) with children's performance in language and cognitive tasks of the phonological hypothesis category, such as phonological awareness and rapid symbolic naming, as well as with reading efficiency and reading comprehension. Finally, a deeper analysis of the adjacency matrix revealed a reduced bilateral connection between electrodes of the temporal lobe (roughly the primary auditory cortex) in subjects with developmental dyslexia (DD), as well as an increased connectivity of the F7 electrode, placed roughly over Broca's area. These results pave the way for a complementary assessment of dyslexia using more objective methodologies such as EEG.

* 19 pages, 6 figures
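
The pipeline summarized in the abstract above (inter-channel connectivity followed by a denoising autoencoder) can be illustrated with a minimal NumPy sketch. Everything specific here is an assumption made for illustration, not the authors' implementation: Pearson correlation stands in for the connectivity measure, the autoencoder has a single `tanh` hidden layer, the corruption is additive Gaussian noise, and the names `connectivity_features`, `train_dae` and `encode` are hypothetical.

```python
import numpy as np


def connectivity_features(eeg):
    """Vectorized inter-channel connectivity, one row per subject.

    eeg: array of shape (subjects, channels, samples).
    Assumption: Pearson correlation is used as the connectivity measure.
    """
    rows, cols = np.triu_indices(eeg.shape[1], k=1)
    return np.stack([np.corrcoef(subject)[rows, cols] for subject in eeg])


def train_dae(x, n_latent=4, noise_std=0.1, lr=0.01, epochs=300, seed=0):
    """Train a one-hidden-layer denoising autoencoder with gradient descent."""
    rng = np.random.default_rng(seed)
    n, d = x.shape
    w_enc = rng.normal(0.0, 0.1, (d, n_latent))
    b_enc = np.zeros(n_latent)
    w_dec = rng.normal(0.0, 0.1, (n_latent, d))
    b_dec = np.zeros(d)
    losses = []
    for _ in range(epochs):
        # Corrupt the input, but reconstruct the clean connectivity vector.
        noisy = x + rng.normal(0.0, noise_std, x.shape)
        hidden = np.tanh(noisy @ w_enc + b_enc)
        recon = hidden @ w_dec + b_dec
        diff = recon - x
        losses.append(0.5 * np.mean(np.sum(diff ** 2, axis=1)))
        # Backpropagate the mean squared reconstruction error.
        g_recon = diff / n
        g_hidden = (g_recon @ w_dec.T) * (1.0 - hidden ** 2)
        w_dec -= lr * hidden.T @ g_recon
        b_dec -= lr * g_recon.sum(axis=0)
        w_enc -= lr * noisy.T @ g_hidden
        b_enc -= lr * g_hidden.sum(axis=0)
    return (w_enc, b_enc), losses


def encode(encoder, x):
    """Low-dimensional representation of clean connectivity vectors."""
    w_enc, b_enc = encoder
    return np.tanh(x @ w_enc + b_enc)
```

The rows returned by `encode` are the kind of low-dimensional features that could then be correlated with behavioural scores or passed to a classifier.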

Convolutional Neural Networks for Neuroimaging in Parkinson's Disease: Is Preprocessing Needed?

Nov 21, 2023

Abstract:Spatial and intensity normalization are nowadays a prerequisite for neuroimaging analysis. Influenced by voxel-wise and other univariate comparisons, where these corrections are key, they are commonly applied to any type of analysis and imaging modality. Nuclear imaging modalities such as PET-FDG or FP-CIT SPECT, a common modality used in Parkinson's Disease diagnosis, are especially dependent on intensity normalization. However, these steps are computationally expensive and, furthermore, they may introduce deformations in the images, altering the information contained in them. Convolutional Neural Networks (CNNs), for their part, introduce position invariance to pattern recognition, and have been proven to classify objects regardless of their orientation, size, angle, etc. Therefore, a question arises: how well can CNNs account for spatial and intensity differences when analysing nuclear brain imaging? Are spatial and intensity normalization still needed? To answer this question, we have trained four different CNN models based on well-established architectures, with and without different spatial and intensity normalization preprocessing steps. The results show that a sufficiently complex model such as our three-dimensional version of AlexNet can effectively account for spatial differences, achieving a diagnostic accuracy of 94.1% with an area under the ROC curve of 0.984. The visualization of the differences via saliency maps shows that these models correctly find patterns that match those reported in the literature, without the need to apply any complex spatial normalization procedure. However, intensity normalization, and its type, proves very influential on the results and accuracy of the trained model, and must therefore be carefully accounted for.

* 19 pages, 7 figures

APIS: A paired CT-MRI dataset for ischemic stroke segmentation challenge

Sep 26, 2023

Abstract:Stroke is the second leading cause of mortality worldwide. Immediate attention and diagnosis play a crucial role in patient prognosis. The key to diagnosis consists in localizing and delineating brain lesions. Standard stroke examination protocols include an initial evaluation from a non-contrast CT (NCCT) scan to discriminate between hemorrhage and ischemia. However, non-contrast CTs may lack sensitivity in detecting subtle ischemic changes in the acute phase. As a result, complementary diffusion-weighted MRI (DWI) studies are captured to provide valuable insights, making it possible to recover and quantify stroke lesions. This work introduced APIS, the first paired public dataset with NCCT and ADC studies of acute ischemic stroke patients. APIS was presented as a challenge at the 20th IEEE International Symposium on Biomedical Imaging 2023, where researchers were invited to propose new computational strategies that leverage paired data and deal with lesion segmentation over CT sequences. Although all the teams employed specialized deep learning tools, the results suggest that the ischemic stroke segmentation task from NCCT remains challenging. The annotated dataset remains accessible to the public upon registration, inviting the scientific community to address stroke characterization from NCCT guided by paired DWI information.

Tiled sparse coding in eigenspaces for the COVID-19 diagnosis in chest X-ray images

Jun 28, 2021

Abstract:The ongoing crisis of the COVID-19 (Coronavirus disease 2019) pandemic has changed the world. According to the World Health Organization (WHO), 4 million people have died due to this disease, and there have been more than 180 million confirmed cases of COVID-19. The collapse of the health system in many countries has demonstrated the need to develop tools that automate the diagnosis of the disease from medical imaging. Previous studies have used deep learning for this purpose. However, the performance of this alternative depends heavily on the size of the dataset employed for training the algorithm. In this work, we propose a classification framework based on sparse coding in order to identify the pneumonia patterns associated with different pathologies. Specifically, each chest X-ray (CXR) image is partitioned into different tiles. The most relevant features extracted from PCA are then used to build the dictionary within the sparse coding procedure. Once images are transformed and reconstructed from the elements of the dictionary, classification is performed from the reconstruction errors of the individual patches associated with each image. Performance is evaluated in a real scenario that requires simultaneous differentiation among four classes: control vs bacterial pneumonia vs viral pneumonia vs COVID-19. The accuracy when identifying the presence of pneumonia is 93.85%, whereas 88.11% is obtained in the 4-class classification context. The excellent results and the pioneering use of sparse coding in this scenario demonstrate the applicability of this approach as an aid for clinicians in a real-world environment.
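
The tile, dictionary and reconstruction-error steps in the abstract above can be sketched roughly as follows. This is a simplified illustration under several assumptions that the abstract does not confirm: one PCA dictionary is built per class, sparse coding keeps the `k` largest-magnitude coefficients (which is the optimal `k`-sparse code only because PCA atoms are orthonormal), and an image is assigned to the class whose dictionary gives the smallest total error. The function names are hypothetical.

```python
import numpy as np


def tiles(img, size):
    """Split a 2-D image into non-overlapping size x size tiles, flattened."""
    rows, cols = img.shape[0] // size, img.shape[1] // size
    img = img[:rows * size, :cols * size]
    blocks = img.reshape(rows, size, cols, size).swapaxes(1, 2)
    return blocks.reshape(rows * cols, size * size)


def pca_dictionary(tile_rows, n_atoms):
    """Return the tile mean and the leading PCA directions (orthonormal atoms)."""
    mean = tile_rows.mean(axis=0)
    _, _, vt = np.linalg.svd(tile_rows - mean, full_matrices=False)
    return mean, vt[:n_atoms]


def sparse_reconstruction_error(tile_rows, mean, atoms, k):
    """Per-tile error after keeping only the k largest coefficients (k >= 1)."""
    coeffs = (tile_rows - mean) @ atoms.T
    drop = np.argsort(np.abs(coeffs), axis=1)[:, :-k]
    np.put_along_axis(coeffs, drop, 0.0, axis=1)
    recon = coeffs @ atoms + mean
    return np.linalg.norm(tile_rows - recon, axis=1)


def classify(img, dictionaries, size, k):
    """Assumed decision rule: pick the class with the lowest summed tile error."""
    t = tiles(img, size)
    errors = {
        label: sparse_reconstruction_error(t, mean, atoms, k).sum()
        for label, (mean, atoms) in dictionaries.items()
    }
    return min(errors, key=errors.get)
```

In practice the per-tile errors could instead be fed to a separate classifier; the minimum-error rule is used here only to keep the sketch short.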

Temporal EigenPAC for dyslexia diagnosis

Apr 13, 2021

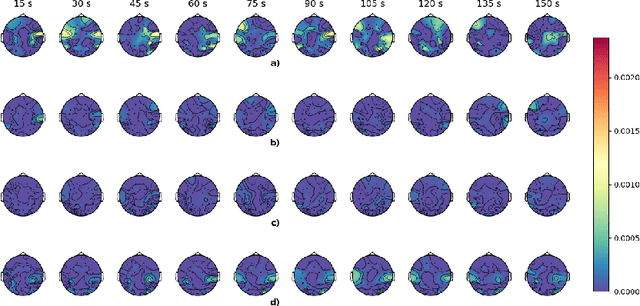

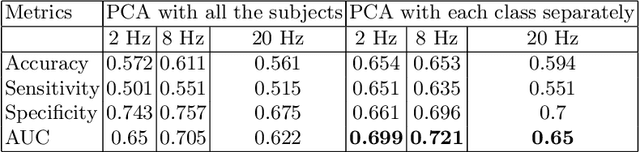

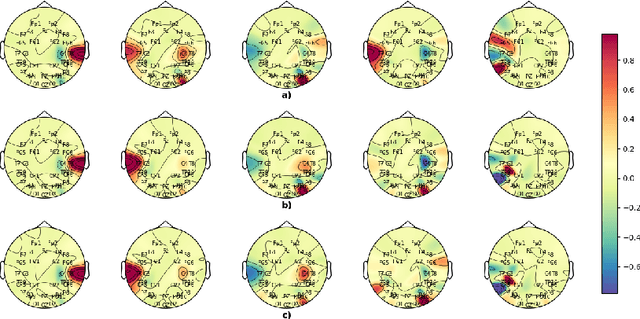

Abstract:Electroencephalography signals make it possible to explore the functional activity of the brain cortex in a non-invasive way. However, the analysis of these signals is not straightforward due to the presence of different artifacts and the very low signal-to-noise ratio. Cross-Frequency Coupling (CFC) methods provide a way to extract information from EEG related to the synchronization among frequency bands. However, CFC methods are usually applied locally, computing the interaction between phase and amplitude at the same electrode. In this work we present a method to compute Phase-Amplitude Coupling (PAC) features among electrodes to study functional connectivity. Moreover, it has been applied jointly with Principal Component Analysis to explore patterns related to dyslexia in 7-year-old children. The developed methodology reveals the temporal evolution of PAC-based connectivity. Directions of greatest variance computed by PCA are called eigenPACs here, since they resemble the classical *eigenfaces* representation. The projection of PAC data onto the eigenPACs provides a set of features that has demonstrated discriminative capability, specifically in the Beta-Gamma bands.

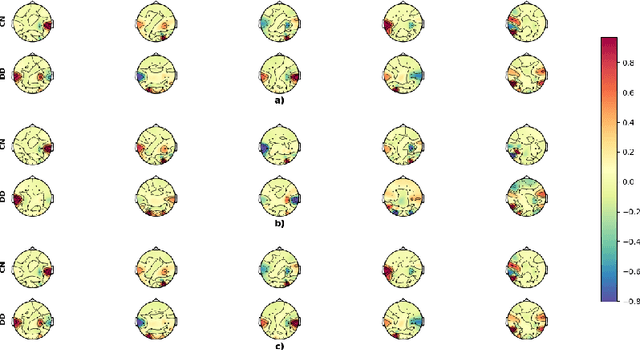
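
As an illustration of computing PAC among electrodes rather than at a single electrode, the sketch below estimates a channel-by-channel coupling matrix. It rests on assumptions not stated in the abstract: coupling is measured with the mean vector length, band-limited analytic signals are obtained with a brick-wall FFT filter, and the band edges passed in are chosen by the caller. The names `analytic_band` and `inter_electrode_pac` are hypothetical.

```python
import numpy as np


def analytic_band(x, fs, lo, hi):
    """Band-limited analytic signal via FFT (brick-wall filter, lo > 0).

    Keeping only the positive frequencies inside [lo, hi] and doubling them
    yields the analytic signal of the band-passed input.
    """
    n = x.shape[-1]
    freqs = np.fft.fftfreq(n, d=1.0 / fs)
    spectrum = np.fft.fft(x, axis=-1)
    mask = (freqs >= lo) & (freqs <= hi)
    return np.fft.ifft(2.0 * spectrum * mask, axis=-1)


def inter_electrode_pac(eeg, fs, phase_band, amp_band):
    """Mean-vector-length PAC between every pair of electrodes.

    eeg: array of shape (channels, samples).
    Entry [i, j] couples the low-band phase at electrode i with the
    high-band amplitude at electrode j; the diagonal is the usual local PAC.
    """
    phase = np.angle(analytic_band(eeg, fs, *phase_band))
    amplitude = np.abs(analytic_band(eeg, fs, *amp_band))
    mean_vector = np.exp(1j * phase) @ amplitude.T / eeg.shape[-1]
    return np.abs(mean_vector)
```

Computing this matrix over successive time windows and flattening each result would give the kind of data on which PCA could be run to obtain eigenPAC-like directions.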

Modelling Brain Connectivity Networks by Graph Embedding for Dyslexia Diagnosis

Apr 12, 2021

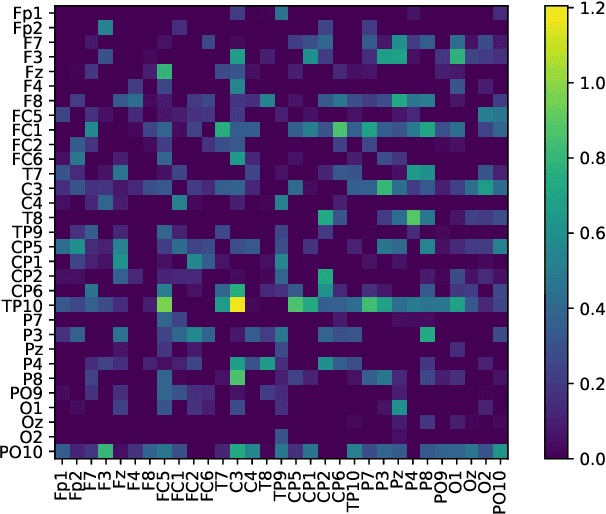

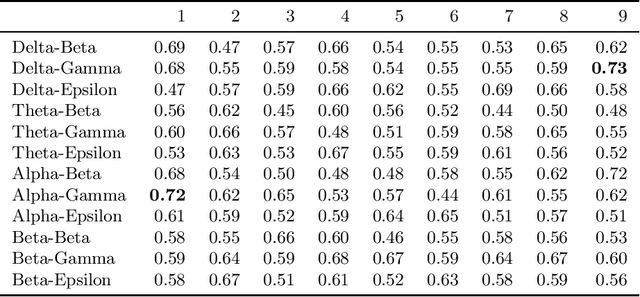

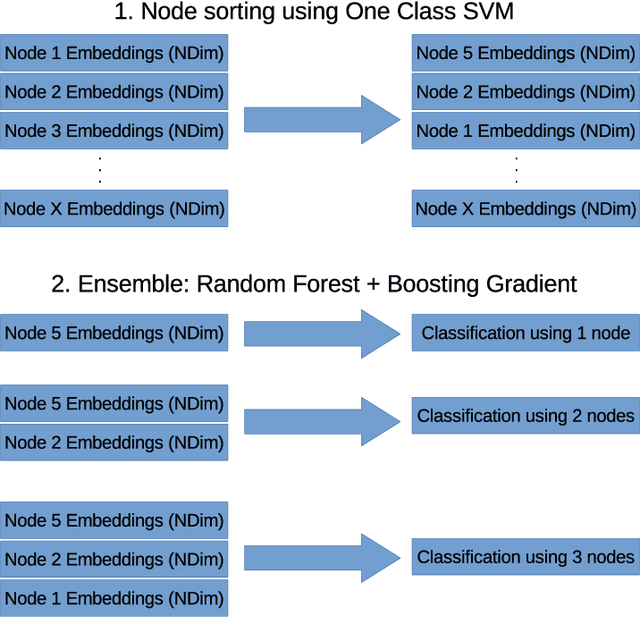

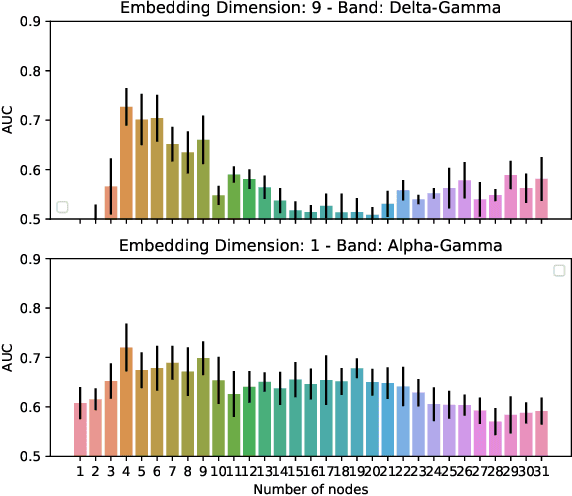

Abstract:Several methods have been developed to extract information from electroencephalograms (EEG). One of them is Phase-Amplitude Coupling (PAC), a type of Cross-Frequency Coupling (CFC) method that consists in measuring the synchronization of phase and amplitude for the different EEG bands and electrodes. This provides information regarding brain areas that are synchronously activated and, eventually, a marker of functional connectivity between these areas. In this work, intra- and inter-electrode PAC is computed to obtain the relationships among the different EEG electrodes. The connectivity information is then treated as a graph in which the nodes are the electrodes and the edges are the PAC values between them. These structures are embedded to create a feature vector that can be further used to classify multichannel EEG samples. The proposed method has been applied to classify EEG samples acquired from seven-year-old children using specific auditory stimuli in a task designed for dyslexia diagnosis. The proposed method provides AUC values up to 0.73 and allows selecting the most discriminant electrodes and EEG bands.

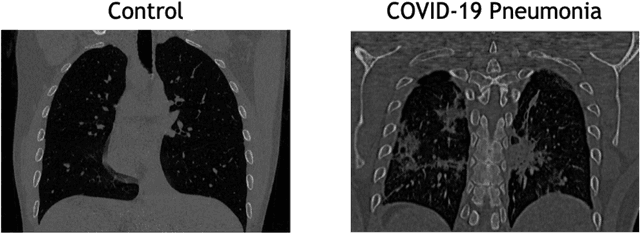

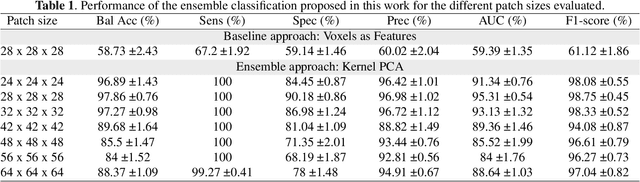

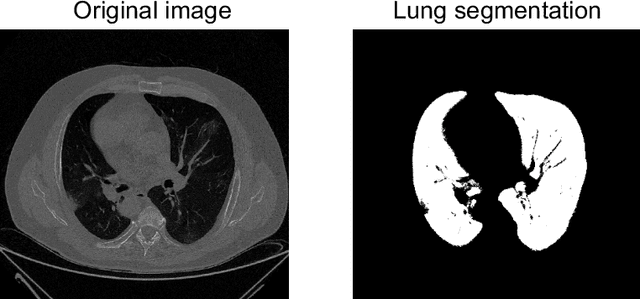

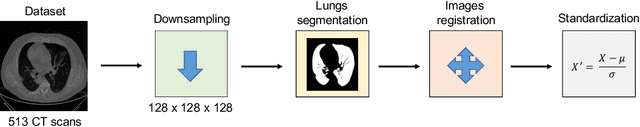

Probabilistic combination of eigenlungs-based classifiers for COVID-19 diagnosis in chest CT images

Mar 04, 2021

Abstract:The outbreak of the COVID-19 (Coronavirus disease 2019) pandemic has changed the world. According to the World Health Organization (WHO), there have been more than 100 million confirmed cases of COVID-19, including more than 2.4 million deaths. Early detection of the disease is extremely important, and the use of medical imaging such as chest X-ray (CXR) and chest Computed Tomography (CCT) has proved to be an excellent solution. However, this process requires clinicians to carry out a manual and time-consuming task, which is not ideal when trying to speed up the diagnosis. In this work, we propose an ensemble classifier based on probabilistic Support Vector Machines (SVM) in order to identify pneumonia patterns while providing information about the reliability of the classification. Specifically, each CCT scan is divided into cubic patches, and the features contained in each of them are extracted by applying kernel PCA. The use of base classifiers within an ensemble allows our system to identify the pneumonia patterns regardless of their size or location. The decisions for the individual patches are then combined into a global one according to the reliability of each individual classification: the lower the uncertainty, the higher the contribution. Performance is evaluated in a real scenario, yielding an accuracy of 97.86%. The high performance obtained and the simplicity of the system (the use of deep learning on CCT images would result in a huge computational cost) demonstrate the applicability of our proposal in a real-world environment.
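
The rule "the lower the uncertainty, the higher the contribution" can be illustrated with a small sketch. The weighting used here, one minus the binary entropy of each patch probability, is an assumption chosen for illustration; the abstract does not specify the actual reliability measure, and `combine_patch_probabilities` is a hypothetical name.

```python
import numpy as np


def combine_patch_probabilities(p_positive, eps=1e-12):
    """Fuse per-patch probabilities into one scan-level probability.

    p_positive: probability of the positive class from each patch classifier.
    Assumed weighting: 1 - binary entropy (in bits), so a patch with p = 0.5
    contributes nothing and a confident patch contributes almost fully.
    """
    p = np.clip(np.asarray(p_positive, dtype=float), eps, 1.0 - eps)
    entropy = -(p * np.log2(p) + (1.0 - p) * np.log2(1.0 - p))
    weights = 1.0 - entropy
    total = weights.sum()
    if total < eps:
        # Every patch is maximally uncertain: no evidence either way.
        return 0.5
    return float(np.sum(weights * p) / total)
```

With this weighting, two uninformative patches and one confident patch are dominated by the confident one, whereas a plain average would be pulled towards 0.5.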

Dyslexia detection from EEG signals using SSA component correlation and Convolutional Neural Networks

Oct 26, 2020

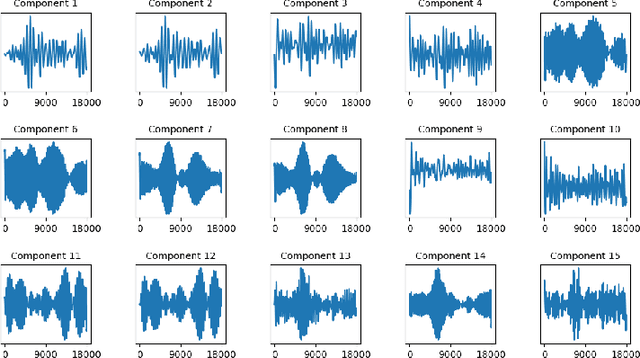

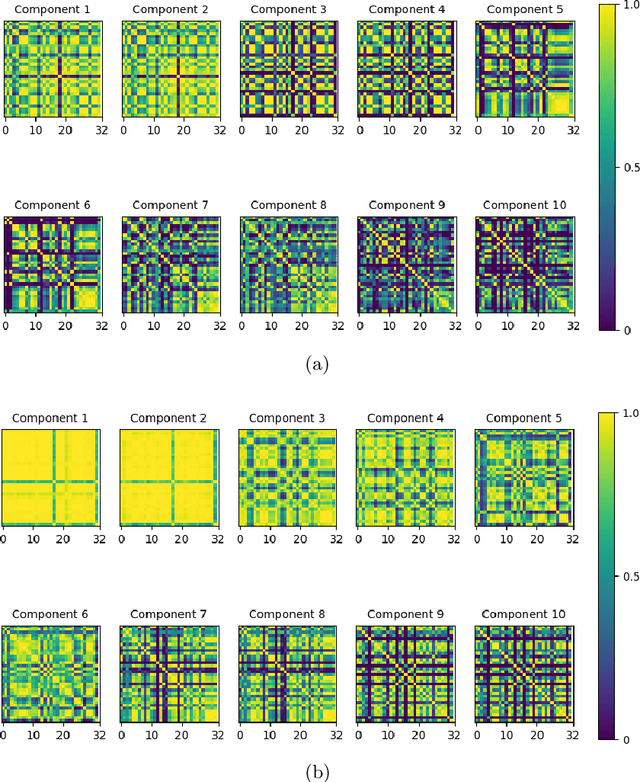

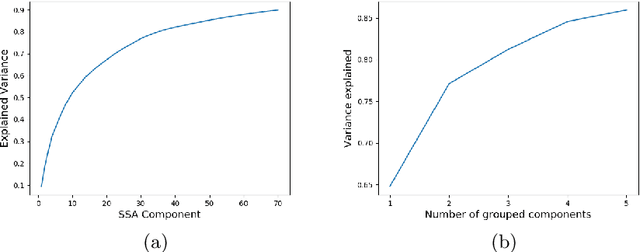

Abstract:Objective dyslexia diagnosis is not a straightforward task, since it is traditionally performed by means of the interpretation of different behavioural tests. Moreover, these tests are only applicable to readers. Early diagnosis therefore requires the use of specific tasks that are not only related to reading. Thus, the use of electroencephalography (EEG) constitutes an alternative for an objective and early diagnosis that can be used with pre-readers. In this context, the extraction of relevant features from EEG signals is crucial for classification. However, the identification of the most relevant features is not straightforward, and predefined statistics in the time or frequency domain are not always discriminant enough. On the other hand, classical processing of EEG signals based on extracting frequency descriptors of EEG bands usually makes assumptions about the raw signals that could cause information loss. In this work we propose an alternative for analysis in the frequency domain based on Singular Spectrum Analysis (SSA) to split the raw signal into components representing different oscillatory modes. Moreover, the correlation matrices obtained for each component among EEG channels are classified using a Convolutional Neural Network.
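
The SSA decomposition and per-component channel correlations mentioned in the abstract above can be sketched as follows. This is basic SSA (trajectory matrix, SVD, diagonal averaging) under simplifying assumptions: no grouping of components is performed, and component `i` of one channel is paired with component `i` of every other channel even though they need not represent the same oscillatory mode. The window length and the function names are hypothetical choices.

```python
import numpy as np


def ssa_components(x, window, n_components):
    """Split a 1-D signal into additive SSA components."""
    x = np.asarray(x, dtype=float)
    n = x.size
    k = n - window + 1
    # Trajectory (Hankel) matrix: column j holds x[j : j + window].
    trajectory = np.column_stack([x[j:j + window] for j in range(k)])
    u, s, vt = np.linalg.svd(trajectory, full_matrices=False)
    components = np.empty((n_components, n))
    for i in range(n_components):
        elementary = s[i] * np.outer(u[:, i], vt[i])
        # Diagonal averaging: average each anti-diagonal back into a sample.
        flipped = elementary[::-1]
        components[i] = [flipped.diagonal(d).mean() for d in range(-window + 1, k)]
    return components


def component_correlations(eeg, window, n_components):
    """One channel-by-channel correlation matrix per SSA component.

    eeg: array of shape (channels, samples).
    Returns an array of shape (n_components, channels, channels).
    """
    per_channel = np.stack([ssa_components(ch, window, n_components) for ch in eeg])
    return np.stack([np.corrcoef(per_channel[:, i, :]) for i in range(n_components)])
```

The stack returned by `component_correlations` has an image-like layout, which is the sort of input a convolutional classifier could consume.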
