Lukáš Gajdošech

Shaken, Not Stirred: A Novel Dataset for Visual Understanding of Glasses in Human-Robot Bartending Tasks

Mar 06, 2025

Abstract: Datasets for object detection often do not account for enough variety of glasses, due to their transparent and reflective properties. Specifically, open-vocabulary object detectors, widely used in embodied robotic agents, fail to distinguish subclasses of glasses. This scientific gap poses an issue for robotic applications that suffer from accumulating errors between detection, planning, and action execution. The paper introduces a novel method for the acquisition of real-world data from RGB-D sensors that minimizes human effort. We propose an auto-labeling pipeline that generates labels for all the acquired frames based on the depth measurements. We provide a novel real-world glass object dataset that was collected on the Neuro-Inspired COLlaborator (NICOL), a humanoid robot platform. The dataset consists of 7850 images recorded from five different cameras. We show that our trained baseline model outperforms state-of-the-art open-vocabulary approaches. In addition, we deploy our baseline model in an embodied agent approach to the NICOL platform, on which it achieves a success rate of 81% in a human-robot bartending scenario.

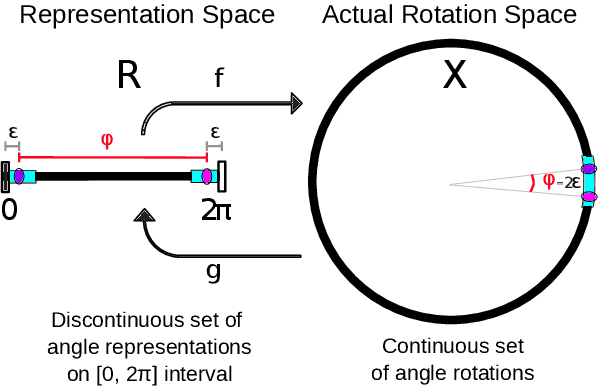

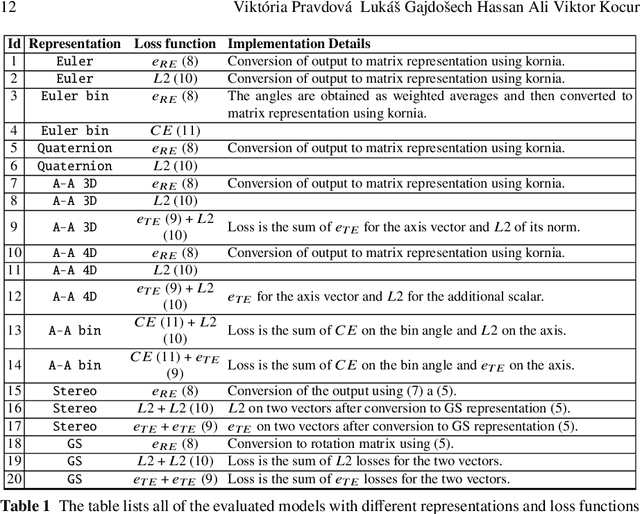

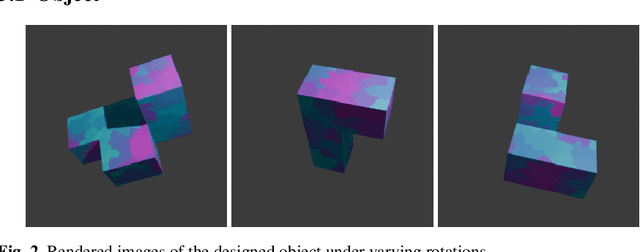

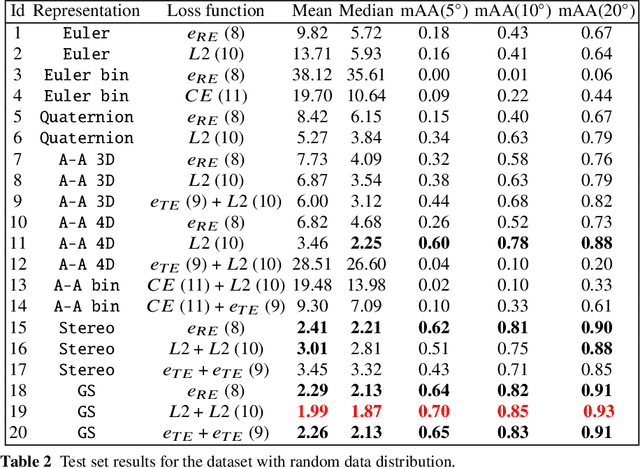

On Representation of 3D Rotation in the Context of Deep Learning

Oct 15, 2024

Abstract: This paper investigates various methods of representing 3D rotations and their impact on the learning process of deep neural networks. We evaluated the performance of ResNet18 networks for 3D rotation estimation using several rotation representations and loss functions on both synthetic and real data. The real datasets contained 3D scans of industrial bins, while the synthetic datasets included views of a simple asymmetric object rendered under different rotations. On synthetic data, we also assessed the effects of different rotation distributions within the training and test sets, as well as the impact of the object's texture. In line with previous research, we found that networks using the continuous 5D and 6D representations performed better than the discontinuous ones.
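For readers unfamiliar with continuous rotation representations, the following is a minimal sketch of how a 6D vector can be mapped to a rotation matrix. It assumes the commonly used construction in which the six numbers are read as two 3D vectors that are orthonormalized with Gram-Schmidt; the abstract does not state which construction the paper uses, and the function name `rotation_from_6d` is hypothetical.

```python
import math


def _normalize(v):
    """Scale a 3D vector to unit length."""
    n = math.sqrt(sum(x * x for x in v))
    if n == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / n for x in v]


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _cross(a, b):
    return [
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    ]


def rotation_from_6d(r6):
    """Map a 6D vector to a 3x3 rotation matrix (assumed Gram-Schmidt construction).

    The first three numbers give the first column up to scale; the last three
    give the second column after removing its component along the first.
    The input vectors must be non-zero and not parallel.
    """
    a1, a2 = list(r6[:3]), list(r6[3:])
    b1 = _normalize(a1)
    d = _dot(b1, a2)
    b2 = _normalize([y - d * x for x, y in zip(b1, a2)])
    b3 = _cross(b1, b2)  # completes a right-handed orthonormal basis
    # b1, b2, b3 are the columns of the returned matrix.
    return [[b1[i], b2[i], b3[i]] for i in range(3)]
```

Because every non-degenerate 6D input maps smoothly to a valid rotation, a network can regress the six numbers directly without hitting the jumps that occur in, for example, Euler angles.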

Enhancement of 3D Camera Synthetic Training Data with Noise Models

Feb 26, 2024

Abstract: The goal of this paper is to assess the impact of noise in 3D camera-captured data by modeling the noise of the imaging process and applying it to synthetic training data. We compiled a dataset of specifically constructed scenes to obtain a noise model. We model lateral noise, affecting the position of captured points in the image plane, and axial noise, affecting the position along the axis perpendicular to the image plane. The estimated models can be used to emulate noise in synthetic training data. The benefit of adding artificial noise is evaluated in an experiment with rendered data for object segmentation. We train a series of neural networks with varying levels of noise in the data and measure their ability to generalize to real data. The results show that using too little or too much noise can hurt the networks' performance, indicating that obtaining a model of noise from real scanners is beneficial for synthetic data generation.

* Published in 2024 Proceedings of the 27th Computer Vision Winter Workshop (CVWW). Accepted: 19.1.2024. Published: 16.2.2024. This work was funded by the Horizon-Widera-2021 European Twinning project TERAIS G.A. n. 101079338. Code: https://doi.org/10.5281/zenodo.10581562 Data: https://doi.org/10.5281/zenodo.10581278
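The distinction between lateral and axial noise in the abstract above can be illustrated with a small sketch. It assumes both noise types are zero-mean Gaussians with fixed standard deviations, which is a simplification chosen for illustration and not the model estimated in the paper; the function `add_depth_noise` and its parameters are hypothetical.

```python
import random


def add_depth_noise(depth, sigma_lateral_px, sigma_axial, seed=0):
    """Apply illustrative lateral and axial noise to a depth map.

    depth            -- 2D list of depth values (rows of equal length)
    sigma_lateral_px -- assumed std. dev. of the in-plane pixel shift
    sigma_axial      -- assumed std. dev. of the noise along the viewing axis
    """
    rng = random.Random(seed)
    h, w = len(depth), len(depth[0])
    noisy = []
    for y in range(h):
        row = []
        for x in range(w):
            # Lateral noise: read the depth from a randomly shifted pixel,
            # clamped to the image borders.
            sx = min(max(int(round(x + rng.gauss(0.0, sigma_lateral_px))), 0), w - 1)
            sy = min(max(int(round(y + rng.gauss(0.0, sigma_lateral_px))), 0), h - 1)
            # Axial noise: perturb the depth value itself.
            row.append(depth[sy][sx] + rng.gauss(0.0, sigma_axial))
        noisy.append(row)
    return noisy
```

Lateral noise mostly blurs depth edges, since neighbouring pixels on flat surfaces hold similar values, while axial noise roughens every surface. Sweeping the two standard deviations is one way to reproduce the "too little or too much noise" comparison in spirit.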

Supersampling of Data from Structured-light Scanner with Deep Learning

Nov 13, 2023

Abstract: This paper focuses on increasing the resolution of depth maps obtained from 3D cameras using structured light technology. Two deep learning models, FDSR and DKN, are modified to work with high-resolution data, and data pre-processing techniques are implemented for stable training. The models are trained on our custom dataset of 1200 3D scans. The resulting high-resolution depth maps are evaluated using qualitative and quantitative metrics. Depth map upsampling offers two benefits. It can reduce the processing time of a pipeline by first downsampling a high-resolution depth map, performing various processing steps at the lower resolution, and upsampling the resulting depth map. It can also increase the resolution of a point cloud captured at lower resolution by a cheaper device. The experiments demonstrate that the FDSR model excels in terms of faster processing time, making it a suitable choice for applications where speed is crucial. On the other hand, the DKN model provides results with higher precision, making it more suitable for applications that prioritize accuracy.
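The downsample, process, upsample pipeline mentioned in the abstract can be sketched as follows. This is only an illustration of the data flow: nearest-neighbour upsampling stands in for the learned FDSR and DKN models, and the processing step `clip_far_points` is a hypothetical placeholder. All function names are assumptions.

```python
def downsample(depth, factor):
    """Keep every `factor`-th pixel in both directions."""
    return [row[::factor] for row in depth[::factor]]


def upsample_nearest(depth, factor):
    """Nearest-neighbour upsampling; a stand-in for a learned model."""
    out = []
    for row in depth:
        wide = [v for v in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


def clip_far_points(depth, max_depth):
    """Hypothetical processing step: zero out points beyond max_depth."""
    return [[v if v <= max_depth else 0.0 for v in row] for row in depth]


def process_at_low_resolution(depth, factor, max_depth):
    """Run the processing step on a reduced map, then restore the size.

    The output matches the input size only when both image dimensions
    are divisible by `factor`.
    """
    low = downsample(depth, factor)
    low = clip_far_points(low, max_depth)
    return upsample_nearest(low, factor)
```

The processing step touches roughly `1 / factor**2` of the original pixels, which is where the time saving comes from; the quality of the final map then depends on the upsampling model.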

Processing and Segmentation of Human Teeth from 2D Images using Weakly Supervised Learning

Nov 13, 2023

Abstract: Teeth segmentation is an essential task in dental image analysis for accurate diagnosis and treatment planning. While supervised deep learning methods can be utilized for teeth segmentation, they often require extensive manual annotation of segmentation masks, which is time-consuming and costly. In this research, we propose a weakly supervised approach for teeth segmentation that reduces the need for manual annotation. Our method utilizes the output heatmaps and intermediate feature maps from a keypoint detection network to guide the segmentation process. We introduce the TriDental dataset, consisting of 3000 oral cavity images annotated with teeth keypoints, to train a teeth keypoint detection network. We combine feature maps from different layers of the keypoint detection network, enabling accurate teeth segmentation without explicit segmentation annotations. The detected keypoints are also used for further refinement of the segmentation masks. Experimental results on the TriDental dataset demonstrate the superiority of our approach in terms of accuracy and robustness compared to state-of-the-art segmentation methods. Our method offers a cost-effective and efficient solution for teeth segmentation in real-world dental applications, eliminating the need for extensive manual annotation efforts.

Novel Synthetic Data Tool for Data-Driven Cardboard Box Localization

May 09, 2023

Abstract: Application of neural networks in industrial settings, such as automated factories with bin-picking solutions, requires costly production of large labeled datasets. This paper presents an automatic data generation tool with a procedural model of a cardboard box. We briefly demonstrate the capabilities of the system and its various parameters, and empirically demonstrate the usefulness of the generated synthetic data by training a simple neural network. We make sample synthetic data generated by the tool publicly available.

Towards Deep Learning-based 6D Bin Pose Estimation in 3D Scans

Dec 17, 2021

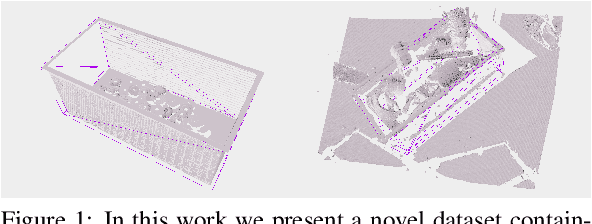

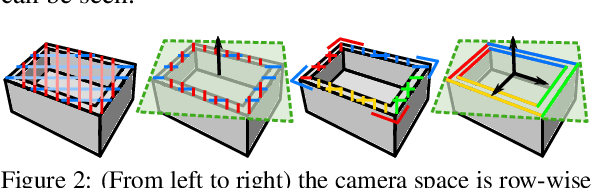

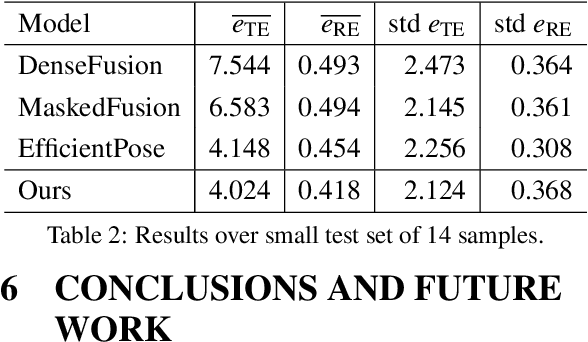

Abstract: An automated robotic system needs to be as robust as possible and fail-safe in general while having relatively high precision and repeatability. Although deep learning-based methods are becoming the research standard for 3D scan and image processing tasks, the industry standard for processing this data is still analytically-based. Our paper claims that analytical methods are less robust and harder to test, update, and maintain. This paper focuses on the specific task of 6D pose estimation of a bin in 3D scans. We therefore present a high-quality dataset composed of synthetic data and real scans captured by a structured-light scanner with precise annotations. Additionally, we propose two different methods for 6D bin pose estimation: an analytical method as the industrial standard and a baseline data-driven method. Both approaches are cross-evaluated, and our experiments show that augmenting the training on real scans with synthetic data improves our proposed data-driven neural model. This position paper is preliminary, as the proposed methods are trained and evaluated on a relatively small initial dataset, which we plan to extend in the future.
