Luca Passamonti

Multimodal and multicontrast image fusion via deep generative models

Mar 28, 2023

Abstract:Recently, it has become progressively more evident that classic diagnostic labels are unable to reliably describe the complexity and variability of several clinical phenotypes. This is particularly true for a broad range of neuropsychiatric illnesses (e.g., depression, anxiety disorders, behavioral phenotypes). Patient heterogeneity can be better described by grouping individuals into novel categories based on empirically derived sections of intersecting continua that span across and beyond traditional categorical borders. In this context, neuroimaging data carry a wealth of spatiotemporally resolved information about each patient's brain. However, they are usually heavily collapsed a priori through procedures which are not learned as part of model training, and consequently not optimized for the downstream prediction task. This is because every individual participant usually comes with multiple whole-brain 3D imaging modalities often accompanied by a deep genotypic and phenotypic characterization, hence posing formidable computational challenges. In this paper we design a deep learning architecture based on generative models rooted in a modular approach and separable convolutional blocks to a) fuse multiple 3D neuroimaging modalities on a voxel-wise level, b) convert them into informative latent embeddings through heavy dimensionality reduction, c) maintain good generalizability and minimal information loss. As proof of concept, we test our architecture on the well characterized Human Connectome Project database demonstrating that our latent embeddings can be clustered into easily separable subject strata which, in turn, map to different phenotypical information which was not included in the embedding creation process. This may be of aid in predicting disease evolution as well as drug response, hence supporting mechanistic disease understanding and empowering clinical trials.

DBGSL: Dynamic Brain Graph Structure Learning

Sep 27, 2022

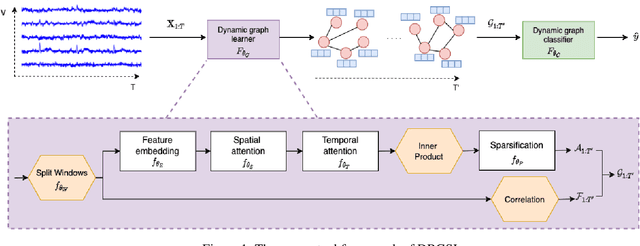

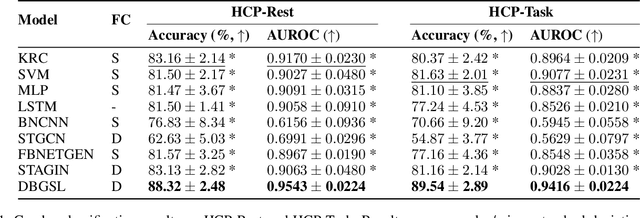

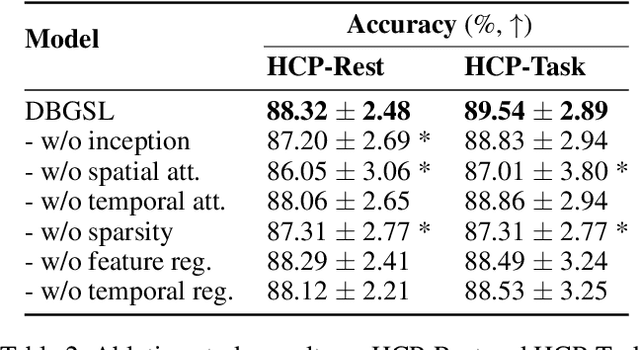

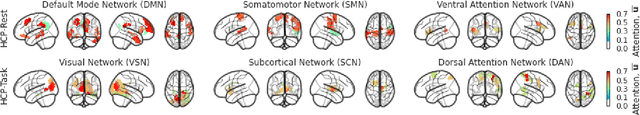

Abstract:Functional connectivity (FC) between regions of the brain is commonly estimated through statistical dependency measures applied to functional magnetic resonance imaging (fMRI) data. The resulting functional connectivity matrix (FCM) is often taken to represent the adjacency matrix of a brain graph. Recently, graph neural networks (GNNs) have been successfully applied to FCMs to learn brain graph representations. A common limitation of existing GNN approaches, however, is that they require the graph adjacency matrix to be known prior to model training. As such, it is implicitly assumed the ground-truth dependency structure of the data is known. Unfortunately, for fMRI this is not the case as the choice of which statistical measure best represents the dependency structure of the data is non-trivial. Also, most GNN applications to fMRI assume FC is static over time, which is at odds with neuroscientific evidence that functional brain networks are time-varying and dynamic. These compounded issues can have a detrimental effect on the capacity of GNNs to learn representations of brain graphs. As a solution, we propose Dynamic Brain Graph Structure Learning (DBGSL), a supervised method for learning the optimal time-varying dependency structure of fMRI data. Specifically, DBGSL learns a dynamic graph from fMRI timeseries via spatial-temporal attention applied to brain region embeddings. The resulting graph is then fed to a spatial-temporal GNN to learn a graph representation for classification. Experiments on large resting-state as well as task fMRI datasets for the task of gender classification demonstrate that DBGSL achieves state-of-the-art performance. Moreover, analysis of the learnt dynamic graphs highlights prediction-related brain regions which align with findings from existing neuroscience literature.
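To make the starting point of this abstract concrete, the sketch below builds the kind of functional connectivity matrix the abstract describes as the conventional input to a GNN. It is a minimal illustration and not the DBGSL method: Pearson correlation is assumed as the statistical dependency measure, a fixed sliding window is assumed as a simple stand-in for time-varying connectivity, and the function names (`pearson`, `fc_matrix`, `sliding_window_fc`) and the toy data are hypothetical.

```python
import math
from typing import List

Series = List[float]


def pearson(x: Series, y: Series) -> float:
    """Pearson correlation of two equal-length series (0.0 if either is constant)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    norm_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    norm_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    if norm_x == 0.0 or norm_y == 0.0:
        return 0.0
    return cov / (norm_x * norm_y)


def fc_matrix(timeseries: List[Series]) -> List[List[float]]:
    """Static FC matrix: one row per brain region, entries are pairwise correlations."""
    n_regions = len(timeseries)
    return [
        [1.0 if i == j else pearson(timeseries[i], timeseries[j])
         for j in range(n_regions)]
        for i in range(n_regions)
    ]


def sliding_window_fc(timeseries: List[Series], window: int,
                      stride: int) -> List[List[List[float]]]:
    """One FC matrix per window: an assumed, hand-crafted proxy for dynamic FC."""
    n_timepoints = len(timeseries[0])
    return [
        fc_matrix([region[start:start + window] for region in timeseries])
        for start in range(0, n_timepoints - window + 1, stride)
    ]


# Toy data (hypothetical): 3 regions, 6 time points each.
toy = [
    [1.0, 2.0, 3.0, 4.0, 5.0, 6.0],
    [2.0, 4.0, 6.0, 8.0, 10.0, 12.0],
    [6.0, 5.0, 4.0, 3.0, 2.0, 1.0],
]
static_fc = fc_matrix(toy)
dynamic_fc = sliding_window_fc(toy, window=4, stride=2)
```

Both the dependency measure and the window length are fixed by hand before any model is trained, which is the limitation the abstract points to. DBGSL, as described, instead learns the time-varying graph from the fMRI timeseries as part of supervised training.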

Towards a predictive spatio-temporal representation of brain data

Feb 29, 2020

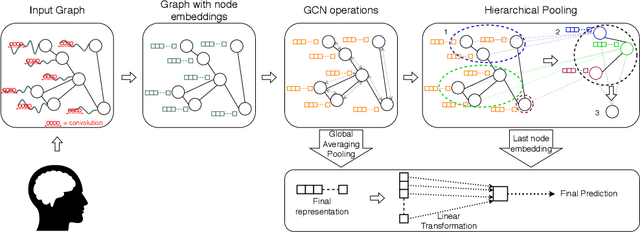

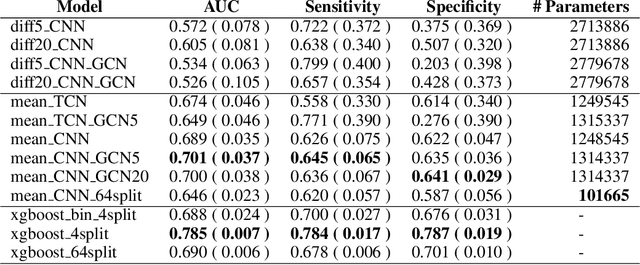

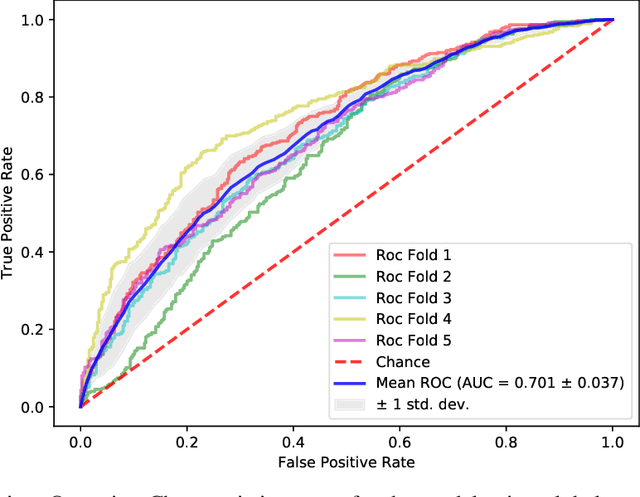

Abstract:The characterisation of the brain as a "connectome", in which the connections are represented by correlational values across timeseries and as summary measures derived from graph theory analyses, has been very popular in recent years. However, although this representation has advanced our understanding of brain function, it may represent an oversimplified model. This is because typical fMRI datasets consist of complex and highly heterogeneous timeseries that vary across space (i.e., location of brain regions). We compare various modelling techniques from deep learning and geometric deep learning to pave the way for future research in effectively leveraging the rich spatial and temporal domains of typical fMRI datasets, as well as of other similar datasets. As a proof-of-concept, we compare our approaches on the homogeneous and publicly available Human Connectome Project (HCP) dataset on a supervised binary classification task. We hope that our methodological advances relative to previous "connectomic" measures can ultimately be clinically and computationally relevant by leading to a more nuanced understanding of brain dynamics in health and disease. Such an understanding of the brain could fundamentally reduce the constant need for specialised clinical expertise to accurately understand brain variability.
