Lovis Heindrich

Do Sparse Autoencoders Generalize? A Case Study of Answerability

Feb 27, 2025

Abstract: Sparse autoencoders (SAEs) have emerged as a promising approach in language model interpretability, offering unsupervised extraction of sparse features. For interpretability methods to succeed, they must identify abstract features across domains, and these features can often manifest differently in each context. We examine this through "answerability", a model's ability to recognize answerable questions. We extensively evaluate SAE feature generalization across diverse answerability datasets for Gemma 2 SAEs. Our analysis reveals that residual stream probes outperform SAE features within domains, but generalization performance differs sharply. SAE features demonstrate inconsistent transfer ability, and residual stream probes similarly show high variance out of distribution. Overall, this demonstrates the need for quantitative methods to predict feature generalization in SAE-based interpretability.

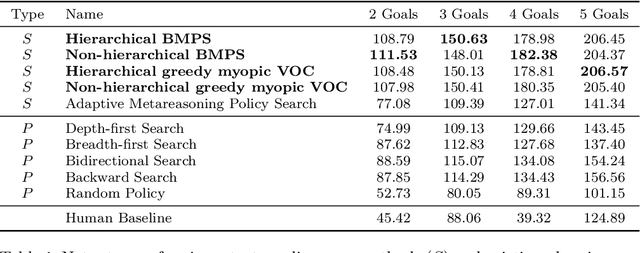

Leveraging automatic strategy discovery to teach people how to select better projects

Jun 06, 2024

Abstract: The decisions of individuals and organizations are often suboptimal because normative decision strategies are too demanding in the real world. Recent work suggests that some errors can be prevented by leveraging artificial intelligence to discover and teach prescriptive decision strategies that take people's constraints into account. So far, this line of research has been limited to simplified decision problems. This article is the first to extend this approach to a real-world decision problem, namely project selection. We develop a computational method (MGPS) that automatically discovers project selection strategies that are optimized for real people and develop an intelligent tutor that teaches the discovered strategies. We evaluated MGPS on a computational benchmark and tested the intelligent tutor in a training experiment with two control conditions. MGPS outperformed a state-of-the-art method and was more computationally efficient. Moreover, the intelligent tutor significantly improved people's decision strategies. Our results indicate that our method can improve human decision-making in naturalistic settings similar to real-world project selection, a first step towards applying strategy discovery to the real world.

Training Dynamics of Contextual N-Grams in Language Models

Nov 01, 2023

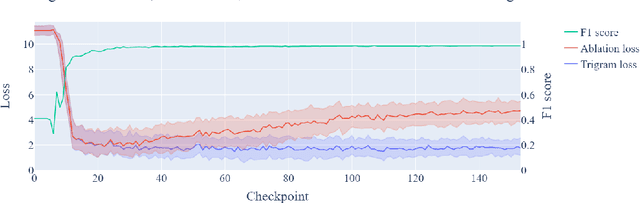

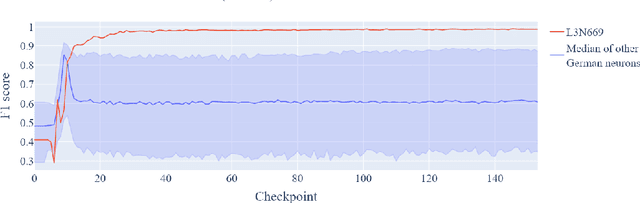

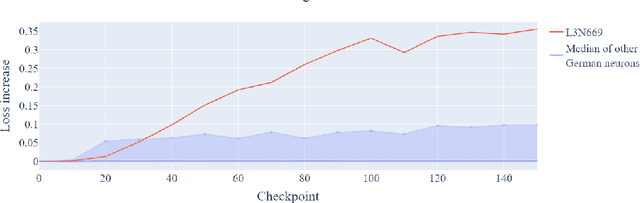

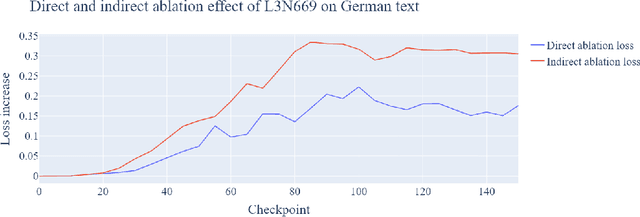

Abstract: Prior work has shown the existence of contextual neurons in language models, including a neuron that activates on German text. We show that this neuron exists within a broader contextual n-gram circuit: we find late-layer neurons which recognize and continue n-grams common in German text, but which only activate if the German neuron is active. We investigate the formation of this circuit throughout training and find that it is an example of what we call a second-order circuit. In particular, both the constituent n-gram circuits and the German detection circuit which culminates in the German neuron form with independent functions early in training: the German detection circuit partially through modeling German unigram statistics, and the n-grams by boosting appropriate completions. Only after both circuits have already formed do they fit together into a second-order circuit. Contrary to the hypotheses presented in prior work, we find that the contextual n-gram circuit forms gradually rather than in a sudden phase transition. We further present a range of anomalous observations such as a simultaneous phase transition in many tasks coinciding with the learning rate warm-up, and evidence that many context neurons form simultaneously early in training but are later unlearned.

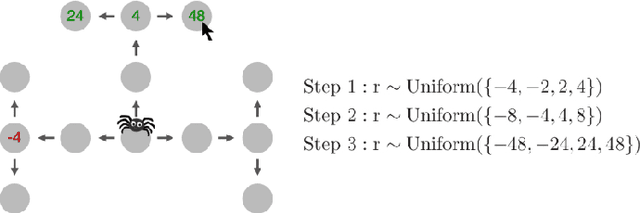

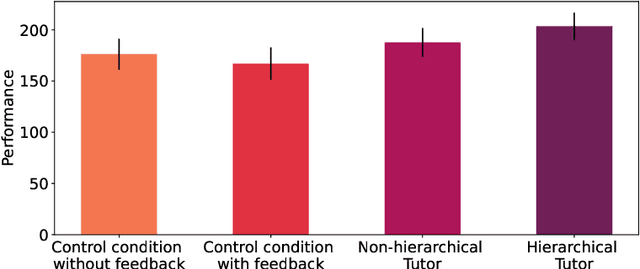

Leveraging AI to improve human planning in large partially observable environments

Feb 06, 2023

Abstract: AI can not only outperform people in many planning tasks, but also teach them how to plan better. All prior work was conducted in fully observable environments, but the real world is only partially observable. To bridge this gap, we developed the first metareasoning algorithm for discovering resource-rational strategies for human planning in partially observable environments. Moreover, we developed an intelligent tutor that teaches the automatically discovered strategy by giving people feedback on how they plan in increasingly difficult problems. We showed that our strategy discovery method is superior to the state-of-the-art and tested our intelligent tutor in a preregistered training experiment with 330 participants. The experiment showed that people's intuitive strategies for planning in partially observable environments are highly suboptimal, but can be substantially improved by training with our intelligent tutor. This suggests our human-centred tutoring approach can successfully boost human planning in complex, partially observable sequential decision problems.

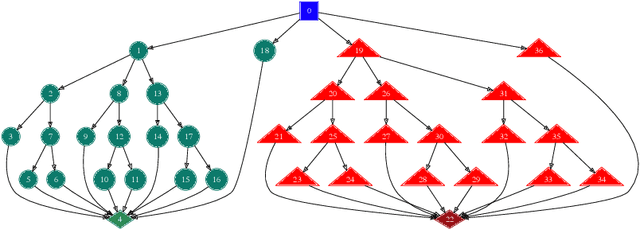

Improving Human Decision-Making by Discovering Efficient Strategies for Hierarchical Planning

Jan 31, 2021

Abstract: To make good decisions in the real world, people need efficient planning strategies because their computational resources are limited. Knowing which planning strategies would work best for people in different situations would be very useful for understanding and improving human decision-making. But our ability to compute those strategies used to be limited to very small and very simple planning tasks. To overcome this computational bottleneck, we introduce a cognitively-inspired reinforcement learning method that exploits the hierarchical structure of human behavior. The basic idea is to decompose sequential decision problems into two sub-problems: setting a goal and planning how to achieve it. This hierarchical decomposition enables us to discover optimal strategies for human planning in larger and more complex tasks than was previously possible. The discovered strategies outperform existing planning algorithms and achieve a super-human level of computational efficiency. We demonstrate that teaching people to use those strategies significantly improves their performance in sequential decision-making tasks that require planning up to eight steps ahead. By contrast, none of the previous approaches was able to improve human performance on these problems. These findings suggest that our cognitively-informed approach makes it possible to leverage reinforcement learning to improve human decision-making in complex sequential decision problems. Future work can leverage our method to develop decision support systems that improve human decision-making in the real world.
