Liqun Huang

GR-Dexter Technical Report

Dec 30, 2025

Abstract:Vision-language-action (VLA) models have enabled language-conditioned, long-horizon robot manipulation, but most existing systems are limited to grippers. Scaling VLA policies to bimanual robots with high degree-of-freedom (DoF) dexterous hands remains challenging due to the expanded action space, frequent hand-object occlusions, and the cost of collecting real-robot data. We present GR-Dexter, a holistic hardware-model-data framework for VLA-based generalist manipulation on a bimanual dexterous-hand robot. Our approach combines the design of a compact 21-DoF robotic hand, an intuitive bimanual teleoperation system for real-robot data collection, and a training recipe that leverages teleoperated robot trajectories together with large-scale vision-language and carefully curated cross-embodiment datasets. Across real-world evaluations spanning long-horizon everyday manipulation and generalizable pick-and-place, GR-Dexter achieves strong in-domain performance and improved robustness to unseen objects and unseen instructions. We hope GR-Dexter serves as a practical step toward generalist dexterous-hand robotic manipulation.

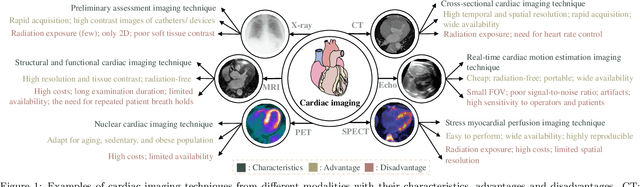

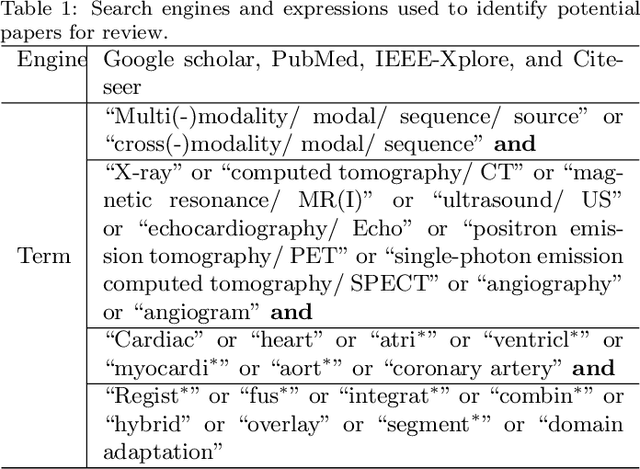

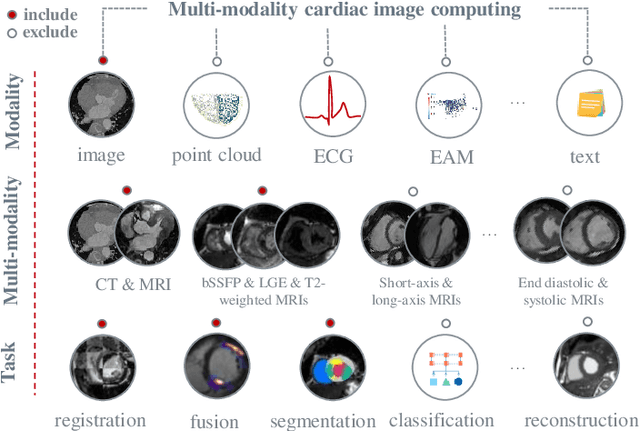

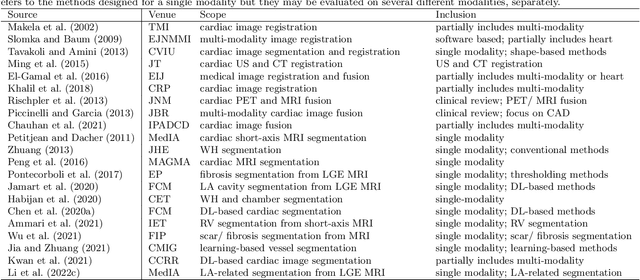

Multi-Modality Cardiac Image Computing: A Survey

Aug 26, 2022

Abstract:Multi-modality cardiac imaging plays a key role in the management of patients with cardiovascular diseases. It allows a combination of complementary anatomical, morphological and functional information, increases diagnostic accuracy, and improves the efficacy of cardiovascular interventions and clinical outcomes. Fully-automated processing and quantitative analysis of multi-modality cardiac images could have a direct impact on clinical research and evidence-based patient management. However, these require overcoming significant challenges, including inter-modality misalignment and finding optimal methods to integrate information from different modalities. This paper aims to provide a comprehensive review of multi-modality imaging in cardiology, the computing methods, the validation strategies, the related clinical workflows and future perspectives. For the computing methodologies, we focus on three tasks, i.e., registration, fusion and segmentation, which generally involve multi-modality imaging data, either combining information from different modalities or transferring information across modalities. The review highlights that multi-modality cardiac imaging data has the potential for wide applicability in the clinic, such as trans-aortic valve implantation guidance, myocardial viability assessment, and catheter ablation therapy and its patient selection. Nevertheless, many challenges remain unsolved, such as missing modalities, the combination of imaging and non-imaging data, and uniform analysis and representation of different modalities. There is also work to do in defining how the well-developed techniques fit into clinical workflows and how much additional and relevant information they introduce. These problems are likely to remain an active field of research, with the questions to be answered in the future.

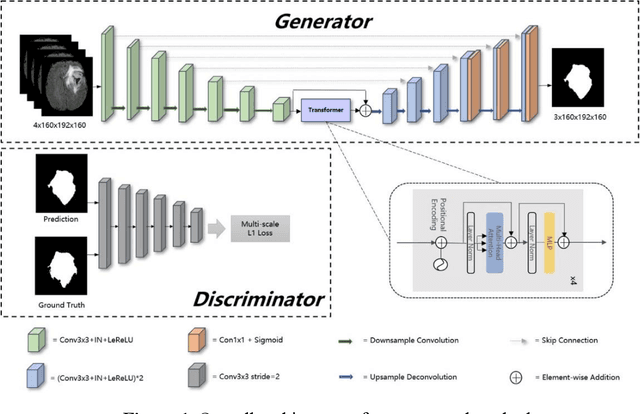

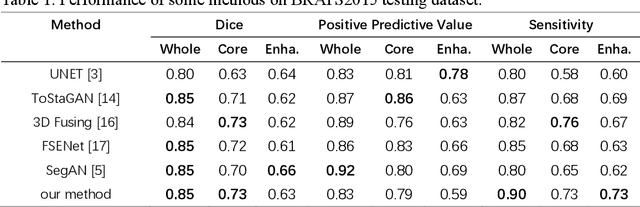

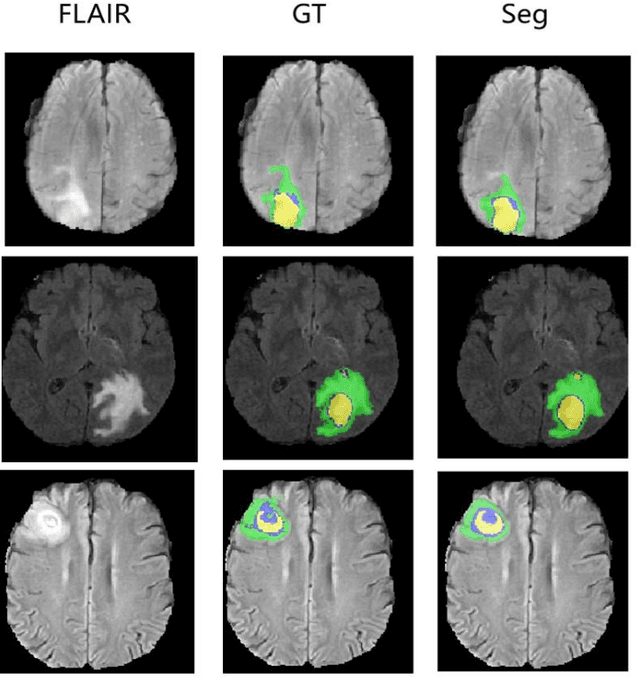

A Transformer-based Generative Adversarial Network for Brain Tumor Segmentation

Jul 29, 2022

Abstract:Brain tumor segmentation remains a challenge in medical image segmentation. With the application of transformers to various computer vision tasks, transformer blocks have shown the capability of learning long-distance dependencies in global space, which is complementary to CNNs. In this paper, we propose a novel transformer-based generative adversarial network to automatically segment brain tumors from multi-modality MRI. Our architecture consists of a generator and a discriminator, which are trained in a min-max game. The generator is based on a typical "U-shaped" encoder-decoder architecture, whose bottom layer is composed of transformer blocks with ResNet. In addition, the generator is trained with deep supervision. The discriminator we designed is a CNN-based network with a multi-scale $L_{1}$ loss, which has been shown to be effective for medical semantic image segmentation. To validate the effectiveness of our method, we conducted experiments on the BRATS2015 dataset, achieving comparable or better performance than previous state-of-the-art methods.

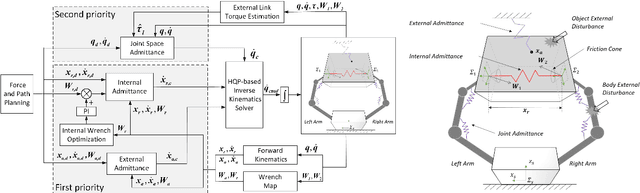

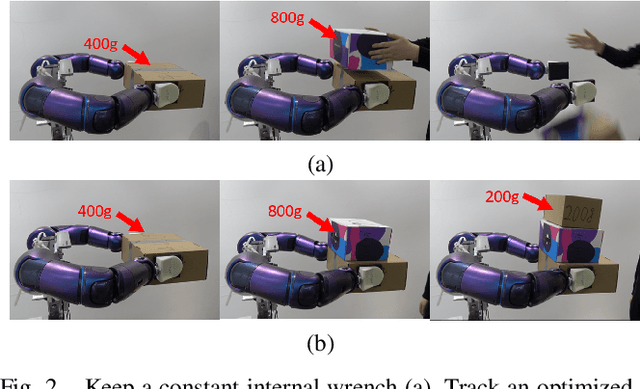

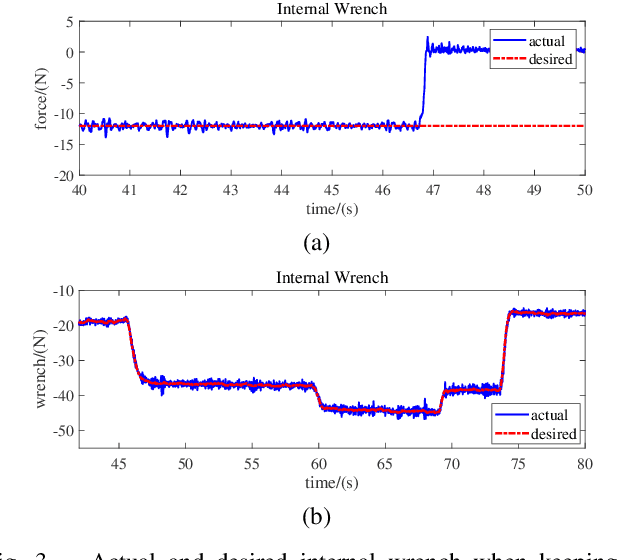

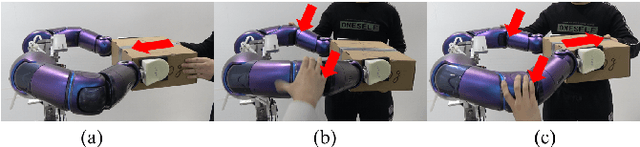

Prioritized Hierarchical Compliance Control for Dual-Arm Robot Stable Clamping

Dec 20, 2021

Abstract:When a dual-arm robot clamps a rigid object in a human environment, the environment or a collaborating human may impose incidental disturbances on the object or the robot arm, leading to clamping failure, damage to the robot, or even injury to the human. This research proposes a prioritized hierarchical compliance control to simultaneously deal with the two types of disturbances in dual-arm robot clamping. First, we use hierarchical quadratic programming (HQP) to solve the robot inverse kinematics under the joint constraints and prioritize the compliance for the disturbance on the object over that on the robot arm. Second, we estimate the disturbance forces through the momentum observer with the F/T sensors and adopt admittance control to realize the compliance. Finally, we perform verification experiments on a 14-DOF position-controlled dual-arm robot, WalkerX, clamping a rigid object stably while realizing compliance against the disturbances.

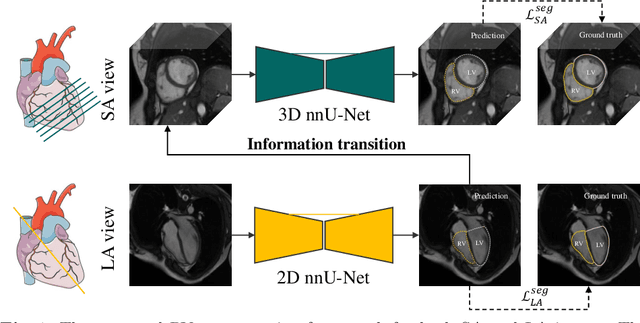

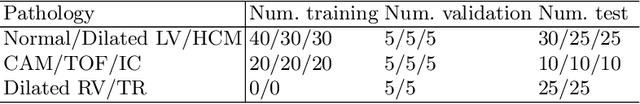

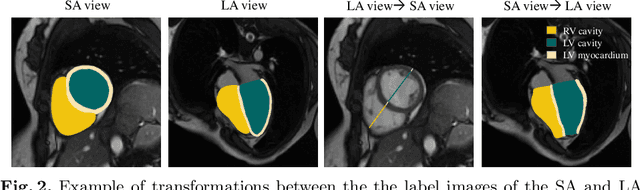

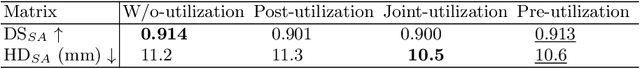

Right Ventricular Segmentation from Short- and Long-Axis MRIs via Information Transition

Sep 05, 2021

Abstract:Right ventricular (RV) segmentation from magnetic resonance imaging (MRI) is a crucial step for cardiac morphology and function analysis. However, automatic RV segmentation from MRI is still challenging, mainly due to the heterogeneous intensity, the complex variable shapes, and the unclear RV boundary. Moreover, current methods for RV segmentation tend to suffer from performance degradation at the basal and apical slices of MRI. In this work, we propose an automatic RV segmentation framework, where the information from long-axis (LA) views is utilized to assist the segmentation of short-axis (SA) views via information transition. Specifically, we employ the transformed segmentation from LA views as prior information to extract the ROI from SA views for better segmentation. The information transition aims to remove the surrounding ambiguous regions in the SA views. We tested our model on a public dataset with 360 multi-center, multi-vendor and multi-disease subjects that consist of both LA and SA MRIs. Our experimental results show that including LA views can effectively improve the accuracy of the SA segmentation. Our model is publicly available at https://github.com/NanYoMy/MMs-2.

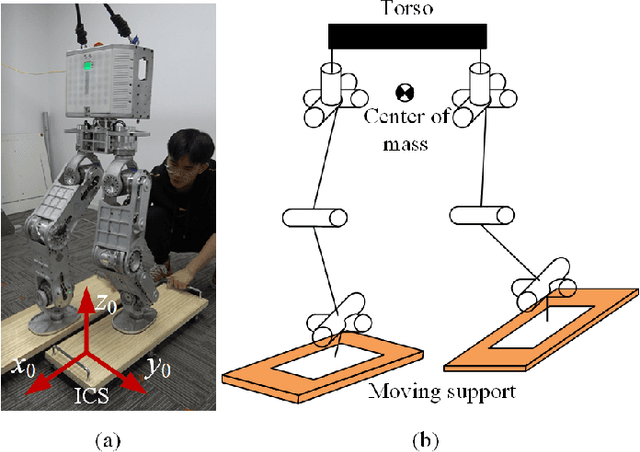

Dynamic Balancing of Humanoid Robot Walker3 with Proprioceptive Actuation: Systematic Design of Algorithm, Software and Hardware

Aug 09, 2021

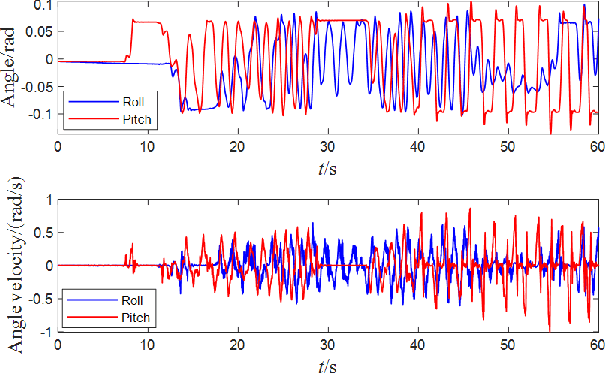

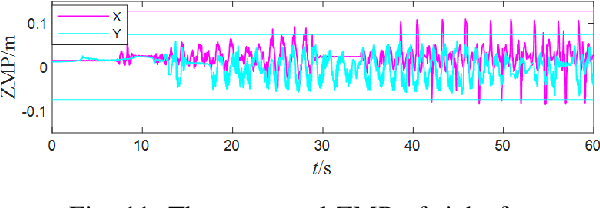

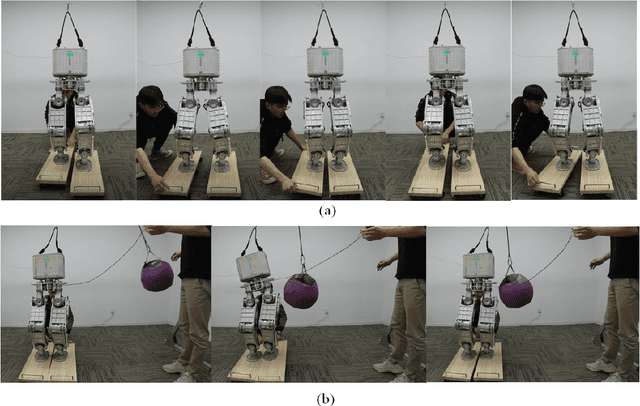

Abstract:Dynamic balancing under uncertain disturbances is important for a humanoid robot and requires a good capability of coordinating the entire body redundancy to execute multiple tasks. Whole-body control (WBC) based on hierarchical optimization has been generally accepted and utilized in torque-controlled robots. A good hierarchy is the prerequisite for WBC and can be predefined according to prior knowledge. However, real-time computation can be problematic in physical applications given the computational complexity of WBC. For robots with proprioceptive actuation, the joint friction in the gear reducer also degrades the torque tracking performance. In our paper, a reasonable hierarchy of tasks and constraints is first customized for robot dynamic balancing. Then a real-time WBC is implemented via computationally efficient WBC software. The method runs on a modular master control system, UBTMaster, characterized by real-time communication and powerful computing capability. After the joint friction is accounted for through model identification, extensive experiments on various balancing scenarios are conducted on a humanoid, Walker3, with proprioceptive actuation. The robot shows outstanding balance performance even under external impulses and when its two feet are independently subjected to inclination and shift disturbances. The results demonstrate that, with a strict hierarchy, real-time computation, and careful handling of joint friction, a robot with proprioceptive actuation can manage dynamic physical interactions with unstructured environments well.
