Lingyao Zhang

Data Interpreter: An LLM Agent For Data Science

Mar 12, 2024

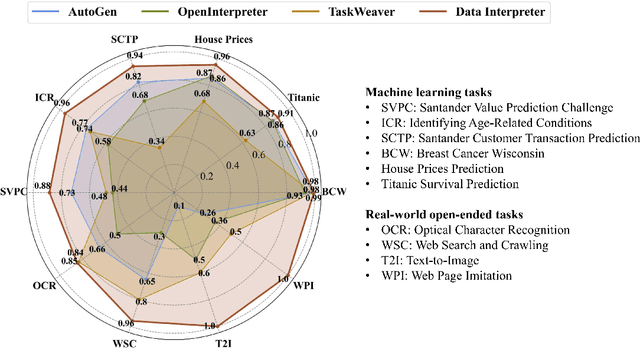

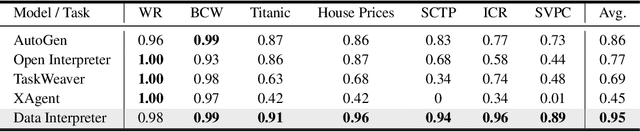

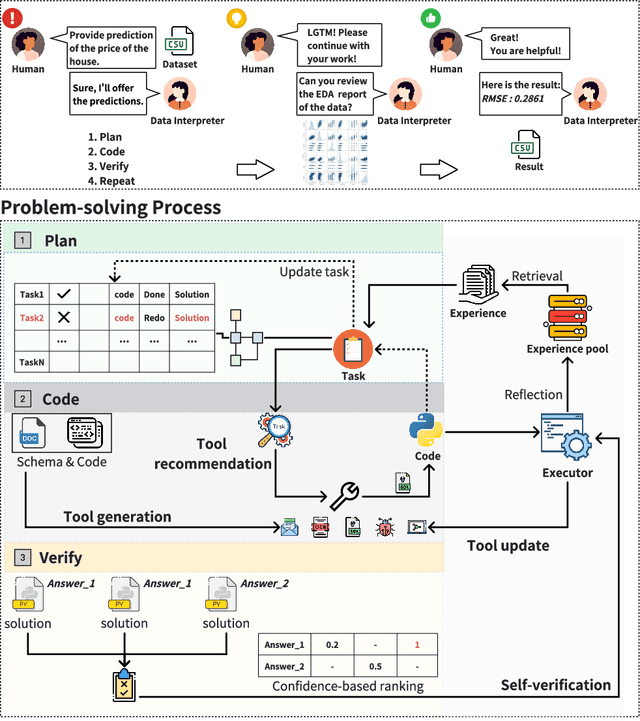

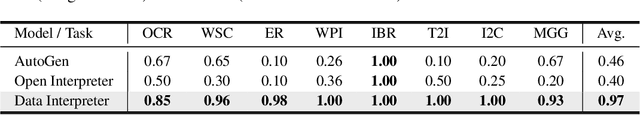

Abstract: Large Language Model (LLM)-based agents have demonstrated remarkable effectiveness. However, their performance can be compromised in data science scenarios that require real-time data adjustment, expertise in optimization due to complex dependencies among various tasks, and the ability to identify logical errors for precise reasoning. In this study, we introduce the Data Interpreter, a solution designed to solve data science problems with code. It emphasizes three pivotal techniques: 1) dynamic planning with hierarchical graph structures for real-time data adaptability; 2) dynamic tool integration to enhance code proficiency during execution, enriching the requisite expertise; 3) identification of logical inconsistencies in feedback, with efficiency enhanced through experience recording. We evaluate the Data Interpreter on various data science and real-world tasks. Compared to open-source baselines, it demonstrated superior performance, exhibiting significant improvements in machine learning tasks, increasing from 0.86 to 0.95. Additionally, it showed a 26% increase on the MATH dataset and a remarkable 112% improvement in open-ended tasks. The solution will be released at https://github.com/geekan/MetaGPT.
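The idea of planning over a task graph can be illustrated with a minimal sketch. This is not the Data Interpreter's implementation: the task names, the `run_plan` and `downstream` helpers, and the simulated failure are all hypothetical, and the sketch only shows how a dependency graph lets a planner find which tasks would need to be regenerated after one step fails.

```python
from graphlib import TopologicalSorter

# Hypothetical plan: each task maps to the set of tasks it depends on.
graph = {
    "load": set(),
    "clean": {"load"},
    "features": {"clean"},
    "train": {"features"},
    "report": {"train", "clean"},
}

def load(results):
    return [3, 1, None, 2]

def clean(results):
    return [x for x in results["load"] if x is not None]

def features(results):
    # Simulated failure, standing in for generated code that raises at run time.
    raise ValueError("simulated failure")

actions = {"load": load, "clean": clean, "features": features}

def run_plan(graph, actions):
    """Run tasks in dependency order; stop at the first task that raises."""
    results = {}
    for task in TopologicalSorter(graph).static_order():
        try:
            results[task] = actions[task](results)
        except Exception:
            return results, task
    return results, None

def downstream(graph, failed):
    """Return the failed task plus every task that depends on it, directly or not."""
    affected = {failed}
    changed = True
    while changed:
        changed = False
        for task, deps in graph.items():
            if task not in affected and affected & set(deps):
                affected.add(task)
                changed = True
    return affected

results, failed = run_plan(graph, actions)
to_replan = downstream(graph, failed)
```

In this sketch the completed `load` and `clean` results are kept, and only the failed task and its dependents are marked for replanning.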

Map-Adaptive Goal-Based Trajectory Prediction

Sep 09, 2020

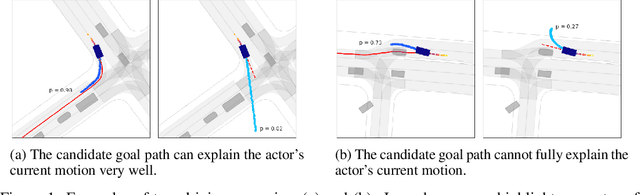

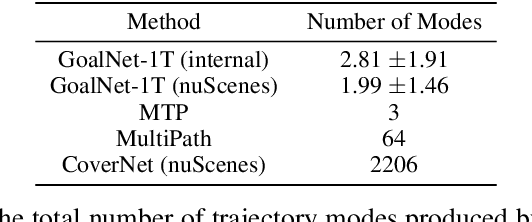

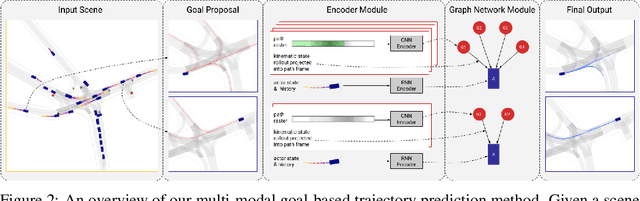

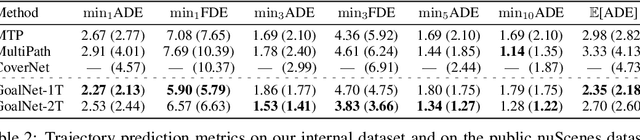

Abstract: We present a new method for multi-modal, long-term vehicle trajectory prediction. Our approach relies on using lane centerlines captured in rich maps of the environment to generate a set of proposed goal paths for each vehicle. Using these paths -- which are generated at run time and therefore dynamically adapt to the scene -- as spatial anchors, we predict a set of goal-based trajectories along with a categorical distribution over the goals. This approach allows us to directly model the goal-directed behavior of traffic actors, which unlocks the potential for more accurate long-term prediction. Our experimental results on both a large-scale internal driving dataset and on the public nuScenes dataset show that our model outperforms state-of-the-art approaches for vehicle trajectory prediction over a 6-second horizon. We also empirically demonstrate that our model is better able to generalize to road scenes from a completely new city than existing methods.
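A categorical distribution over a variable number of goal paths can be sketched as follows. The abstract does not say how the distribution is computed; the softmax normalization, the function names, and the example scores and trajectories below are assumptions for illustration only.

```python
import math

def goal_distribution(scores):
    """Normalize one raw score per goal path into probabilities (softmax, assumed)."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def most_likely(trajectories, scores):
    """Return the trajectory for the highest-probability goal and that probability."""
    probs = goal_distribution(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return trajectories[best], probs[best]

# Hypothetical scene: three candidate goal paths, one predicted trajectory each,
# given as short lists of (x, y) waypoints.
trajectories = [
    [(0.0, 0.0), (5.0, 0.0), (10.0, 0.0)],   # continue straight
    [(0.0, 0.0), (5.0, 1.0), (9.0, 4.0)],    # turn left
    [(0.0, 0.0), (5.0, -1.0), (9.0, -4.0)],  # turn right
]
scores = [2.0, 0.5, -1.0]

probs = goal_distribution(scores)
best_trajectory, best_prob = most_likely(trajectories, scores)
```

Because the normalization runs over whatever list of scores it receives, the same code handles scenes with different numbers of candidate goal paths.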

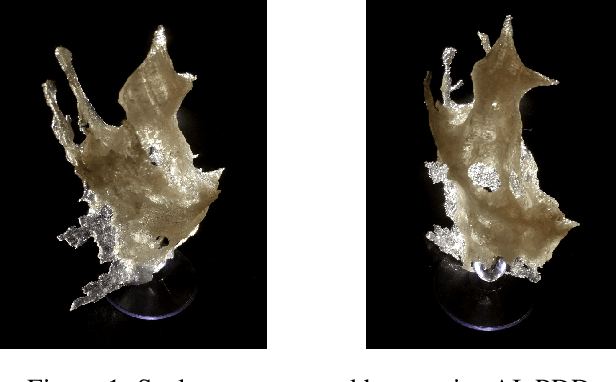

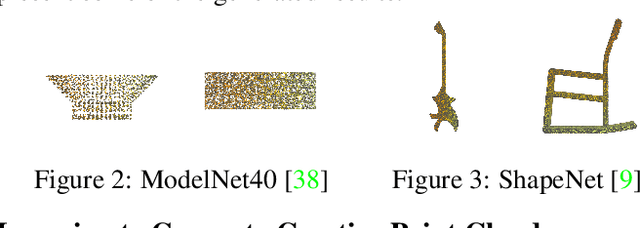

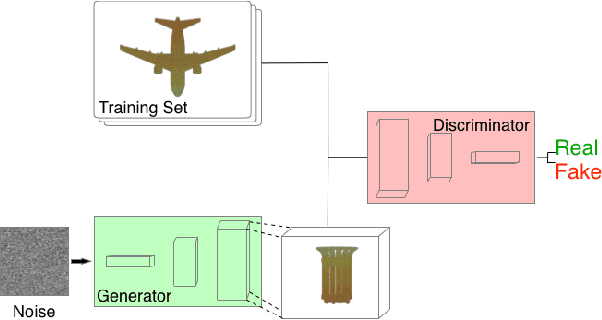

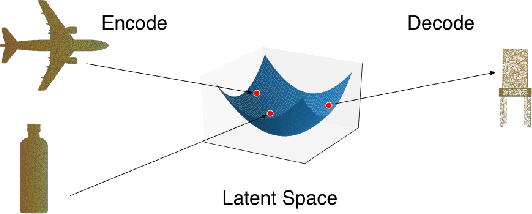

Developing Creative AI to Generate Sculptural Objects

Aug 20, 2019

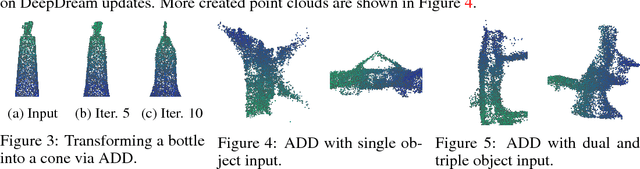

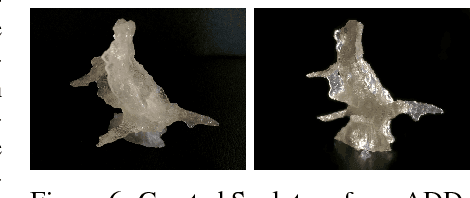

Abstract: We explore the intersection of human and machine creativity by generating sculptural objects through machine learning. This research raises questions about both the technical details of automatic art generation and the interaction between AI and people, as both artists and the audience of art. We introduce two algorithms for generating 3D point clouds and then discuss their actualization as sculpture and incorporation into a holistic art installation. Specifically, the Amalgamated DeepDream (ADD) algorithm solves the sparsity problem caused by the naive DeepDream-inspired approach and generates creative and printable point clouds. The Partitioned DeepDream (PDD) algorithm further allows us to explore more diverse 3D object creation by combining point cloud clustering algorithms and ADD.

Hallucinating Point Cloud into 3D Sculptural Object

Nov 29, 2018

Abstract: Our team of artists and machine learning researchers designed a creative algorithm that can generate authentic sculptural artworks. These artworks do not mimic any given forms and cannot be easily categorized into the dataset categories. Our approach extends DeepDream from images to 3D point clouds. The proposed algorithm, Amalgamated DeepDream (ADD), leverages the properties of point clouds to create objects with better quality than the naive extension. ADD presents promise for the creativity of machines, the kind of creativity that pushes artists to explore novel methods or materials and to create new genres (for example, moving from Realism to Abstract Expressionism or to Minimalism) instead of creating variations of existing forms or styles within one genre. Lastly, we present the sculptures that are 3D printed based on the point clouds created by ADD.
