Libin Lu

WaveNet-SF: A Hybrid Network for Retinal Disease Detection Based on Wavelet Transform in the Spatial-Frequency Domain

Jan 21, 2025

Abstract: Retinal diseases are a leading cause of vision impairment and blindness, and timely diagnosis is critical for effective treatment. Optical Coherence Tomography (OCT) has become a standard imaging modality for retinal disease diagnosis, but OCT images often suffer from speckle noise, complex lesion shapes, and varying lesion sizes, which make interpretation challenging. In this paper, we propose a novel framework, WaveNet-SF, to enhance retinal disease detection by integrating spatial-domain and frequency-domain learning. The framework uses wavelet transforms to decompose OCT images into low- and high-frequency components, enabling the model to extract both global structural features and fine-grained details. To improve lesion detection, we introduce a multi-scale wavelet spatial attention (MSW-SA) module, which enhances the model's focus on regions of interest at multiple scales. Additionally, a high-frequency feature compensation (HFFC) block is incorporated to recover edge information lost during wavelet decomposition, suppress noise, and preserve fine details crucial for lesion detection. Our approach achieves state-of-the-art (SOTA) classification accuracies of 97.82% and 99.58% on the OCT-C8 and OCT2017 datasets, respectively, surpassing existing methods. These results demonstrate the efficacy of WaveNet-SF in addressing the challenges of OCT image analysis and its potential as a powerful tool for retinal disease diagnosis.
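The decomposition into low- and high-frequency components mentioned in the abstract can be illustrated with a single-level 2-D Haar wavelet transform. This is only a minimal sketch: the abstract does not say which wavelet family or how many decomposition levels WaveNet-SF uses, so the Haar choice, the function name `haar_dwt2`, and the sub-band variable names are assumptions made for illustration.

```python
import numpy as np


def haar_dwt2(image):
    """Single-level 2-D Haar transform (illustrative, not the paper's code).

    Returns one low-frequency approximation band and three
    high-frequency detail bands, each half the input size.
    """
    x = np.asarray(image, dtype=np.float64)
    if x.ndim != 2 or x.shape[0] % 2 or x.shape[1] % 2:
        raise ValueError("expected a 2-D array with even height and width")

    s = np.sqrt(2.0)  # orthonormal scaling keeps total energy unchanged

    # Step 1: combine neighbouring columns (filtering along each row).
    lo = (x[:, 0::2] + x[:, 1::2]) / s  # local averages
    hi = (x[:, 0::2] - x[:, 1::2]) / s  # local differences

    # Step 2: combine neighbouring rows (filtering along each column).
    approx = (lo[0::2, :] + lo[1::2, :]) / s     # smooth, global structure
    detail_a = (lo[0::2, :] - lo[1::2, :]) / s   # changes between rows
    detail_b = (hi[0::2, :] + hi[1::2, :]) / s   # changes between columns
    detail_c = (hi[0::2, :] - hi[1::2, :]) / s   # diagonal changes
    return approx, (detail_a, detail_b, detail_c)


if __name__ == "__main__":
    # Stand-in for an OCT slice; real data would be loaded from disk.
    demo = np.random.default_rng(0).random((8, 8))
    approx, details = haar_dwt2(demo)
    print(approx.shape, [d.shape for d in details])
```

The approximation band carries the coarse structure, while the three detail bands carry edges and fine texture, which is also where speckle noise tends to concentrate.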

Fusing Structural and Functional Connectivities using Disentangled VAE for Detecting MCI

Jun 16, 2023

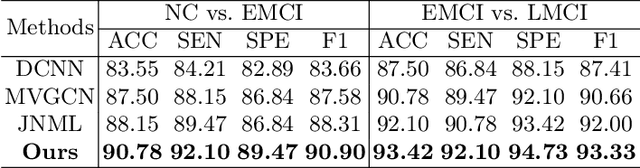

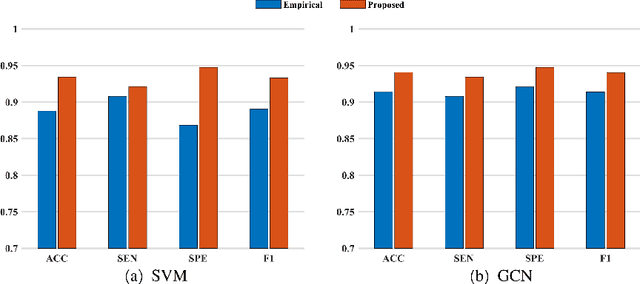

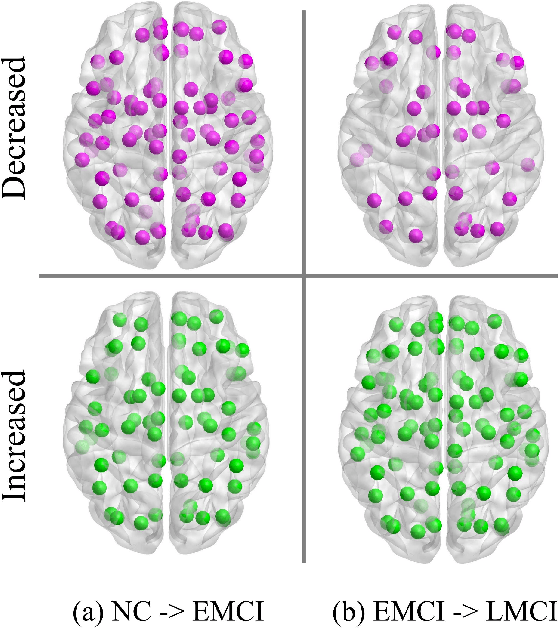

Abstract: Brain network analysis is a useful approach to studying human brain disorders because it can distinguish patients from healthy people by detecting abnormal connections. Because different neuroimaging modalities provide complementary information, multimodal fusion has considerable potential for improving prediction performance. However, fusing multimodal medical images effectively so that they complement each other remains a challenging problem. In this paper, a novel hierarchical structural-functional connectivity fusing (HSCF) model is proposed to construct brain structural-functional connectivity matrices and predict abnormal brain connections based on functional magnetic resonance imaging (fMRI) and diffusion tensor imaging (DTI). Specifically, prior knowledge is incorporated into the separators, which use graph convolutional networks (GCN) to disentangle the information of each modality, and a disentangled cosine distance loss is devised to ensure that the disentanglement is effective. Moreover, a hierarchical representation fusion module is designed to combine the relevant and effective features of the two modalities, which makes the generated structural-functional connectivity more robust and discriminative in cognitive disease analysis. Extensive experiments on the public Alzheimer's Disease Neuroimaging Initiative (ADNI) database show that the proposed model outperforms competing approaches on classification metrics. Overall, the proposed HSCF model is a promising approach for generating brain structural-functional connectivities and identifying abnormal brain connections as cognitive disease progresses.
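The abstract names a disentangled cosine distance loss but does not give its form. As a point of reference only, the standard cosine distance between two feature vectors can be sketched as below; the function name `cosine_distance` and the `eps` guard are illustrative assumptions, and how the HSCF model combines such distances into its loss is not stated here.

```python
import numpy as np


def cosine_distance(a, b, eps=1e-12):
    """Standard cosine distance, 1 - cos(a, b); not the paper's exact loss."""
    a = np.asarray(a, dtype=np.float64).ravel()
    b = np.asarray(b, dtype=np.float64).ravel()
    # eps avoids division by zero when either vector is all zeros.
    denom = max(float(np.linalg.norm(a) * np.linalg.norm(b)), eps)
    return 1.0 - float(np.dot(a, b)) / denom


if __name__ == "__main__":
    # Hypothetical feature vectors standing in for two learned representations.
    u = np.array([1.0, 0.0, 0.0])
    v = np.array([0.0, 1.0, 0.0])
    print(cosine_distance(u, u), cosine_distance(u, v))
```

The distance is 0 for vectors pointing the same way, 1 for orthogonal vectors, and 2 for opposite vectors, so it measures how different the directions of two representations are regardless of their magnitudes.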
