Lehel Ferenczi

Low performing pixel correction in computed tomography with unrolled network and synthetic data training

Jan 28, 2026

Abstract:Low performance pixels (LPP) in Computed Tomography (CT) detectors lead to ring and streak artifacts in the reconstructed images, making them clinically unusable. In recent years, several solutions have been proposed to correct LPP artifacts, either in the image domain or in the sinogram domain, using supervised deep learning methods. However, these methods require dedicated datasets for training, which are expensive to collect. Moreover, existing approaches focus solely on either image-space or sinogram-space correction, ignoring the intrinsic correlations that arise from the forward operation of the CT geometry. In this work, we propose an unrolled dual-domain method based on synthetic data to correct LPP artifacts. Specifically, the intrinsic correlations of LPP between the sinogram and image domains are leveraged through synthetic data generated from natural images, enabling the trained model to correct artifacts without requiring any real-world clinical data. In experiments simulating defects in 1-2% of detectors near the isocenter, the proposed method outperformed the state-of-the-art approaches by a large margin. The results indicate that our solution can correct LPP artifacts without the cost of data collection for model training, and that it is adaptable to different scanner settings for software-based applications.
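To make the sinogram-domain picture concrete, here is a minimal sketch of how LPP could be simulated: a defective detector pixel corrupts the same column of the sinogram at every projection angle, which is what produces rings after reconstruction. This is not the paper's unrolled network. The function names, the `(n_angles, n_detectors)` layout, the `center_band` parameter, and the linear-interpolation baseline are all assumptions made for illustration.

```python
import numpy as np

def simulate_lpp(sinogram, fraction=0.02, center_band=0.2, seed=0):
    """Zero out a fraction of detector columns near the detector center.

    Assumes sinogram has shape (n_angles, n_detectors). The defect model
    (dead pixels set to zero) is a simplification chosen for illustration.
    """
    rng = np.random.default_rng(seed)
    n_det = sinogram.shape[1]
    n_bad = max(1, int(round(fraction * n_det)))
    # Restrict candidates to a band around the central detector element.
    half = max(n_bad, int(center_band * n_det / 2))
    lo, hi = max(n_det // 2 - half, 0), min(n_det // 2 + half, n_det)
    bad = np.sort(rng.choice(np.arange(lo, hi), size=n_bad, replace=False))
    corrupted = sinogram.copy()
    corrupted[:, bad] = 0.0  # same columns fail at every angle
    return corrupted, bad

def interpolate_columns(sinogram, bad):
    """Naive baseline: fill bad columns by linear interpolation per angle."""
    good = np.setdiff1d(np.arange(sinogram.shape[1]), bad)
    fixed = sinogram.copy()
    for row in fixed:  # each row is a view, so the assignment edits `fixed`
        row[bad] = np.interp(bad, good, row[good])
    return fixed

# Toy sinogram: a linear ramp across 100 detectors, repeated for 8 angles.
sino = np.tile(np.linspace(1.0, 2.0, 100), (8, 1))
corrupted, bad = simulate_lpp(sino)
restored = interpolate_columns(corrupted, bad)
```

Linear interpolation is exact on this toy ramp but not on real projection data, which is one reason learned corrections are of interest.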

SynBT: High-quality Tumor Synthesis for Breast Tumor Segmentation by 3D Diffusion Model

Sep 03, 2025

Abstract:Synthetic tumors in medical images offer controllable characteristics that facilitate the training of machine learning models, leading to improved segmentation performance. However, existing tumor synthesis methods perform suboptimally when the tumor occupies a large spatial volume, as in breast tumor segmentation in MRI with a large field-of-view (FOV), because commonly used tumor generation methods are based on small patches. In this paper, we propose a 3D medical diffusion model, called SynBT, to generate high-quality breast tumors (BT) in contrast-enhanced MRI images. The proposed model consists of a patch-to-volume autoencoder, which compresses the high-resolution MRIs into a compact latent space while preserving the resolution of volumes with a large FOV. Using the obtained latent space feature vector, a mask-conditioned diffusion model synthesizes breast tumors within selected regions of breast tissue, resulting in realistic tumor appearances. We evaluated the proposed method on a tumor segmentation task, which demonstrated that the proposed high-quality tumor synthesis method can improve common segmentation models by 2-3% Dice Score on a large public dataset, and therefore benefits tumor segmentation in MRI images.

Quality Enhancement of Radiographic X-ray Images by Interpretable Mapping

Jan 21, 2025

Abstract:X-ray imaging is the most widely used medical imaging modality. However, in common practice, inconsistency in the initial presentation of X-ray images is a frequent complaint from radiologists. Different patient positions, patient habitus and scanning protocols can lead to differences in image presentation, e.g., differences in brightness and contrast globally or regionally. To compensate for this, clinical experts must adjust the images to the desired presentation, which can be time-consuming. Existing deep-learning-based end-to-end solutions can automatically correct images with promising performance. Nevertheless, these methods are hard to interpret and difficult for clinical experts to understand. In this manuscript, a novel interpretable mapping method based on deep learning is proposed, which automatically enhances the image brightness and contrast globally and locally. Because the model is inspired by the workflow of brightness and contrast manipulation, it can also provide interpretable pixel maps that explain the motivation for the image enhancement. Experiments on clinical datasets show that the proposed method can provide consistent brightness and contrast correction on X-ray images, with an accuracy of 24.75 dB PSNR and 0.8431 SSIM.
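For readers unfamiliar with the PSNR figure quoted above, the standard definition is $10 \log_{10}(R^2 / \mathrm{MSE})$, where $R$ is the intensity range. The sketch below implements that definition only. The function name and the `data_range` default are assumptions, and the abstract does not state the intensity range or normalization used for the reported numbers.

```python
import numpy as np

def psnr(reference, test, data_range=1.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape.

    data_range is the span of valid intensities (assumed 1.0 here,
    i.e. images normalized to [0, 1]).
    """
    reference = np.asarray(reference, dtype=np.float64)
    test = np.asarray(test, dtype=np.float64)
    mse = np.mean((reference - test) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(data_range ** 2 / mse))

# A uniform error of 0.1 on a [0, 1] image gives an MSE of 0.01.
ref = np.zeros((4, 4))
value = psnr(ref, ref + 0.1)
```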

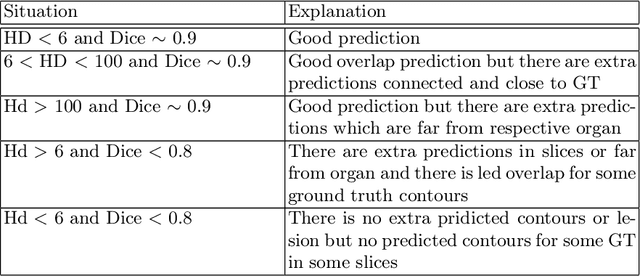

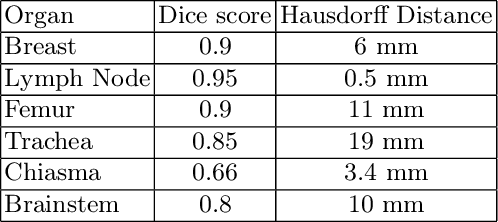

Automated Identification of Failure Cases in Organ at Risk Segmentation Using Distance Metrics: A Study on CT Data

Aug 21, 2023

Abstract:Automated organ at risk (OAR) segmentation is crucial for radiation therapy planning in CT scans, but the contours generated by automated models can be inaccurate, potentially leading to treatment planning issues. The reasons for these inaccuracies vary, and include unclear organ boundaries and inaccurate ground truth due to annotation errors. To improve the model's performance, it is necessary to identify these failure cases during the training process and to correct them with post-processing techniques. However, this process can be time-consuming, as it traditionally requires manual inspection of the predicted output. This paper proposes a method to automatically identify failure cases by setting a threshold for the combination of Dice and Hausdorff distances. This approach reduces the time-consuming task of visually inspecting predicted outputs, allowing for faster identification of failure case candidates. The method was evaluated on 20 cases of six different organs in CT images from clinical expert curated datasets. By setting the thresholds for the Dice and Hausdorff distances, the study was able to differentiate between various states of failure cases and evaluate over 12 cases visually. This thresholding approach could be extended to other organs, leading to faster identification of failure cases and thereby improving the quality of radiation therapy planning.
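The thresholding idea can be sketched as follows. The abstract does not give the threshold values or the exact rule for combining the two metrics, so `dice_min`, `hd_max`, the "flag if either metric fails" rule, and all function names here are hypothetical. Distances are in voxel units, and the brute-force Hausdorff computation is only suitable for small masks.

```python
import numpy as np

def dice_score(pred, truth):
    """Dice overlap between two binary masks (1.0 if both are empty)."""
    pred, truth = np.asarray(pred, dtype=bool), np.asarray(truth, dtype=bool)
    total = pred.sum() + truth.sum()
    if total == 0:
        return 1.0
    return float(2.0 * np.logical_and(pred, truth).sum() / total)

def hausdorff_distance(pred, truth):
    """Symmetric Hausdorff distance between foreground voxel sets."""
    p = np.argwhere(np.asarray(pred, dtype=bool))
    t = np.argwhere(np.asarray(truth, dtype=bool))
    if len(p) == 0 and len(t) == 0:
        return 0.0
    if len(p) == 0 or len(t) == 0:
        return float("inf")
    # Pairwise Euclidean distances, shape (len(p), len(t)).
    d = np.linalg.norm(p[:, None, :] - t[None, :, :], axis=-1)
    return float(max(d.min(axis=1).max(), d.min(axis=0).max()))

def is_failure_candidate(pred, truth, dice_min=0.8, hd_max=5.0):
    """Flag a case when either metric crosses its (hypothetical) threshold."""
    return (dice_score(pred, truth) < dice_min
            or hausdorff_distance(pred, truth) > hd_max)

truth = np.zeros((10, 10), dtype=bool)
truth[2:6, 2:6] = True
good = truth.copy()
outlier = truth.copy()
outlier[9, 9] = True  # one stray voxel far from the organ
```

In this toy case the stray voxel barely changes the Dice score but pushes the Hausdorff distance past `hd_max`, which illustrates why combining the two metrics can catch failures that overlap alone would miss.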

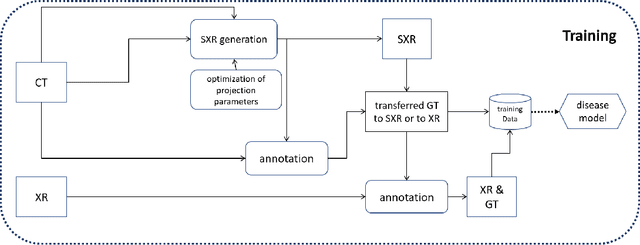

Pristine annotations-based multi-modal trained artificial intelligence solution to triage chest X-ray for COVID-19

Nov 10, 2020

Abstract:The COVID-19 pandemic continues to spread and impact the well-being of the global population. The front-line modalities, including computed tomography (CT) and X-ray, play an important role in triaging COVID patients. Considering the limited access to resources (both hardware and trained personnel) and decontamination considerations, CT may not be ideal for triaging suspected subjects. Artificial intelligence (AI) assisted X-ray based applications for triaging and monitoring, which require experienced radiologists to identify COVID patients in a timely manner and to further delineate the disease region boundary, are seen as a promising solution. Our proposed solution differs from existing solutions by industry and academic communities, and demonstrates a functional AI model that triages by inferencing on a single X-ray image, while the deep-learning model is trained using both X-ray and CT data. We report on how such multi-modal training improves the solution compared to X-ray only training. The multi-modal solution increases the AUC (area under the receiver operating characteristic curve) from 0.89 to 0.93 and also positively impacts the Dice coefficient (0.59 to 0.62) for localizing the pathology. To the best of our knowledge, it is the first X-ray solution to leverage multi-modal information in its development.
