Latif U. Khan

Large Language Models-Empowered Wireless Networks: Fundamentals, Architecture, and Challenges

Jun 12, 2025

Abstract: The rapid advancement of wireless networks has created numerous challenges as their demands shift from quality of service towards novel quality-of-experience metrics (e.g., user-defined metrics that capture the sense of physical experience in haptics applications). Meanwhile, large language models (LLMs) have emerged as promising solutions for many difficult and complex applications and tasks. Together, these trends motivate the integration of LLMs and wireless networks. However, this integration is challenging and requires careful design. Therefore, in this article, we present the notion of rational wireless networks powered by *telecom LLMs*, namely, *LLM-native wireless systems*. We provide fundamentals, a vision, and a case study of the distributed implementation of LLM-native wireless systems. In the case study, we propose a solution based on double deep Q-learning (DDQN) that outperforms existing DDQN solutions. Finally, we provide open challenges.
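The abstract does not say how the case study's DDQN variant is built, so the following is only a minimal sketch of the standard double Q-learning target that such solutions are generally based on: the online network selects the next action and the target network evaluates it. The function name, arguments, and default discount factor are illustrative assumptions, not taken from the article.

```python
def double_dqn_target(reward, next_q_online, next_q_target, gamma=0.99, done=False):
    """Standard double Q-learning target (hypothetical helper, not the paper's code).

    next_q_online: Q-values of the next state from the online network.
    next_q_target: Q-values of the next state from the target network.
    """
    if done:
        # No bootstrapping from a terminal state.
        return reward
    # Action selection uses the online network ...
    best_action = max(range(len(next_q_online)), key=lambda a: next_q_online[a])
    # ... while action evaluation uses the target network.
    return reward + gamma * next_q_target[best_action]


if __name__ == "__main__":
    print(double_dqn_target(1.0, [0.2, 0.9], [0.5, 0.3], gamma=0.5))
```

Decoupling selection from evaluation in this way is what distinguishes double Q-learning from the plain DQN target, which takes the maximum of the target network's own estimates.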

Robust Federated Learning on Edge Devices with Domain Heterogeneity

May 15, 2025

Abstract: Federated Learning (FL) allows collaborative training while ensuring data privacy across distributed edge devices, making it a popular solution for privacy-sensitive applications. However, FL faces significant challenges due to statistical heterogeneity, particularly domain heterogeneity, which impedes the global model's convergence. In this study, we introduce a new framework that addresses this challenge by using prototype augmentation to improve the generalization ability of the FL global model under domain heterogeneity. Specifically, we introduce FedAPC (Federated Augmented Prototype Contrastive Learning), a prototype-based FL framework designed to enhance feature diversity and model robustness. FedAPC leverages prototypes derived from the mean features of augmented data to capture richer representations. By aligning local features with global prototypes, we enable the model to learn meaningful semantic features while reducing overfitting to any specific domain. Experimental results on the Office-10 and Digits datasets show that our framework outperforms state-of-the-art (SOTA) baselines.
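As a rough illustration of the two ideas named in the abstract, prototypes as class-wise mean features and alignment of local features with prototypes, a minimal sketch might look as follows. The exact FedAPC loss is not given in the abstract; the cosine-similarity softmax loss, the temperature value, and all function names here are assumptions chosen for illustration.

```python
import math
from collections import defaultdict


def class_prototypes(features, labels):
    """Return one prototype per class: the mean of that class's feature vectors."""
    sums, counts = {}, defaultdict(int)
    for vec, y in zip(features, labels):
        if y not in sums:
            sums[y] = [0.0] * len(vec)
        sums[y] = [s + v for s, v in zip(sums[y], vec)]
        counts[y] += 1
    return {y: [s / counts[y] for s in total] for y, total in sums.items()}


def cosine(u, v):
    """Cosine similarity with a small epsilon to avoid division by zero."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v + 1e-12)


def prototype_contrastive_loss(feature, label, prototypes, temperature=0.5):
    """Assumed generic form: pull a feature towards its own class prototype
    and away from the other prototypes via a softmax over similarities."""
    logits = {y: cosine(feature, p) / temperature for y, p in prototypes.items()}
    m = max(logits.values())
    log_denominator = m + math.log(sum(math.exp(l - m) for l in logits.values()))
    return log_denominator - logits[label]


if __name__ == "__main__":
    # In the setting the abstract describes, the features would come from augmented samples.
    protos = class_prototypes([[1.0, 0.0], [3.0, 0.0], [0.0, 2.0]], [0, 0, 1])
    print(protos)
    print(prototype_contrastive_loss([1.0, 0.0], 0, protos))
```

In a federated setting, each client would compute such prototypes locally and a server would aggregate them into global prototypes; that aggregation step is omitted here.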

Real-Time Aerial Fire Detection on Resource-Constrained Devices Using Knowledge Distillation

Feb 28, 2025

Abstract: Wildfire catastrophes cause significant environmental degradation, human losses, and financial damage. To mitigate these severe impacts, early fire detection and warning systems are crucial. Current systems rely primarily on fixed CCTV cameras with a limited field of view, restricting their effectiveness in large outdoor environments. The fusion of intelligent fire detection with remote sensing improves coverage and mobility, enabling monitoring in remote and challenging areas. Existing approaches predominantly utilize convolutional neural networks and vision transformer models. While these architectures provide high accuracy in fire detection, their computational complexity limits real-time performance on edge devices such as UAVs. In our work, we present a lightweight fire detection model based on MobileViT-S, compressed through the distillation of knowledge from a stronger teacher model. The ablation study highlights the impact of the teacher model and the chosen distillation technique on the model's performance improvement. We generate activation map visualizations using Grad-CAM to confirm the model's ability to focus on relevant fire regions. The high accuracy and efficiency of the proposed model make it well-suited for deployment on satellites, UAVs, and IoT devices for effective fire detection. Experiments on common fire benchmarks demonstrate that our model surpasses the state-of-the-art model by 0.44% and 2.00% while maintaining a compact model size. Our model delivers the highest processing speed among existing works, achieving real-time performance on resource-constrained devices.
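The abstract does not name the distillation technique that was chosen, so the sketch below shows only the widely used soft-target formulation: a KL term between temperature-softened teacher and student distributions, mixed with the usual cross-entropy on the true label. The temperature, the mixing weight `alpha`, and the function names are illustrative assumptions.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over temperature-scaled logits."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, label, temperature=4.0, alpha=0.5):
    """Soft-target distillation loss (assumed form, not the paper's exact loss)."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # KL(teacher || student) on the softened distributions.
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    # Standard cross-entropy of the student on the ground-truth label.
    ce = -math.log(softmax(student_logits)[label])
    # The temperature**2 factor keeps the soft-target gradients on a comparable scale.
    return alpha * temperature ** 2 * kl + (1 - alpha) * ce


if __name__ == "__main__":
    # Two-class toy example, e.g. fire vs. no fire.
    print(distillation_loss([0.0, 0.0], [5.0, 0.0], label=0))
```

The student is penalized both for disagreeing with the teacher's softened predictions and for misclassifying the labelled sample; `alpha` trades the two terms off.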

Vision-Language Models for Edge Networks: A Comprehensive Survey

Feb 11, 2025

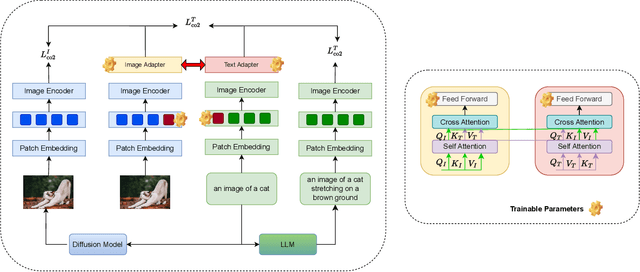

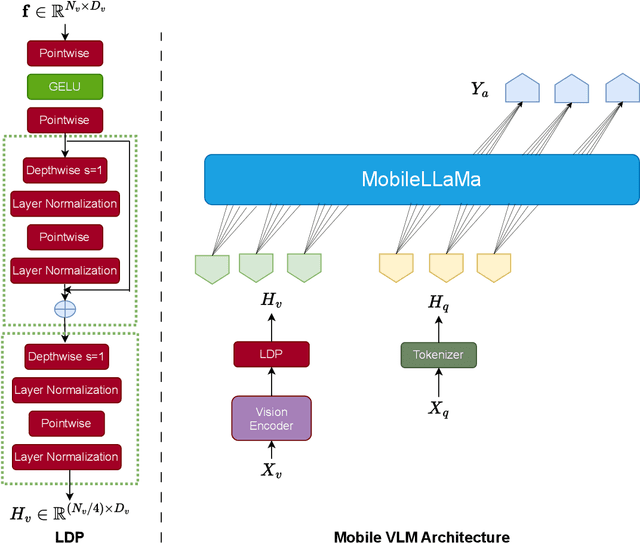

Abstract: Vision Large Language Models (VLMs) combine visual understanding with natural language processing, enabling tasks like image captioning, visual question answering, and video analysis. While VLMs show impressive capabilities across domains such as autonomous vehicles, smart surveillance, and healthcare, their deployment on resource-constrained edge devices remains challenging due to processing power, memory, and energy limitations. This survey explores recent advancements in optimizing VLMs for edge environments, focusing on model compression techniques, including pruning, quantization, knowledge distillation, and specialized hardware solutions that enhance efficiency. We provide a detailed discussion of efficient training and fine-tuning methods, edge deployment challenges, and privacy considerations. Additionally, we discuss the diverse applications of lightweight VLMs across healthcare, environmental monitoring, and autonomous systems, illustrating their growing impact. By highlighting key design strategies, current challenges, and offering recommendations for future directions, this survey aims to inspire further research into the practical deployment of VLMs, ultimately making advanced AI accessible in resource-limited settings.

UAV-Assisted Real-Time Disaster Detection Using Optimized Transformer Model

Jan 21, 2025

Abstract: Disaster recovery and management present significant challenges, particularly in unstable environments and hard-to-reach terrains. These difficulties can be overcome by employing unmanned aerial vehicles (UAVs) equipped with onboard embedded platforms and camera sensors. In this work, we address the critical need for accurate and timely disaster detection by processing aerial imagery onboard, which avoids connectivity, privacy, and latency issues despite the challenges posed by limited onboard hardware resources. We propose a UAV-assisted edge framework for real-time disaster management, leveraging our proposed model optimized for real-time aerial image classification. The model is optimized using post-training quantization techniques. For real-world disaster scenarios, we introduce a novel dataset, DisasterEye, featuring UAV-captured disaster scenes as well as ground-level images taken by individuals on-site. Experimental results demonstrate the effectiveness of our model, achieving high accuracy with reduced inference latency and memory usage on resource-constrained devices. The framework's scalability and adaptability make it a robust solution for real-time disaster detection on resource-limited UAV platforms.
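The abstract mentions post-training quantization without specifying the scheme. As a hedged illustration of the general idea, the sketch below applies a simple per-tensor affine 8-bit quantization to a list of already-trained weights and then dequantizes them; the function names and the choice of an asymmetric int8 mapping are assumptions, not details from the paper.

```python
def quantize_int8(weights):
    """Map float weights to int8 codes with a per-tensor scale and zero point."""
    # Extend the range to include 0.0 so that zero is representable.
    w_min = min(min(weights), 0.0)
    w_max = max(max(weights), 0.0)
    scale = (w_max - w_min) / 255.0
    if scale == 0.0:
        scale = 1.0  # all-zero tensor: any scale works
    zero_point = round(-128 - w_min / scale)
    # Round to the nearest code and clip to the int8 range.
    codes = [max(-128, min(127, round(w / scale) + zero_point)) for w in weights]
    return codes, scale, zero_point


def dequantize(codes, scale, zero_point):
    """Recover approximate float weights from int8 codes."""
    return [(c - zero_point) * scale for c in codes]


if __name__ == "__main__":
    weights = [-1.0, -0.25, 0.0, 0.5, 1.0]
    codes, scale, zero_point = quantize_int8(weights)
    print(codes, scale, zero_point)
    print(dequantize(codes, scale, zero_point))
```

Storing 8-bit codes instead of 32-bit floats cuts weight memory roughly fourfold, at the cost of a reconstruction error on the order of one quantization step per weight.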

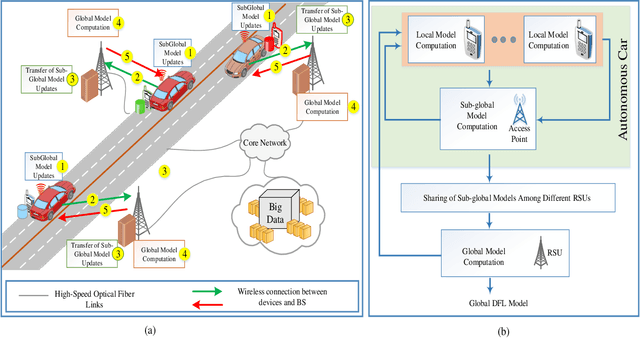

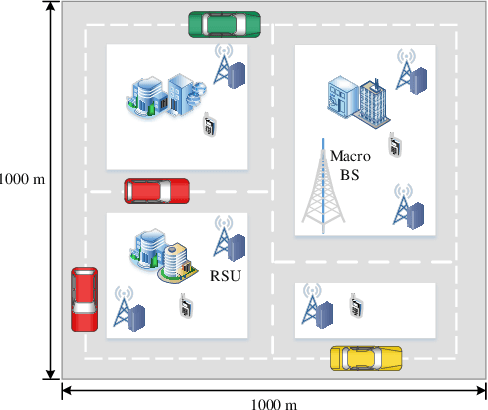

A Dispersed Federated Learning Framework for 6G-Enabled Autonomous Driving Cars

May 20, 2021

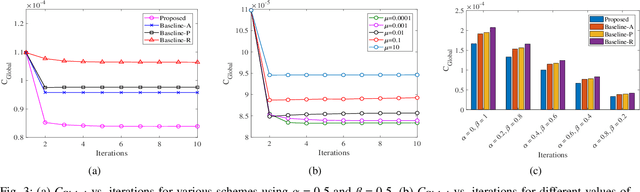

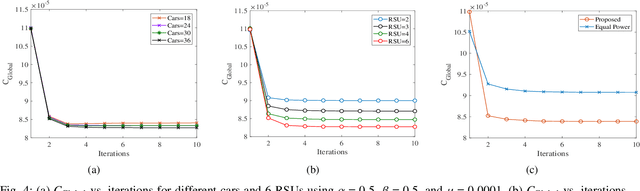

Abstract: Sixth-Generation (6G)-based Internet of Everything applications (e.g., autonomous driving cars) have attracted remarkable interest. Autonomous driving cars using federated learning (FL) have the ability to enable different smart services. Although FL implements distributed machine learning model training without the requirement to move the data of devices to a centralized server, it has its own implementation challenges such as robustness, centralized server security, communication resource constraints, and privacy leakage due to the capability of a malicious aggregation server to infer sensitive information of end-devices. To address the aforementioned limitations, a dispersed federated learning (DFL) framework for autonomous driving cars is proposed to offer robust, communication resource-efficient, and privacy-aware learning. A mixed-integer non-linear programming (MINLP) optimization problem is formulated to jointly minimize the loss in federated learning model accuracy due to packet errors and transmission latency. Due to the NP-hard and non-convex nature of the formulated MINLP problem, we propose a Block Successive Upper-bound Minimization (BSUM) based solution. Furthermore, the performance of the proposed scheme is compared with three baseline schemes. Extensive numerical results are provided to show the validity of the proposed BSUM-based scheme.
