Madyan Alsenwi

Pinching Antenna Systems versus Reconfigurable Intelligent Surfaces in mmWave

Jun 05, 2025

Abstract: Flexible and intelligent antenna designs, such as pinching antenna systems and reconfigurable intelligent surfaces (RIS), have gained extensive research attention due to their potential to enhance wireless channels. This letter, for the first time, presents a comparative study between the emerging pinching antenna systems and RIS in millimeter wave (mmWave) bands. Our results reveal that RIS requires an extremely large number of elements (on the order of $10^4$) to outperform pinching antenna systems in terms of spectral efficiency, which severely impacts the energy efficiency of RIS. Moreover, pinching antenna systems demonstrate greater robustness against hardware impairments and the severe path loss typically encountered in high-frequency mmWave bands.
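Why a RIS needs so many elements can be made plausible with a deliberately simple free-space link budget. The sketch below is an assumption-laden toy, not the letter's system model. It assumes isotropic, unit-amplitude RIS elements in the far field, ideal phase alignment, no direct link, a lossless waveguide for the pinching antenna, and equal transmit power. Under these assumptions the RIS path suffers a product-distance loss $(\lambda/4\pi)^4/(d_1 d_2)^2$ that is compensated only by an $N^2$ array gain, so matching a pinching antenna at distance $d_p$ from the user requires $N \ge 4\pi d_1 d_2/(\lambda d_p)$. The function names and the 28 GHz, 20 m and 3 m values are hypothetical.

```python
import math

C = 3e8  # speed of light (m/s)


def pinching_snr_gain(freq_hz, d_pinch):
    """Free-space power gain from the pinching point to the user (toy: isotropic, lossless waveguide)."""
    lam = C / freq_hz
    return (lam / (4 * math.pi * d_pinch)) ** 2


def ris_snr_gain(freq_hz, d_bs_ris, d_ris_user, n_elements):
    """Toy far-field RIS gain: N isotropic unit-amplitude elements, ideally co-phased.

    Each element contributes lam/(4*pi*d1) * lam/(4*pi*d2); coherent combining
    multiplies the amplitude by N, i.e. an N^2 power gain on top of the
    product-distance path loss.
    """
    lam = C / freq_hz
    per_element = (lam / (4 * math.pi * d_bs_ris)) * (lam / (4 * math.pi * d_ris_user))
    return (n_elements * per_element) ** 2


def min_ris_elements(freq_hz, d_bs_ris, d_ris_user, d_pinch):
    """Smallest N for which the toy RIS gain reaches the toy pinching-antenna gain."""
    lam = C / freq_hz
    return math.ceil(4 * math.pi * d_bs_ris * d_ris_user / (lam * d_pinch))


if __name__ == "__main__":
    f, d1, d2, dp = 28e9, 20.0, 20.0, 3.0  # hypothetical mmWave geometry
    n = min_ris_elements(f, d1, d2, dp)
    print(n, ris_snr_gain(f, d1, d2, n) / pinching_snr_gain(f, dp))
```

With these toy numbers the bound lands well above $10^4$. Realistic element gains, apertures and geometries change the exact count, so the result should be read only as an order-of-magnitude illustration of the double path loss.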

Low-Complexity Channel Estimation Protocol for Non-Diagonal RIS-Assisted Communications

Apr 28, 2025

Abstract: Non-diagonal reconfigurable intelligent surfaces (RIS) offer enhanced wireless signal manipulation over conventional RIS by enabling the signal incident on any of its $M$ elements to be reflected from another element via an $M \times M$ switch array. To fully exploit this flexible configuration, the acquisition of individual channel state information (CSI) is essential. However, because the RIS is passive and lacks signal processing capabilities, only cascaded channel estimation can be performed. This entails estimating the CSI for all permutations of the $M \times M$ switch array, a total of $M!$ possible configurations, to identify the one that maximizes the channel gain. This process leads to long uplink training intervals, which degrade spectral efficiency and increase uplink energy consumption. In this paper, we propose a low-complexity channel estimation protocol that substantially reduces the need for an exhaustive search over all $M!$ permutations by using only three configurations to optimize the non-diagonal RIS switch array and beamforming for single-input single-output (SISO) and multiple-input single-output (MISO) systems. Specifically, our three-stage pilot-based protocol estimates scaled versions of the user-RIS and RIS-base-station (BS) channels in the first two stages using the least-squares (LS) estimator and the ON/OFF protocol commonly used for conventional RIS. In the third stage, the cascaded user-RIS-BS channels are estimated to enable efficient beamforming optimization. Complexity analysis shows that the proposed protocol significantly reduces the BS computational load from $\mathcal{O}(NM\times M!)$ to $\mathcal{O}(NM)$, where $N$ is the number of BS antennas, which is similar to the complexity of conventional ON/OFF-based LS estimation for diagonal RIS.
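Why knowing the individual (scaled) channels can remove the need for an $M!$ search is easiest to see in a simplified SISO setting. The sketch below is not the paper's three-stage protocol. It assumes perfectly known user-RIS channels $h_i$ and RIS-BS channels $g_j$ (each possibly multiplied by a common unknown scale), ideal continuous phase shifts, no direct link, and a received signal $y = \sum_i g_{\pi(i)} e^{j\theta_i} h_i$ when the switch routes incoming element $i$ to outgoing element $\pi(i)$. After co-phasing, the gain is $\sum_i |h_i|\,|g_{\pi(i)}|$, which by the rearrangement inequality is maximized by pairing the magnitudes in sorted order, an $\mathcal{O}(M \log M)$ step. A common scaling of either channel leaves the sort order, and therefore the chosen permutation, unchanged. The function names are hypothetical.

```python
import itertools

import numpy as np


def best_gain_bruteforce(h, g):
    """Reference search over all M! routings: incoming element i -> outgoing element perm[i]."""
    M = len(h)
    best = 0.0
    for perm in itertools.permutations(range(M)):
        # With ideal continuous phases every path can be co-phased, so the
        # achievable amplitude gain is the sum of magnitude products.
        best = max(best, sum(abs(h[i]) * abs(g[perm[i]]) for i in range(M)))
    return best


def best_switch_sorted(h, g):
    """Pair the k-th strongest user-RIS link with the k-th strongest RIS-BS link."""
    perm = np.empty(len(h), dtype=int)
    perm[np.argsort(-np.abs(h))] = np.argsort(-np.abs(g))
    # Phase applied on path i -> perm[i] so that all terms add coherently.
    theta = -(np.angle(h) + np.angle(g[perm]))
    y = np.sum(g[perm] * np.exp(1j * theta) * h)
    gain = float(np.sum(np.abs(h) * np.abs(g[perm])))
    return perm, gain, y


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    M = 6  # 720 permutations: still small enough to brute-force for comparison
    h = (rng.standard_normal(M) + 1j * rng.standard_normal(M)) / np.sqrt(2)
    g = (rng.standard_normal(M) + 1j * rng.standard_normal(M)) / np.sqrt(2)
    perm, gain, y = best_switch_sorted(h, g)
    print(perm, gain, best_gain_bruteforce(h, g), abs(y))
```

The brute-force routine is kept only as a check; its cost grows as $M!$, while the sorted pairing needs two sorts.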

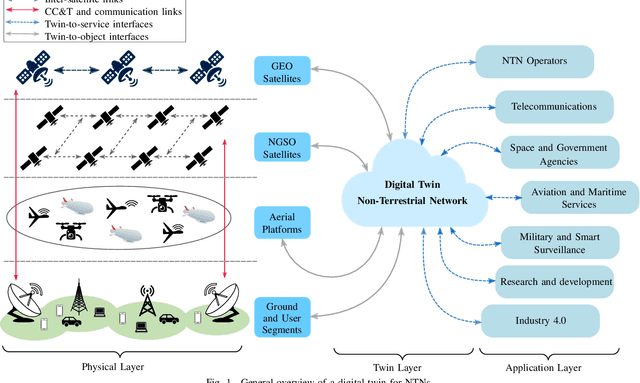

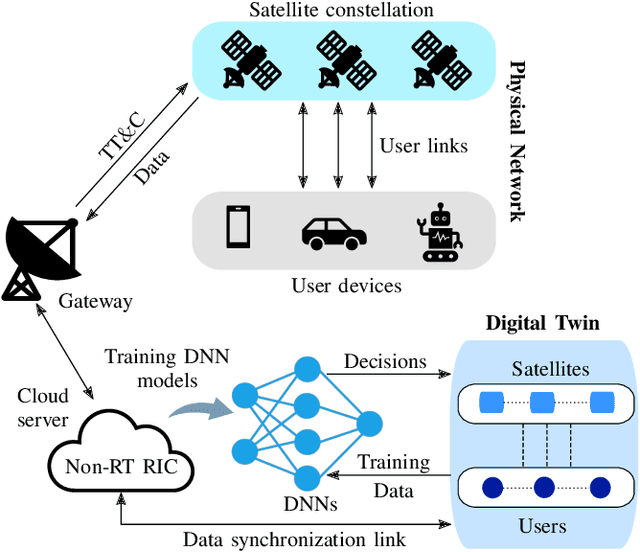

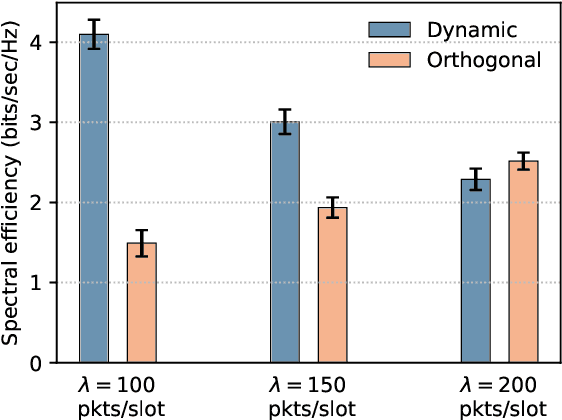

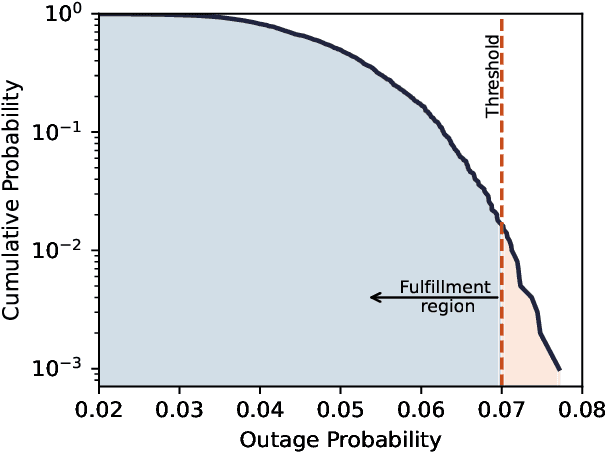

Digital Twin for Non-Terrestrial Networks: Vision, Challenges, and Enabling Technologies

May 17, 2023

Abstract: The ongoing digital transformation has sparked the emergence of various new network applications that demand cutting-edge technologies to enhance their efficiency and functionality. One promising technology in this direction is the digital twin, a new approach to designing and managing complex cyber-physical systems with a high degree of automation, intelligence, and resilience. This article discusses the use of digital twin technology as a new approach for modeling non-terrestrial networks (NTNs). Digital twin technology can create accurate, data-driven NTN models that operate in real time, allowing rapid testing and deployment of new NTN technologies and services while facilitating innovation and cost reduction. Specifically, we provide a vision for integrating the digital twin into NTNs and explore the primary deployment challenges, as well as the key potential enabling technologies within the NTN realm. Finally, we present a case study that employs a data-driven digital twin model for dynamic and service-oriented network slicing within an open radio access network (O-RAN) NTN architecture.

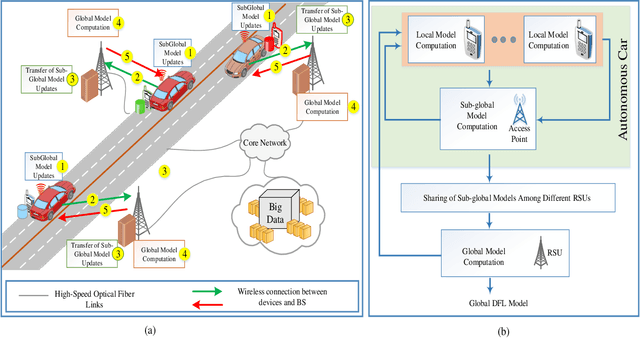

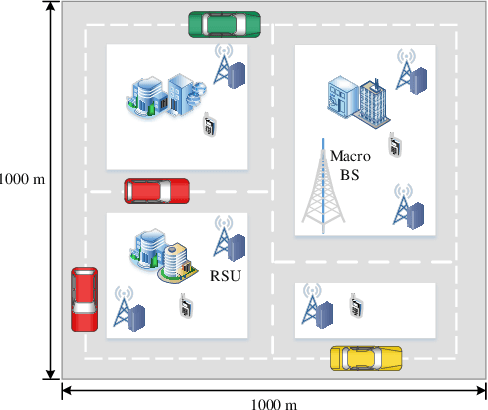

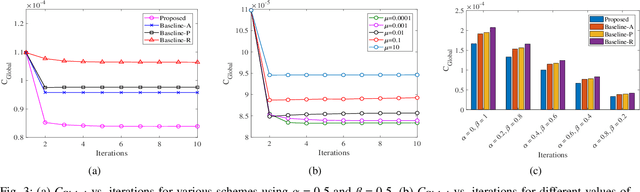

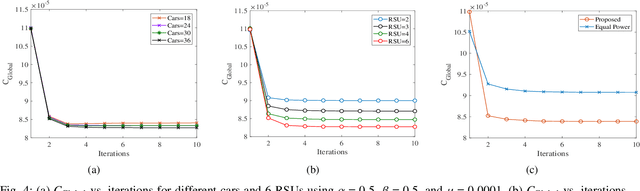

A Dispersed Federated Learning Framework for 6G-Enabled Autonomous Driving Cars

May 20, 2021

Abstract: Sixth-generation (6G)-based Internet of Everything applications (e.g., autonomous driving cars) have attracted remarkable interest. Autonomous driving cars using federated learning (FL) can enable different smart services. Although FL performs distributed machine learning model training without requiring device data to be moved to a centralized server, it has its own implementation challenges, such as robustness, centralized server security, communication resource constraints, and privacy leakage due to the capability of a malicious aggregation server to infer sensitive information about end devices. To address these limitations, a dispersed federated learning (DFL) framework for autonomous driving cars is proposed to offer robust, communication-resource-efficient, and privacy-aware learning. A mixed-integer non-linear programming (MINLP) problem is formulated to jointly minimize the loss in federated learning model accuracy due to packet errors and transmission latency. Because the formulated MINLP problem is NP-hard and non-convex, we propose a solution based on Block Successive Upper-bound Minimization (BSUM). Furthermore, the proposed scheme is compared with three baseline schemes, and extensive numerical results are provided to show the validity of the proposed BSUM-based scheme.
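BSUM minimizes an objective one block of variables at a time: in each block it replaces the objective with an upper bound (surrogate) that is tight at the current point and minimizes that surrogate instead. The abstract does not give the paper's MINLP or its surrogates, so the sketch below only illustrates the generic iteration on a hypothetical unconstrained convex quadratic $f(x) = \tfrac12 x^\top A x - b^\top x$. It uses the standard quadratic upper bound with per-block constant $L_i = \lambda_{\max}(A_{ii})$, for which each block update is $x_i \leftarrow x_i - \nabla_i f(x)/L_i$ and the objective never increases. The function names and test problem are assumptions.

```python
import numpy as np


def objective(A, b, x):
    """Toy objective f(x) = 0.5 x^T A x - b^T x (A symmetric positive definite)."""
    return 0.5 * x @ A @ x - b @ x


def bsum_quadratic(A, b, blocks, iters=500):
    """Cyclic BSUM with quadratic upper bounds on each block of variables.

    For block i, f restricted to x_i has Hessian A_ii <= L_i * I, so
    f(x_k) + grad_i^T d + (L_i / 2) * ||d||^2 upper-bounds f and is tight at x_k.
    Its minimizer is a gradient step of length 1 / L_i on that block.
    """
    x = np.zeros(len(b))
    # Per-block constants: largest eigenvalue of each diagonal block of A.
    L = [np.linalg.eigvalsh(A[np.ix_(blk, blk)]).max() for blk in blocks]
    history = [objective(A, b, x)]
    for _ in range(iters):
        for blk, Li in zip(blocks, L):
            grad = A[blk] @ x - b[blk]   # partial gradient w.r.t. this block
            x[blk] = x[blk] - grad / Li  # exact minimizer of the surrogate
        history.append(objective(A, b, x))
    return x, history


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    B = rng.standard_normal((6, 6))
    A = B @ B.T + 6.0 * np.eye(6)  # hypothetical well-conditioned SPD matrix
    b = rng.standard_normal(6)
    blocks = [np.arange(0, 3), np.arange(3, 6)]
    x, hist = bsum_quadratic(A, b, blocks, iters=2000)
    print(np.linalg.norm(x - np.linalg.solve(A, b)), hist[0], hist[-1])
```

In the paper's setting the blocks would correspond to groups of decision variables of the MINLP and the surrogates would have to handle its integer and non-convex parts, which this toy does not attempt.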

Radio Resource Allocation in 5G New Radio: A Neural Networks Based Approach

Nov 13, 2019

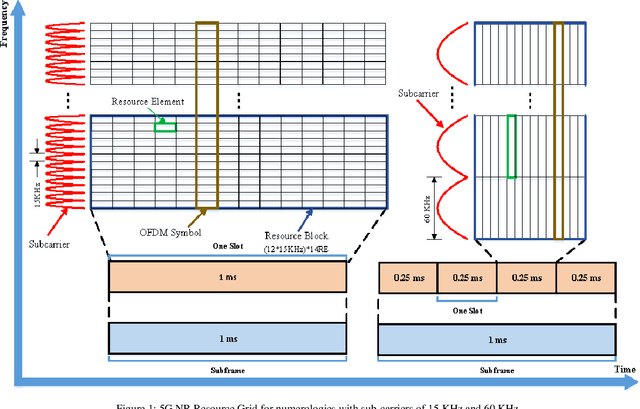

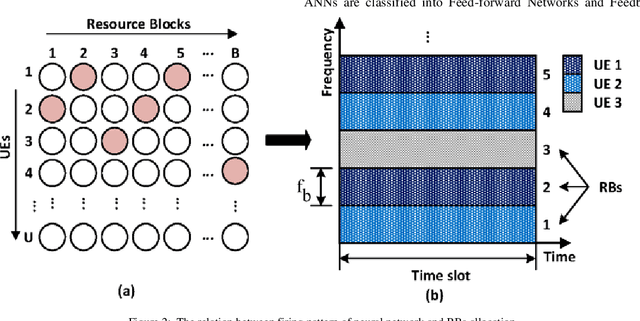

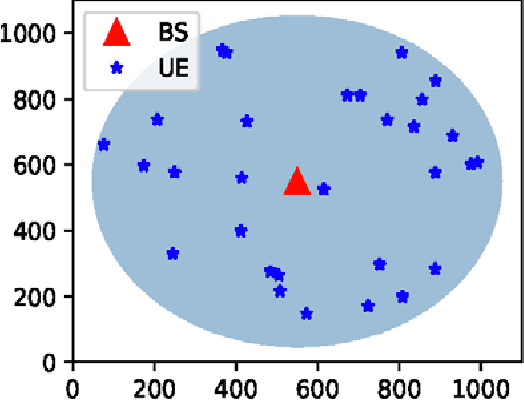

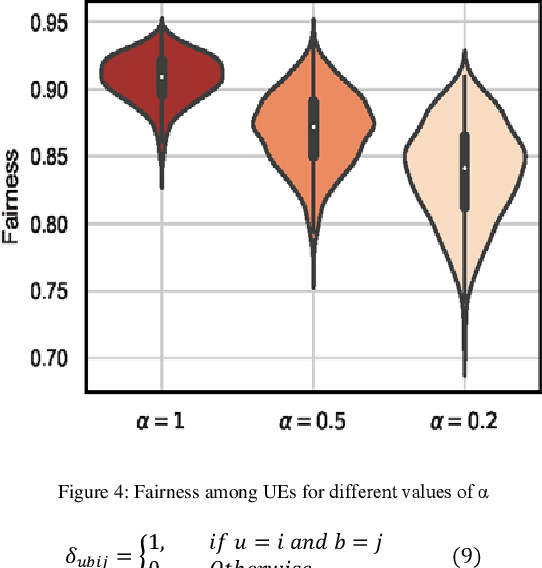

Abstract: The minimum frequency-time unit that can be allocated to user equipments (UEs) in fifth-generation (5G) cellular networks is a resource block (RB). An RB is a channel composed of a set of OFDM subcarriers for a given time slot duration. 5G New Radio (NR) allows a large number of RB shapes, with subcarrier spacings ranging from 15 kHz to 480 kHz. In this paper, we address the problem of allocating RBs to UEs. The RBs are allocated at the beginning of each time slot based on the channel state of each UE. The problem is formulated based on generalized proportional fair (GPF) scheduling and then modeled as a two-dimensional Hopfield neural network (2D-HNN). Finally, to solve the problem, the energy function of the 2D-HNN is investigated. Simulation results show the efficiency of the proposed approach.
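Two ingredients of this abstract can be made concrete. First, NR numerologies scale the subcarrier spacing as $15 \cdot 2^{\mu}$ kHz with a slot duration of $1/2^{\mu}$ ms, and an RB spans 12 subcarriers. Second, a common form of the GPF metric gives RB $b$ to the UE maximizing $r_{u,b}^{\alpha}/\bar{R}_u^{\beta}$, where $r_{u,b}$ is the UE's achievable rate on that RB and $\bar{R}_u$ its average throughput. The sketch below uses a plain greedy per-RB GPF rule, not the paper's 2D-HNN, and the exponents `alpha` and `beta`, the moving-average window `tc`, and all function names are assumptions.

```python
import numpy as np


def nr_numerology(mu):
    """Subcarrier spacing (kHz), slot duration (ms) and RB bandwidth (kHz) for numerology mu."""
    scs_khz = 15 * 2**mu      # 15, 30, 60, 120, 240, 480 kHz for mu = 0..5
    slot_ms = 1.0 / 2**mu     # 14-symbol slot with normal cyclic prefix
    rb_khz = 12 * scs_khz     # an NR RB spans 12 subcarriers
    return scs_khz, slot_ms, rb_khz


def gpf_allocate(rates, avg_tput, alpha=1.0, beta=1.0):
    """Greedy GPF: give each RB to the UE with the largest r^alpha / R^beta.

    rates: (num_ue, num_rb) achievable rate of each UE on each RB in this slot.
    avg_tput: (num_ue,) long-term average throughput of each UE (must be > 0).
    Returns an array mapping each RB index to the UE index that receives it.
    """
    metric = rates**alpha / avg_tput[:, None] ** beta
    return np.argmax(metric, axis=0)


def update_avg(avg_tput, rates, alloc, tc=100.0):
    """Exponential moving average of served throughput over a window of tc slots."""
    served = np.zeros_like(avg_tput)
    # Accumulate the rate each UE obtains on every RB assigned to it.
    np.add.at(served, alloc, rates[alloc, np.arange(rates.shape[1])])
    return (1 - 1 / tc) * avg_tput + served / tc


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    avg = np.ones(3)                        # 3 hypothetical UEs
    for _ in range(5):                      # a few scheduling slots
        rates = rng.exponential(1.0, (3, 8))  # 8 hypothetical RBs
        alloc = gpf_allocate(rates, avg)
        avg = update_avg(avg, rates, alloc)
    print(nr_numerology(1), alloc, avg)
```

With `alpha = beta = 1` this reduces to classic proportional fair scheduling; raising `beta` weights fairness more heavily, while raising `alpha` favors UEs with strong instantaneous channels.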