Lars Kuhnert

UGainS: Uncertainty Guided Anomaly Instance Segmentation

Aug 03, 2023

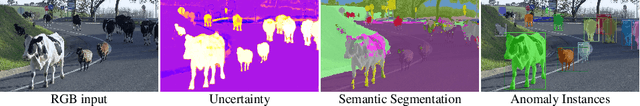

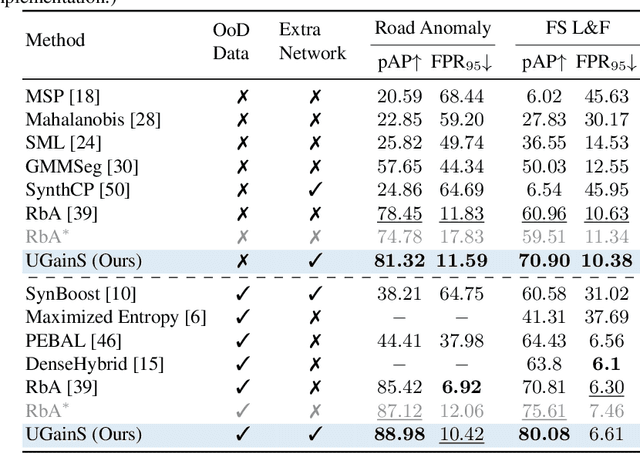

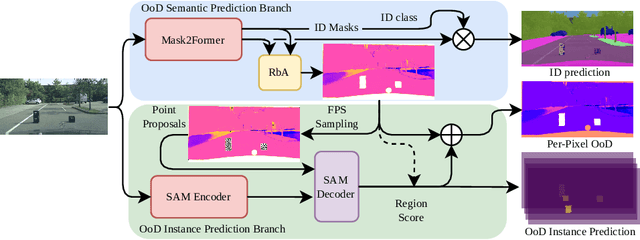

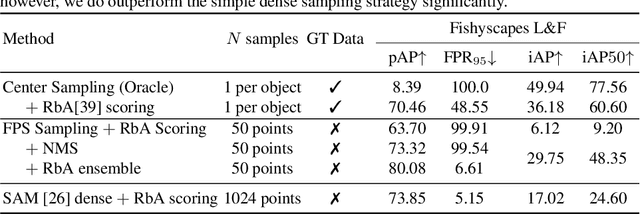

Abstract: A single unexpected object on the road can cause an accident or lead to injuries. To prevent this, we need a reliable mechanism for finding anomalous objects on the road. This task, called anomaly segmentation, can be a stepping stone to safe and reliable autonomous driving. Current approaches tackle anomaly segmentation by assigning an anomaly score to each pixel and by grouping anomalous regions using simple heuristics. However, pixel grouping is a limiting factor when it comes to evaluating the segmentation performance of individual anomalous objects. To address the issue of grouping multiple anomaly instances into one, we propose an approach that produces accurate anomaly instance masks. Our approach centers on an out-of-distribution segmentation model for identifying uncertain regions and a strong generalist segmentation model for anomaly instance segmentation. We investigate ways to use uncertain regions to guide such a segmentation model to perform segmentation of anomalous instances. By incorporating strong object priors from a generalist model, we additionally improve the per-pixel anomaly segmentation performance. Our approach outperforms current pixel-level anomaly segmentation methods, achieving an AP of 80.08% and 88.98% on the Fishyscapes Lost and Found and the RoadAnomaly validation sets, respectively. Project page: https://vision.rwth-aachen.de/ugains
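The grouping limitation mentioned in the abstract can be illustrated with a minimal sketch. It assumes the "simple heuristic" is thresholding followed by 4-connected component labeling, which is one common choice and not necessarily what any particular baseline uses. The function name `group_anomalous_pixels`, the threshold, and the toy score map are all hypothetical.

```python
from collections import deque


def group_anomalous_pixels(scores, threshold=0.5):
    """Label 4-connected regions of pixels whose anomaly score exceeds threshold.

    Returns (labels, count), where labels has the same shape as scores and
    0 marks non-anomalous pixels. This is an assumed grouping heuristic,
    not the method proposed in the paper.
    """
    h, w = len(scores), len(scores[0])
    labels = [[0] * w for _ in range(h)]
    count = 0
    for y in range(h):
        for x in range(w):
            if scores[y][x] <= threshold or labels[y][x]:
                continue
            # Start a new region and flood-fill it breadth-first.
            count += 1
            labels[y][x] = count
            queue = deque([(y, x)])
            while queue:
                cy, cx = queue.popleft()
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if (0 <= ny < h and 0 <= nx < w
                            and scores[ny][nx] > threshold and not labels[ny][nx]):
                        labels[ny][nx] = count
                        queue.append((ny, nx))
    return labels, count


# Toy score map: two adjacent objects (columns 1-2 and 3-4) and one isolated
# object in column 6. The two adjacent objects touch, so the heuristic
# cannot separate them.
scores = [
    [0.1, 0.9, 0.9, 0.8, 0.8, 0.1, 0.1],
    [0.1, 0.9, 0.9, 0.8, 0.8, 0.1, 0.7],
    [0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.7],
]
labels, count = group_anomalous_pixels(scores)
print(count)  # 2 regions, although the toy scene was built from 3 objects
```

In this toy scene the two touching objects receive the same label, which is the failure mode that per-instance masks are meant to avoid.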

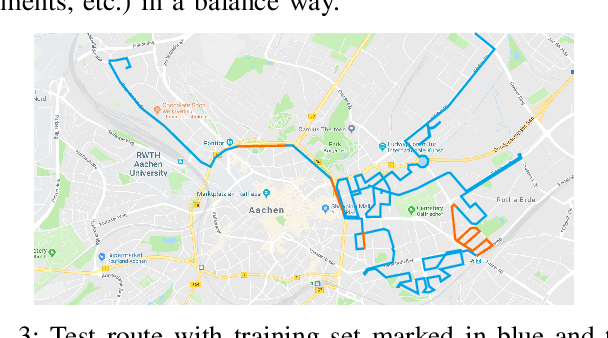

Multi-Camera Visual-Inertial Simultaneous Localization and Mapping for Autonomous Valet Parking

Apr 27, 2023

Abstract: Localization and mapping are key capabilities for self-driving vehicles. This paper describes a visual-inertial SLAM system that estimates an accurate and globally consistent trajectory of the vehicle and reconstructs a dense model of the free space surrounding the car. Towards this goal, we build on Kimera and extend it to use multiple cameras as well as external (e.g., wheel) odometry sensors, to obtain accurate and robust odometry estimates in real-world problems. Additionally, we propose an effective scheme for closing loops that circumvents the drawbacks of common alternatives based on the Perspective-n-Point method and also works with a single monocular camera. Finally, we develop a method for dense 3D mapping of the free space that combines a segmentation network for free-space detection with a homography-based dense mapping technique. We test our system on photo-realistic simulations and on several real datasets collected by a car prototype developed by the Ford Motor Company, spanning both indoor and outdoor parking scenarios. Our multi-camera system is shown to outperform state-of-the-art open-source visual-inertial SLAM pipelines (Vins-Fusion, ORB-SLAM3), and exhibits an average trajectory error under 1% of the trajectory length across more than 8 km of distance traveled (combined across all datasets). A video showcasing the system is available here: youtu.be/H8CpzDpXOI8

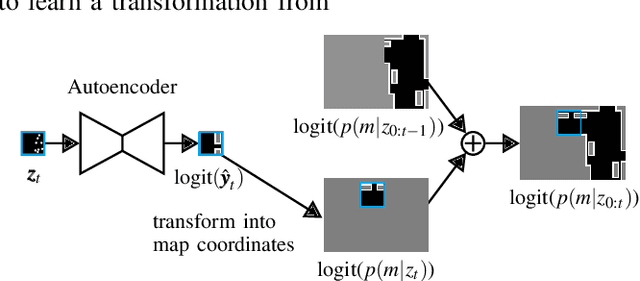

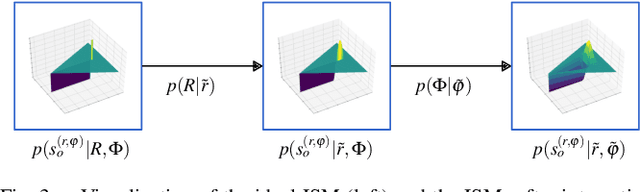

Deep Inverse Sensor Models as Priors for evidential Occupancy Mapping

Dec 02, 2020

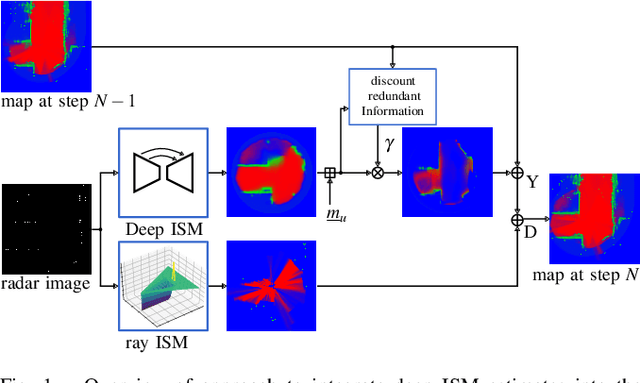

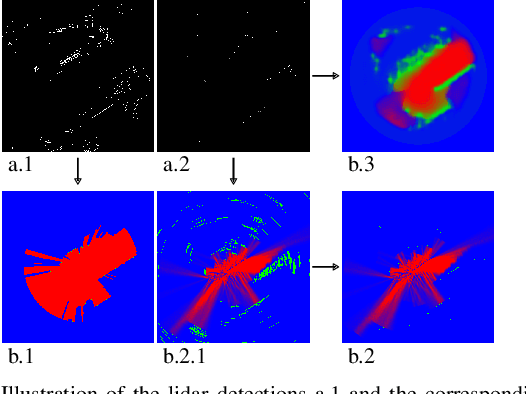

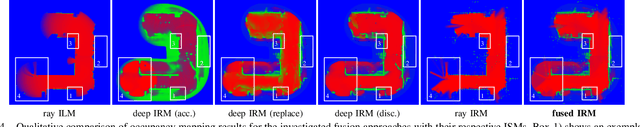

Abstract: With the recent boost in autonomous driving, increased attention has been paid to radars as an input for occupancy mapping. Despite their many benefits, the inference of occupied space based on radar detections is notoriously difficult because of the data sparsity and the environment-dependent noise (e.g., multipath reflections). Recently, deep learning-based inverse sensor models, from here on called deep ISMs, have been shown to improve over their geometric counterparts in retrieving occupancy information. Nevertheless, these methods perform a data-driven interpolation which has to be verified later on in the presence of measurements. In this work, we describe a novel approach to integrate deep ISMs together with geometric ISMs into the evidential occupancy mapping framework. Our method leverages the capabilities of the data-driven approach to initialize cells not yet observable for the geometric model, effectively enhancing the perception field and convergence speed, while at the same time using the precision of the geometric ISM to converge to sharp boundaries. We further define a lower limit on the deep ISM estimate's certainty, together with analytical proofs of convergence, which we use to distinguish cells that are solely allocated by the deep ISM from cells already verified using the geometric approach.
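For readers unfamiliar with evidential occupancy mapping, the following is a minimal sketch of how evidence for a single grid cell is commonly fused with Dempster's rule of combination. It assumes each cell holds belief masses for "free", "occupied", and "unknown" that sum to 1. It is a generic illustration and does not reproduce the paper's integration of deep and geometric ISMs or its certainty limit; the class `Mass` and the function `combine` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Mass:
    """Belief masses of one grid cell; the three values are assumed to sum to 1."""
    free: float
    occupied: float
    unknown: float


def combine(a: Mass, b: Mass) -> Mass:
    """Fuse two independent evidence sources with Dempster's rule."""
    # Conflict: one source supports "free" while the other supports "occupied".
    conflict = a.free * b.occupied + a.occupied * b.free
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict and cannot be combined")
    norm = 1.0 - conflict
    free = (a.free * b.free + a.free * b.unknown + a.unknown * b.free) / norm
    occupied = (a.occupied * b.occupied + a.occupied * b.unknown
                + a.unknown * b.occupied) / norm
    unknown = (a.unknown * b.unknown) / norm
    return Mass(free, occupied, unknown)


# A cell starts fully unknown and receives the same weak "occupied"
# measurement twice (values chosen for illustration only).
cell = Mass(free=0.0, occupied=0.0, unknown=1.0)
measurement = Mass(free=0.0, occupied=0.6, unknown=0.4)
cell = combine(cell, measurement)
cell = combine(cell, measurement)
print(cell)  # occupied mass grows towards 1 while unknown mass shrinks
```

Repeated consistent measurements shrink the unknown mass, which is the accumulation behavior the abstract refers to when it speaks of convergence.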

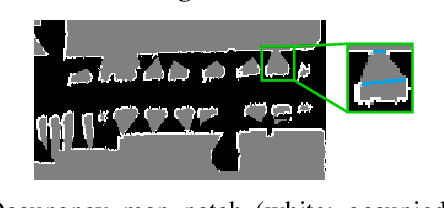

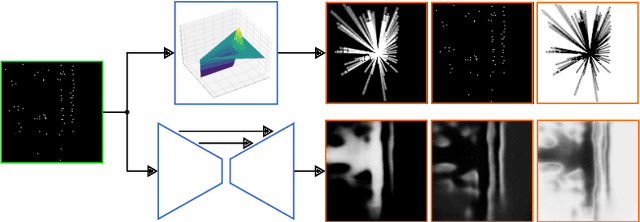

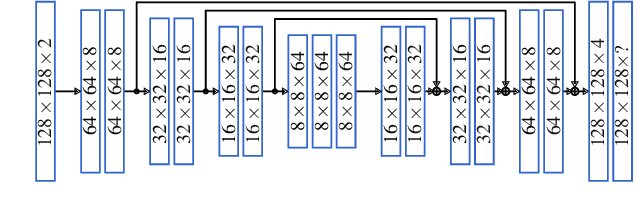

Deep, spatially coherent Occupancy Maps based on Radar Measurements

Mar 29, 2019

Abstract: One essential step in realizing modern driver assistance technology is accurate knowledge of the location of static objects in the environment. In this work, we use artificial neural networks to predict the occupancy state of a whole scene in an end-to-end manner. This stands in contrast to the traditional approach of accumulating each detection's influence on the occupancy state and allows the network to learn spatial priors which can be used to interpolate the environment's occupancy state. We show that these priors make our method suitable for predicting dense occupancy estimates from sparse, highly uncertain inputs, as given by automotive radars, even for complex urban scenarios. Furthermore, we demonstrate that these estimates can be used for large-scale mapping applications.

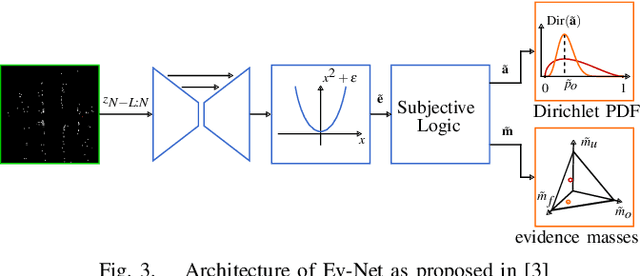

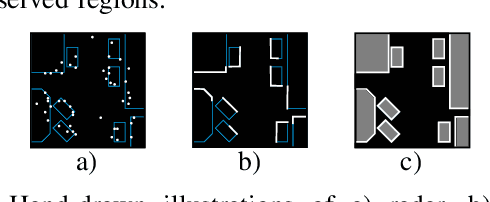

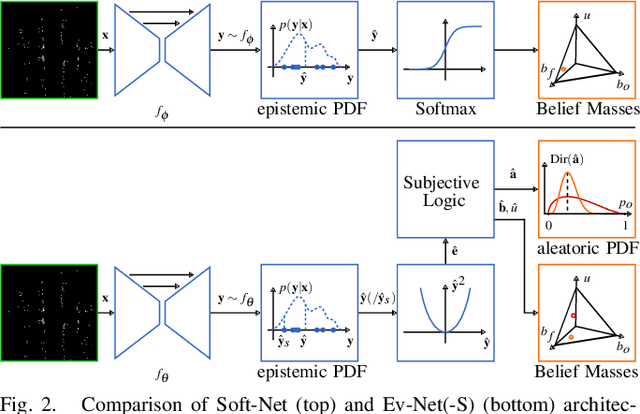

Deep, spatially coherent Inverse Sensor Models with Uncertainty Incorporation using the evidential Framework

Mar 29, 2019

Abstract: To perform high-speed tasks, sensors of autonomous cars have to provide as much information in as few time steps as possible. However, radars, one of the sensor modalities autonomous cars heavily rely on, often only provide sparse, noisy detections. These have to be accumulated over time to reach a high enough confidence about the static parts of the environment. For radars, the state is typically estimated by accumulating inverse detection models (IDMs). We employ the recently proposed evidential convolutional neural networks which, in contrast to IDMs, compute dense, spatially coherent inference of the environment state. Moreover, these networks are able to incorporate sensor noise in a principled way, which we further extend to also incorporate model uncertainty. We present experimental results showing that this makes it possible to obtain a denser environment perception in fewer time steps.