Kyle Dent

Detecting Abusive Language on Online Platforms: A Critical Analysis

Feb 27, 2021

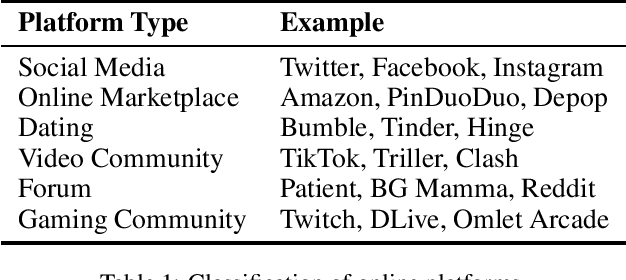

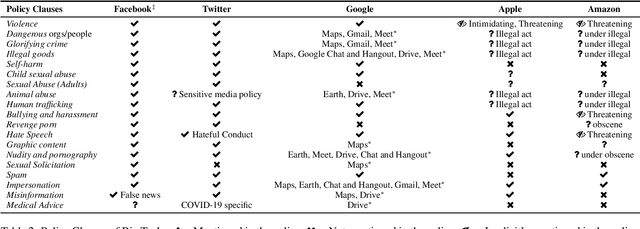

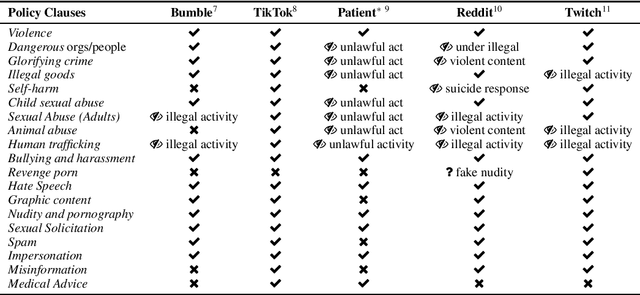

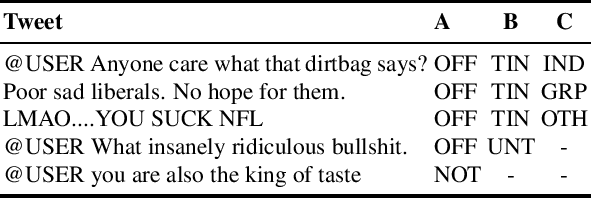

Abstract: Abusive language on online platforms is a major societal problem, often leading to important societal problems such as the marginalisation of underrepresented minorities. There are many different forms of abusive language such as hate speech, profanity, and cyber-bullying, and online platforms seek to moderate it in order to limit societal harm, to comply with legislation, and to create a more inclusive environment for their users. Within the field of Natural Language Processing, researchers have developed different methods for automatically detecting abusive language, often focusing on specific subproblems or on narrow communities, as what is considered abusive language very much differs by context. We argue that there is currently a dichotomy between what types of abusive language online platforms seek to curb, and what research efforts there are to automatically detect abusive language. We thus survey existing methods as well as content moderation policies by online platforms in this light, and we suggest directions for future work.

Through the Twitter Glass: Detecting Questions in Micro-Text

Jun 13, 2020

Abstract: In a separate study, we were interested in understanding people's Q&A habits on Twitter. Finding questions within Twitter turned out to be a difficult challenge, so we considered applying some traditional NLP approaches to the problem. On the one hand, Twitter is full of idiosyncrasies, which make processing it difficult. On the other, it is very restricted in length and tends to employ simple syntactic constructions, which could help the performance of NLP processing. In order to find out the viability of NLP and Twitter, we built a pipeline of tools to work specifically with Twitter input for the task of finding questions in tweets. This work is still preliminary, but in this paper we discuss the techniques we used and the lessons we learned.

Ethical Considerations for AI Researchers

Jun 13, 2020

Abstract: Use of artificial intelligence is growing and expanding into applications that impact people's lives. People trust their technology without really understanding it or its limitations. There is the potential for harm and we are already seeing examples of that in the world. AI researchers have an obligation to consider the impact of intelligent applications they work on. While the ethics of AI is not clear-cut, there are guidelines we can consider to minimize the harm we might introduce.

A Multifunction Printer CUI for the Blind

Jun 13, 2020

Abstract: Advances in interface design using touch surfaces create greater obstacles for blind and visually impaired users of technology. Conversational user interfaces offer a reasonable alternative for interactions and enable greater access and, most importantly, greater independence for the blind. This paper presents a case study of our work to develop a conversational user interface for accessibility for multifunction printers (MFPs). It describes our approach to conversational interfaces in general and the specifics of the solution we created for MFPs. It also presents a user study we performed to assess the solution and guide our future efforts.
