Kostas Berberidis

An Optimization-based Deep Equilibrium Model for Hyperspectral Image Deconvolution with Convergence Guarantees

Jun 10, 2023

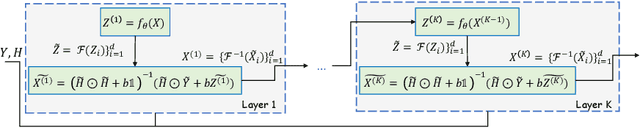

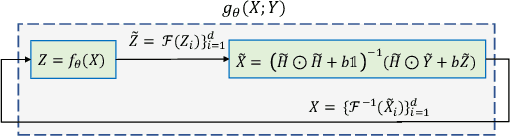

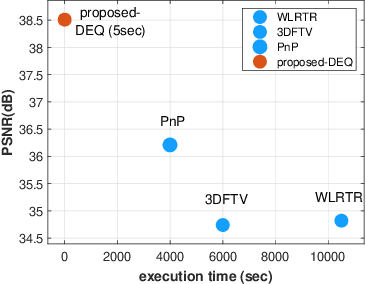

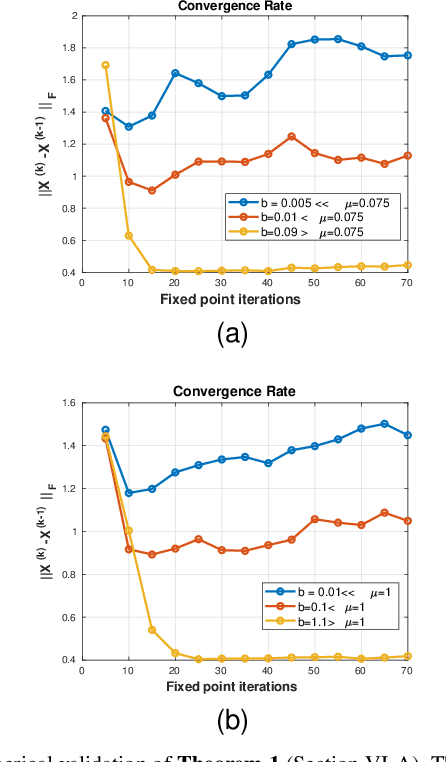

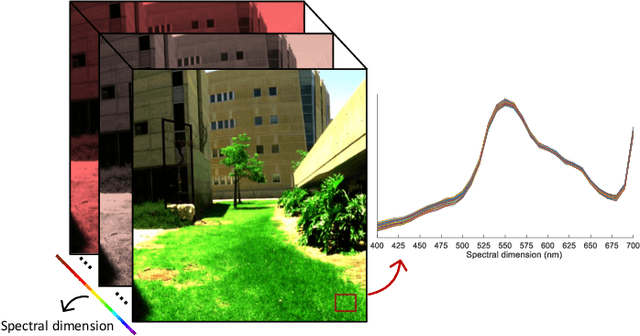

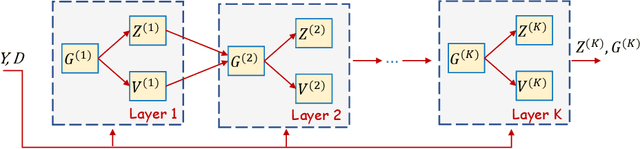

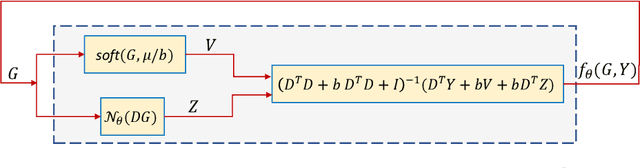

Abstract: In this paper, we propose a novel methodology for addressing the hyperspectral image (HSI) deconvolution problem. This problem is highly ill-posed and thus requires proper priors (regularizers) to model the inherent spectral-spatial correlations of HSI signals. To this end, a new optimization problem is formulated, leveraging a learnable regularizer in the form of a neural network. To tackle this problem, an effective solver is proposed using the half quadratic splitting methodology. The derived iterative solver is then expressed as a fixed-point calculation problem within the Deep Equilibrium (DEQ) framework, resulting in an interpretable architecture whose parameters are clearly explainable and whose convergence properties bring practical benefits. The proposed model is a first attempt to handle the classical HSI degradation problem with different blurring kernels and noise levels via a single deep equilibrium model with significant computational efficiency. Extensive numerical experiments validate the superiority of the proposed methodology over other state-of-the-art methods. This superior restoration performance is achieved while requiring 99.85% less computation time than existing methods.
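The idea of treating half quadratic splitting iterations as a fixed-point problem can be illustrated with a toy sketch. This is not the paper's model: it assumes a diagonal degradation operator `h` (as a blur would be in the Fourier domain) and uses soft-thresholding as a stand-in for the learned regularizer network. The names `hqs_step`, `solve_fixed_point`, `mu`, and `t` are hypothetical.

```python
def soft_threshold(v, t):
    """Stand-in for the learned regularizer (an assumption, not the paper's network)."""
    if v > t:
        return v - t
    if v < -t:
        return v + t
    return 0.0


def hqs_step(z, y, h, mu, t):
    """One half quadratic splitting iteration, written as a map z -> f(z)."""
    # Data-fidelity step: minimise (h*x - y)^2 + mu*(x - z)^2 element-wise.
    x = [(hi * yi + mu * zi) / (hi * hi + mu) for hi, yi, zi in zip(h, y, z)]
    # Prior step: apply the (stand-in) regularizer to the intermediate estimate.
    return [soft_threshold(xi, t) for xi in x]


def solve_fixed_point(y, h, mu=0.5, t=0.05, tol=1e-10, max_iter=500):
    """Iterate z <- f(z) until successive iterates stop changing."""
    z = [0.0] * len(y)
    for k in range(max_iter):
        z_next = hqs_step(z, y, h, mu, t)
        residual = max(abs(a - b) for a, b in zip(z_next, z))
        z = z_next
        if residual < tol:
            return z, k + 1
    return z, max_iter


# Toy observation and diagonal degradation (illustrative values only).
z_star, iterations = solve_fixed_point([1.0, 0.4, -0.3], [1.0, 0.8, 0.5])
print(z_star, iterations)
```

In this toy setting the map is a contraction whenever every entry of `h` is non-zero, because the data-fidelity step shrinks differences by `mu / (h*h + mu)` and soft-thresholding is non-expansive, so plain iteration converges.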

Deep Equilibrium Models Meet Federated Learning

May 29, 2023

Abstract: In this study, the problem of Federated Learning (FL) is explored from a new perspective by utilizing Deep Equilibrium (DEQ) models instead of conventional deep learning networks. We claim that incorporating DEQ models into the federated learning framework naturally addresses several open problems in FL, such as the communication overhead due to sharing large models and the ability to incorporate heterogeneous edge devices with significantly different computation capabilities. Additionally, a weighted average fusion rule is proposed at the server side of the FL framework to account for the different qualities of models from heterogeneous edge devices. To the best of our knowledge, this study is the first to establish a connection between DEQ models and federated learning, contributing to the development of an efficient and effective FL framework. Finally, promising initial experimental results are presented, demonstrating the potential of this approach in addressing the challenges of FL.
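A server-side weighted average fusion rule of the kind mentioned in the abstract might look like the following minimal sketch. The abstract does not say how the weights are chosen, so the `quality_scores` argument is a hypothetical per-client quality measure, and `weighted_fusion` is an illustrative name.

```python
def weighted_fusion(client_params, quality_scores):
    """Fuse client parameter vectors with weights proportional to quality.

    client_params: list of equal-length lists of floats, one per client.
    quality_scores: hypothetical non-negative score per client (an assumption).
    """
    total = sum(quality_scores)
    if total <= 0:
        raise ValueError("at least one quality score must be positive")
    # Normalise the scores so the fusion weights sum to one.
    weights = [q / total for q in quality_scores]
    n_params = len(client_params[0])
    return [
        sum(w * params[i] for w, params in zip(weights, client_params))
        for i in range(n_params)
    ]


# Two clients; the second is trusted three times as much as the first.
print(weighted_fusion([[1.0, 2.0], [3.0, 4.0]], [1.0, 3.0]))  # [2.5, 3.5]
```

With equal scores this reduces to the plain averaging used in standard federated averaging.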

Deep Equilibrium Assisted Block Sparse Coding of Inter-dependent Signals: Application to Hyperspectral Imaging

Mar 29, 2022

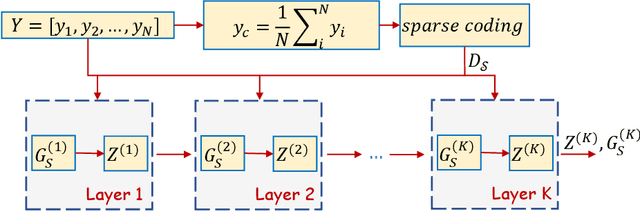

Abstract: In this study, the problem of computing a sparse representation for datasets of inter-dependent signals, given a fixed dictionary, is considered. A dataset of inter-dependent signals is defined as a matrix whose columns demonstrate strong dependencies. A computationally efficient sparse coding optimization problem is derived by employing regularization terms that are adapted to the properties of the signals of interest. Exploiting the merits of learnable regularization techniques, a neural network is employed to act as a structure prior and reveal the underlying signal interdependencies. To solve the optimization problem, deep unrolling and deep equilibrium based algorithms are developed, forming highly interpretable and concise deep-learning-based architectures that process the input dataset in a block-by-block fashion. Extensive simulation results, in the context of hyperspectral image denoising, demonstrate that the proposed algorithms significantly outperform other sparse coding approaches and exhibit superior performance against recent state-of-the-art deep-learning-based denoising models. In a wider perspective, our work provides a unique bridge between a classic approach, namely sparse representation theory, and modern representation tools that are based on deep learning modeling.

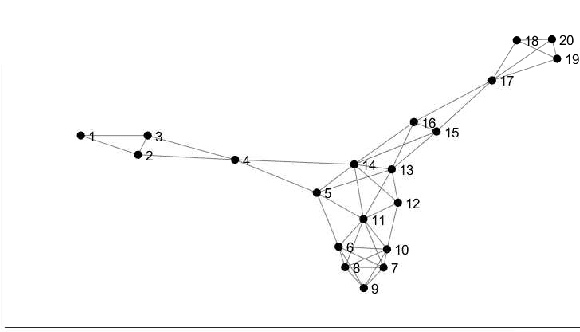

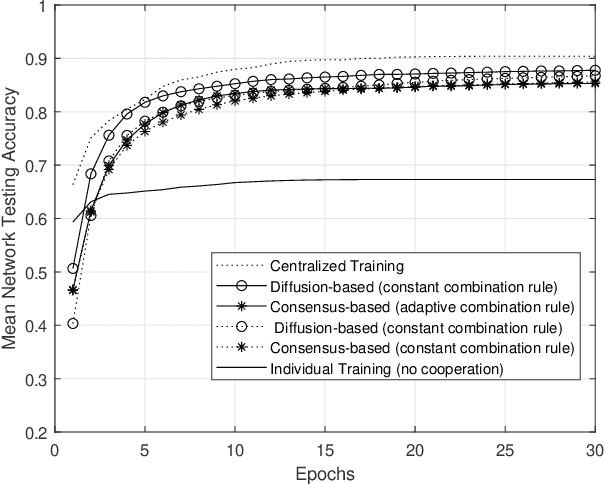

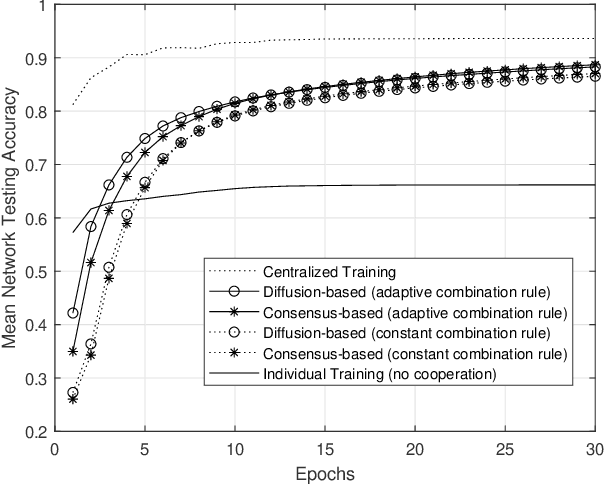

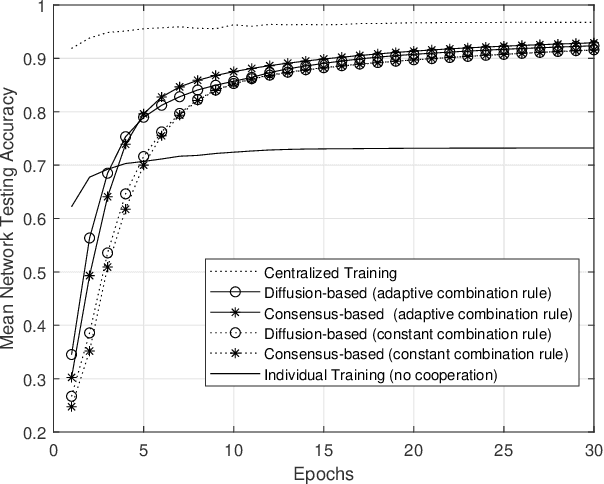

Efficient Fully Distributed Federated Learning with Adaptive Local Links

Mar 23, 2022

Abstract: Nowadays, data-driven machine and deep learning approaches have provided unprecedented performance in various complex tasks, including image classification and object detection, and in a variety of application areas, such as autonomous vehicles, medical imaging and wireless communications. Traditionally, such approaches have been deployed, along with the involved datasets, on standalone devices. Recently, a shift has been observed towards so-called Edge Machine Learning, in which centralized architectures are adopted that allow multiple devices with local computational and storage resources to collaborate with the assistance of a centralized server. The well-known federated learning approach is able to utilize such architectures by allowing the exchange of only parameters with the server, while keeping the datasets private to each contributing device. In this work, we propose a fully distributed, diffusion-based learning algorithm that does not require a central server, together with an adaptive combination rule for the cooperation of the devices. By adopting a classification task on the MNIST dataset, the efficacy of the proposed algorithm over corresponding counterparts is demonstrated via the reduction of the number of collaboration rounds required to achieve an acceptable accuracy level in non-IID dataset scenarios.

Graph Laplacian Diffusion Localization of Connected and Automated Vehicles

Aug 24, 2021

Abstract: In this paper, we design distributed multi-modal localization approaches for Connected and Automated Vehicles. We utilize information diffusion on graphs formed by moving vehicles, based on Adapt-then-Combine strategies combined with the Least-Mean-Squares and the Conjugate Gradient algorithms. We treat the vehicular network as an undirected graph, where vehicles communicate with each other by means of Vehicle-to-Vehicle communication protocols. Connected vehicles perform cooperative fusion of different measurement modalities, including location and range measurements, in order to estimate both their positions and the positions of all other networked vehicles, by interacting only with their local neighborhood. The trajectories of vehicles were generated either by a well-known kinematic model or by using the CARLA autonomous driving simulator. The various proposed distributed and diffusion localization schemes significantly reduce the GPS error; they not only converge to the global solution but even outperform it. Extensive simulation studies highlight the benefits of the various approaches, which outperform state-of-the-art approaches in accuracy. The impact of the network connections and the network latency is also investigated.
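The Adapt-then-Combine strategy with Least-Mean-Squares updates can be sketched on a generic estimation problem. This is not the paper's localization scheme: it assumes every node observes noisy linear measurements of a common parameter vector and combines with uniform weights over its neighborhood. The function name, the step size `mu`, and the three-node line graph are illustrative choices.

```python
import random


def atc_diffusion_lms(neighbors, w_true, mu=0.05, steps=2000,
                      noise_std=0.01, seed=0):
    """Adapt-then-Combine diffusion LMS with uniform combination weights."""
    rng = random.Random(seed)
    n_nodes, dim = len(neighbors), len(w_true)
    w = [[0.0] * dim for _ in range(n_nodes)]
    for _ in range(steps):
        # Adapt: each node takes one LMS step using only its own measurement.
        psi = []
        for k in range(n_nodes):
            u = [rng.gauss(0.0, 1.0) for _ in range(dim)]
            d = sum(a * b for a, b in zip(u, w_true)) + rng.gauss(0.0, noise_std)
            err = d - sum(a * b for a, b in zip(u, w[k]))
            psi.append([wk + mu * err * uk for wk, uk in zip(w[k], u)])
        # Combine: each node averages the intermediate estimates of itself
        # and its direct neighbors (no central server is involved).
        w = []
        for k in range(n_nodes):
            group = neighbors[k] + [k]
            w.append([sum(psi[l][i] for l in group) / len(group)
                      for i in range(dim)])
    return w


# Line graph 0 - 1 - 2; all nodes estimate the same hypothetical vector.
estimates = atc_diffusion_lms([[1], [0, 2], [1]], [1.0, -2.0])
print(estimates)
```

Each node only exchanges its intermediate estimate with direct neighbors, which is the sense in which the scheme interacts "only with the local neighborhood".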

Cooperative Multi-Modal Localization in Connected and Autonomous Vehicles

Jul 16, 2021

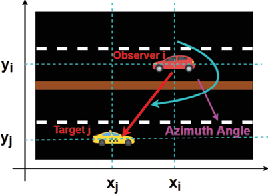

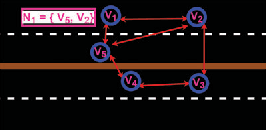

Abstract: Cooperative Localization is expected to play a crucial role in various applications in the field of Connected and Autonomous Vehicles (CAVs). Future 5G wireless systems are expected to enable cost-effective Vehicle-to-Everything (V2X) systems, allowing CAVs to share the data they collect and measure with the other entities of the network. Typical measurement models deployed for this problem are absolute position from the Global Positioning System (GPS), and relative distance and azimuth angle to neighbouring vehicles, extracted from Light Detection and Ranging (LIDAR) or Radio Detection and Ranging (RADAR) sensors. In this paper, we provide a cooperative localization approach that performs multi-modal fusion between the interconnected vehicles by representing a fleet of connected cars as an undirected graph, encoding each vehicle position relative to its neighbouring vehicles. This method is based on: i) Laplacian Processing, a Graph Signal Processing tool that captures the intrinsic geometry of the undirected graph of vehicles rather than their absolute position in a global coordinate system, and ii) the temporal coherence due to motion patterns of the moving vehicles.
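One common way to encode each position relative to its neighbours on a graph is through degree-normalised Laplacian (differential) coordinates: each node's position minus the mean position of its neighbours. The sketch below assumes this is the kind of encoding meant; the abstract does not give the exact formulation, and `laplacian_coordinates` is a hypothetical helper.

```python
def laplacian_coordinates(positions, neighbors):
    """Return each node's position minus the mean of its neighbours' positions.

    positions: list of (x, y) tuples, one per vehicle.
    neighbors: list of neighbour-index lists describing an undirected graph.
    """
    deltas = []
    for i, (xi, yi) in enumerate(positions):
        nbrs = neighbors[i]
        mean_x = sum(positions[j][0] for j in nbrs) / len(nbrs)
        mean_y = sum(positions[j][1] for j in nbrs) / len(nbrs)
        deltas.append((xi - mean_x, yi - mean_y))
    return deltas


# Three fully connected vehicles (illustrative coordinates).
graph = [[1, 2], [0, 2], [0, 1]]
fleet = [(0.0, 0.0), (2.0, 0.0), (1.0, 2.0)]
print(laplacian_coordinates(fleet, graph)[0])  # (-1.5, -1.0)

# Shifting the whole fleet leaves the encoding unchanged.
shifted = [(x + 100.0, y - 50.0) for x, y in fleet]
print(laplacian_coordinates(shifted, graph)[0])
```

Because a common translation cancels in the subtraction, the encoding depends only on the relative geometry of the fleet, not on where it sits in the global frame.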
