Cooperative Multi-Modal Localization in Connected and Autonomous Vehicles

Jul 16, 2021

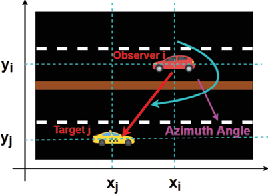

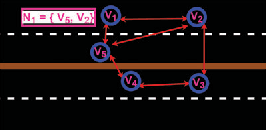

Cooperative localization is expected to play a crucial role in various applications of Connected and Autonomous Vehicles (CAVs). Future 5G wireless systems are expected to enable cost-effective Vehicle-to-Everything (V2X) systems, allowing CAVs to share the data they collect and measure with the other entities of the network. The measurement models typically deployed for this problem are absolute position from the Global Positioning System (GPS), and relative distance and azimuth angle to neighbouring vehicles, extracted from Light Detection and Ranging (LIDAR) or Radio Detection and Ranging (RADAR) sensors. In this paper, we provide a cooperative localization approach that performs multi-modal fusion between the interconnected vehicles by representing a fleet of connected cars as an undirected graph that encodes each vehicle's position relative to its neighbouring vehicles. The method is based on: i) Laplacian Processing, a Graph Signal Processing tool that captures the intrinsic geometry of the undirected graph of vehicles rather than their absolute positions in a global coordinate system, and ii) the temporal coherence arising from the motion patterns of the moving vehicles.
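The Laplacian processing idea can be illustrated with a minimal sketch. It assumes the combinatorial graph Laplacian L = D - A, relative measurements already converted from distance and azimuth into Cartesian offsets expressed in a common frame, and GPS fixes treated as absolute anchors in a weighted least-squares problem. The function names and the `anchor_weight` parameter are hypothetical, temporal coherence across time steps is not modelled, and this is not the authors' implementation.

```python
import numpy as np


def graph_laplacian(n, edges):
    """Combinatorial Laplacian L = D - A of an undirected graph with n vehicles."""
    A = np.zeros((n, n))
    for i, j in edges:
        A[i, j] = A[j, i] = 1.0  # symmetric adjacency: undirected sensing link
    return np.diag(A.sum(axis=1)) - A


def differential_coordinates(L, positions):
    """delta_i = deg(i) * p_i - sum of neighbour positions.

    Each row encodes a vehicle's position relative to its neighbours, so it is
    unchanged by a common translation of the whole fleet (rows of L sum to 0).
    """
    return L @ positions


def laplacian_localize(L, delta, anchor_ids, anchor_pos, anchor_weight=1.0):
    """Hypothetical fusion step: solve
        min_X ||L X - delta||^2 + w^2 ||X[anchor_ids] - anchor_pos||^2
    where anchor_pos holds absolute (e.g. GPS) positions of the anchor vehicles.
    """
    n = L.shape[0]
    ids = np.asarray(anchor_ids, dtype=int)
    # Selection matrix picking out the anchored vehicles, scaled by the weight.
    S = np.zeros((len(ids), n))
    S[np.arange(len(ids)), ids] = anchor_weight
    M = np.vstack([L, S])
    b = np.vstack([delta, anchor_weight * np.asarray(anchor_pos, dtype=float)])
    X, *_ = np.linalg.lstsq(M, b, rcond=None)
    return X


if __name__ == "__main__":
    # Hypothetical 2-D positions (metres) of four vehicles and their sensing links.
    true_pos = np.array([[0.0, 0.0], [10.0, 0.0], [10.0, 5.0], [0.0, 5.0]])
    edges = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2)]
    L = graph_laplacian(4, edges)
    delta = differential_coordinates(L, true_pos)
    # One exact anchor removes the translation ambiguity of L.
    est = laplacian_localize(L, delta, anchor_ids=[0], anchor_pos=true_pos[[0]])
    print(np.round(est, 3))
```

Because L has the all-ones vector in its null space (for a connected graph), the differential coordinates alone fix the fleet's shape only up to a translation; a single anchor row makes the stacked system full column rank, so with noise-free inputs the sketch recovers `true_pos` up to floating-point error. With noisy GPS on several vehicles, `anchor_weight` trades off trust in the absolute fixes against the relative geometry.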
