Alexandros Gkillas

A holistic perception system of internal and external monitoring for ground autonomous vehicles: AutoTRUST paradigm

Aug 25, 2025

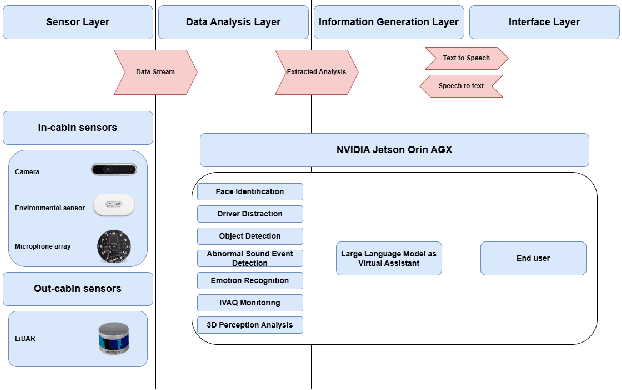

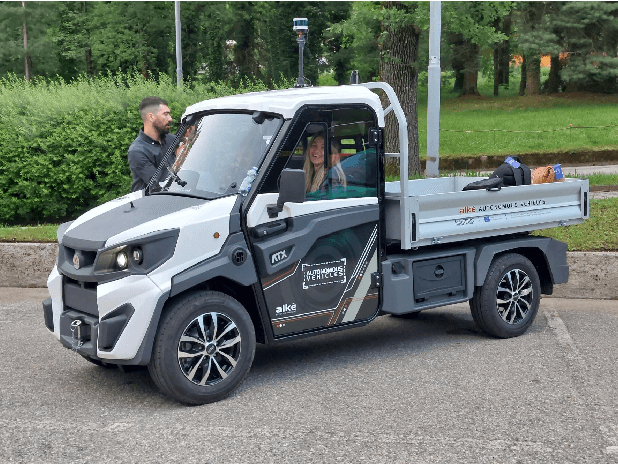

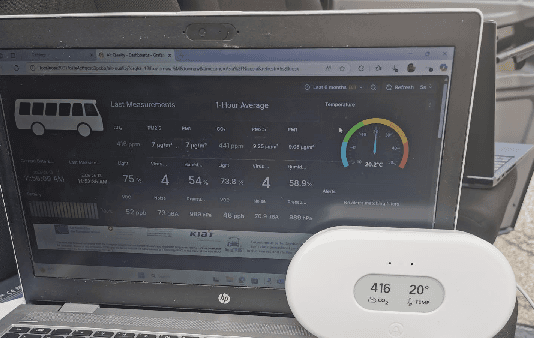

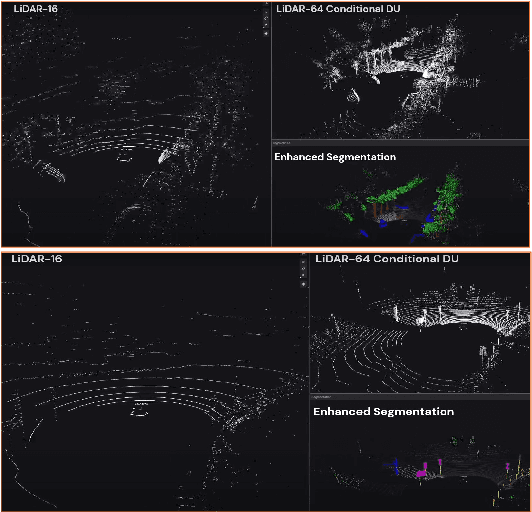

Abstract: This paper introduces a holistic perception system for internal and external monitoring of autonomous vehicles, with the aim of demonstrating a novel AI-leveraged, self-adaptive framework of advanced vehicle technologies and solutions that optimize on-board perception and experience. The internal monitoring system relies on a multi-camera setup designed to predict and identify driver and occupant behavior through facial recognition, and additionally uses a large language model as a virtual assistant. The in-cabin monitoring system also includes AI-empowered smart sensors that measure air quality and perform thermal comfort analysis for efficient on- and off-boarding. The external monitoring system perceives the vehicle's surrounding environment through a cost-efficient LiDAR-based semantic segmentation approach that performs highly accurate and efficient super-resolution on low-quality raw 3D point clouds. The holistic perception framework is developed in the context of the EU's Horizon Europe programme AutoTRUST, and has been integrated and deployed on a real electric vehicle provided by ALKE. Experimental validation and evaluation at the integration site of the Joint Research Centre at Ispra, Italy, highlight the increased performance and efficiency of the modular blocks of the proposed perception architecture.

Optimizing Cooperative Multi-Object Tracking using Graph Signal Processing

Jun 11, 2025

Abstract: Multi-Object Tracking (MOT) plays a crucial role in autonomous driving systems, as it lays the foundations for advanced perception and precise path planning modules. Nonetheless, single-agent MOT perceives its surroundings only partially because of occlusions, sensor failures, and similar limitations. Hence, integrating multi-agent information is essential for a comprehensive understanding of the environment. This paper proposes a novel Cooperative MOT framework for tracking objects in 3D LiDAR scenes by formulating and solving a graph topology-aware optimization problem that fuses information coming from multiple vehicles. By exploiting a fully connected graph topology defined by the detected bounding boxes, we employ a Graph Laplacian processing optimization technique to smooth the position error of the bounding boxes and effectively combine them. In that manner, we reveal and leverage the inherent coherences of diverse multi-agent detections, and associate the refined bounding boxes with tracked objects in two stages, optimizing both localization and tracking accuracy. An extensive evaluation study has been conducted using the real-world V2V4Real dataset, where the proposed method significantly outperforms the baseline frameworks, including the state-of-the-art deep-learning DMSTrack and V2V4Real, in various testing sequences.
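The abstract does not give the exact optimization problem, so the following is only a minimal sketch of generic graph Laplacian smoothing, assuming unit edge weights on a fully connected graph and a quadratic data-fidelity term. The function name `laplacian_smooth`, the parameter `lam`, and the sample detections are illustrative assumptions, not the authors' formulation.

```python
import numpy as np

def laplacian_smooth(points, lam=1.0):
    """Smooth noisy estimates of one object's center over a fully connected graph.

    Solves min_X ||X - Y||_F^2 + lam * tr(X^T L X), whose closed form is
    X = (I + lam * L)^{-1} Y, with L the combinatorial graph Laplacian.
    """
    y = np.asarray(points, dtype=float)
    n = y.shape[0]
    adjacency = np.ones((n, n)) - np.eye(n)  # fully connected, unit weights (assumption)
    laplacian = np.diag(adjacency.sum(axis=1)) - adjacency
    return np.linalg.solve(np.eye(n) + lam * laplacian, y)

# Hypothetical box centers (x, y, z) reported by three vehicles for the same object.
detections = np.array([[10.2, 4.9, 0.5], [9.7, 5.2, 0.4], [10.1, 5.0, 0.6]])
smoothed = laplacian_smooth(detections, lam=2.0)
fused_center = smoothed.mean(axis=0)
```

With this particular graph the smoothing leaves the mean of the detections unchanged and shrinks each detection's deviation from that mean by a factor of `1 / (1 + lam * n)`, so larger `lam` pulls the agents' estimates closer together.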

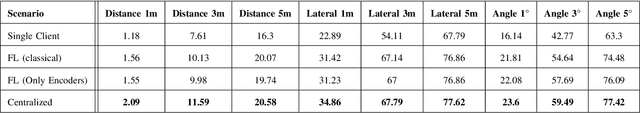

Personalized Federated Learning for Cross-view Geo-localization

Nov 07, 2024

Abstract: In this paper we propose a methodology combining Federated Learning (FL) with Cross-view Image Geo-localization (CVGL) techniques. We address the challenges of data privacy and heterogeneity in autonomous vehicle environments by proposing a personalized Federated Learning scenario that allows selective sharing of model parameters. Our method implements a coarse-to-fine approach, where clients share only the coarse feature extractors while keeping fine-grained features specific to local environments. We evaluate our approach against traditional centralized and single-client training schemes using the KITTI dataset combined with satellite imagery. Results demonstrate that our federated CVGL method achieves performance close to centralized training while maintaining data privacy. The proposed partial model sharing strategy shows comparable or slightly better performance than classical FL, offering significantly reduced communication overhead without sacrificing accuracy. Our work contributes to more robust and privacy-preserving localization systems for autonomous vehicles operating in diverse environments.
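The selective-sharing idea can be illustrated with a small sketch, assuming each client model is a dictionary of NumPy arrays and that the server uses a plain unweighted average. The parameter names `coarse.w` and `fine.w` and the helper `aggregate_shared` are hypothetical; the abstract does not specify the aggregation rule.

```python
import numpy as np

def aggregate_shared(client_models, shared_keys):
    """Average only the shared (coarse) parameters; leave the rest local."""
    averaged = {
        key: np.mean([model[key] for model in client_models], axis=0)
        for key in shared_keys
    }
    # Each client receives the averaged coarse weights and keeps its own fine weights.
    return [{**model, **averaged} for model in client_models]

# Two hypothetical clients with one coarse and one fine parameter tensor each.
clients = [
    {"coarse.w": np.array([1.0, 2.0]), "fine.w": np.array([0.1])},
    {"coarse.w": np.array([3.0, 4.0]), "fine.w": np.array([0.9])},
]
updated = aggregate_shared(clients, shared_keys=["coarse.w"])
```

Only the tensors listed in `shared_keys` would need to be transmitted, which is where the reduction in communication overhead comes from in a scheme of this kind.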

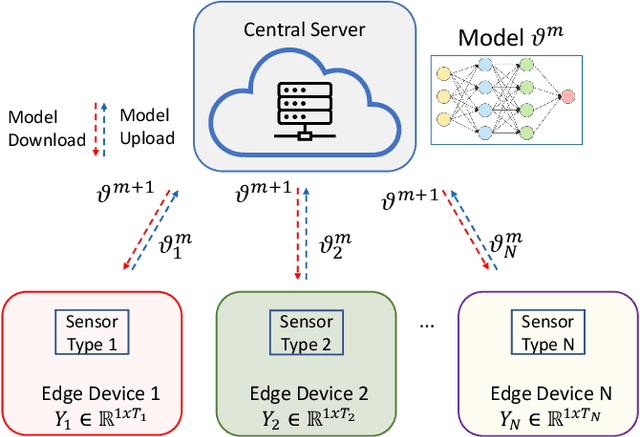

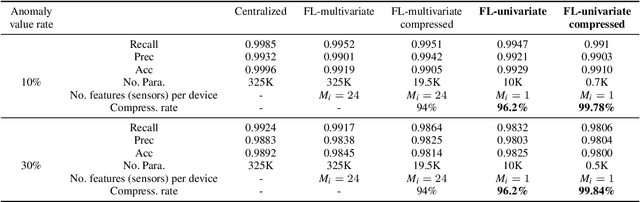

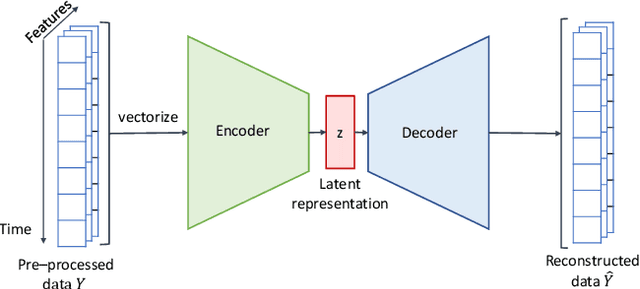

Towards Resource-Efficient Federated Learning in Industrial IoT for Multivariate Time Series Analysis

Nov 06, 2024

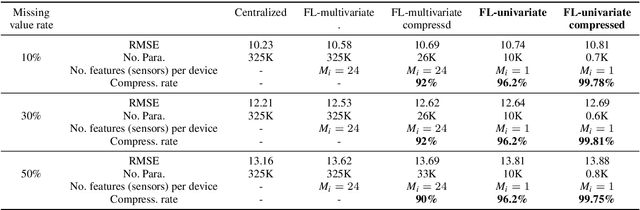

Abstract: Anomalies and missing data constitute a thorny problem in industrial applications. In recent years, deep learning-enabled anomaly detection has emerged as a critical direction. However, the improved detection accuracy is achieved by using large neural networks, which increases storage and computational cost. Moreover, the data collected on edge devices contain private user information, introducing challenges that can be addressed by the privacy-preserving distributed paradigm known as federated learning (FL). This framework allows edge devices to train and exchange models, which in turn increases the communication cost. Thus, to deal with the increased communication, processing and storage challenges of FL-based deep anomaly detection, neural network pruning is expected to bring significant benefits by reducing processing, storage and communication complexity. With this focus, a novel compression-based optimization problem is proposed at the server side of an FL paradigm that fuses the received local models and performs pruning, generating a more compressed model. Experiments in the context of anomaly detection and missing value imputation demonstrate that the proposed FL scenario, along with the proposed compression-based method, achieves high compression rates (more than $99.7\%$) with negligible performance losses (less than $1.18\%$) compared to the centralized solutions.
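As a rough illustration of server-side fusion followed by pruning, the sketch below averages flattened client weight vectors and then applies simple magnitude pruning. This is a swapped-in, much simpler technique than the compression-based optimization problem the abstract refers to; `fuse_and_prune`, `sparsity`, and the sample weights are assumptions made for the example.

```python
import numpy as np

def fuse_and_prune(local_weights, sparsity):
    """Average 1-D client weight vectors, then zero the smallest-magnitude entries."""
    fused = np.mean(local_weights, axis=0)
    n_prune = int(sparsity * fused.size)
    if n_prune == 0:
        return fused
    # Indices of the n_prune entries with the smallest absolute value.
    drop = np.argsort(np.abs(fused))[:n_prune]
    pruned = fused.copy()
    pruned[drop] = 0.0
    return pruned

# Hypothetical flattened weights from two edge devices.
local = [np.array([0.9, -0.02, 0.4, 0.01]), np.array([1.1, 0.04, 0.2, -0.03])]
global_model = fuse_and_prune(local, sparsity=0.5)
```

A sparse global model can be stored and broadcast in a compressed format, which is the source of the storage and communication savings in approaches of this type.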

An Optimization-based Deep Equilibrium Model for Hyperspectral Image Deconvolution with Convergence Guarantees

Jun 10, 2023

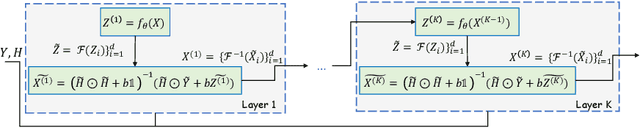

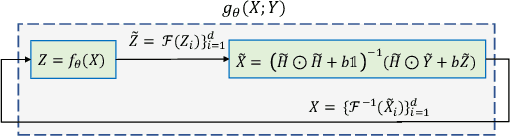

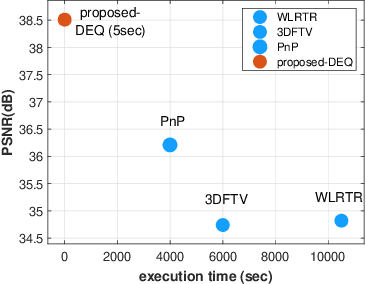

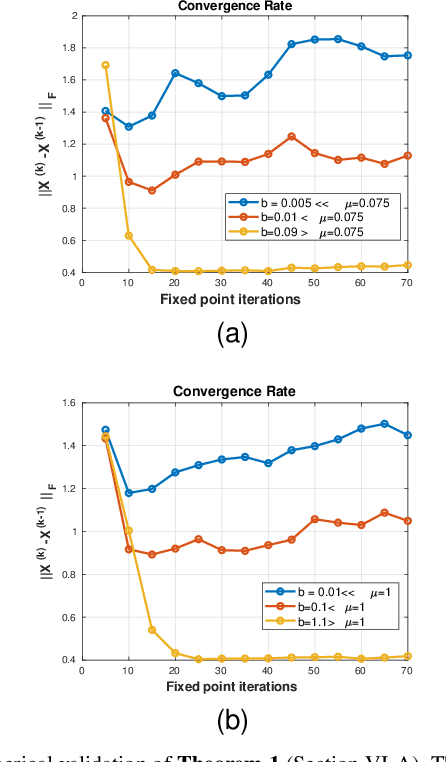

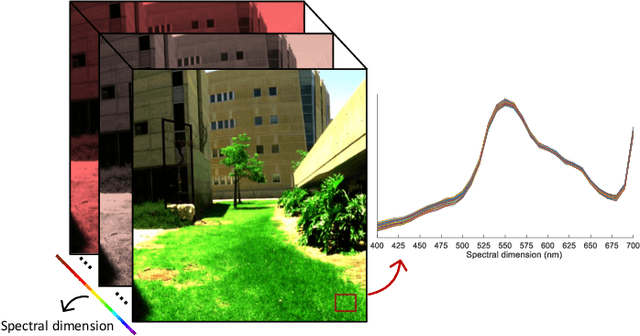

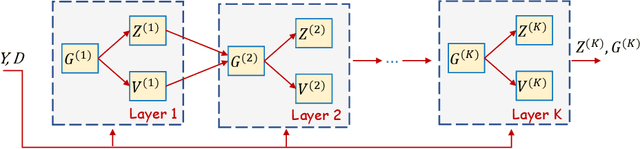

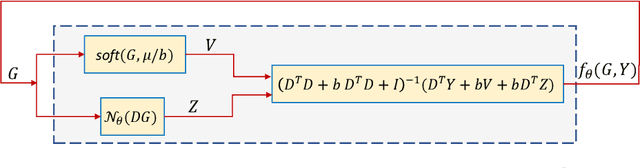

Abstract: In this paper, we propose a novel methodology for addressing the hyperspectral image (HSI) deconvolution problem. This problem is highly ill-posed and thus requires proper priors (regularizers) to model the inherent spectral-spatial correlations of HSI signals. To this end, a new optimization problem is formulated, leveraging a learnable regularizer in the form of a neural network. To tackle this problem, an effective solver is proposed using the half quadratic splitting methodology. The derived iterative solver is then expressed as a fixed-point calculation problem within the Deep Equilibrium (DEQ) framework, resulting in an interpretable architecture with clearly explainable parameters and convergence properties that bring practical benefits. The proposed model is a first attempt to handle the classical HSI degradation problem with different blurring kernels and noise levels via a single deep equilibrium model with significant computational efficiency. Extensive numerical experiments validate the superiority of the proposed methodology over other state-of-the-art methods. This superior restoration performance is achieved while requiring $99.85\%$ less computation time than existing methods.
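To make the "iterative solver as a fixed-point problem" idea concrete, here is a toy sketch of half quadratic splitting for 1D circular deconvolution, iterated until the auxiliary variable stops changing. It assumes a soft-thresholding operator as a stand-in for the learned neural regularizer, and it shows only the forward fixed-point computation, not DEQ training. All names (`hqs_step`, `hqs_fixed_point`, `mu`, `tau`) and the synthetic signal are illustrative assumptions.

```python
import numpy as np

def soft_threshold(v, tau):
    """Stand-in for the learned regularizer: proximal operator of tau * ||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)

def hqs_step(z, Y, H, mu, tau):
    """One half quadratic splitting iteration, written as a map z -> f(z)."""
    # x-step: solve (H^T H + mu I) x = H^T y + mu z in the Fourier domain.
    X = (np.conj(H) * Y + mu * np.fft.fft(z)) / (np.abs(H) ** 2 + mu)
    x = np.real(np.fft.ifft(X))
    # z-step: apply the (stand-in) denoiser.
    return soft_threshold(x, tau / mu)

def hqs_fixed_point(y, kernel, mu=0.1, tau=0.01, tol=1e-8, max_iter=500):
    """Iterate z <- f(z) until successive iterates differ by less than tol."""
    H = np.fft.fft(kernel, y.size)  # frequency response of the circular blur
    Y = np.fft.fft(y)
    z = y.copy()
    for _ in range(max_iter):
        z_next = hqs_step(z, Y, H, mu, tau)
        if np.linalg.norm(z_next - z) < tol:
            return z_next
        z = z_next
    return z

# Synthetic sparse signal, blurred and lightly corrupted by noise.
rng = np.random.default_rng(0)
x_true = np.zeros(64)
x_true[[10, 30, 45]] = [1.0, -0.5, 0.8]
kernel = np.array([0.2, 0.6, 0.2])
H = np.fft.fft(kernel, x_true.size)
y = np.real(np.fft.ifft(H * np.fft.fft(x_true))) + 0.01 * rng.standard_normal(64)
z_star = hqs_fixed_point(y, kernel)
```

In a DEQ model the map `hqs_step` would contain trainable parameters, and gradients would be obtained by implicit differentiation at `z_star` rather than by backpropagating through every iteration.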

Deep Equilibrium Models Meet Federated Learning

May 29, 2023

Abstract: In this study, the problem of Federated Learning (FL) is explored from a new perspective by utilizing Deep Equilibrium (DEQ) models instead of conventional deep learning networks. We claim that incorporating DEQ models into the federated learning framework naturally addresses several open problems in FL, such as the communication overhead due to the sharing of large models and the ability to incorporate heterogeneous edge devices with significantly different computation capabilities. Additionally, a weighted average fusion rule is proposed at the server side of the FL framework to account for the different qualities of models from heterogeneous edge devices. To the best of our knowledge, this study is the first to establish a connection between DEQ models and federated learning, contributing to the development of an efficient and effective FL framework. Finally, promising initial experimental results are presented, demonstrating the potential of this approach in addressing the challenges of FL.

Deep Equilibrium Assisted Block Sparse Coding of Inter-dependent Signals: Application to Hyperspectral Imaging

Mar 29, 2022

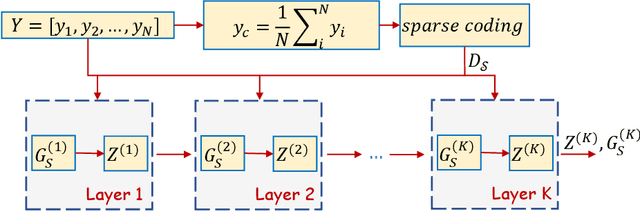

Abstract: In this study, the problem of computing a sparse representation for datasets of inter-dependent signals, given a fixed dictionary, is considered. A dataset of inter-dependent signals is defined as a matrix whose columns demonstrate strong dependencies. A computationally efficient sparse coding optimization problem is derived by employing regularization terms that are adapted to the properties of the signals of interest. Exploiting the merits of learnable regularization techniques, a neural network is employed to act as a structure prior and reveal the underlying signal interdependencies. To solve the optimization problem, deep unrolling and deep equilibrium based algorithms are developed, forming highly interpretable and concise deep-learning-based architectures that process the input dataset in a block-by-block fashion. Extensive simulation results in the context of hyperspectral image denoising demonstrate that the proposed algorithms significantly outperform other sparse coding approaches and exhibit superior performance against recent state-of-the-art deep-learning-based denoising models. In a wider perspective, our work provides a unique bridge between a classic approach, namely sparse representation theory, and modern representation tools based on deep learning modeling.

A cross-domain recommender system using deep coupled autoencoders

Dec 08, 2021

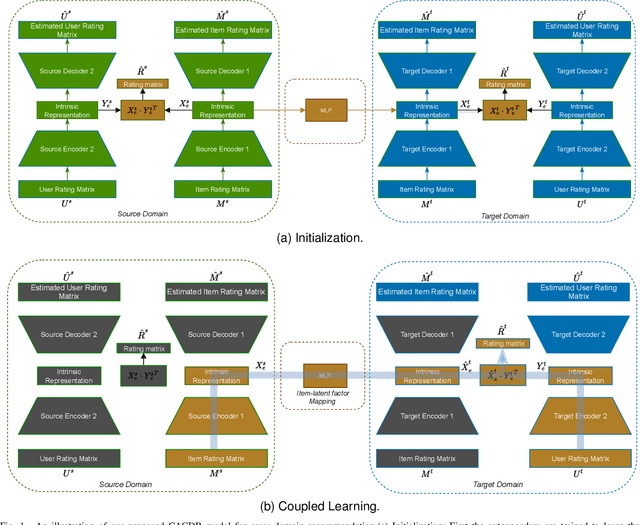

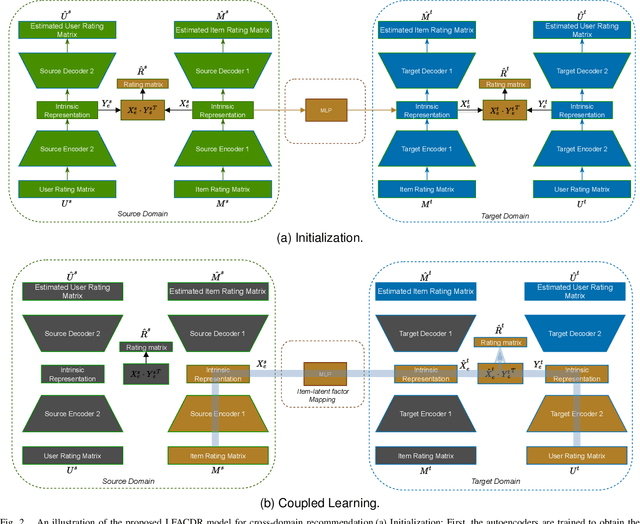

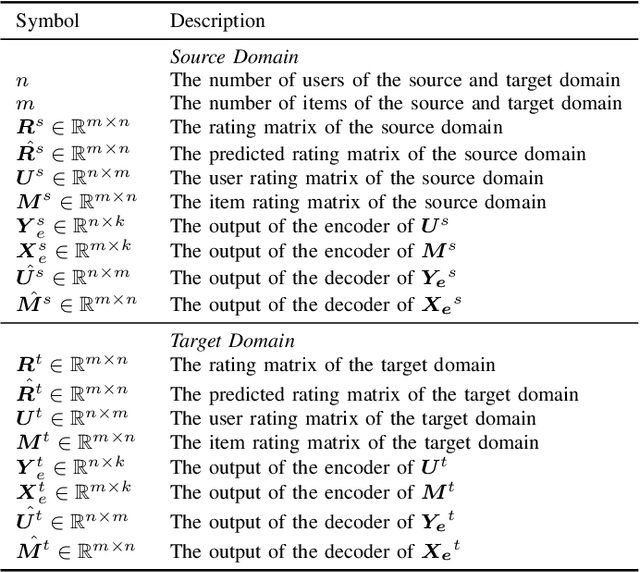

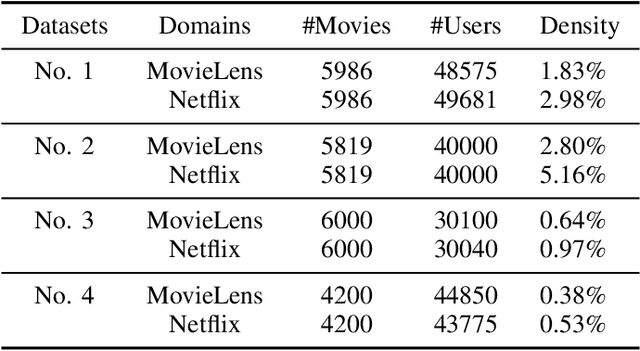

Abstract: Data sparsity and cold start are long-standing, thorny problems for recommendation systems. Cross-domain recommendation, as a domain adaptation framework, has been used to address these challenges efficiently by exploiting information from multiple domains. In this study, an item-level relevance cross-domain recommendation task is explored, where two related domains, that is, the source and the target domain, contain common items without sharing sensitive information regarding the users' behavior, thus avoiding leaks of user privacy. In light of this scenario, two novel coupled autoencoder-based deep learning methods are proposed for cross-domain recommendation. The first method aims to simultaneously learn a pair of autoencoders in order to reveal the intrinsic representations of the items in the source and target domains, along with a coupled mapping function to model the non-linear relationships between these representations, thus transferring beneficial information from the source to the target domain. The second method is derived from a new joint regularized optimization problem, which employs two autoencoders to generate the user and item latent factors in a deep and non-linear manner, while at the same time a data-driven function is learnt to map the item latent factors across domains. Extensive numerical experiments on two publicly available benchmark datasets illustrate the superior performance of our proposed methods compared to several state-of-the-art cross-domain recommendation frameworks.
