Koray Ozcan

Quantization-Guided Training for Compact TinyML Models

Mar 10, 2021

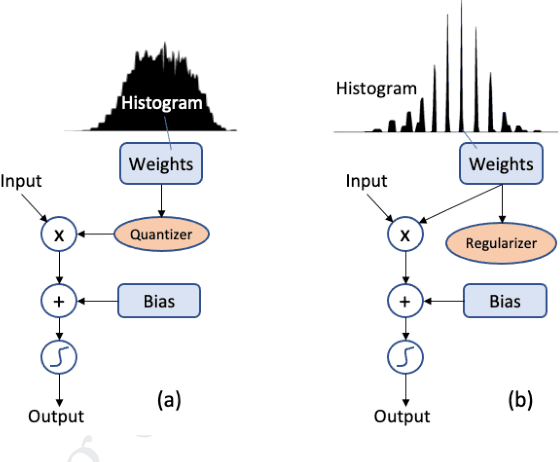

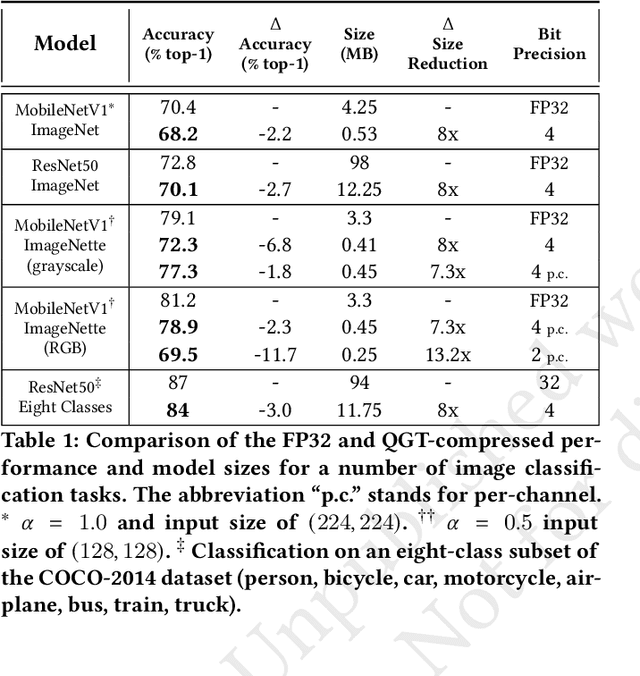

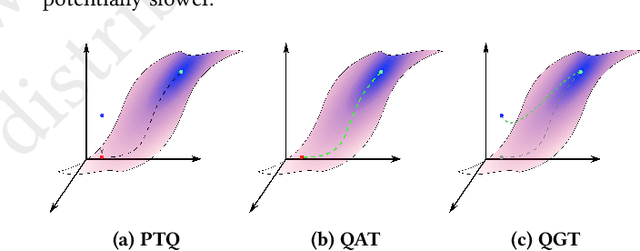

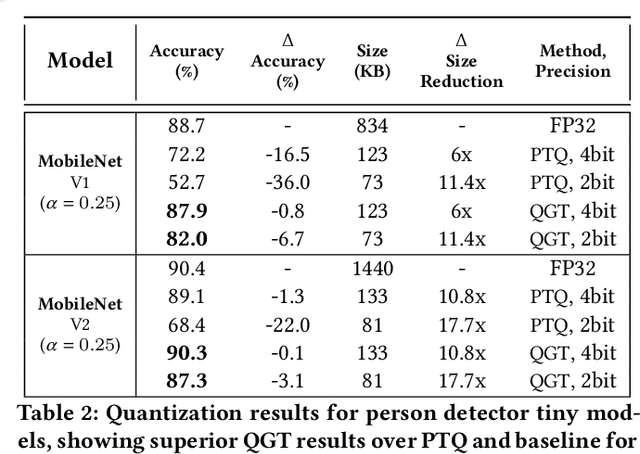

Abstract: We propose a Quantization-Guided Training (QGT) method that guides DNN training towards optimized low-bit-precision targets and reaches extreme compression levels below 8-bit precision. Unlike standard quantization-aware training (QAT) approaches, QGT uses customized regularization to encourage weight values towards a distribution that maximizes accuracy while reducing quantization errors. One of the main benefits of this approach is the ability to identify compression bottlenecks. We validate QGT using state-of-the-art model architectures on vision datasets. We also demonstrate the effectiveness of QGT with an 81KB tiny model for person detection down to 2-bit precision (representing a 17.7x size reduction), with an accuracy drop of only 3% compared to a floating-point baseline.
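To make the idea of a regularizer that reduces quantization error concrete, here is a minimal sketch. It is not the QGT regularizer from the paper, whose exact form the abstract does not give. It assumes a symmetric uniform quantizer and a mean-squared-error penalty, and the names `quantize_uniform` and `quantization_penalty` are hypothetical.

```python
import numpy as np


def quantize_uniform(w, bits):
    """Snap weights to a symmetric uniform grid (an assumed quantizer, not QGT's).

    The grid has integer levels -qmax..qmax with qmax = 2**(bits-1) - 1,
    scaled so that the largest weight magnitude maps to qmax.
    """
    w = np.asarray(w, dtype=float)
    max_abs = np.max(np.abs(w))
    if max_abs == 0.0:
        return np.zeros_like(w)  # all-zero weights are already on the grid
    qmax = 2 ** (bits - 1) - 1
    scale = max_abs / qmax
    return np.clip(np.round(w / scale), -qmax, qmax) * scale


def quantization_penalty(w, bits):
    """Mean squared distance between the weights and their quantized values.

    Added to a training loss with some weighting factor, a term like this
    pulls weights towards values that survive low-bit quantization.
    """
    w = np.asarray(w, dtype=float)
    return float(np.mean((w - quantize_uniform(w, bits)) ** 2))


weights = np.array([-1.0, -0.4, 0.0, 0.3, 1.0])
penalty_2bit = quantization_penalty(weights, bits=2)
penalty_8bit = quantization_penalty(weights, bits=8)
```

For the same weights, the penalty at 2 bits is much larger than at 8 bits, which is one simple way to see which tensors resist aggressive compression.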

Driver Behavior Analysis Using Lane Departure Detection Under Challenging Conditions

May 31, 2019

Abstract: In this paper, we present a novel model to detect lane regions and extract lane departure events (changes and incursions) from challenging, lower-resolution videos recorded with mobile cameras. Our algorithm uses a Mask-RCNN-based lane detection model as a pre-processor. Deep learning-based models have recently become the state of the art for object detection combined with segmentation. Among the several deep learning architectures, convolutional neural networks (CNNs) have outperformed other machine learning models, especially for region proposal and object detection tasks. Recent developments in object detection have been driven by the success of region proposal methods and region-based CNNs (R-CNNs). Our algorithm uses a lane segmentation mask for detection and a fixed-lag Kalman filter for tracking, rather than the usual approach of detecting lane lines from single video frames. The algorithm permits detection of driver lane departures into left or right lanes from continuous lane detections. Preliminary results show promise for robust detection of lane departure events. The overall sensitivity for lane departure events on our custom test dataset is 81.81%.

Accelerometer based Activity Classification with Variational Inference on Sticky HDP-SLDS

Oct 19, 2015

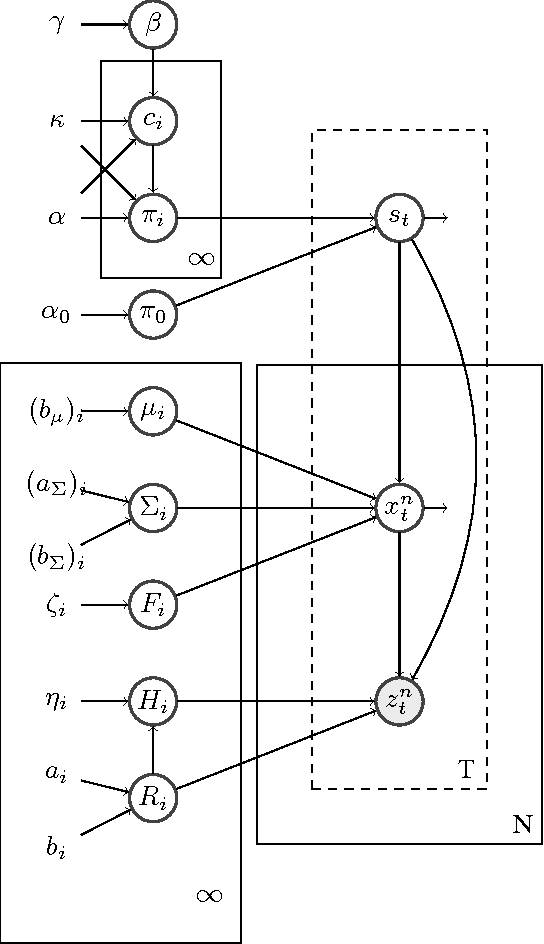

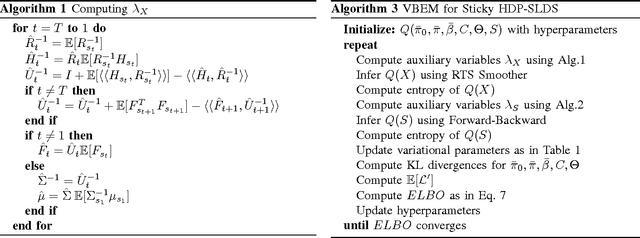

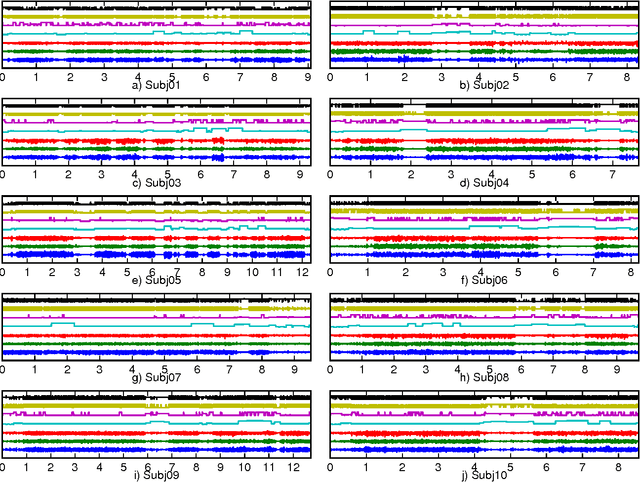

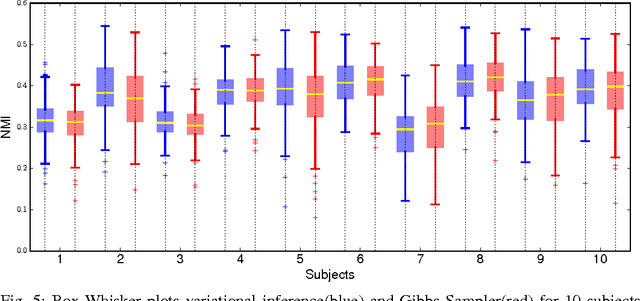

Abstract: As part of daily monitoring of human activities, wearable sensors and devices are becoming increasingly popular sources of data. With the advent of smartphones equipped with an accelerometer, gyroscope and camera, it is now possible to develop activity classification platforms that everyone can use conveniently. In this paper, we propose a fast inference method for an unsupervised non-parametric time series model, namely variational inference for the sticky HDP-SLDS (Hierarchical Dirichlet Process Switching Linear Dynamical System). We show that the proposed algorithm can differentiate various indoor activities such as sitting, walking, turning, going up/down the stairs and taking the elevator using only the accelerometer of an Android smartphone (Samsung Galaxy S4). We used the front camera of the smartphone to annotate activity types precisely. We compared the proposed method with Hidden Markov Models with Gaussian emission probabilities on a dataset of 10 subjects, and we show the efficacy of the stickiness property. We further compared variational inference to the Gibbs sampler on the same model and show that variational inference is faster by an order of magnitude.
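The stickiness property mentioned above can be illustrated with a small finite example. This is only a sketch of the general idea, not the paper's model or its variational inference: it assumes a fixed number of states and a hypothetical self-transition bias `kappa`, and the function names are made up for illustration.

```python
import numpy as np


def sticky_transitions(base, kappa):
    """Add extra mass kappa to every self-transition, then renormalize rows.

    base  : (K, K) row-stochastic transition matrix (assumed input)
    kappa : non-negative self-transition bias (hypothetical parameter name)
    """
    base = np.asarray(base, dtype=float)
    biased = base + kappa * np.eye(base.shape[0])
    return biased / biased.sum(axis=1, keepdims=True)


def expected_dwell(transitions):
    """Expected number of consecutive steps spent in each state.

    For a Markov chain with self-transition probability p, the dwell time
    is geometric with mean 1 / (1 - p).
    """
    return 1.0 / (1.0 - np.diag(transitions))


uniform = np.full((3, 3), 1.0 / 3.0)       # no preference for staying put
sticky = sticky_transitions(uniform, 2.0)  # bias towards self-transitions

dwell_uniform = expected_dwell(uniform)
dwell_sticky = expected_dwell(sticky)
```

A larger `kappa` lengthens the expected time spent in each state, which discourages rapid switching between activity labels from one sample to the next.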
