Kishore Reddy

Pose-GNN : Camera Pose Estimation System Using Graph Neural Networks

Mar 17, 2021

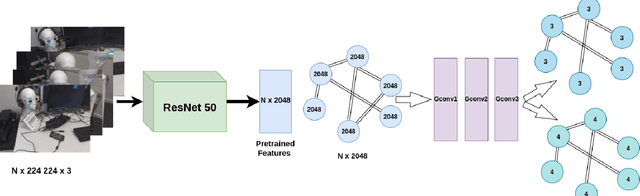

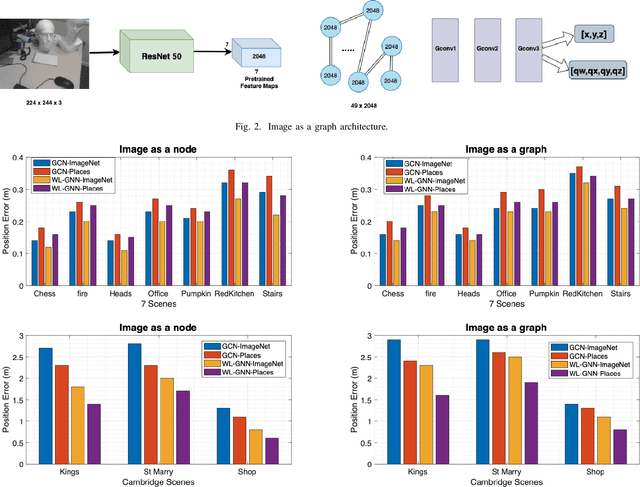

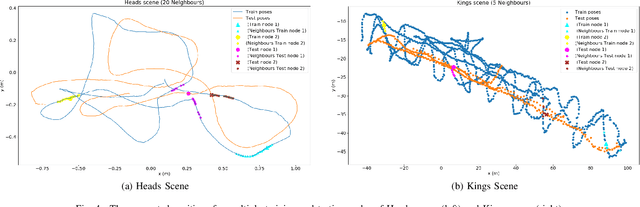

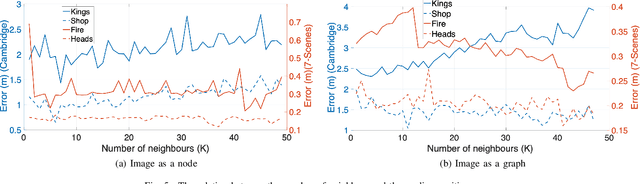

Abstract: We propose a novel image-based localization system using graph neural networks (GNNs). A pretrained ResNet50 convolutional neural network (CNN) extracts the important features from each image. The extracted features are then fed to a GNN, which estimates the pose of each image in one of two ways: either each image's features form a node in a graph and pose estimation is formulated as node pose regression, or the image features themselves are modelled as a graph and the problem becomes graph pose regression. We extensively compare the two proposed approaches with state-of-the-art single-image localization methods and show that using a GNN leads to enhanced performance in both indoor and outdoor environments.
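The node-regression formulation can be illustrated with a minimal sketch. It assumes a k-nearest-neighbour graph over per-image feature vectors and a single mean-aggregation step; the abstract does not state how the graph is built or which GNN layers are used, so `knn_graph`, `mean_aggregate`, and the toy 2-D features are hypothetical stand-ins, not the authors' method.

```python
import math

def knn_graph(features, k):
    """Link each node to its k nearest neighbours by Euclidean distance (assumed graph rule)."""
    neighbours = []
    for i, fi in enumerate(features):
        dists = sorted(
            (math.dist(fi, fj), j) for j, fj in enumerate(features) if j != i
        )
        neighbours.append([j for _, j in dists[:k]])
    return neighbours

def mean_aggregate(features, neighbours):
    """One message-passing step: average each node's feature with its neighbours' features."""
    out = []
    for i, fi in enumerate(features):
        group = [fi] + [features[j] for j in neighbours[i]]
        out.append([sum(col) / len(group) for col in zip(*group)])
    return out

# Toy 2-D vectors standing in for per-image CNN features (hypothetical values).
feats = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [10.0, 10.0]]
graph = knn_graph(feats, k=2)
updated = mean_aggregate(feats, graph)
```

In a full system, the aggregated node features would pass through learned layers and a regression head that outputs one pose per node; that part is omitted here.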

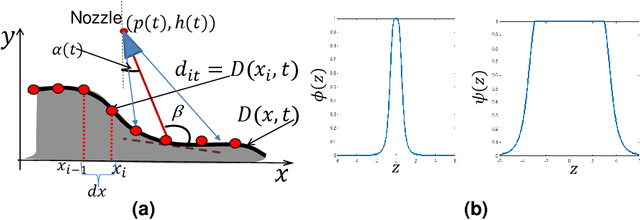

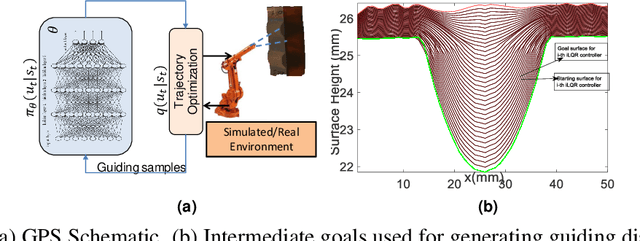

Guided Policy Search Based Control of a High Dimensional Advanced Manufacturing Process

Sep 12, 2020

Abstract: In this paper we apply a guided policy search (GPS) based reinforcement learning framework to a high-dimensional optimal control problem arising in an additive manufacturing process. The problem consists of controlling the process parameters so that layer-wise deposition of material leads to the desired geometric characteristics of the resulting part surface while minimizing the material deposited. A realistic simulation model of the deposition process, along with a carefully selected set of guiding distributions generated using the iterative Linear Quadratic Regulator, is used to train a neural network policy using GPS. A closed-loop controller based on the trained policy and in-situ measurement of the deposition profile is tested experimentally and shows promising performance.

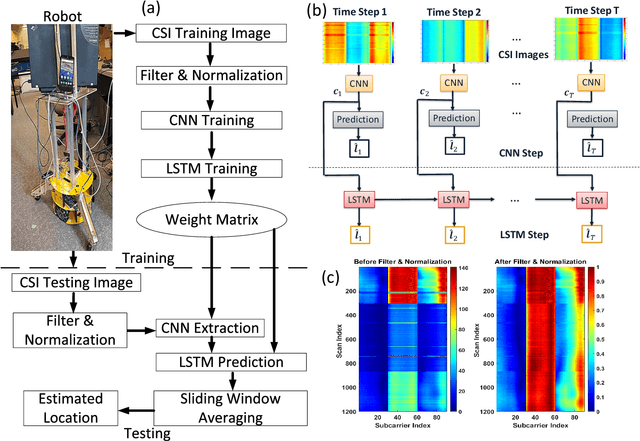

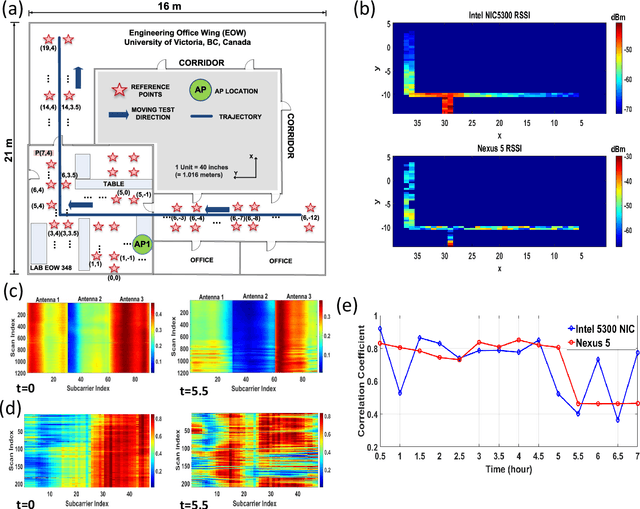

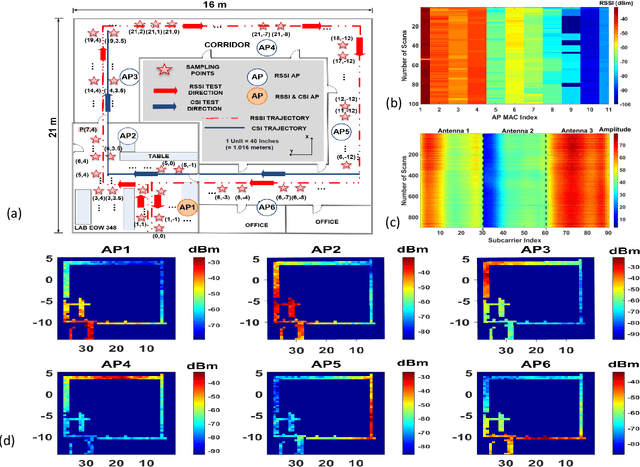

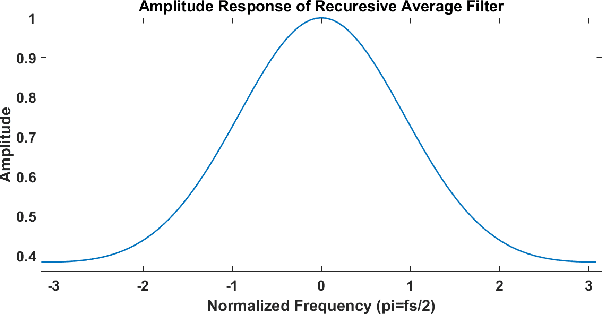

A CNN-LSTM Quantifier for Single Access Point CSI Indoor Localization

May 13, 2020

Abstract: This paper proposes a network structure that combines a convolutional neural network (CNN) with a long short-term memory (LSTM) quantifier for WiFi fingerprinting indoor localization. In contrast to conventional methods that use only spatial data with classification models, our CNN-LSTM network extracts both spatial and temporal features of the channel state information (CSI) received from a single router. Furthermore, the proposed network builds a quantification model rather than a limited classification model, as in most of the literature, which enables the estimation of testing points that are not identical to the reference points. We analyze the instability of CSI and demonstrate a mitigation solution using a comprehensive filter and normalization scheme. The localization accuracy is investigated through extensive on-site experiments with several mobile devices, including a mobile phone (Nexus 5) and a laptop (Intel 5300 NIC), at hundreds of testing locations. Using only a single WiFi router, our structure achieves an average localization error of 2.5 m with 80% of the errors under 4 m, which outperforms the other reported algorithms by approximately 50% under the same test environment.
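The two accuracy figures quoted above (average error, and the share of errors under a threshold) can be computed as in the following sketch. It assumes 2-D coordinates in metres and Euclidean error; the function names and the toy coordinates are illustrative and are not data from the paper.

```python
import math

def localization_errors(predicted, actual):
    """Euclidean distance between each predicted and true position."""
    return [math.dist(p, a) for p, a in zip(predicted, actual)]

def summarize(errors, threshold):
    """Return (mean error, fraction of errors strictly below threshold)."""
    mean_error = sum(errors) / len(errors)
    fraction_under = sum(e < threshold for e in errors) / len(errors)
    return mean_error, fraction_under

# Hypothetical predictions and ground truth, in metres.
pred = [(0.0, 0.0), (3.0, 4.0), (1.0, 1.0), (6.0, 8.0)]
true = [(0.0, 1.0), (0.0, 0.0), (1.0, 3.0), (6.0, 8.0)]
errors = localization_errors(pred, true)
mean_error, frac_under = summarize(errors, threshold=4.0)
```

For these toy points the per-point errors are 1, 5, 2, and 0 m, so the mean error is 2.0 m and 75% of the errors fall under 4 m.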

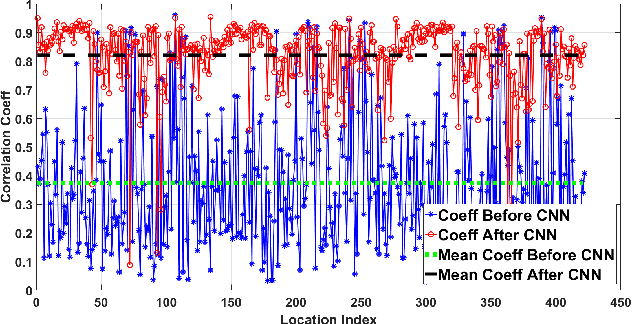

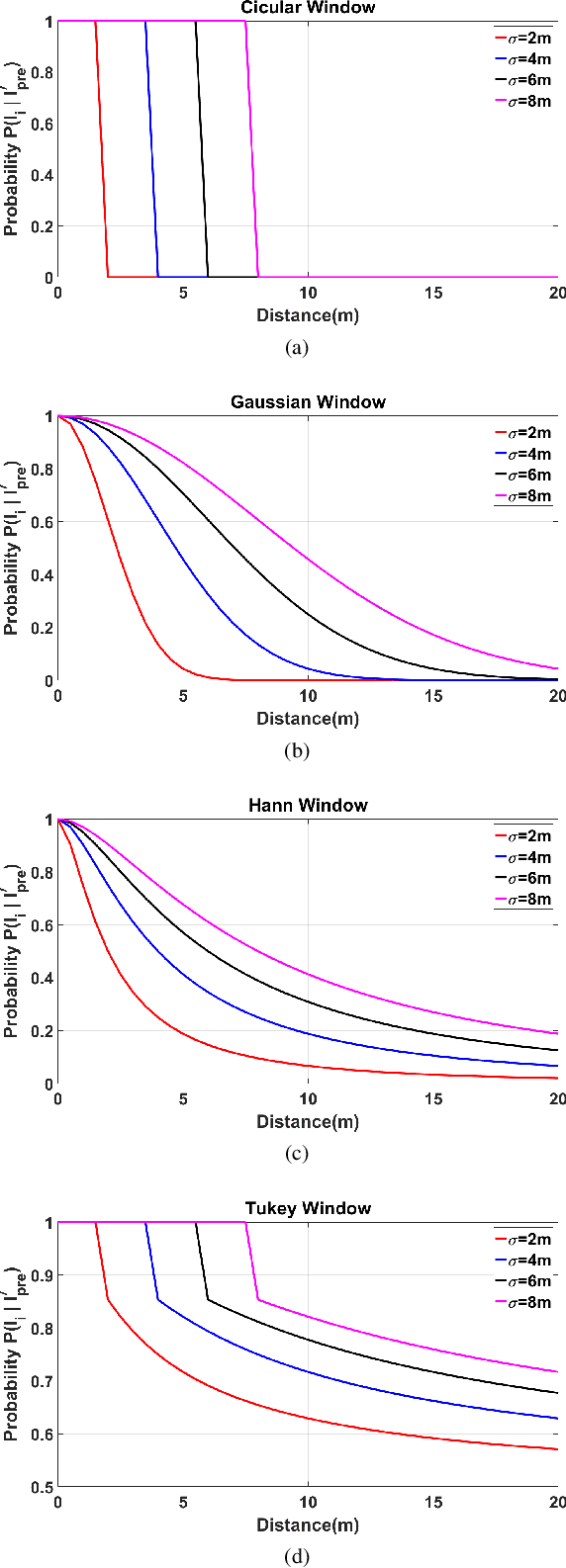

Semi-Sequential Probabilistic Model For Indoor Localization Enhancement

Jan 08, 2020

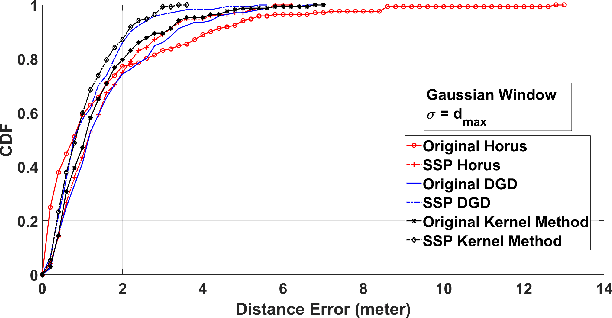

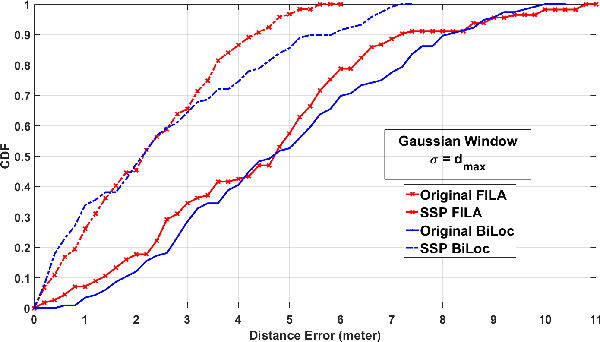

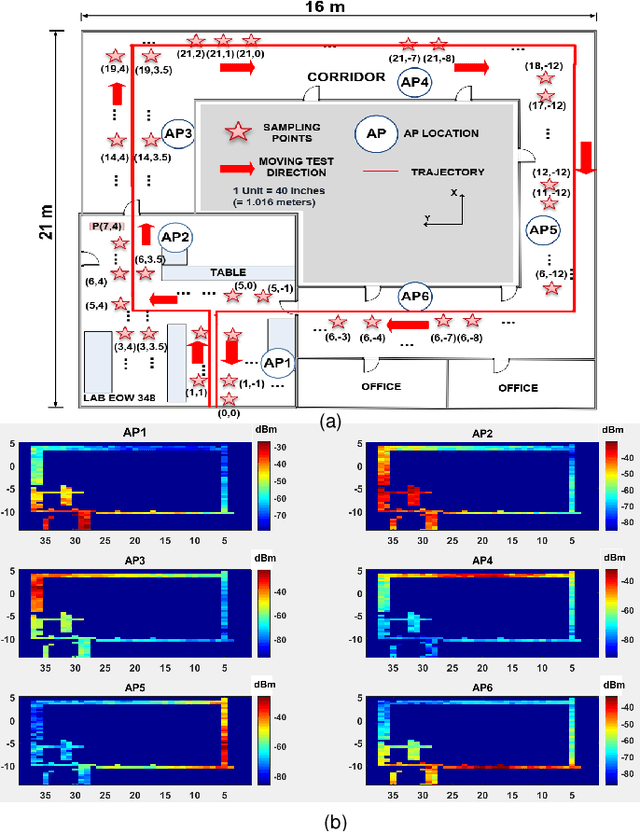

Abstract: This paper proposes a semi-sequential probabilistic model (SSP) that applies an additional short-term memory to enhance the performance of probabilistic indoor localization. Conventional probabilistic methods normally treat the locations in the database indiscriminately. In contrast, SSP leverages the previous position to determine the probable location: because the user's speed in an indoor environment is bounded, locations near the previous one have a higher probability than other locations. Although SSP uses the previous location, it does not require the exact moving speed or direction of the user. On-site experiments using received signal strength indicator (RSSI) and channel state information (CSI) fingerprints for localization demonstrate that SSP reduces the maximum error and boosts the performance of existing probabilistic approaches by 25% to 30%.
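The idea of favouring locations near the previous position can be sketched as a reweighting of per-location likelihoods. The Gaussian distance prior and the `sigma` parameter below are assumptions chosen for illustration; the abstract does not specify the weighting SSP actually uses.

```python
import math

def ssp_posterior(likelihoods, locations, previous, sigma):
    """Weight each reference location's likelihood by an assumed Gaussian prior
    on its distance from the previous position, then normalize."""
    weighted = []
    for lik, loc in zip(likelihoods, locations):
        d = math.dist(loc, previous)
        prior = math.exp(-(d * d) / (2.0 * sigma * sigma))
        weighted.append(lik * prior)
    total = sum(weighted)
    return [w / total for w in weighted]

# Hypothetical reference locations (metres) and fingerprint likelihoods.
locations = [(0.0, 0.0), (1.0, 0.0), (8.0, 0.0)]
likelihoods = [0.3, 0.3, 0.4]
posterior = ssp_posterior(likelihoods, locations, previous=(0.5, 0.0), sigma=1.0)
best = max(range(len(locations)), key=lambda i: posterior[i])
```

Without the prior, the highest raw likelihood belongs to the location 8 m away; with it, that candidate is suppressed because it is implausibly far from the previous position.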

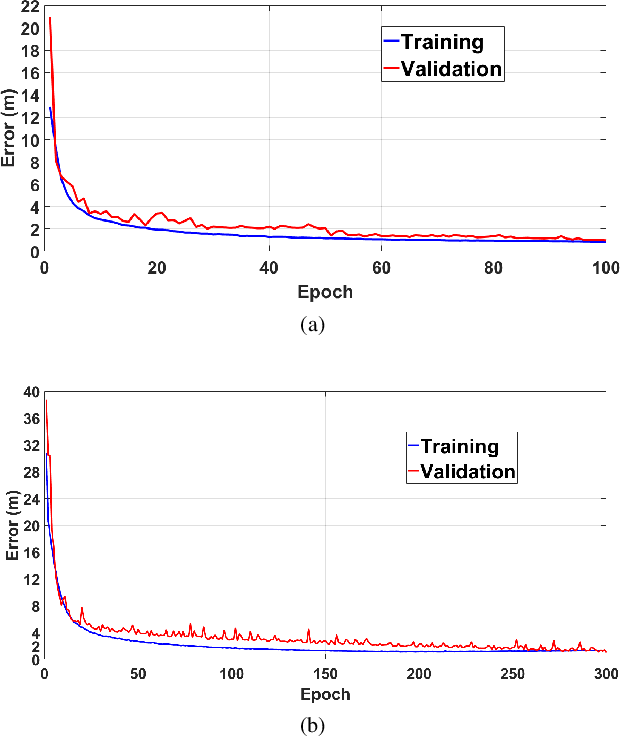

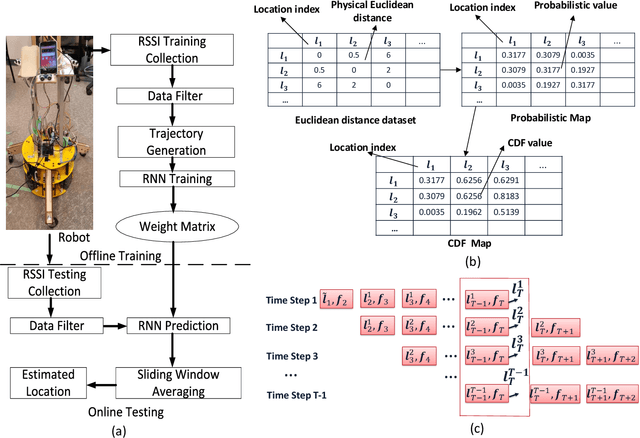

Recurrent Neural Networks For Accurate RSSI Indoor Localization

Mar 27, 2019

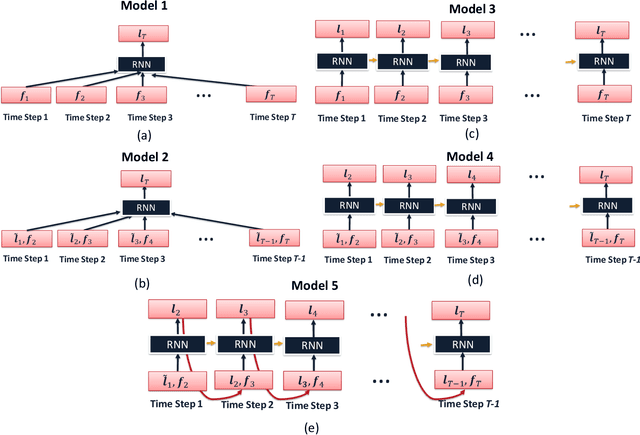

Abstract: This paper proposes recurrent neural networks (RNNs) for fingerprinting-based indoor localization using WiFi. Instead of locating the user's position one at a time, as conventional algorithms do, our RNN solution aims at trajectory positioning and takes into account the relation among the received signal strength indicator (RSSI) measurements in a trajectory. Furthermore, a weighted average filter is proposed for both the input RSSI data and the sequential output locations to enhance accuracy in the presence of temporal fluctuations of RSSI. Results using different types of RNN, including vanilla RNN, long short-term memory (LSTM), gated recurrent unit (GRU), and bidirectional LSTM (BiLSTM), are presented. On-site experiments demonstrate that the proposed structure achieves an average localization error of 0.75 m with 80% of the errors under 1 m, which outperforms conventional KNN algorithms and probabilistic algorithms by approximately 30% under the same test environment.
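A weighted average filter over an RSSI sequence can be sketched as follows. The causal window and the weights (larger for more recent samples) are assumptions for illustration; the abstract does not give the filter's actual coefficients or window length.

```python
def weighted_average_filter(values, weights):
    """Smooth a sequence with a causal weighted average.

    weights[-1] applies to the current sample, weights[-2] to the one before,
    and so on. Early samples use only the weights that fit the shorter window.
    """
    smoothed = []
    for t in range(len(values)):
        window = values[max(0, t - len(weights) + 1): t + 1]
        w = weights[-len(window):]
        smoothed.append(sum(x * wi for x, wi in zip(window, w)) / sum(w))
    return smoothed

# Hypothetical RSSI readings in dBm with one outlier at index 2.
rssi = [-60.0, -62.0, -80.0, -61.0, -59.0]
filtered = weighted_average_filter(rssi, weights=[1.0, 2.0, 3.0])
```

The same function could be applied per coordinate to the sequence of predicted locations, which is the second use of the filter mentioned in the abstract.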

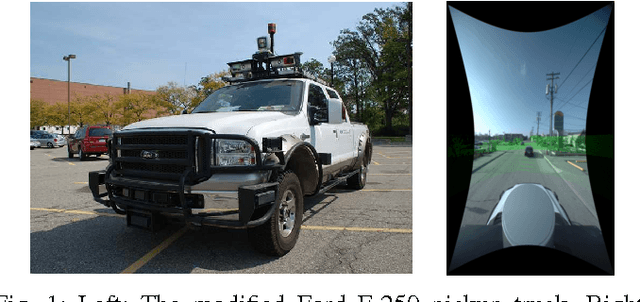

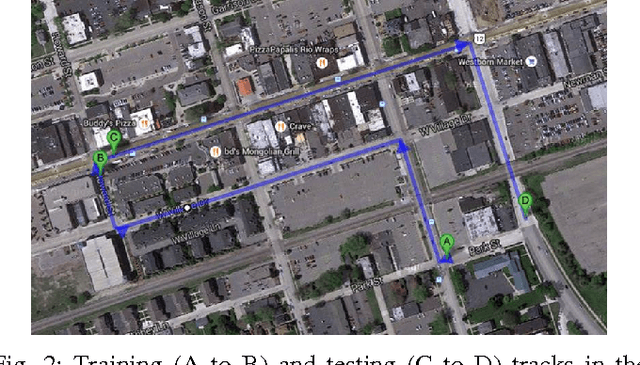

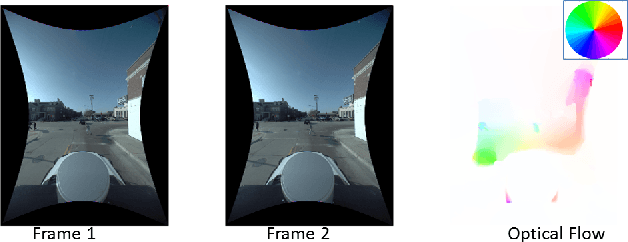

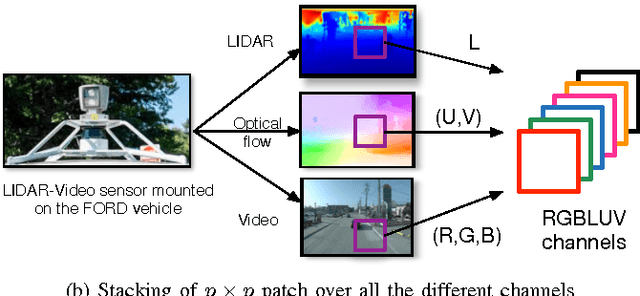

Multi-modal Sensor Registration for Vehicle Perception via Deep Neural Networks

Jul 08, 2015

Abstract: The ability to simultaneously leverage multiple modes of sensor information is critical for perception of an automated vehicle's physical surroundings. Spatio-temporal alignment, or registration, of the incoming information is often a prerequisite to analyzing the fused data. The persistence and reliability of multi-modal registration is therefore key to the stability of decision support systems ingesting the fused information. LiDAR-video systems like those on many driverless cars are a common example of where keeping the LiDAR and video channels registered to common physical features is important. We develop a deep learning method that takes multiple channels of heterogeneous data to detect misalignment of the LiDAR-video inputs. A number of variations were tested on the Ford LiDAR-video driving test data set and will be discussed. To the best of our knowledge, the use of multi-modal deep convolutional neural networks for dynamic real-time LiDAR-video registration has not been presented.

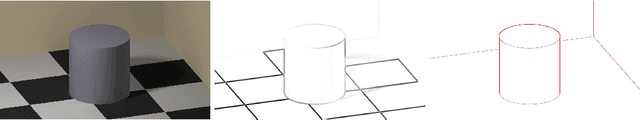

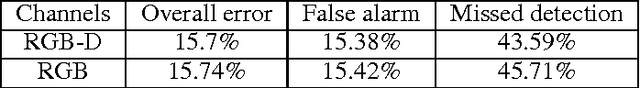

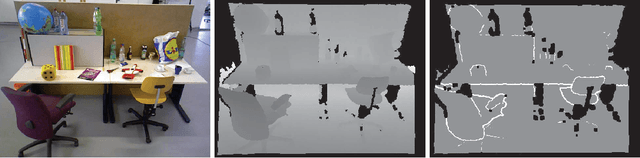

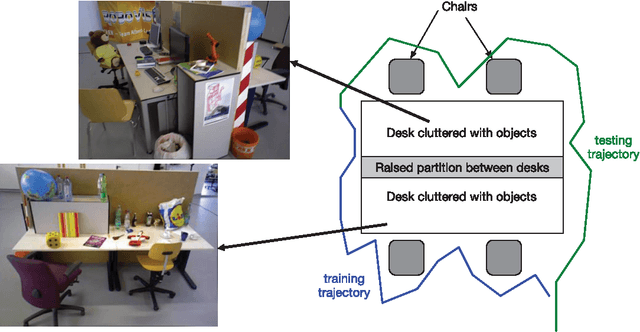

Occlusion Edge Detection in RGB-D Frames using Deep Convolutional Networks

Jul 08, 2015

Abstract: Occlusion edges in images, which correspond to range discontinuities in the scene from the observer's point of view, are an important prerequisite for many vision and mobile robot tasks. Although they can be extracted from range data, extracting them from images and videos would be extremely beneficial. We trained a deep convolutional neural network (CNN) to identify occlusion edges in images and videos with both RGB-D and RGB inputs. The use of a CNN avoids hand-crafting features for automatically isolating occlusion edges and distinguishing them from appearance edges. In addition to quantitative occlusion edge detection results, qualitative results are provided to demonstrate the trade-off between high-resolution analysis and frame-level computation time, which is critical for real-time robotics applications.
