Julian Ryde

Dennis

RenderMap: Exploiting the Link Between Perception and Rendering for Dense Mapping

Feb 21, 2017

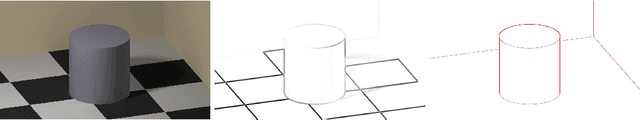

Abstract:We introduce an approach for the real-time (2Hz) creation of a dense map and alignment of a moving robotic agent within that map by rendering using a Graphics Processing Unit (GPU). This is done by recasting the scan alignment part of the dense mapping process as a rendering task. Alignment errors are computed from rendering the scene, comparing with range data from the sensors, and minimized by an optimizer. The proposed approach takes advantage of the advances in rendering techniques for computer graphics and GPU hardware to accelerate the algorithm. Moreover, it allows one to exploit information not used in classic dense mapping algorithms such as Iterative Closest Point (ICP) by rendering interfaces between the free space, occupied space and the unknown. The proposed approach leverages directly the rendering capabilities of the GPU, in contrast to other GPU-based approaches that deploy the GPU as a general purpose parallel computation platform. We argue that the proposed concept is a general consequence of treating perception problems as inverse problems of rendering. Many perception problems can be recast into a form where much of the computation is replaced by render operations. This is not only efficient since rendering is fast, but also simpler to implement and will naturally benefit from future advancements in GPU speed and rendering techniques. Furthermore, this general concept can go beyond addressing perception problems and can be used for other problem domains such as path planning.

Occlusion Edge Detection in RGB-D Frames using Deep Convolutional Networks

Jul 08, 2015

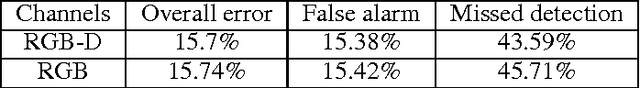

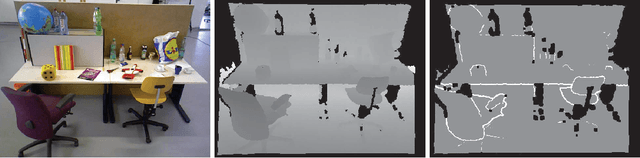

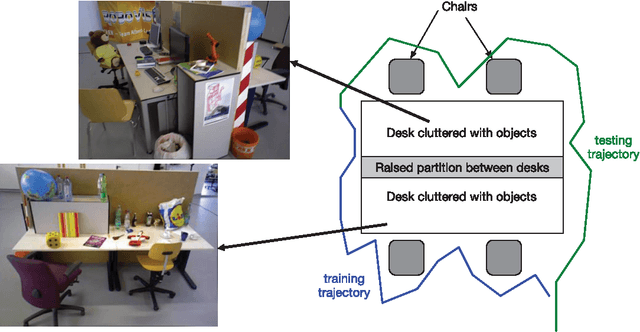

Abstract:Occlusion edges in images which correspond to range discontinuity in the scene from the point of view of the observer are an important prerequisite for many vision and mobile robot tasks. Although they can be extracted from range data however extracting them from images and videos would be extremely beneficial. We trained a deep convolutional neural network (CNN) to identify occlusion edges in images and videos with both RGB-D and RGB inputs. The use of CNN avoids hand-crafting of features for automatically isolating occlusion edges and distinguishing them from appearance edges. Other than quantitative occlusion edge detection results, qualitative results are provided to demonstrate the trade-off between high resolution analysis and frame-level computation time which is critical for real-time robotics applications.

Mutual Localization: Two Camera Relative 6-DOF Pose Estimation from Reciprocal Fiducial Observation

Apr 01, 2013

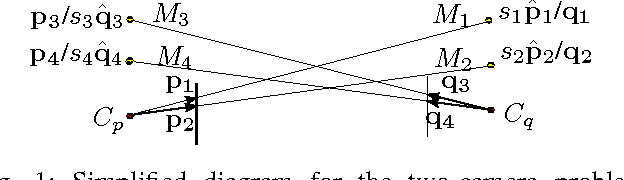

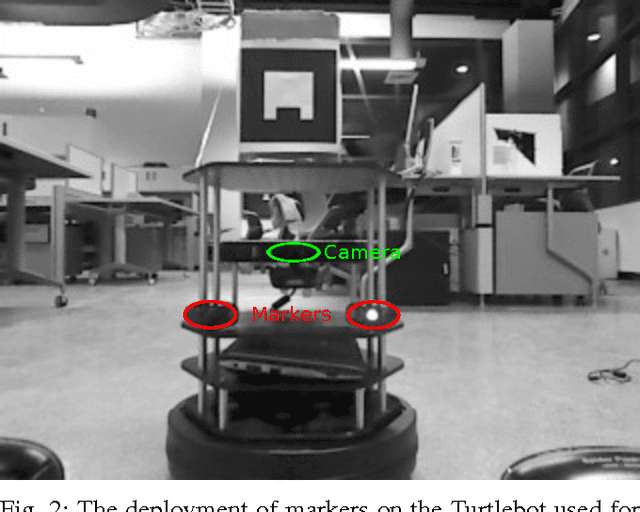

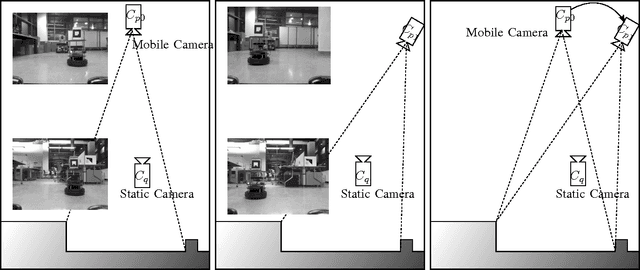

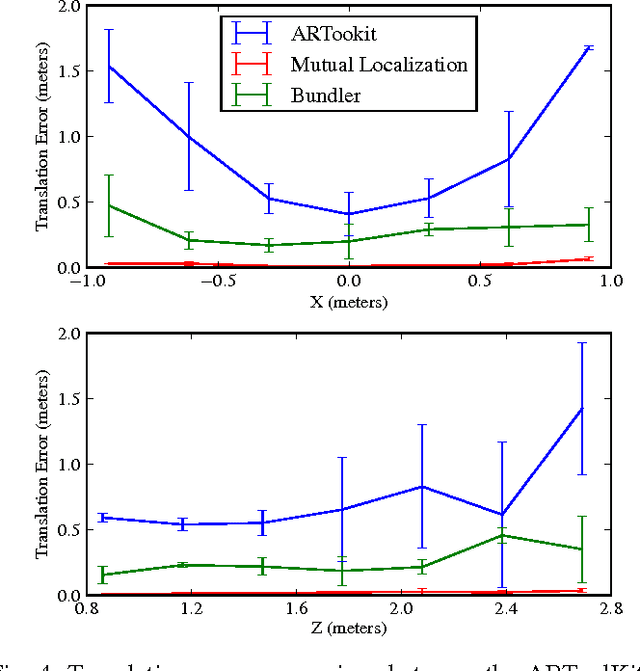

Abstract:Concurrently estimating the 6-DOF pose of multiple cameras or robots---cooperative localization---is a core problem in contemporary robotics. Current works focus on a set of mutually observable world landmarks and often require inbuilt egomotion estimates; situations in which both assumptions are violated often arise, for example, robots with erroneous low quality odometry and IMU exploring an unknown environment. In contrast to these existing works in cooperative localization, we propose a cooperative localization method, which we call mutual localization, that uses reciprocal observations of camera-fiducials to obviate the need for egomotion estimates and mutually observable world landmarks. We formulate and solve an algebraic formulation for the pose of the two camera mutual localization setup under these assumptions. Our experiments demonstrate the capabilities of our proposal egomotion-free cooperative localization method: for example, the method achieves 2cm range and 0.7 degree accuracy at 2m sensing for 6-DOF pose. To demonstrate the applicability of the proposed work, we deploy our method on Turtlebots and we compare our results with ARToolKit and Bundler, over which our method achieves a 10 fold improvement in translation estimation accuracy.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge