King N. Ngan

Forgetting to Remember: A Scalable Incremental Learning Framework for Cross-Task Blind Image Quality Assessment

Sep 15, 2022

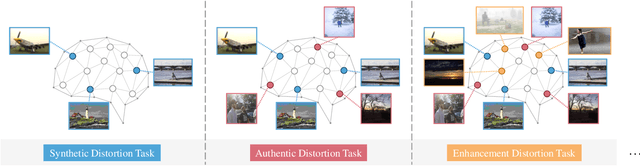

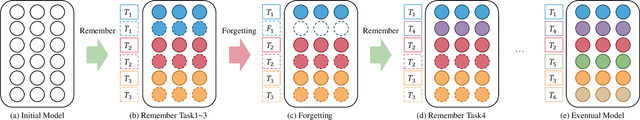

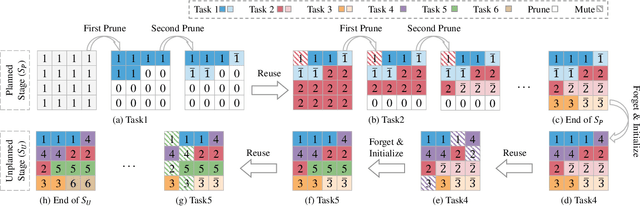

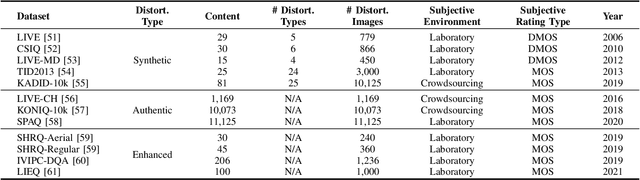

Abstract: Recent years have witnessed the great success of blind image quality assessment (BIQA) in various task-specific scenarios, which present fixed distortion types and evaluation criteria. However, because of their rigid structure and learning framework, existing methods cannot be applied to the cross-task BIQA scenario, where the distortion types and evaluation criteria keep changing in practical applications. This paper proposes a scalable incremental learning framework (SILF) that can sequentially conduct BIQA across multiple evaluation tasks with limited memory capacity. More specifically, we develop a dynamic parameter isolation strategy to sequentially update the task-specific parameter subsets, which do not overlap with each other. Each parameter subset is temporarily settled to Remember one evaluation preference toward its corresponding task, and the previously settled parameter subsets can be adaptively reused in subsequent BIQA tasks to achieve better performance based on task relevance. To suppress the unrestrained expansion of memory capacity in sequential task learning, we develop a scalable memory unit that gradually and selectively prunes unimportant neurons from previously settled parameter subsets, which enables us to Forget part of the previous experiences and free the limited memory capacity for adapting to emerging new tasks. Extensive experiments on eleven IQA datasets demonstrate that our proposed method significantly outperforms other state-of-the-art methods in cross-task BIQA.
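The interplay between parameter isolation (Remember) and pruning (Forget) described in this abstract can be illustrated with a toy sketch. This is not the SILF implementation: the class `IsolatedParams`, its method names, the flat weight list, and the use of weight magnitude as the importance criterion are all assumptions made for illustration, since the abstract does not specify how importance is measured.

```python
class IsolatedParams:
    """Toy parameter store in which each weight belongs to at most one task."""

    def __init__(self, size):
        self.weights = [0.0] * size
        self.owner = [None] * size  # task id owning each slot, or None if free

    def free_indices(self):
        return [i for i, o in enumerate(self.owner) if o is None]

    def learn_task(self, task_id, values):
        """Write new values into free slots only; settled slots stay untouched."""
        free = self.free_indices()
        if len(values) > len(free):
            raise ValueError("not enough free capacity for this task")
        for i, v in zip(free, values):
            self.weights[i] = v
            self.owner[i] = task_id

    def prune_task(self, task_id, keep_ratio):
        """Release the smallest-magnitude weights of a settled task.

        Magnitude pruning is an assumed stand-in for the paper's criterion.
        """
        owned = [i for i, o in enumerate(self.owner) if o == task_id]
        owned.sort(key=lambda i: abs(self.weights[i]), reverse=True)
        n_keep = int(len(owned) * keep_ratio)
        for i in owned[n_keep:]:
            self.weights[i] = 0.0
            self.owner[i] = None


params = IsolatedParams(6)
params.learn_task("A", [0.9, -0.1, 0.5, 0.05])  # task A settles four slots
params.prune_task("A", keep_ratio=0.5)          # forget A's two weakest weights
params.learn_task("B", [0.3, 0.7, -0.2, 0.4])   # task B fits only after pruning
```

In this toy run, task B needs four slots but only two are free until task A is pruned, which mirrors the motivation for a scalable memory unit. The weights kept for task A are never overwritten by task B.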

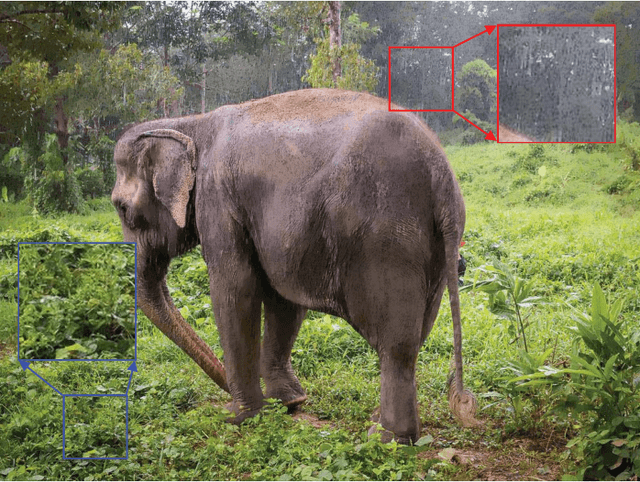

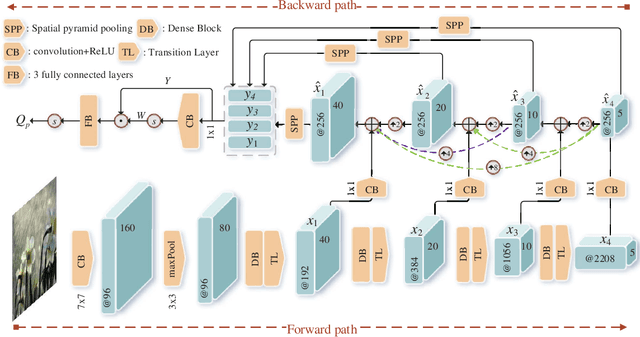

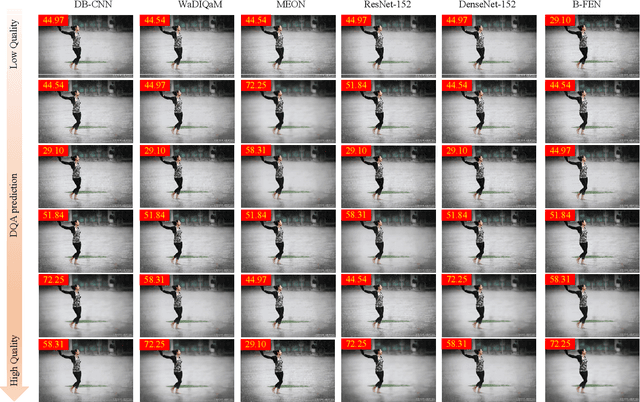

Subjective and Objective De-raining Quality Assessment Towards Authentic Rain Image

Oct 06, 2019

Abstract: Images acquired by outdoor vision systems easily suffer from poor visibility and annoying interference in rainy weather, which makes it very challenging to accurately understand and describe the visual content. Recent research has devoted great effort to the task of rain removal for improving image visibility. However, there has been very little exploration of the quality assessment of de-rained images, even though it is crucial for accurately measuring the performance of various de-raining algorithms. In this paper, we first create a de-raining quality assessment (DQA) database that collects 206 authentic rain images and their de-rained versions produced by 6 representative single image rain removal algorithms. Then, a subjective study is conducted on our DQA database, which collects the subject-rated scores of all de-rained images. To quantitatively measure the quality of de-rained images with non-uniform artifacts, we propose a bi-directional feature embedding network (B-FEN) that integrates the features of global perception and local difference. Experiments confirm that the proposed method significantly outperforms many existing universal blind image quality assessment models. To support research towards perceptually preferred de-raining algorithms, we will publicly release our DQA database and B-FEN source code at https://github.com/wqb-uestc.

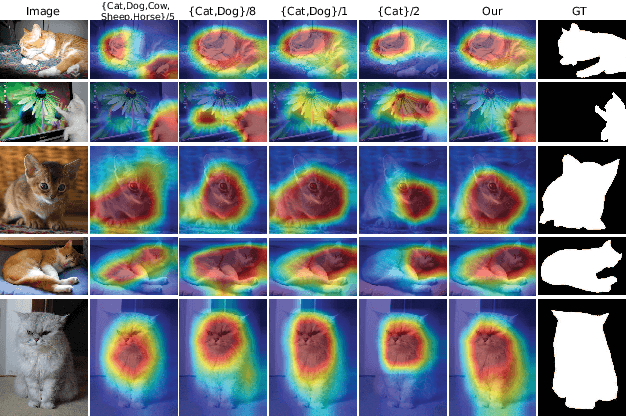

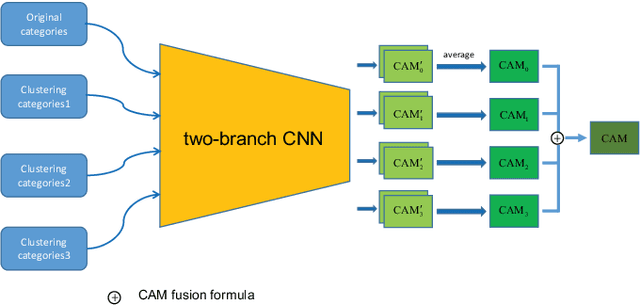

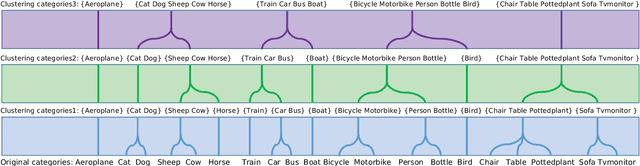

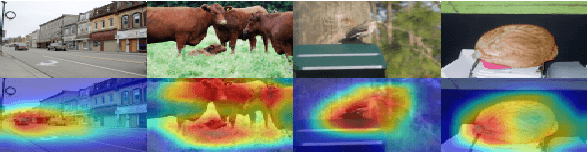

Class Activation Map generation by Multiple Level Class Grouping and Orthogonal Constraint

Sep 21, 2019

Abstract: A class activation map (CAM) highlights the regions of classes based on a classification network and is widely used in weakly supervised tasks. However, it faces the problem that the class activation regions are usually small and local. Although several efforts devoted to the second step (the CAM generation step) have partially enhanced the generation, we believe this problem is also caused by the first step (the training step), because a single classification model trained on all classes contains limited discriminative information, which limits the object region extraction. To this end, this paper addresses CAM generation by using multiple classification models. To form multiple classification networks that carry different discriminative information, we capture the semantic relationships between classes to form classification models at different semantic levels. Specifically, hierarchical clustering based on class relationships is applied, and the resulting clustering levels are treated as semantic levels to form the classification models. Moreover, a new orthogonal module and a two-branch CAM generation method are proposed to generate class regions that are orthogonal and complementary. We use the PASCAL VOC 2012 dataset to verify the proposed method. Experimental results show that our approach improves the CAM generation.

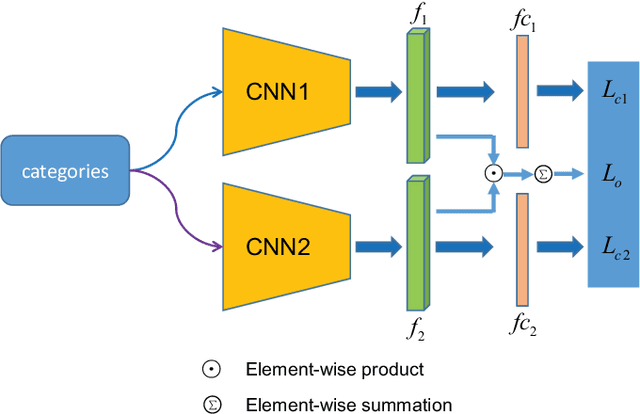

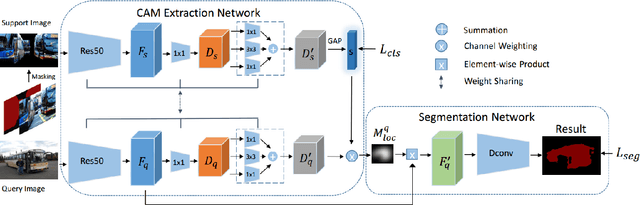

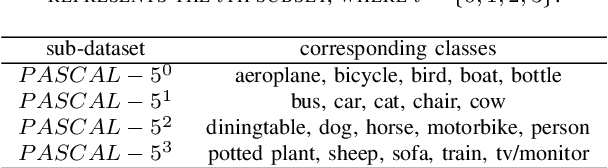

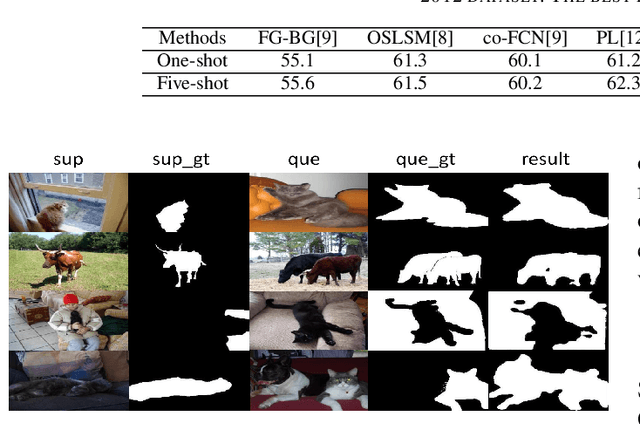

A New Few-shot Segmentation Network Based on Class Representation

Sep 19, 2019

Abstract: This paper studies few-shot segmentation, the task of predicting the foreground mask of unseen classes from only a few annotations, aided by a set of rich annotations that already exist. Existing methods mainly focus on "*how to transfer segmentation cues from support images (labeled images) to query images (unlabeled images)*", and try to learn an efficient and general transfer module that can be easily extended to unseen classes. However, learning a transfer module that generalizes to various classes has proved to be challenging. This paper addresses few-shot segmentation from a new perspective of "*how to represent unseen classes by existing classes*", and formulates few-shot segmentation as a representation process that precisely represents unseen classes (in terms of forming the foreground prior) by existing classes. Based on this idea, we propose a new class-representation-based few-shot segmentation framework, which first generates the class activation map of an unseen class based on the knowledge of existing classes, and then uses the map as a foreground probability map to extract the foreground from the query image. A new two-branch few-shot segmentation network is proposed. Moreover, a new CAM generation module is proposed that extracts the CAM of unseen classes rather than of the classical training classes. We validate the effectiveness of our method on the PASCAL VOC 2012 dataset, where the FB-IoU of one-shot and five-shot segmentation reaches 69.2% and 70.1% respectively, which outperforms the state-of-the-art method.
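For readers unfamiliar with the FB-IoU figure quoted in this abstract, the metric is commonly computed as the mean of the foreground IoU and the background IoU of a binary mask. The sketch below follows that common definition on flattened masks; the function names and the choice to score a single mask pair are assumptions, and the paper's exact evaluation protocol (for example, how scores are aggregated over images) is not stated in the abstract.

```python
def iou(pred, target, label):
    """Intersection over union for one label in two flat binary masks."""
    inter = sum(1 for p, t in zip(pred, target) if p == label and t == label)
    union = sum(1 for p, t in zip(pred, target) if p == label or t == label)
    # Convention (an assumption): a label absent from both masks scores 1.0.
    return inter / union if union else 1.0


def fb_iou(pred, target):
    """Mean of foreground (label 1) and background (label 0) IoU."""
    return 0.5 * (iou(pred, target, 1) + iou(pred, target, 0))


pred = [1, 1, 0, 0]    # predicted mask, flattened
target = [1, 0, 0, 0]  # ground-truth mask, flattened
score = fb_iou(pred, target)  # foreground IoU 1/2, background IoU 2/3
```

Because the background usually covers most of an image, FB-IoU tends to be higher than foreground-only IoU for the same prediction.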

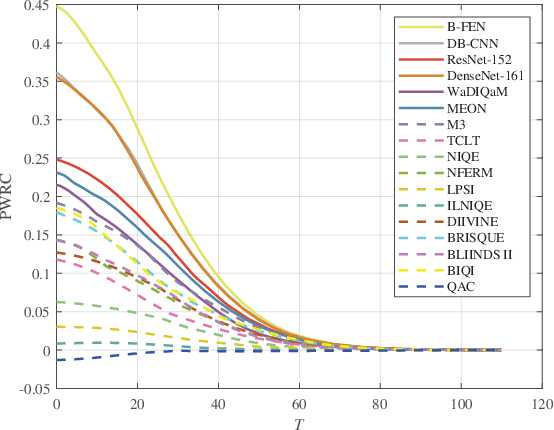

A Perceptually Weighted Rank Correlation Indicator for Objective Image Quality Assessment

May 15, 2017

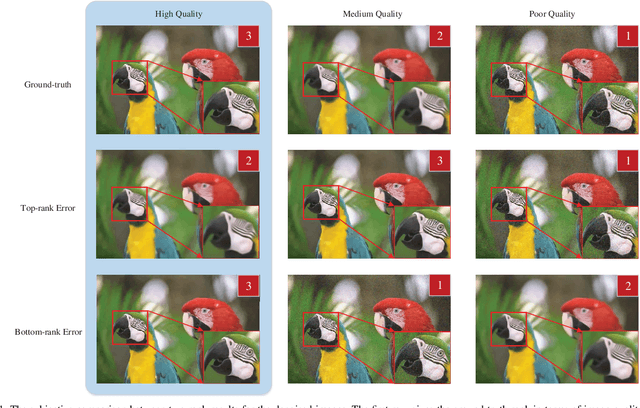

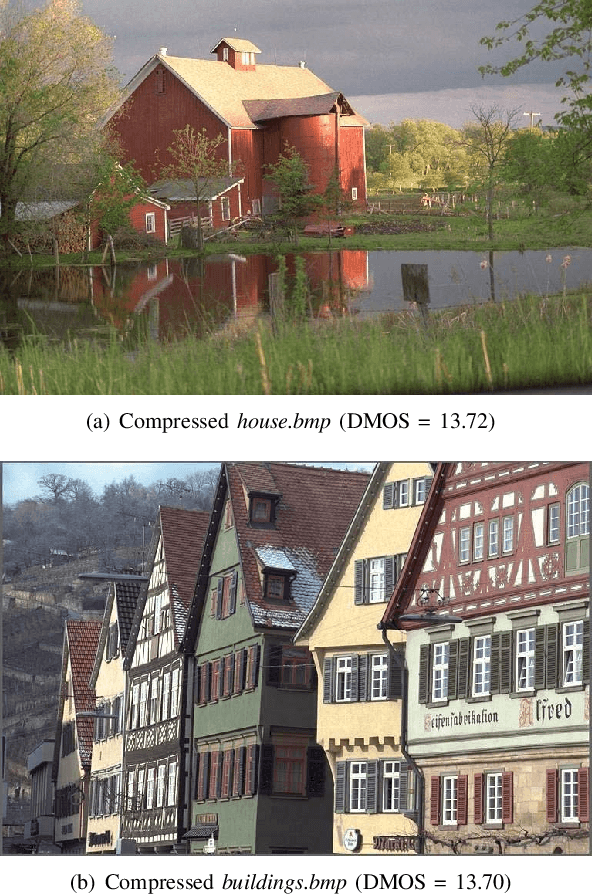

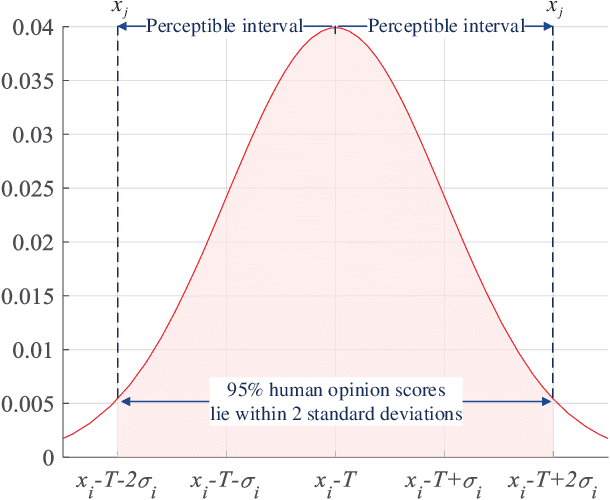

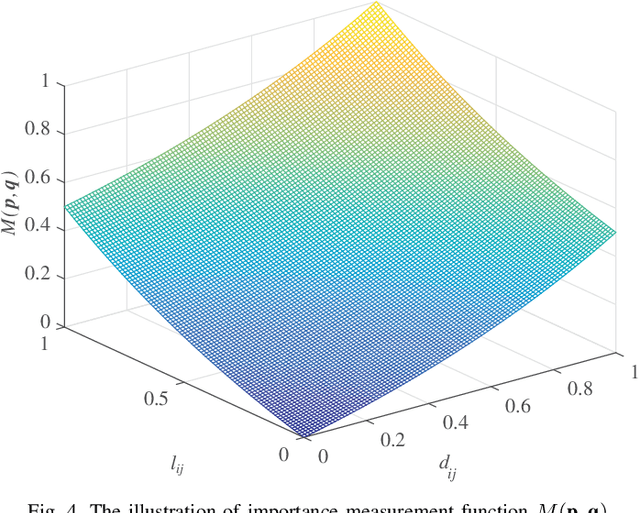

Abstract: In the field of objective image quality assessment (IQA), Spearman's $\rho$ and Kendall's $\tau$ are the two most popular rank correlation indicators, which straightforwardly assign uniform weight to all quality levels and assume that each pair of images is sortable. They are successful in measuring the average accuracy of an IQA metric in ranking multiple processed images. However, they ignore two important perceptual properties. Firstly, the sorting accuracy (SA) of high quality images is usually more important than that of poor quality ones in many real-world applications, where only the top-ranked images are pushed to the users. Secondly, due to the subjective uncertainty in making judgements, two perceptually similar images are usually hardly sortable, and their ranks do not contribute to the evaluation of an IQA metric. To compare different IQA algorithms more accurately, we explore a perceptually weighted rank correlation indicator in this paper, which rewards the capability of correctly ranking high quality images and suppresses the attention towards insensitive rank mistakes. More specifically, we focus on activating 'valid' pairwise comparisons of image quality, namely those whose quality difference exceeds a given sensory threshold (ST). Meanwhile, each image pair is assigned a unique weight, which is determined by both the quality level and the rank deviation. By varying the perception threshold, we can illustrate the sorting accuracy with a more sophisticated SA-ST curve, rather than a single rank correlation coefficient. The proposed indicator offers a new insight for interpreting visual perception behaviors. Furthermore, the applicability of our indicator is validated in recommending robust IQA metrics for both degraded and enhanced image data.
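The idea of counting only 'valid' pairs and weighting them can be sketched as follows. This is a simplified illustration, not the indicator defined in the paper: the function name, the use of the higher subjective score as the pair weight, and the omission of the rank-deviation term are all assumptions, because the abstract does not give the weighting formula.

```python
from itertools import combinations


def weighted_sorting_accuracy(subjective, predicted, threshold):
    """Weighted fraction of valid pairs that the metric orders correctly."""
    correct = 0.0
    total = 0.0
    for i, j in combinations(range(len(subjective)), 2):
        ds = subjective[i] - subjective[j]
        if abs(ds) <= threshold:
            continue  # perceptually similar pair: not counted
        # Hypothetical weight: pairs involving a high-quality image count more.
        weight = max(subjective[i], subjective[j])
        dp = predicted[i] - predicted[j]
        if ds * dp > 0:  # same ordering in subjective and predicted scores
            correct += weight
        total += weight
    return correct / total if total else float("nan")


subjective = [90, 88, 50, 20]     # e.g. mean opinion scores
predicted = [0.8, 0.9, 0.5, 0.6]  # scores from an IQA metric under test
# Sweeping the threshold gives a curve in the spirit of the SA-ST curve.
curve = [(st, weighted_sorting_accuracy(subjective, predicted, st))
         for st in (0, 5)]
```

With a threshold of 0, the metric is penalised for swapping the two nearly identical top images; with a threshold of 5, that pair is treated as unsortable and no longer affects the score.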
