Kimia Hassanzadeh

Learning robust driving policies without online exploration

Mar 15, 2021

Abstract: We propose a multi-time-scale predictive representation learning method to efficiently learn robust driving policies offline that generalize well to novel road geometries and to damaged and distracting lane conditions not covered in the offline training data. We show that the proposed representation learning method can be applied easily in an offline (batch) reinforcement learning setting, where it generalizes better and more efficiently to novel conditions than standard batch RL methods. The method uses training data collected entirely offline in the real world, which removes the need for the intensive online exploration that impedes applying deep reinforcement learning to real-world robot training. Experiments in both simulation and real-world scenarios were conducted to evaluate and analyze these claims.

SMARTS: Scalable Multi-Agent Reinforcement Learning Training School for Autonomous Driving

Nov 01, 2020

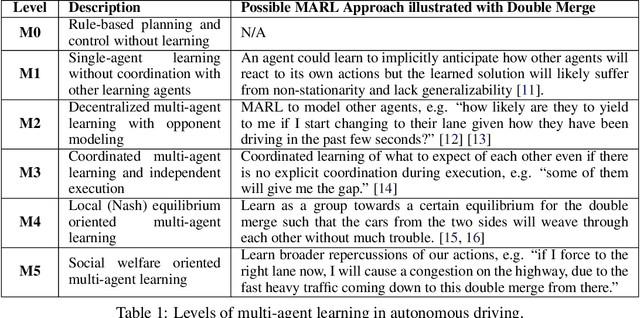

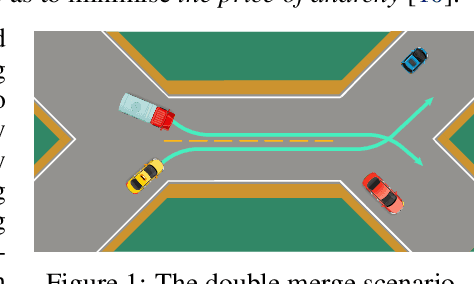

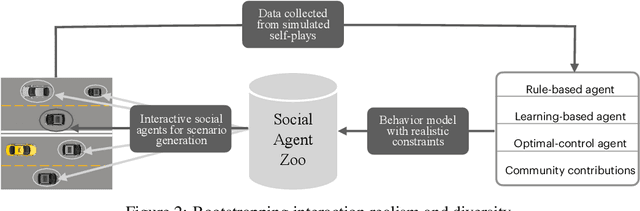

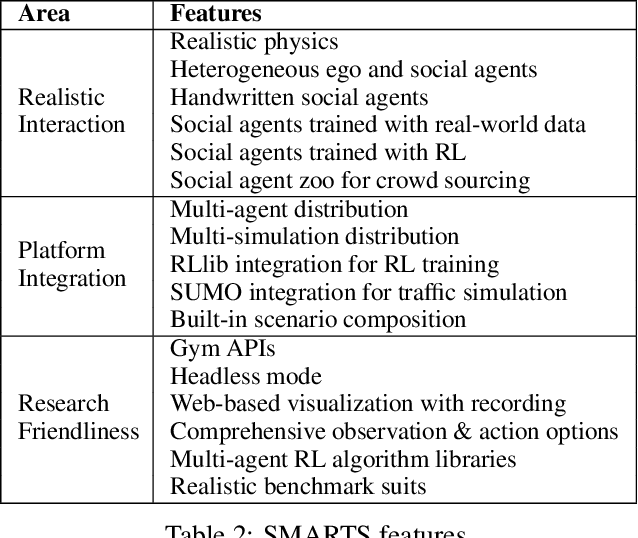

Abstract: Multi-agent interaction is a fundamental aspect of autonomous driving in the real world. Despite more than a decade of research and development, the problem of how to competently interact with diverse road users in diverse scenarios remains largely unsolved. Learning methods have much to offer towards solving this problem, but they require a realistic multi-agent simulator that generates diverse and competent driving interactions. To meet this need, we develop a dedicated simulation platform called SMARTS (Scalable Multi-Agent RL Training School). SMARTS supports the training, accumulation, and use of diverse behavior models of road users. These are in turn used to create increasingly realistic and diverse interactions that enable deeper and broader research on multi-agent interaction. In this paper, we describe the design goals of SMARTS, explain its basic architecture and its key features, and illustrate its use through concrete multi-agent experiments on interactive scenarios. We open-source the SMARTS platform and the associated benchmark tasks and evaluation metrics to encourage and empower research on multi-agent learning for autonomous driving. Our code is available at https://github.com/huawei-noah/SMARTS.

Learning predictive representations in autonomous driving to improve deep reinforcement learning

Jun 26, 2020

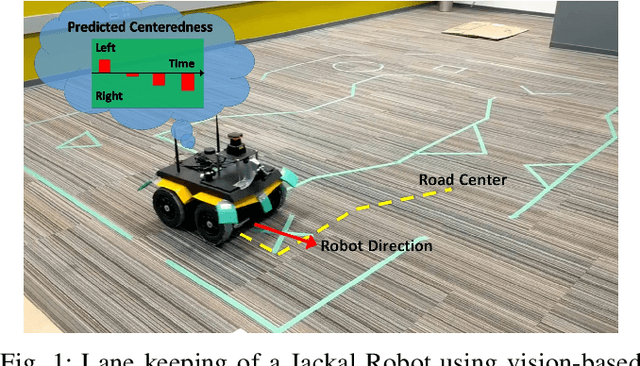

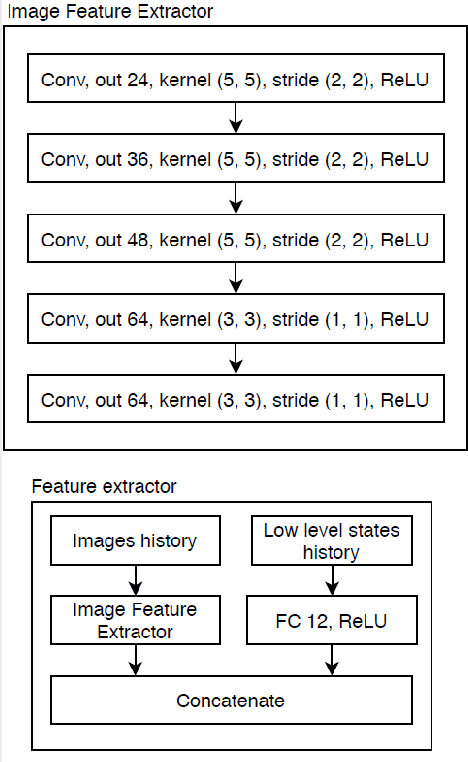

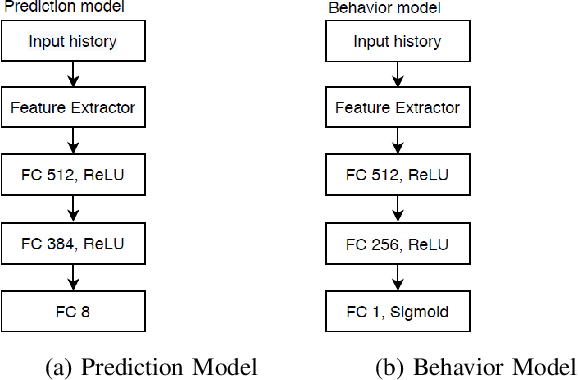

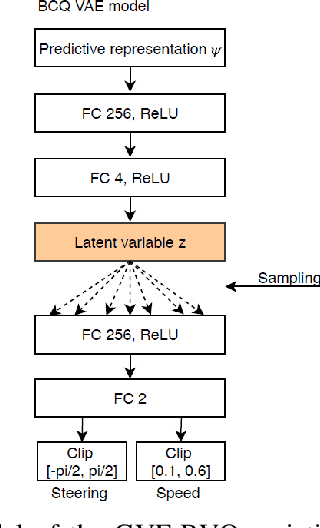

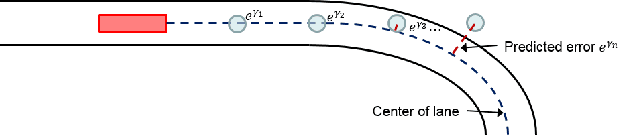

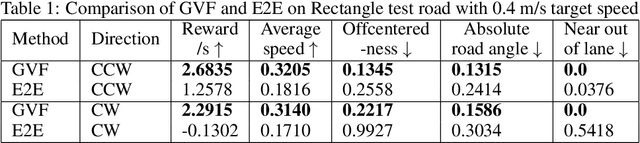

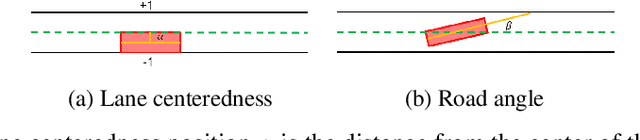

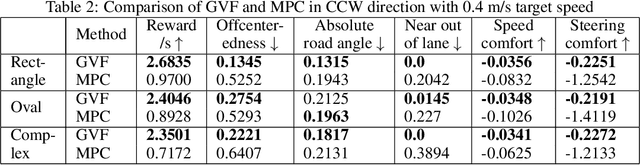

Abstract: Reinforcement learning using a novel predictive representation is applied to autonomous driving to accomplish the task of driving between lane markings, where substantial benefits in performance and generalization are observed on unseen test roads both in simulation and on a real Jackal robot. The novel predictive representation is learned by general value functions (GVFs) to provide out-of-policy, or counter-factual, predictions of future lane centeredness and road angle. These predictions form a compact representation of the agent's state that improves learning in both online and offline reinforcement learning, yielding driving policies that generalize well to roads not in the training data. Experiments in both simulation and the real world demonstrate that predictive representations in reinforcement learning improve learning efficiency, smoothness of control, and generalization to roads that the agent was never shown during training, including roads with damaged lane markings. It was found that learning a predictive representation that consists of several predictions over different time scales, or discount factors, improves the performance and smoothness of the control substantially. The Jackal robot was trained in a two-step process in which the predictive representation is learned first, followed by a batch reinforcement learning algorithm (BCQ) trained on data collected through both automated and human-guided exploration in the environment. We conclude that out-of-policy predictive representations with GVFs offer reinforcement learning many benefits in real-world problems.
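
To make the idea of predictions "over different time scales, or discount factors" concrete, the following is a minimal sketch and not the authors' implementation. It assumes a recorded sequence of a per-step signal such as lane centeredness (called a cumulant here) and computes, for each discount factor, the normalized discounted sum of its future values. The function names, the normalization by `1 - gamma`, and the use of plain Monte Carlo returns along one trajectory are all assumptions made for illustration; the abstract describes learning counter-factual predictions with GVFs, which this sketch does not attempt.

```python
from typing import List, Sequence


def discounted_predictions(cumulants: Sequence[float], gamma: float) -> List[float]:
    """For each step t, return the normalized discounted sum of cumulants from t onward.

    Hypothetical helper: the value after the end of the trajectory is assumed to be 0.
    """
    targets = [0.0] * len(cumulants)
    running = 0.0
    # Walk backwards so each target reuses the one that follows it.
    for t in reversed(range(len(cumulants))):
        running = (1.0 - gamma) * cumulants[t] + gamma * running
        targets[t] = running
    return targets


def multi_scale_representation(
    cumulants: Sequence[float], gammas: Sequence[float]
) -> List[List[float]]:
    """Stack one prediction per discount factor into a feature vector per time step."""
    per_gamma = [discounted_predictions(cumulants, g) for g in gammas]
    return [list(step) for step in zip(*per_gamma)]


if __name__ == "__main__":
    # Illustrative values only: lane centeredness that degrades over time.
    lane_centeredness = [1.0, 0.9, 0.7, 0.4, 0.2]
    for features in multi_scale_representation(lane_centeredness, [0.0, 0.5, 0.9]):
        print(features)
```

A small discount factor tracks the immediate signal, while a larger one summarizes a longer horizon, so each time step ends up with a short vector of predictions that a policy could take as input.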
