Learning predictive representations in autonomous driving to improve deep reinforcement learning

Paper and Code

Jun 26, 2020

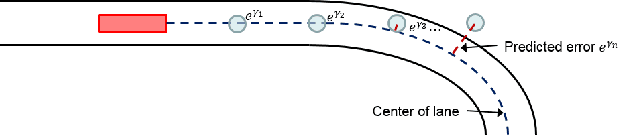

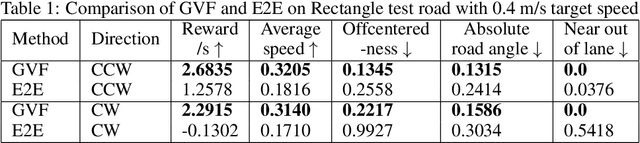

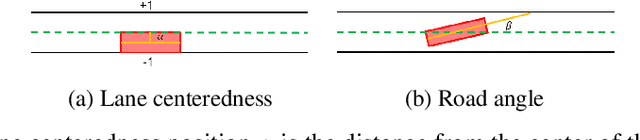

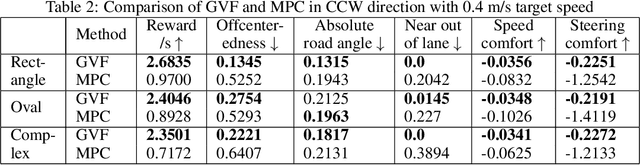

Reinforcement learning using a novel predictive representation is applied to autonomous driving to accomplish the task of driving between lane markings where substantial benefits in performance and generalization are observed on unseen test roads in both simulation and on a real Jackal robot. The novel predictive representation is learned by general value functions (GVFs) to provide out-of-policy, or counter-factual, predictions of future lane centeredness and road angle that form a compact representation of the state of the agent improving learning in both online and offline reinforcement learning to learn to drive an autonomous vehicle with methods that generalizes well to roads not in the training data. Experiments in both simulation and the real-world demonstrate that predictive representations in reinforcement learning improve learning efficiency, smoothness of control and generalization to roads that the agent was never shown during training, including damaged lane markings. It was found that learning a predictive representation that consists of several predictions over different time scales, or discount factors, improves the performance and smoothness of the control substantially. The Jackal robot was trained in a two step process where the predictive representation is learned first followed by a batch reinforcement learning algorithm (BCQ) from data collected through both automated and human-guided exploration in the environment. We conclude that out-of-policy predictive representations with GVFs offer reinforcement learning many benefits in real-world problems.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge