Kilian Kleeberger

Towards Packaging Unit Detection for Automated Palletizing Tasks

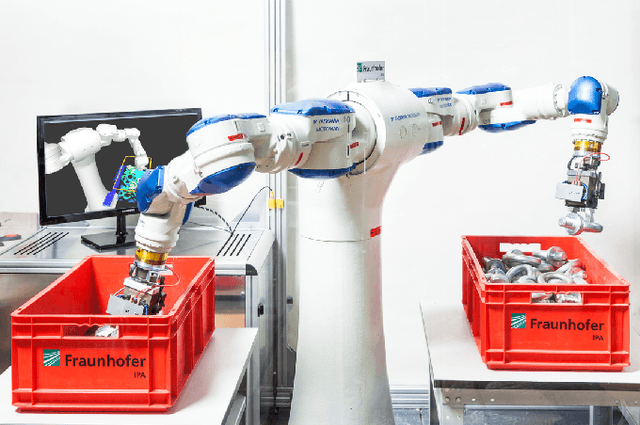

Aug 11, 2023

Abstract:For various automated palletizing tasks, the detection of packaging units is a crucial step preceding the actual handling of the packaging units by an industrial robot. We propose an approach to this challenging problem that is fully trained on synthetically generated data and can be robustly applied to arbitrary real world packaging units without further training or setup effort. The proposed approach is able to handle sparse and low quality sensor data, can exploit prior knowledge if available and generalizes well to a wide range of products and application scenarios. To demonstrate the practical use of our approach, we conduct an extensive evaluation on real-world data with a wide range of different retail products. Further, we integrated our approach in a lab demonstrator and a commercial solution will be marketed through an industrial partner.

Precise Object Placement with Pose Distance Estimations for Different Objects and Grippers

Oct 03, 2021

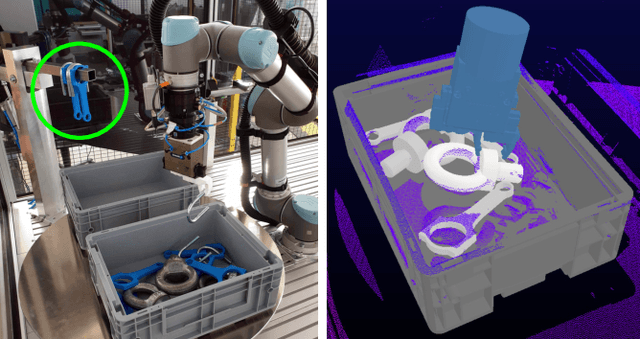

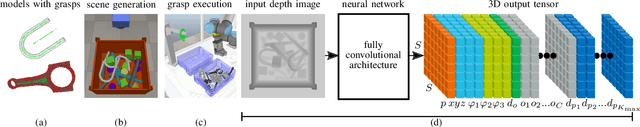

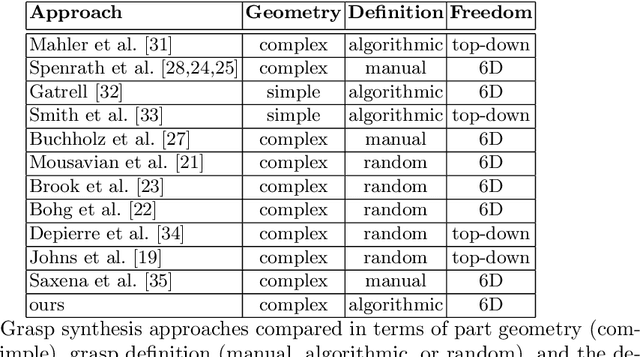

Abstract:This paper introduces a novel approach for the grasping and precise placement of various known rigid objects using multiple grippers within highly cluttered scenes. Using a single depth image of the scene, our method estimates multiple 6D object poses together with an object class, a pose distance for object pose estimation, and a pose distance from a target pose for object placement for each automatically obtained grasp pose with a single forward pass of a neural network. By incorporating model knowledge into the system, our approach has higher success rates for grasping than state-of-the-art model-free approaches. Furthermore, our method chooses grasps that result in significantly more precise object placements than prior model-based work.
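
The abstract above does not define its pose distance, so the following is only a generic sketch of how a distance between two 6D poses can be computed: the Euclidean distance between the translations plus a weighted rotation angle between the orientations. The function names, the `rot_weight` parameter, and the pose layout (a translation tuple paired with a 3x3 rotation matrix) are assumptions made for illustration, and the sketch ignores object symmetries.

```python
import math

def rotation_z(angle_rad):
    """Rotation matrix for a rotation about the z-axis (helper for the example)."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def rotation_angle_between(r_a, r_b):
    """Angle (radians) of the relative rotation between two rotation matrices."""
    # trace(R_a^T R_b) equals the element-wise product summed over all entries.
    trace = sum(r_a[i][j] * r_b[i][j] for i in range(3) for j in range(3))
    # Clamp to [-1, 1] to guard against rounding errors before acos.
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.acos(cos_angle)

def pose_distance(pose_a, pose_b, rot_weight=0.1):
    """Hypothetical pose distance: translation error plus weighted rotation angle."""
    (t_a, r_a), (t_b, r_b) = pose_a, pose_b
    return math.dist(t_a, t_b) + rot_weight * rotation_angle_between(r_a, r_b)

# Example: compare a target pose with a pose shifted by 5 cm and rotated by 0.5 rad.
target = ((0.0, 0.0, 0.0), rotation_z(0.0))
reached = ((0.03, 0.04, 0.0), rotation_z(0.5))
print(pose_distance(target, reached))
```

With such a scalar distance available per grasp candidate, a placement-aware selection could simply prefer the candidate with the smallest distance to the target pose; how the network in the paper predicts and uses its distances is not specified here.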

Automatic Grasp Pose Generation for Parallel Jaw Grippers

Apr 23, 2021

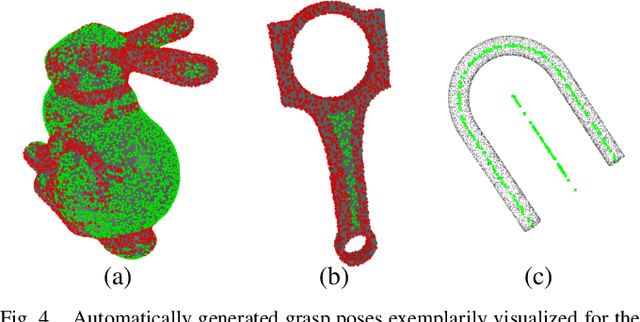

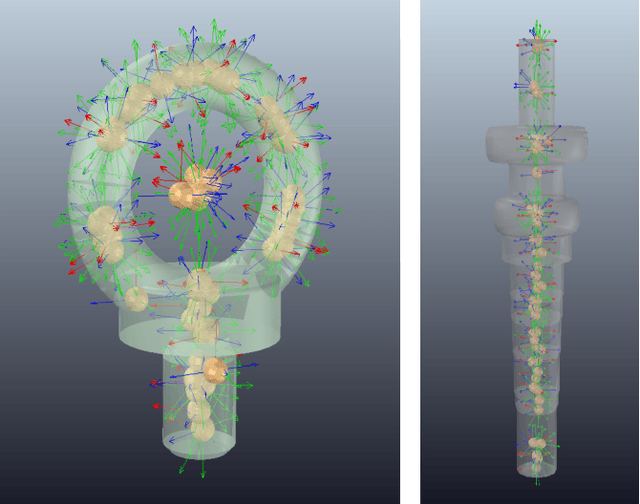

Abstract:This paper presents a novel approach for the automatic offline grasp pose synthesis on known rigid objects for parallel jaw grippers. We use several criteria such as gripper stroke, surface friction, and a collision check to determine suitable 6D grasp poses on an object. In contrast to most available approaches, we neither aim for the best grasp pose nor for as many grasp poses as possible, but for a highly diverse set of grasps distributed all along the object. In order to accomplish this objective, we apply a clustering algorithm to the sampled set of grasps. This allows us to simultaneously reduce the set of grasp pose candidates and maintain a high variance in terms of position and orientation between the individual grasps. We demonstrate that the grasps generated by our method can be successfully used in real-world robotic grasping applications.
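
The abstract above does not name the clustering algorithm, so the sketch below swaps in plain k-means as a stand-in to illustrate the idea of reducing a sampled grasp set while keeping it diverse. Each grasp is represented by a hypothetical feature tuple (for example position, optionally extended by an approach direction), and one representative per cluster is kept. The function name and the deterministic farthest-point initialization are assumptions for illustration.

```python
import math

def kmeans_representatives(features, k, iterations=20):
    """Return sorted indices of up to k samples, one nearest to each k-means centroid."""
    # Deterministic farthest-point initialization: start with the first sample,
    # then repeatedly add the sample farthest from all centroids chosen so far.
    centroids = [features[0]]
    while len(centroids) < k:
        farthest = max(features, key=lambda f: min(math.dist(f, c) for c in centroids))
        centroids.append(farthest)

    for _ in range(iterations):
        # Assignment step: put every sample into the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for f in features:
            nearest = min(range(k), key=lambda i: math.dist(f, centroids[i]))
            clusters[nearest].append(f)
        # Update step: move each centroid to the mean of its members
        # (an empty cluster keeps its previous centroid).
        centroids = [
            tuple(sum(axis) / len(members) for axis in zip(*members)) if members else centroids[i]
            for i, members in enumerate(clusters)
        ]

    # Keep the actual sample closest to each centroid as that cluster's representative.
    chosen = {
        min(range(len(features)), key=lambda j: math.dist(features[j], c))
        for c in centroids
    }
    return sorted(chosen)

# Example: seven sampled grasp positions forming three groups on an object.
grasp_positions = [
    (0.0, 0.0, 0.0), (0.01, 0.0, 0.0), (0.0, 0.01, 0.0),
    (1.0, 0.0, 0.0), (1.01, 0.0, 0.0),
    (0.0, 1.0, 0.0), (0.0, 1.01, 0.0),
]
print(kmeans_representatives(grasp_positions, k=3))
```

Picking an existing sample rather than the centroid itself keeps every retained grasp a pose that already passed the feasibility criteria; the centroid of several valid grasps is not guaranteed to be valid.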

Investigations on Output Parameterizations of Neural Networks for Single Shot 6D Object Pose Estimation

Apr 15, 2021

Abstract:Single shot approaches have demonstrated tremendous success on various computer vision tasks. Finding good parameterizations for 6D object pose estimation remains an open challenge. In this work, we propose different novel parameterizations for the output of the neural network for single shot 6D object pose estimation. Our learning-based approach achieves state-of-the-art performance on two public benchmark datasets. Furthermore, we demonstrate that the pose estimates can be used for real-world robotic grasping tasks without additional ICP refinement.

Transferring Experience from Simulation to the Real World for Precise Pick-And-Place Tasks in Highly Cluttered Scenes

Jan 12, 2021

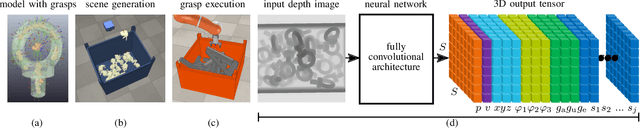

Abstract:In this paper, we introduce a novel learning-based approach for grasping known rigid objects in highly cluttered scenes and precisely placing them based on depth images. Our Placement Quality Network (PQ-Net) estimates the object pose and the quality for each automatically generated grasp pose for multiple objects simultaneously at 92 fps in a single forward pass of a neural network. All grasping and placement trials are executed in a physics simulation and the gained experience is transferred to the real world using domain randomization. We demonstrate that our policy successfully transfers to the real world. PQ-Net outperforms other model-free approaches in terms of grasping success rate and automatically scales to new objects of arbitrary symmetry without any human intervention.
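
The abstract above mentions domain randomization without listing which properties are randomized. As a rough illustration only, the sketch below perturbs a simulated depth image with Gaussian noise and random pixel dropout, two perturbations commonly used to mimic real depth sensors. The function name, the parameters `noise_std` and `dropout_prob`, and the list-of-rows image layout are assumptions, not details from the paper.

```python
import random

def randomize_depth_image(depth, noise_std=0.002, dropout_prob=0.05, seed=None):
    """Return a perturbed copy of a depth image given as a list of rows (metres)."""
    rng = random.Random(seed)  # a seed makes the perturbation reproducible
    randomized = []
    for row in depth:
        new_row = []
        for value in row:
            if rng.random() < dropout_prob:
                # Simulate a missing measurement, encoded here as depth 0.
                new_row.append(0.0)
            else:
                # Add zero-mean Gaussian noise and keep the depth non-negative.
                new_row.append(max(0.0, value + rng.gauss(0.0, noise_std)))
        randomized.append(new_row)
    return randomized

# Example: draw a fresh perturbation of the same synthetic scene per training sample.
clean = [[1.0, 1.2], [0.8, 0.9]]
print(randomize_depth_image(clean, seed=1))
```

Drawing new random parameters for every training sample exposes the network to a wide range of sensor characteristics, which is the general intent of domain randomization.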

Single Shot 6D Object Pose Estimation

Apr 27, 2020

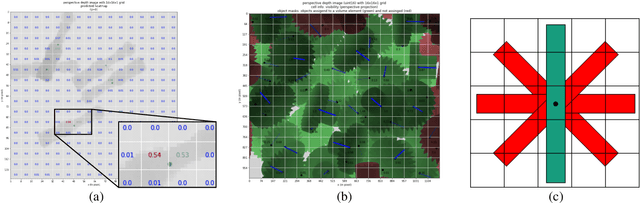

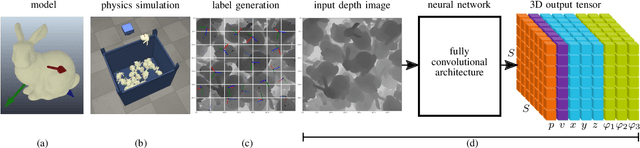

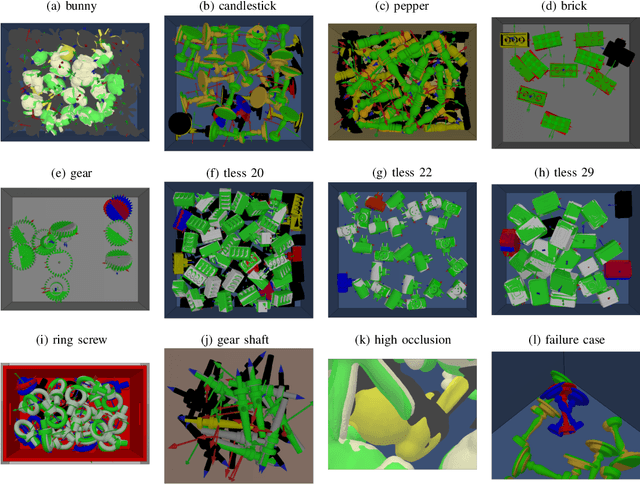

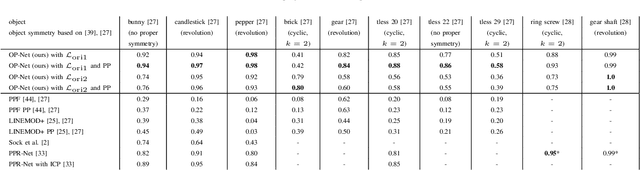

Abstract:In this paper, we introduce a novel single shot approach for 6D object pose estimation of rigid objects based on depth images. For this purpose, a fully convolutional neural network is employed, where the 3D input data is spatially discretized and pose estimation is considered as a regression task that is solved locally on the resulting volume elements. With 65 fps on a GPU, our Object Pose Network (OP-Net) is extremely fast, is optimized end-to-end, and estimates the 6D pose of multiple objects in the image simultaneously. Our approach does not require manually 6D pose-annotated real-world datasets and transfers to the real world, despite being trained entirely on synthetic data. The proposed method is evaluated on public benchmark datasets, where we demonstrate that it significantly outperforms state-of-the-art methods.
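
The spatial discretization mentioned above can be pictured with a minimal sketch: a 3D point (for example an object origin) is mapped to the volume element that contains it, and its position is expressed as a local offset inside that element, which is the kind of quantity a per-element regressor could predict. The function name, the uniform cubic `cell_size`, and the normalized offset are assumptions for illustration and not the network's documented output format.

```python
import math

def to_volume_element(point, volume_origin, cell_size):
    """Return (cell index, offset inside the cell in [0, 1)) for a 3D point."""
    index, offset = [], []
    for p, o in zip(point, volume_origin):
        scaled = (p - o) / cell_size   # position measured in units of cells
        i = math.floor(scaled)         # integer index of the containing cell
        index.append(i)
        offset.append(scaled - i)      # fractional position inside that cell
    return tuple(index), tuple(offset)

# Example: a point in a volume with 10 cm cells whose corner is at the origin.
cell, local = to_volume_element((0.25, 0.05, 0.31), (0.0, 0.0, 0.0), 0.1)
print(cell, local)
```

Regressing a bounded local offset per volume element, rather than an absolute position for the whole scene, is one way to let a fully convolutional network handle several objects in one forward pass.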

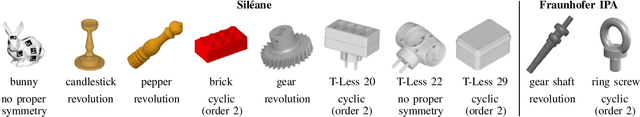

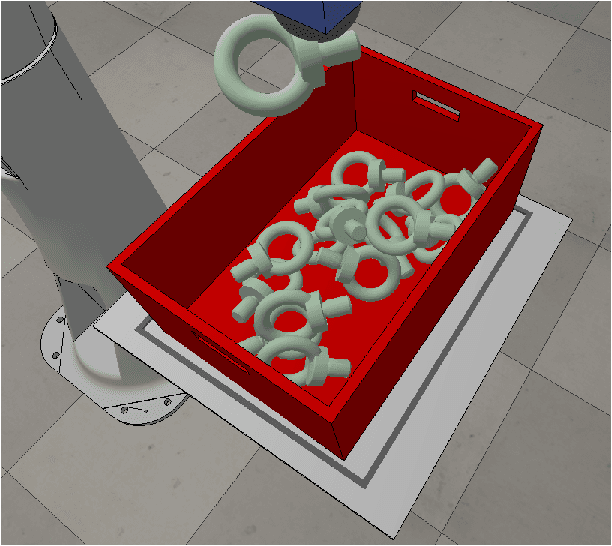

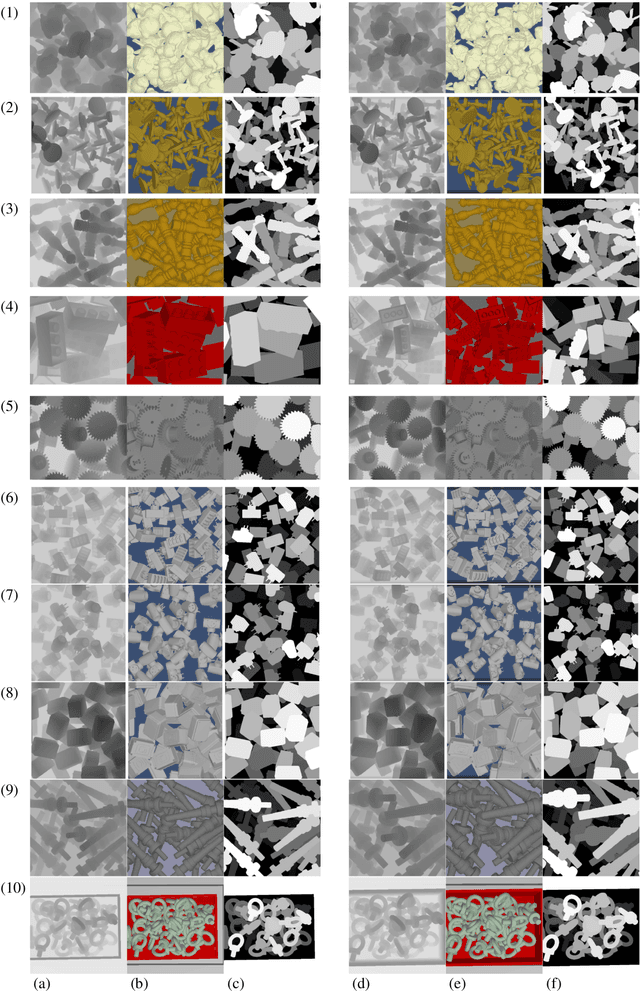

Large-scale 6D Object Pose Estimation Dataset for Industrial Bin-Picking

Dec 06, 2019

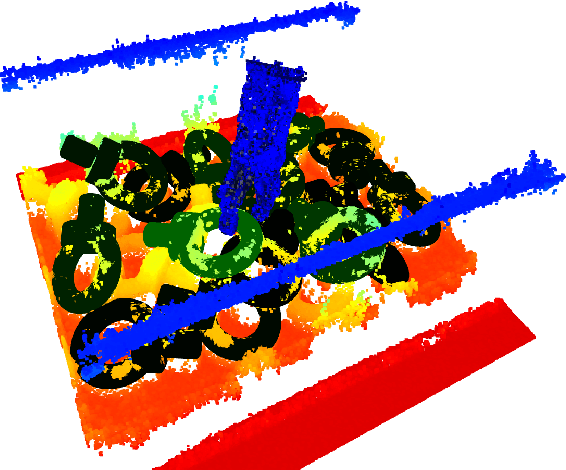

Abstract:In this paper, we introduce a new public dataset for 6D object pose estimation and instance segmentation for industrial bin-picking. The dataset comprises both synthetic and real-world scenes. For both, point clouds, depth images, and annotations comprising the 6D pose (position and orientation), a visibility score, and a segmentation mask for each object are provided. Along with the raw data, a method for precisely annotating real-world scenes is proposed. To the best of our knowledge, this is the first public dataset for 6D object pose estimation and instance segmentation for bin-picking containing sufficiently annotated data for learning-based approaches. Furthermore, it is one of the largest public datasets for object pose estimation in general. The dataset is publicly available at http://www.bin-picking.ai/en/dataset.html.
