Khrystyna Faryna

Medical Imaging AI Competitions Lack Fairness

Dec 19, 2025

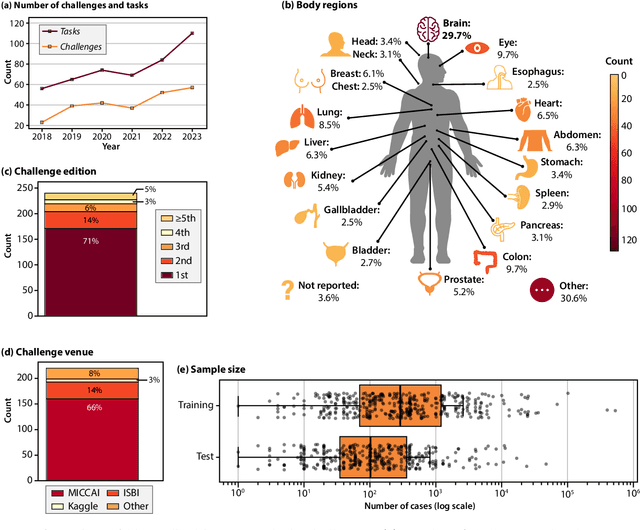

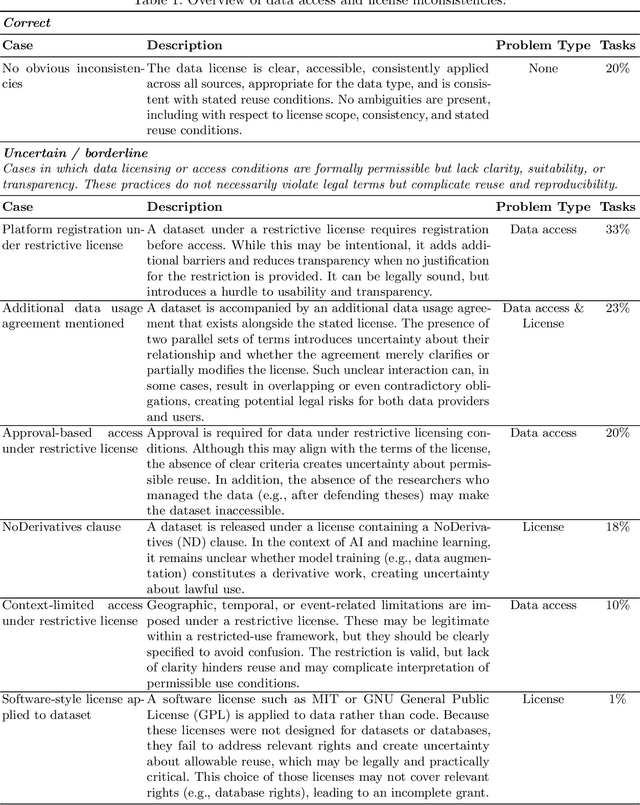

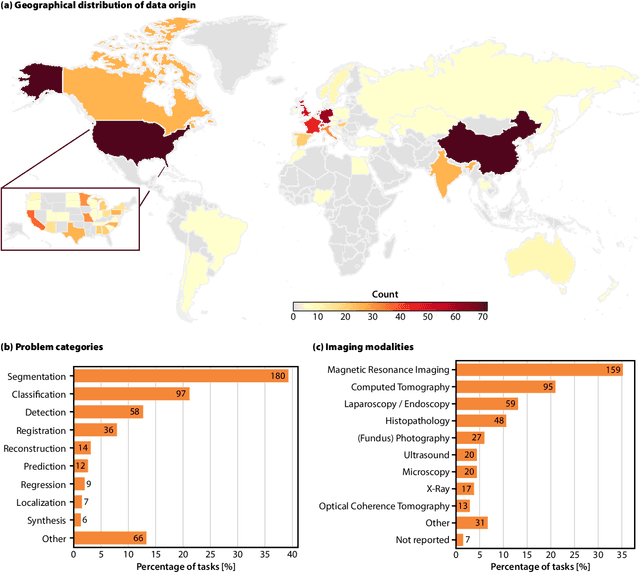

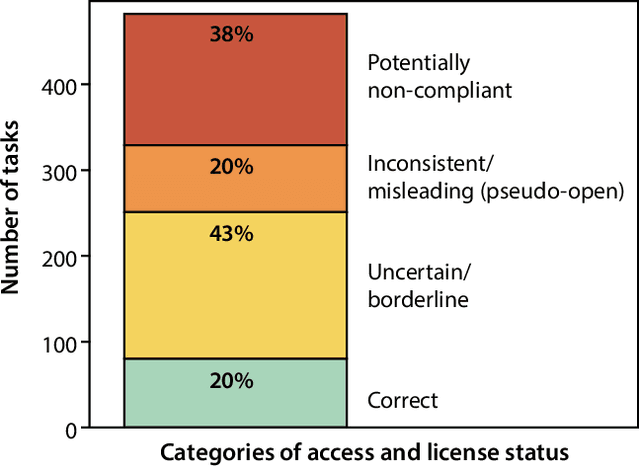

Abstract: Benchmarking competitions are central to the development of artificial intelligence (AI) in medical imaging, defining performance standards and shaping methodological progress. However, it remains unclear whether these benchmarks provide data that are sufficiently representative, accessible, and reusable to support clinically meaningful AI. In this work, we assess fairness along two complementary dimensions: (1) whether challenge datasets are representative of real-world clinical diversity, and (2) whether they are accessible and legally reusable in line with the FAIR principles. To address this question, we conducted a large-scale systematic study of 241 biomedical image analysis challenges comprising 458 tasks across 19 imaging modalities. Our findings show substantial biases in dataset composition, including geographic location-, modality-, and problem type-related biases, indicating that current benchmarks do not adequately reflect real-world clinical diversity. Despite their widespread influence, challenge datasets were frequently constrained by restrictive or ambiguous access conditions, inconsistent or non-compliant licensing practices, and incomplete documentation, limiting reproducibility and long-term reuse. Together, these shortcomings expose foundational fairness limitations in our benchmarking ecosystem and highlight a disconnect between leaderboard success and clinical relevance.

Multi-Label Annotation of Chest Abdomen Pelvis Computed Tomography Text Reports Using Deep Learning

Feb 25, 2021

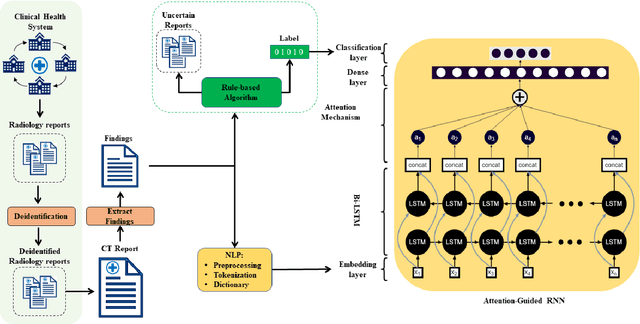

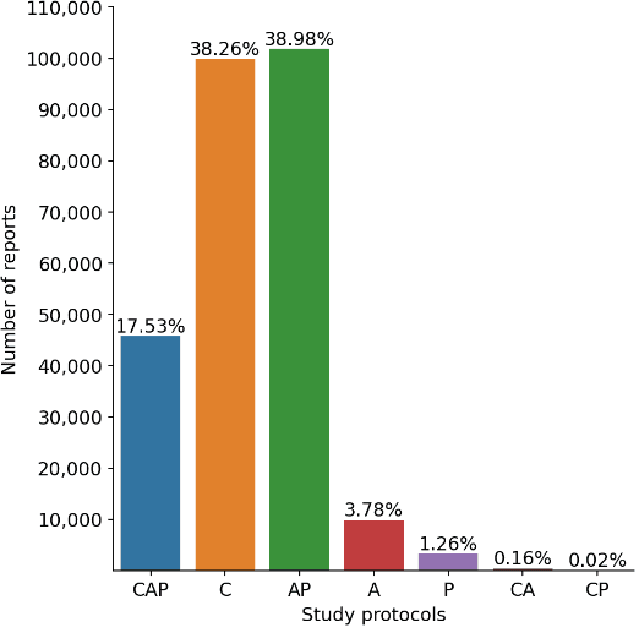

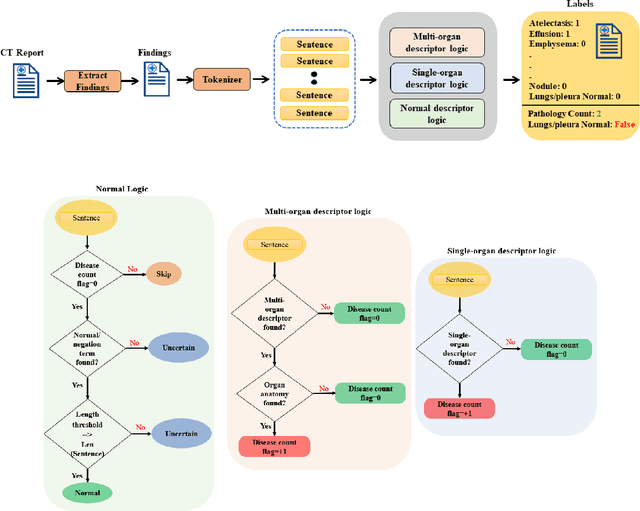

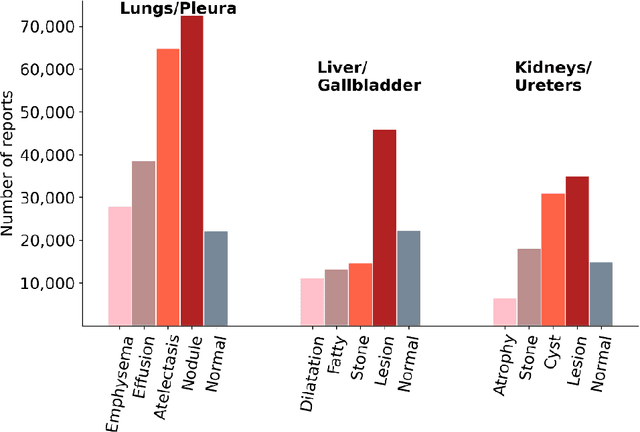

Abstract: This study aimed to develop a high-throughput multi-label annotator for body Computed Tomography (CT) reports that can be applied to a variety of diseases, organs, and cases. First, we used a dictionary approach to develop a rule-based algorithm (RBA) for extraction of disease labels from radiology text reports. We targeted three organ systems (lungs/pleura, liver/gallbladder, kidneys/ureters) with four diseases per system based on their prevalence in our dataset. To expand the algorithm beyond pre-defined keywords, an attention-guided recurrent neural network (RNN) was trained using the RBA-extracted labels to classify the reports as being positive for one or more diseases or normal for each organ system. Confounding effects on model performance were evaluated using random or pre-trained embeddings as well as different sizes of training datasets. Performance was evaluated using the receiver operating characteristic (ROC) area under the curve (AUC) against 2,158 manually obtained labels. Our model extracted disease labels from 261,229 radiology reports of 112,501 unique subjects. Pre-trained models outperformed random embeddings across all diseases. As the training dataset size was reduced, performance was robust except for a few diseases with a relatively small number of cases. Classification AUCs with pre-trained embeddings exceeded 0.95 for all five disease outcomes across all three organ systems. Our label-extracting pipeline was able to encompass a variety of cases and diseases by generalizing beyond strict rules with exceptional accuracy. As a framework, this model can be easily adapted to enable automated labeling of hospital-scale medical data sets for training image-based disease classifiers.
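
The dictionary-based, rule-based labeling step described in this abstract can be illustrated with a minimal sketch. This is not the authors' RBA: the keyword dictionary, the negation cues, and the `label_report` function are all hypothetical, chosen only to show how a report might be mapped to per-organ labels with a "normal" fallback.

```python
import re

# Hypothetical keyword dictionary: organ system -> disease -> trigger terms.
# These terms are illustrative assumptions, not the dictionary used in the paper.
DICTIONARY = {
    "lungs/pleura": {
        "emphysema": ["emphysema"],
        "nodule": ["nodule", "nodules"],
    },
    "kidneys/ureters": {
        "stone": ["calculus", "calculi", "stone", "stones"],
    },
}

# Assumed, deliberately crude negation cues; real reports need richer handling.
NEGATION = re.compile(r"\b(no|without|negative for)\b", re.IGNORECASE)


def label_report(text):
    """Return {organ system: sorted disease labels, or ["normal"] if none matched}."""
    # Split into sentences so a negation only suppresses matches in its own sentence.
    sentences = re.split(r"(?<=[.!?])\s+", text)
    labels = {}
    for organ, diseases in DICTIONARY.items():
        found = set()
        for sentence in sentences:
            if NEGATION.search(sentence):
                continue  # skip sentences that contain a negation cue
            lowered = sentence.lower()
            for disease, terms in diseases.items():
                if any(re.search(r"\b" + re.escape(t) + r"\b", lowered) for t in terms):
                    found.add(disease)
        labels[organ] = sorted(found) if found else ["normal"]
    return labels


report = "There is a 4 mm nodule in the right upper lobe. No renal calculi."
print(label_report(report))
```

In this sketch an organ system with no matched keyword is labeled "normal", which conflates "not mentioned" with "explicitly negative"; labels produced this way would serve only as noisy training targets for a downstream classifier such as the RNN the abstract describes.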

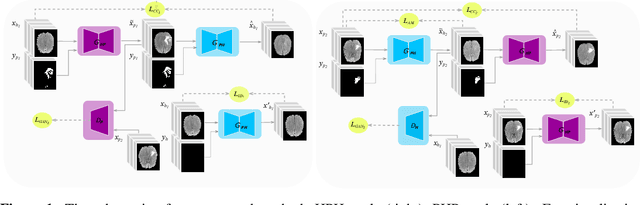

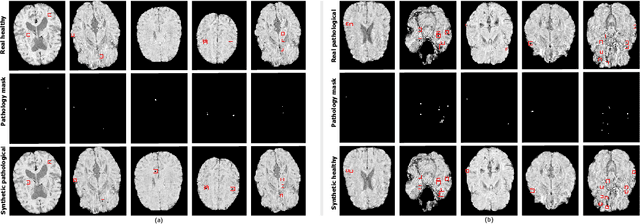

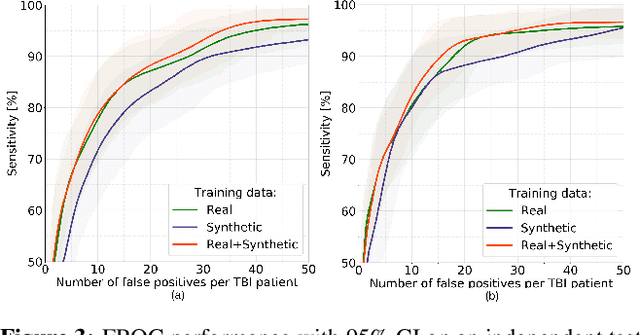

Adversarial cycle-consistent synthesis of cerebral microbleeds for data augmentation

Jan 16, 2021

Abstract: We propose a novel framework for controllable pathological image synthesis for data augmentation. Inspired by CycleGAN, we perform cycle-consistent image-to-image translation between two domains: healthy and pathological. Guided by a semantic mask, an adversarially trained generator synthesizes pathology on a healthy image in the specified location. We demonstrate our approach on an institutional dataset of cerebral microbleeds in traumatic brain injury patients. We utilize synthetic images generated with our method for data augmentation in cerebral microbleeds detection. Enriching the training dataset with synthetic images exhibits the potential to increase detection performance for cerebral microbleeds in traumatic brain injury patients.
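
For orientation, the cycle-consistency term that CycleGAN-style methods optimize can be written as below. The abstract does not give the exact objective, so this is the standard CycleGAN form with an assumed mask conditioning: $G$ maps a healthy image $x$ and a semantic mask $m$ to a pathological image, $F$ maps a pathological image $y$ back to the healthy domain, and $m_y$ denotes the (assumed available) lesion mask of $y$.

$$
\mathcal{L}_{\text{cyc}}(G, F) =
\mathbb{E}_{x, m}\big[\lVert F(G(x, m)) - x \rVert_1\big]
+ \mathbb{E}_{y}\big[\lVert G(F(y), m_y) - y \rVert_1\big]
$$

This term is typically added, with a weighting factor, to the adversarial losses of the two generators, so that a translated image can be mapped back to its source.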

Weakly Supervised 3D Classification of Chest CT using Aggregated Multi-Resolution Deep Segmentation Features

Oct 31, 2020

Abstract: Weakly supervised disease classification of CT imaging suffers from poor localization owing to case-level annotations, where even a positive scan can hold hundreds to thousands of negative slices along multiple planes. Furthermore, although deep learning segmentation and classification models extract distinct combinations of anatomical features from the same target class(es), they are typically seen as two independent processes in a computer-aided diagnosis (CAD) pipeline, with little to no feature reuse. In this research, we propose a medical classifier that leverages the semantic structural concepts learned via multi-resolution segmentation feature maps to guide weakly supervised 3D classification of chest CT volumes. Additionally, a comparative analysis is drawn across two different types of feature aggregation to explore the vast possibilities surrounding feature fusion. Using a dataset of 1593 scans labeled on a case-level basis via a rule-based model, we train a dual-stage convolutional neural network (CNN) to perform organ segmentation and binary classification of four representative diseases (emphysema, pneumonia/atelectasis, mass and nodules) in lungs. The baseline model, with separate stages for segmentation and classification, achieves an AUC of 0.791. Using identical hyperparameters, the connected architecture using static and dynamic feature aggregation improves performance to AUCs of 0.832 and 0.851, respectively. This study advances the field in two key ways. First, case-level report data is used to weakly supervise a 3D CT classifier of multiple, simultaneous diseases for an organ. Second, segmentation and classification models are connected with two different feature aggregation strategies to enhance the classification performance.
