Katja Mombaur

Learning Skateboarding for Humanoid Robots through Massively Parallel Reinforcement Learning

Sep 12, 2024

Abstract:Learning-based methods have proven useful at generating complex motions for robots, including humanoids. Reinforcement learning (RL) has been used to learn locomotion policies, some of which leverage a periodic reward formulation. This work extends the periodic reward formulation of locomotion to skateboarding for the REEM-C robot. Brax/MJX is used to implement the RL problem to achieve fast training. Initial results in simulation are presented with hardware experiments in progress.

Development and Validation of a Modular Sensor-Based System for Gait Analysis and Control in Lower-Limb Exoskeletons

Sep 02, 2024

Abstract:With rapid advancements in exoskeleton hardware technologies, successful assessment and accurate control remain challenging. This study introduces a modular sensor-based system to enhance biomechanical evaluation and control in lower-limb exoskeletons, utilizing advanced sensor technologies and fuzzy logic. We aim to surpass the limitations of current biomechanical evaluation methods confined to laboratories and to address the high costs and complexity of exoskeleton control systems. The system integrates inertial measurement units, force-sensitive resistors, and load cells into instrumented crutches and 3D-printed insoles. These components function both independently and collectively to capture comprehensive biomechanical data, including the anteroposterior center of pressure and crutch ground reaction forces. This data is processed through a central unit using fuzzy logic algorithms for real-time gait phase estimation and exoskeleton control. Validation experiments with three participants, benchmarked against gold-standard motion capture and force plate technologies, demonstrate our system's capability for reliable gait phase detection and precise biomechanical measurements. By offering our designs open-source and integrating cost-effective technologies, this study advances wearable robotics and promotes broader innovation and adoption in exoskeleton research.

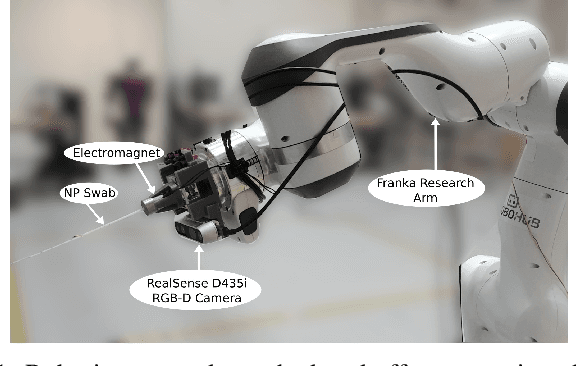

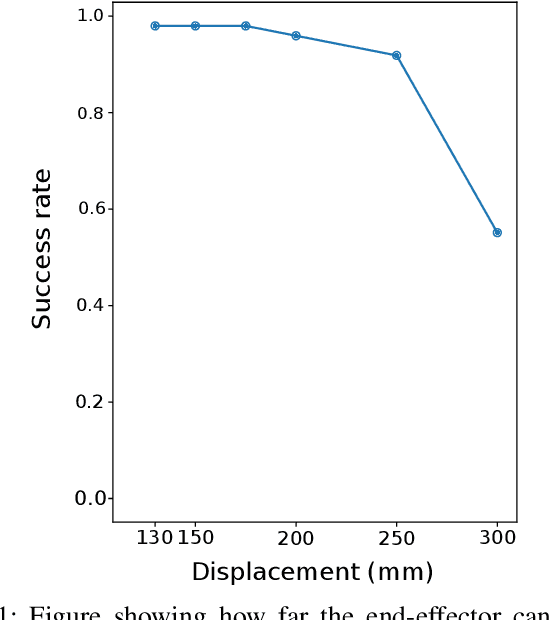

Complete Autonomous Robotic Nasopharyngeal Swab System with Evaluation on a Stochastically Moving Phantom Head

Aug 23, 2024

Abstract:The application of autonomous robotics to close-contact healthcare tasks has a clear role for the future due to its potential to reduce infection risks to staff and improve clinical efficiency. Nasopharyngeal (NP) swab sample collection for diagnosing upper-respiratory illnesses is one type of close-contact task that is interesting for robotics due to the dexterity requirements and the unobservability of the nasal cavity. We propose a control system that performs the test using a collaborative manipulator arm with an instrumented end-effector to take visual and force measurements, under the scenario that the patient is unrestrained and the tools are general enough to be applied to other close-contact tasks. The system employs a visual servo controller to align the swab with the nostrils. A compliant joint velocity controller inserts the swab along a trajectory optimized in a simulation environment and also reacts to measured forces applied to the swab. Additional subsystems include a fuzzy logic system for detecting when the swab reaches the nasopharynx and a method for detaching the swab and aborting the procedure if safety criteria are violated. The system is evaluated using a second robotic arm that holds a nasal cavity phantom and simulates the natural head motions that could occur during the procedure. Through extensive experiments, we identify controller configurations capable of effectively performing the NP swab test even with significant head motion, which demonstrates the safety and reliability of the system.

Robotic Eye-in-hand Visual Servo Axially Aligning Nasopharyngeal Swabs with the Nasal Cavity

Aug 22, 2024

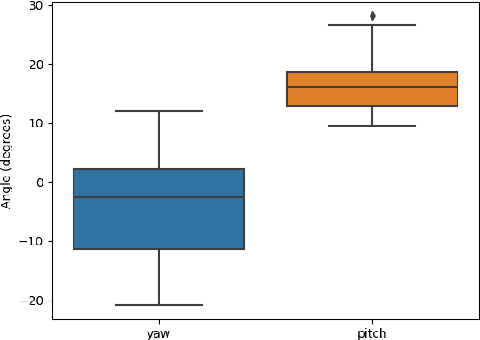

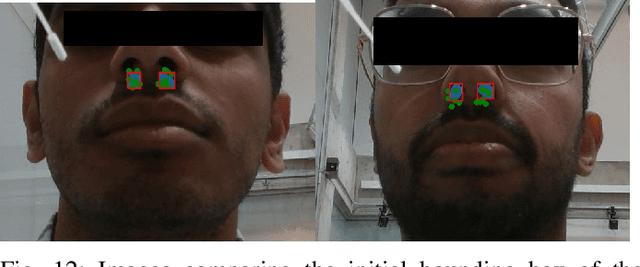

Abstract:The nasopharyngeal (NP) swab test is a method for collecting cultures to diagnose different types of respiratory illnesses, including COVID-19. Delegating this task to robots would be beneficial in terms of reducing infection risks and bolstering the healthcare system, but a critical component of the NP swab test is having the swab aligned properly with the nasal cavity so that it does not cause excessive discomfort or injury by traveling down the wrong passage. Existing research towards robotic NP swabbing typically assumes the patient's head is held within a fixture. This simplifies the alignment problem, but is also dissimilar to clinical scenarios where patients are typically free-standing. Consequently, our work creates a vision-guided pipeline to allow an instrumented robot arm to properly position and orient NP swabs with respect to the nostrils of free-standing patients. The first component of the pipeline is a precomputed joint lookup table to allow the arm to meet the patient's arbitrary position in the designated workspace, while avoiding joint limits. Our pipeline leverages semantic face models from computer vision to estimate the Euclidean pose of the face with respect to a monocular RGB-D camera placed on the end-effector. These estimates are passed into an unscented Kalman filter on manifolds state estimator and a pose-based visual servo control loop to move the swab to the designated pose in front of the nostril. Our pipeline was validated with human trials, featuring a cohort of 25 participants. The system is effective, reaching the nostril for 84% of participants, and our statistical analysis did not find significant demographic biases within the cohort.

Collaborative Robot Arm Inserting Nasopharyngeal Swabs with Admittance Control

Aug 21, 2024

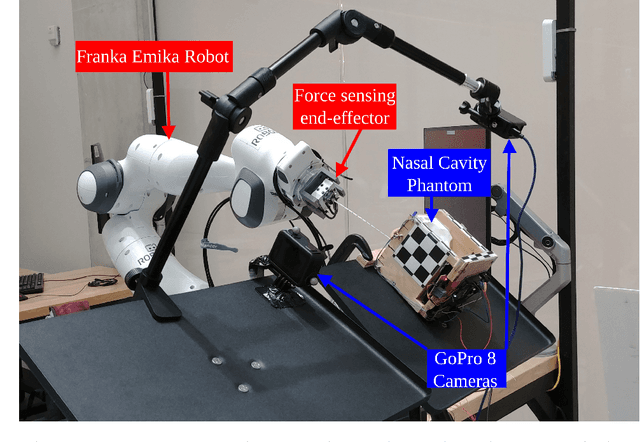

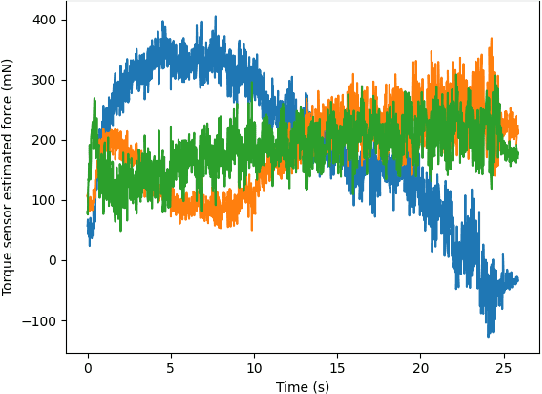

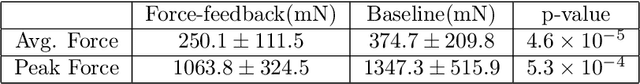

Abstract:The nasopharyngeal (NP) swab sample test, commonly used to detect COVID-19 and other respiratory illnesses, involves moving a swab through the nasal cavity to collect samples from the nasopharynx. While typically this is done by human healthcare workers, there is a significant societal interest in enabling robots to do this test to reduce exposure to patients and to free up human resources. The task is challenging from the robotics perspective because of the dexterity and safety requirements. While other works have implemented specific hardware solutions, our research differentiates itself by using a ubiquitous rigid robotic arm. This work presents a case study where we investigate the strengths and challenges of using a compliant control system to accomplish NP swab tests with such a robotic configuration. To accomplish this, we designed a force-sensing end-effector that integrates with the proposed torque-controlled compliant control loop. We then conducted experiments where the robot inserted NP swabs into a 3D-printed nasal cavity phantom. Ultimately, we found that the compliant control system outperformed a basic position controller and shows promise for human use. However, further efforts are needed to ensure the initial alignment with the nostril and to address head motion.
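As a rough illustration of what an admittance-style compliant law does in general (not the controller from this paper), the sketch below integrates a one-dimensional virtual mass-damper, M dv/dt + D v = f_measured - f_desired, so that a measured contact force is turned into a commanded velocity. The function names, the parameter values, and the single-axis simplification are all assumptions made for this example.

```python
def admittance_step(velocity, force_measured, force_desired, mass, damping, dt):
    """One explicit-Euler step of M*dv/dt + D*v = f_measured - f_desired.

    All names and parameters here are hypothetical, chosen for illustration.
    """
    force_error = force_measured - force_desired
    acceleration = (force_error - damping * velocity) / mass
    return velocity + acceleration * dt


def simulate(force_measured, steps, mass=1.0, damping=20.0, dt=0.001):
    """Hold a constant measured force and return the final commanded velocity."""
    velocity = 0.0
    for _ in range(steps):
        velocity = admittance_step(velocity, force_measured, 0.0, mass, damping, dt)
    return velocity


if __name__ == "__main__":
    # Under a constant force the velocity settles near force / damping.
    print(simulate(2.0, 1000))
```

With these assumed values the commanded velocity settles close to force divided by damping, which is why a larger virtual damping makes the arm yield more slowly to contact forces.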

Slow waltzing with REEM-C: a physical-social human-robot interaction study of robot-to-human communication

Aug 09, 2024

Abstract:Humans often work closely together and relay a wealth of information through physical interaction. Robots, on the other hand, have not yet been developed to work similarly closely with humans, and to effectively convey information when engaging in physical human-robot interaction (pHRI). This currently limits the potential of physical human-robot collaboration to solve real-world problems. This paper investigates the question of how to establish clear and intuitive robot-to-human communication, while ensuring human comfort during pHRI. We approach this question from the perspective of a leader-follower scenario, in which a full-body humanoid robot leads a slow waltz dance by signaling the next steps to a human partner. This is achieved through the development of a whole-body control framework combining admittance and impedance control, which allows for different communication modalities including haptic, visual, and audio signals. Participant experiments allowed us to validate the performance of the controller, and to understand what types of communication work better in terms of effectiveness and comfort during robot-led pHRI.

Learning Velocity-based Humanoid Locomotion: Massively Parallel Learning with Brax and MJX

Jul 06, 2024

Abstract:Humanoid locomotion is a key skill to bring humanoids out of the lab and into the real world. Many motion generation methods for locomotion have been proposed, including reinforcement learning (RL). RL locomotion policies offer great versatility and generalizability along with the ability to improve over time through new experience. This work presents a velocity-based RL locomotion policy for the REEM-C robot. The policy uses a periodic reward formulation and is implemented in Brax/MJX for fast training. Simulation results for the policy are demonstrated, with experiments in progress.

Estimating speaker direction on a humanoid robot with binaural acoustic signals

Jul 22, 2023

Abstract:To achieve human-like behaviour during speech interactions, it is necessary for a humanoid robot to estimate the location of a human talker. Here, we present a method to optimize the parameters used for the direction of arrival (DOA) estimation, while also considering real-time applications for human-robot interaction scenarios. This method is applied to a binaural sound source localization framework on a humanoid robotic head. Real data is collected and annotated for this work. Optimizations are performed via a brute force method and a Bayesian model-based method. Results are validated and discussed, and effects on latency for real-time use are also explored.
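For readers unfamiliar with binaural DOA estimation, the following minimal sketch shows one textbook approach: find the inter-channel lag that maximizes the cross-correlation, then convert that time difference to an angle with a far-field model, theta = asin(c * tau / d). It is not the framework or the parameter optimization described in the abstract; the function names, the microphone spacing, the sample rate, and the sign convention (positive lag means the right channel is delayed) are assumptions for illustration.

```python
import math


def best_lag(left, right, max_lag):
    """Lag in samples that maximizes sum(left[n] * right[n + lag])."""
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for n, x in enumerate(left):
            m = n + lag
            if 0 <= m < len(right):
                score += x * right[m]
        if score > best_score:
            best, best_score = lag, score
    return best


def doa_degrees(lag, sample_rate, mic_distance, speed_of_sound=343.0):
    """Far-field angle from a sample lag; the asin argument is clipped to [-1, 1]."""
    s = speed_of_sound * (lag / sample_rate) / mic_distance
    s = max(-1.0, min(1.0, s))
    return math.degrees(math.asin(s))


if __name__ == "__main__":
    left = [0, 0, 1, 0, 0, 0, 0, 0]
    right = [0, 0, 0, 0, 1, 0, 0, 0]  # same pulse, delayed by two samples
    lag = best_lag(left, right, max_lag=3)
    print(lag, doa_degrees(lag, sample_rate=16000, mic_distance=0.15))
```

Quantities such as `max_lag`, the assumed microphone spacing, and the analysis window length are the kind of parameters whose choice affects both accuracy and latency in a scheme like this.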

Optimizing wearable assistive devices with neuromuscular models and optimal control

Apr 09, 2018

Abstract:The coupling of human movement dynamics with the function and design of wearable assistive devices is vital to better understand the interaction between the two. Advanced neuromuscular models and optimal control formulations provide the possibility to study and improve this interaction. In addition, optimal control can also be used to generate predictive simulations that produce novel movements for the human model under varying optimization criteria.

Motion optimization and parameter identification for a human and lower-back exoskeleton model

Mar 15, 2018

Abstract:Designing an exoskeleton to reduce the risk of low-back injury during lifting is challenging. Computational models of the human-robot system coupled with predictive movement simulations can help to simplify this design process. Here, we present a study that models the interaction between a human model actuated by muscles and a lower-back exoskeleton. We provide a computational framework for identifying the spring parameters of the exoskeleton using an optimal control approach and forward-dynamics simulations. This is applied to generate dynamically consistent bending and lifting movements in the sagittal plane. Our computations are able to predict motions and forces of the human and exoskeleton that are within the torque limits of a subject. The identified exoskeleton could also yield a considerable reduction of the peak lower-back torques as well as the cumulative lower-back load during the movements. This work is relevant to the research communities working on human-robot interaction, and can be used as a basis for a better human-centered design process.
