Robotic Eye-in-hand Visual Servo Axially Aligning Nasopharyngeal Swabs with the Nasal Cavity

Paper and Code

Aug 22, 2024

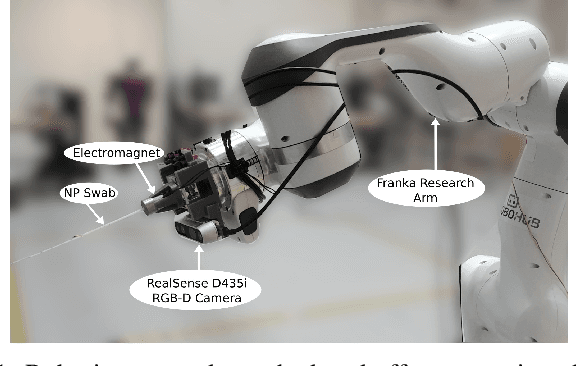

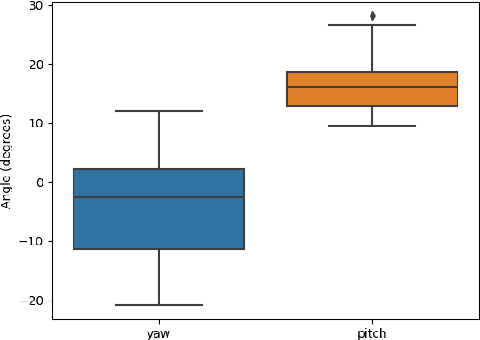

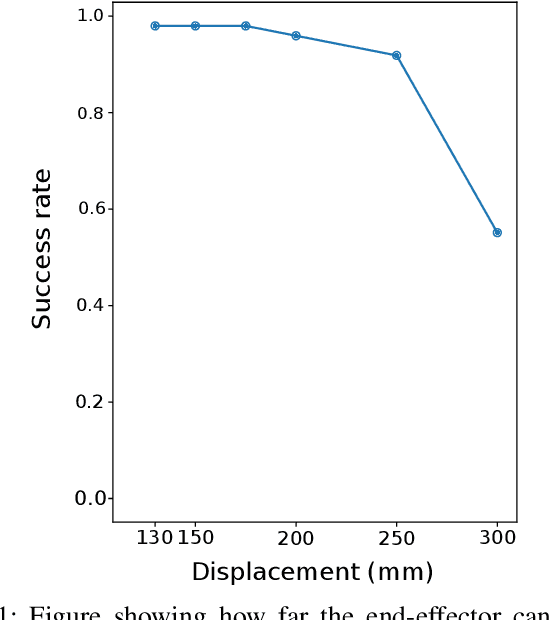

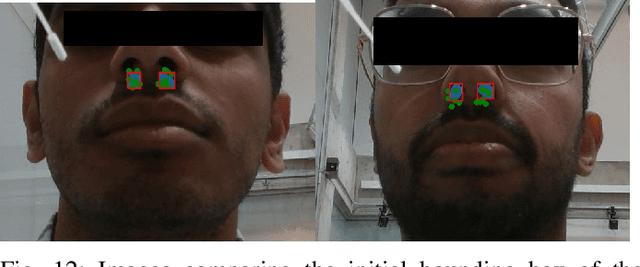

The nasopharyngeal (NP) swab test is a method for collecting cultures to diagnose various respiratory illnesses, including COVID-19. Delegating this task to robots would reduce infection risks and bolster the healthcare system, but a critical component of the NP swab test is aligning the swab properly with the nasal cavity so that it does not cause excessive discomfort or injury by traveling down the wrong passage. Existing research on robotic NP swabbing typically assumes the patient's head is held within a fixture. This simplifies the alignment problem, but it differs from clinical scenarios, where patients are typically free-standing. Consequently, our work creates a vision-guided pipeline that allows an instrumented robot arm to properly position and orient NP swabs with respect to the nostrils of free-standing patients. The first component of the pipeline is a precomputed joint lookup table that allows the arm to meet the patient's arbitrary position in the designated workspace while avoiding joint limits. The pipeline then leverages semantic face models from computer vision to estimate the Euclidean pose of the face with respect to a monocular RGB-D camera placed on the end-effector. These estimates are passed into an unscented Kalman filter on manifolds state estimator and a pose-based visual servo control loop that moves the swab to the designated pose in front of the nostril. The pipeline was validated in human trials with a cohort of 25 participants. The system reached the nostril for 84% of participants, and our statistical analysis did not find significant demographic biases within the cohort.
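To make the pose-based visual servo step concrete, the following is a minimal sketch of a generic proportional pose-based control law, not the authors' implementation. It assumes the current and desired end-effector poses are already expressed in a common base frame as a rotation matrix and a position vector, and it ignores the state estimator, the robot's kinematics, and joint limits. The function names (`so3_log`, `so3_exp`, `pbvs_twist`, `simulate`), the gain, and the time step are all hypothetical choices made for illustration.

```python
import numpy as np


def so3_log(R):
    """Rotation matrix -> axis-angle vector (assumes the angle is below pi)."""
    cos_theta = np.clip((np.trace(R) - 1.0) / 2.0, -1.0, 1.0)
    theta = np.arccos(cos_theta)
    if theta < 1e-9:
        return np.zeros(3)
    axis = np.array([R[2, 1] - R[1, 2],
                     R[0, 2] - R[2, 0],
                     R[1, 0] - R[0, 1]]) / (2.0 * np.sin(theta))
    return theta * axis


def so3_exp(w):
    """Axis-angle vector -> rotation matrix (Rodrigues' formula)."""
    theta = np.linalg.norm(w)
    if theta < 1e-12:
        return np.eye(3)
    k = w / theta
    K = np.array([[0.0, -k[2], k[1]],
                  [k[2], 0.0, -k[0]],
                  [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * (K @ K)


def pbvs_twist(R_cur, p_cur, R_des, p_des, gain=1.0):
    """Proportional law: velocities proportional to the pose error (base frame)."""
    v = gain * (p_des - p_cur)                # linear velocity command
    w = gain * so3_log(R_des @ R_cur.T)       # angular velocity command
    return v, w


def simulate(R_cur, p_cur, R_des, p_des, gain=1.0, dt=0.05, steps=200):
    """Integrate the commanded twist, assuming the arm tracks it perfectly."""
    for _ in range(steps):
        v, w = pbvs_twist(R_cur, p_cur, R_des, p_des, gain)
        p_cur = p_cur + v * dt
        R_cur = so3_exp(w * dt) @ R_cur
    return R_cur, p_cur


if __name__ == "__main__":
    # Hypothetical target pose: 0.5 rad about z, offset from the start pose.
    R_goal = so3_exp(np.array([0.0, 0.0, 0.5]))
    p_goal = np.array([0.3, -0.1, 0.2])
    R_end, p_end = simulate(np.eye(3), np.zeros(3), R_goal, p_goal)
    print("position error:", np.linalg.norm(p_end - p_goal))
    print("rotation error:", np.linalg.norm(so3_log(R_goal @ R_end.T)))
```

With this law both the translational and rotational errors shrink by a factor of `1 - gain * dt` per step, which is why the sketch converges smoothly. In a real system the desired pose would come from the filtered face-pose estimate and would move with the patient.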
