Karin Müller

ObjectFinder: Open-Vocabulary Assistive System for Interactive Object Search by Blind People

Dec 04, 2024

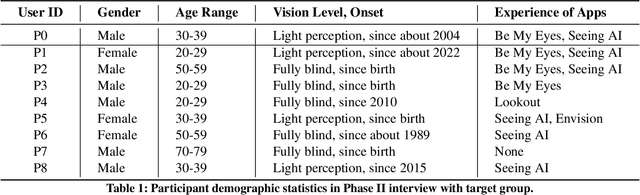

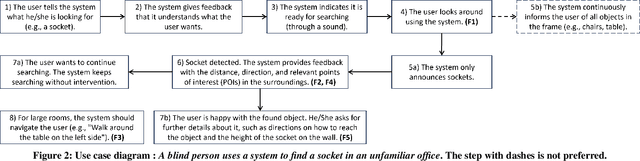

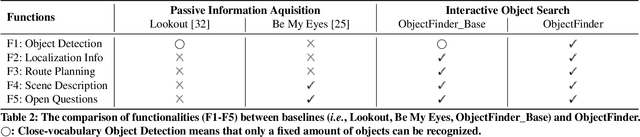

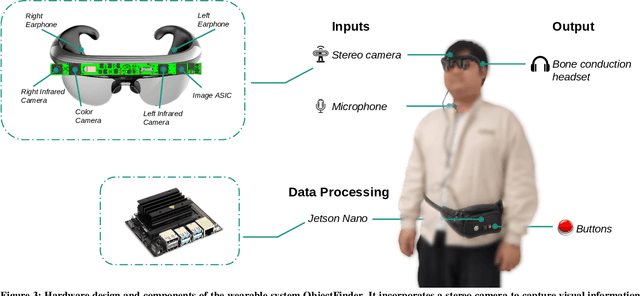

Abstract: Assistive technology can be leveraged by blind people when searching for objects in their daily lives. We created ObjectFinder, an open-vocabulary interactive object-search prototype, which combines object detection with scene description and navigation. It enables blind persons to detect and navigate to objects of their choice. Our approach used co-design for the development of the prototype. We further conducted need-finding interviews to better understand challenges in object search, followed by a study with the ObjectFinder prototype in a laboratory setting simulating a living room and an office, with eight blind users. Additionally, we compared the prototype with BeMyEyes and Lookout for object search. We found that most participants felt more independent with ObjectFinder and preferred it over the baselines when deployed on more efficient hardware, as it enhances mental mapping and allows for active target definition. Moreover, we identified factors for future directions for the development of object-search systems.

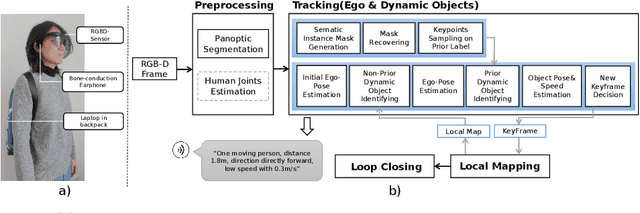

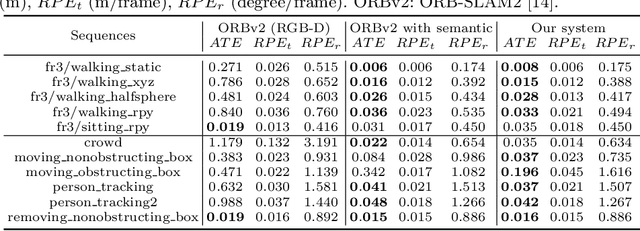

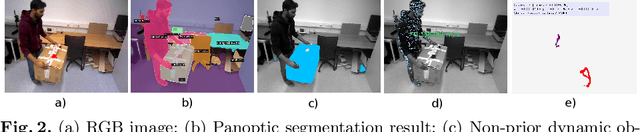

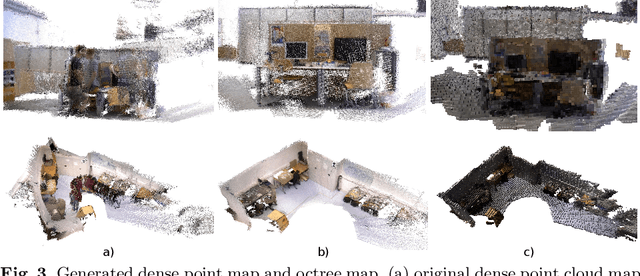

Indoor Navigation Assistance for Visually Impaired People via Dynamic SLAM and Panoptic Segmentation with an RGB-D Sensor

Apr 03, 2022

Abstract: Exploring an unfamiliar indoor environment and avoiding obstacles is challenging for visually impaired people. Several current approaches avoid static obstacles by mapping indoor scenes. To distinguish dynamic obstacles, we propose an assistive system with an RGB-D sensor that detects dynamic information in a scene. Once the system captures an image, panoptic segmentation is performed to obtain prior information about dynamic objects. The poses of the user are estimated from sparse feature points extracted from the images together with the depth information. After this ego-motion estimation, dynamic objects can be identified and tracked. The poses and speeds of the tracked dynamic objects are then estimated and passed to the user through acoustic feedback.
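The role of ego-motion estimation in the last step can be illustrated with a small sketch. It is not the authors' implementation: the function names, the 4x4 `world_from_camera` pose convention, and the use of a single 3D point per object are all assumptions made for illustration. The idea is that an object's position observed in the camera frame is first moved into a fixed world frame using the estimated camera pose, so that the user's own motion is not mistaken for object motion.

```python
import math
from typing import List, Sequence

Matrix = Sequence[Sequence[float]]  # 4x4 homogeneous transform (assumed convention)
Point = Sequence[float]             # (x, y, z) in metres


def to_world(world_from_camera: Matrix, point_camera: Point) -> List[float]:
    """Map a 3D point from the camera frame into the world frame."""
    x, y, z = point_camera
    return [row[0] * x + row[1] * y + row[2] * z + row[3]
            for row in world_from_camera[:3]]


def object_speed(pose_prev: Matrix, point_prev: Point,
                 pose_curr: Matrix, point_curr: Point, dt: float) -> float:
    """Speed (m/s) of a tracked object after compensating for camera motion."""
    if dt <= 0:
        raise ValueError("dt must be positive")
    p0 = to_world(pose_prev, point_prev)
    p1 = to_world(pose_curr, point_curr)
    return math.dist(p0, p1) / dt


# Hypothetical poses: the camera starts at the origin, then moves 1 m along z.
identity = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]
moved = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 1], [0, 0, 0, 1]]

# A static object appears 1 m closer in the camera frame but has zero world speed.
static_speed = object_speed(identity, (0, 0, 3), moved, (0, 0, 2), dt=0.5)
# An object that keeps its distance to the moving camera is itself moving.
moving_speed = object_speed(identity, (0, 0, 3), moved, (0, 0, 3), dt=0.5)
```

In this toy setup the static object gets a speed of zero even though its camera-frame position changed, which is the distinction between static and dynamic obstacles that the abstract describes.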

Trans4Trans: Efficient Transformer for Transparent Object and Semantic Scene Segmentation in Real-World Navigation Assistance

Aug 20, 2021

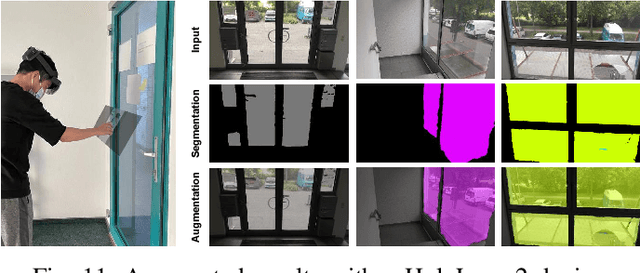

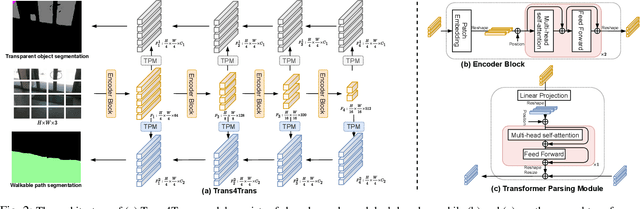

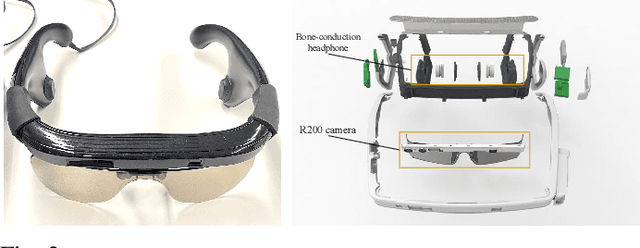

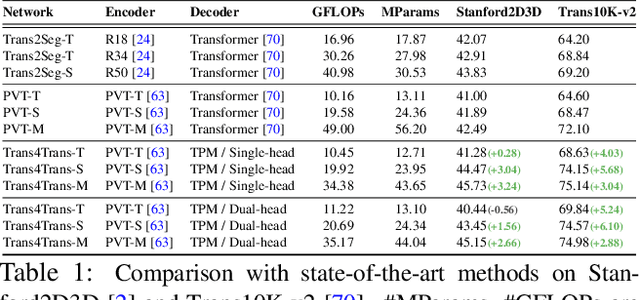

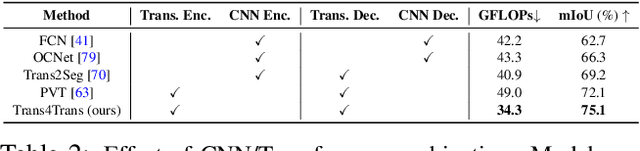

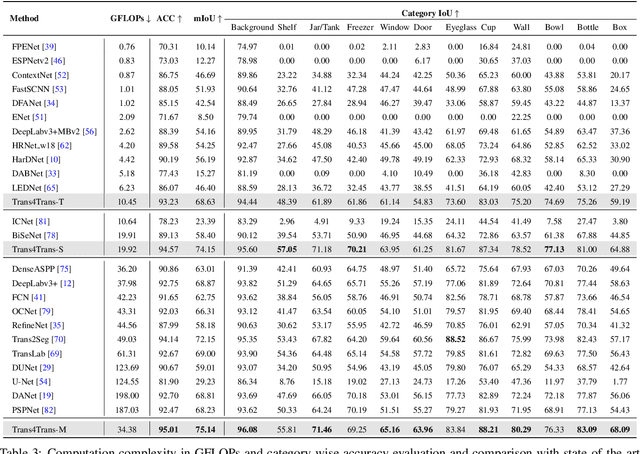

Abstract: Transparent objects, such as glass walls and doors, constitute architectural obstacles hindering the mobility of people with low vision or blindness. For instance, the open space behind glass doors is inaccessible unless it is correctly perceived and interacted with. However, traditional assistive technologies rarely cover the segmentation of these safety-critical transparent objects. In this paper, we build a wearable system with a novel dual-head Transformer for Transparency (Trans4Trans) perception model, which can segment general and transparent objects. The two dense segmentation results are further combined with depth information in the system to help users navigate safely and to assist them in negotiating transparent obstacles. We propose a lightweight Transformer Parsing Module (TPM) to perform multi-scale feature interpretation in the transformer-based decoder. With TPM, the two decoders can learn jointly from their corresponding datasets to improve robustness while remaining efficient on a portable GPU, with a negligible increase in computation. The entire Trans4Trans model is constructed in a symmetrical encoder-decoder architecture, which outperforms state-of-the-art methods on the test sets of the Stanford2D3D and Trans10K-v2 datasets, obtaining mIoU of 45.13% and 75.14%, respectively. Through a user study and various pre-tests conducted in indoor and outdoor scenes, the usability and reliability of our assistive system have been extensively verified. The Trans4Trans model also performs strongly on driving-scene datasets. On the Cityscapes, ACDC, and DADA-seg datasets, corresponding to common environments, adverse weather, and traffic accident scenarios, mIoU scores of 81.5%, 76.3%, and 39.2% are obtained, demonstrating its high efficiency and robustness for real-world transportation applications.
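For readers unfamiliar with the mIoU figures quoted above, the following minimal sketch shows how mean intersection-over-union is commonly computed from predicted and ground-truth class labels. It is an illustration only, not the authors' evaluation code: the function name and the flat label lists are assumptions, and benchmark protocols usually accumulate counts over an entire test set and average over a fixed list of dataset classes.

```python
from typing import Sequence


def mean_iou(pred: Sequence[int], target: Sequence[int], num_classes: int) -> float:
    """Mean IoU over the classes that occur in the prediction or the ground truth."""
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    intersection = [0] * num_classes
    pred_count = [0] * num_classes
    target_count = [0] * num_classes
    for p, t in zip(pred, target):
        pred_count[p] += 1
        target_count[t] += 1
        if p == t:
            intersection[p] += 1
    ious = []
    for c in range(num_classes):
        # Union = pixels predicted as c + pixels labelled c - pixels counted twice.
        union = pred_count[c] + target_count[c] - intersection[c]
        if union > 0:  # skip classes absent from both prediction and ground truth
            ious.append(intersection[c] / union)
    return sum(ious) / len(ious) if ious else 0.0


# Toy example with four pixels and two classes (0 = background, 1 = glass).
score = mean_iou(pred=[0, 0, 1, 1], target=[0, 1, 1, 1], num_classes=2)
```

In the toy example, class 0 has IoU 1/2 and class 1 has IoU 2/3, so the mean is 7/12. Averaging per class rather than per pixel means that a rare class, such as a thin glass door, weighs as much as a large background region.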

Trans4Trans: Efficient Transformer for Transparent Object Segmentation to Help Visually Impaired People Navigate in the Real World

Jul 07, 2021

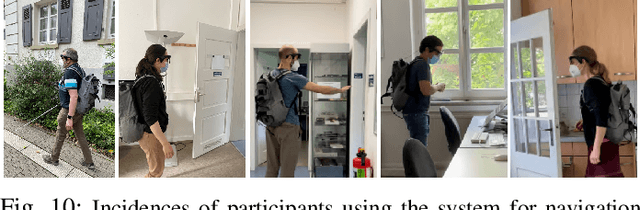

Abstract: Common fully glazed facades and transparent objects present architectural barriers and impede the mobility of people with low vision or blindness. For instance, a path detected behind a glass door is inaccessible unless the door is correctly perceived and reacted to. However, segmenting these safety-critical objects is rarely covered by conventional assistive technologies. To tackle this issue, we construct a wearable system with a novel dual-head Transformer for Transparency (Trans4Trans) model, which is capable of segmenting general and transparent objects and performing real-time wayfinding to help people walk alone more safely. In particular, both decoders, created with our proposed Transformer Parsing Module (TPM), enable effective joint learning from different datasets. In addition, the efficient Trans4Trans model, composed of a symmetric transformer-based encoder and decoder, requires little computational expense and is readily deployed on portable GPUs. Our Trans4Trans model outperforms state-of-the-art methods on the test sets of the Stanford2D3D and Trans10K-v2 datasets and obtains mIoU of 45.13% and 75.14%, respectively. Through various pre-tests and a user study conducted in indoor and outdoor scenarios, the usability and reliability of our assistive system have been extensively verified.