Kathrin Gerling

ObjectFinder: Open-Vocabulary Assistive System for Interactive Object Search by Blind People

Dec 04, 2024

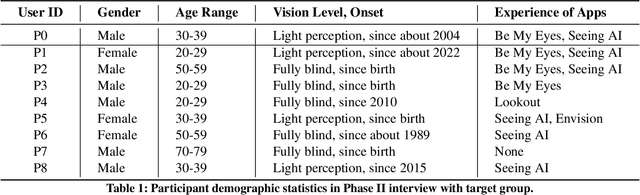

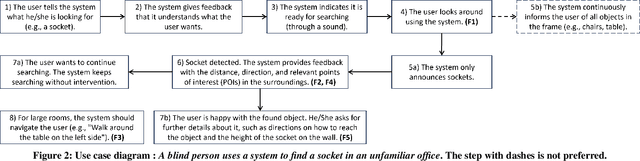

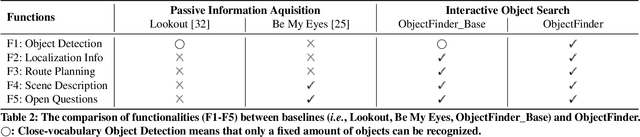

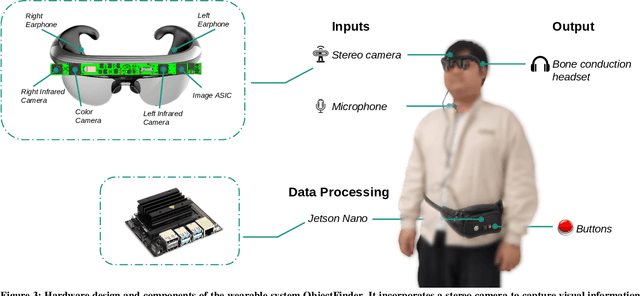

Abstract: Assistive technology can support blind people when searching for objects in their daily lives. We created ObjectFinder, an open-vocabulary interactive object-search prototype that combines object detection with scene description and navigation. It enables blind people to detect and navigate to objects of their choice. We used co-design to develop the prototype. We further conducted need-finding interviews to better understand challenges in object search, followed by a study in which eight blind users tested the ObjectFinder prototype in a laboratory setting simulating a living room and an office. Additionally, we compared the prototype with BeMyEyes and Lookout for object search. We found that most participants felt more independent with ObjectFinder and preferred it over the baselines when deployed on more efficient hardware, as it enhances mental mapping and allows for active target definition. Moreover, we identified factors to guide the future development of object-search systems.
