Karam Ghanem

TapeAgents: a Holistic Framework for Agent Development and Optimization

Dec 11, 2024

Abstract: We present TapeAgents, an agent framework built around a granular, structured log tape of the agent session that also plays the role of the session's resumable state. In TapeAgents we leverage tapes to facilitate all stages of the LLM Agent development lifecycle. The agent reasons by processing the tape and the LLM output to produce new thought and action steps and append them to the tape. The environment then reacts to the agent's actions by likewise appending observation steps to the tape. By virtue of this tape-centred design, TapeAgents can provide AI practitioners with holistic end-to-end support. At the development stage, tapes facilitate session persistence, agent auditing, and step-by-step debugging. Post-deployment, one can reuse tapes for evaluation, fine-tuning, and prompt-tuning; crucially, one can adapt tapes from other agents or use revised historical tapes. In this report, we explain the TapeAgents design in detail. We demonstrate possible applications of TapeAgents with several concrete examples of building monolithic agents and multi-agent teams, of optimizing agent prompts and fine-tuning the agent's LLM. We present tooling prototypes and report a case study where we use TapeAgents to fine-tune a Llama-3.1-8B form-filling assistant to perform as well as GPT-4o while being orders of magnitude cheaper. Lastly, our comparative analysis shows that TapeAgents's advantages over prior frameworks stem from our novel design of the LLM agent as a resumable, modular state machine with a structured configuration, that generates granular, structured logs and that can transform these logs into training text -- a unique combination of features absent in previous work.
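The tape loop described in this abstract can be illustrated with a minimal sketch. This is not the TapeAgents API: `Step`, `Tape`, `toy_agent`, `toy_environment`, and `run` are hypothetical names invented here, and the "agent" is a hard-coded stand-in for an LLM call. It only shows the idea that the agent appends thought and action steps, the environment appends observation steps, and the tape alone is enough to resume a session.

```python
from dataclasses import dataclass, field
from typing import List, Literal


@dataclass(frozen=True)
class Step:
    # Hypothetical step record; the real framework's step types may differ.
    kind: Literal["thought", "action", "observation"]
    content: str


@dataclass
class Tape:
    # The tape is both the session log and the resumable state.
    steps: List[Step] = field(default_factory=list)

    def append(self, step: Step) -> None:
        self.steps.append(step)


def toy_agent(tape: Tape) -> List[Step]:
    """Stand-in for an LLM-backed agent: reads the tape, returns new steps."""
    observations = [s for s in tape.steps if s.kind == "observation"]
    if observations and observations[-1].content.startswith("result:"):
        return [Step("thought", "I have the result; stop.")]
    return [
        Step("thought", "I should add the numbers."),
        Step("action", "add 2 3"),
    ]


def toy_environment(action: Step) -> Step:
    """Stand-in environment: executes an 'add a b' action."""
    _, a, b = action.content.split()
    return Step("observation", f"result: {int(a) + int(b)}")


def run(tape: Tape, max_turns: int = 5) -> Tape:
    """Alternate agent and environment turns, appending every step to the tape."""
    for _ in range(max_turns):
        new_steps = toy_agent(tape)
        for step in new_steps:
            tape.append(step)
        actions = [s for s in new_steps if s.kind == "action"]
        if not actions:  # the agent produced no action, so the session ends
            break
        for action in actions:
            tape.append(toy_environment(action))
    return tape


full = run(Tape())
# Resuming from a truncated copy of the tape reaches the same final state.
resumed = run(Tape(list(full.steps[:3])))
```

Because all state lives on the tape, replaying from any prefix is enough to continue the session, which is the property the abstract relies on for debugging and for reusing tapes as training data.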

The Uncanny Valley: A Comprehensive Analysis of Diffusion Models

Feb 20, 2024

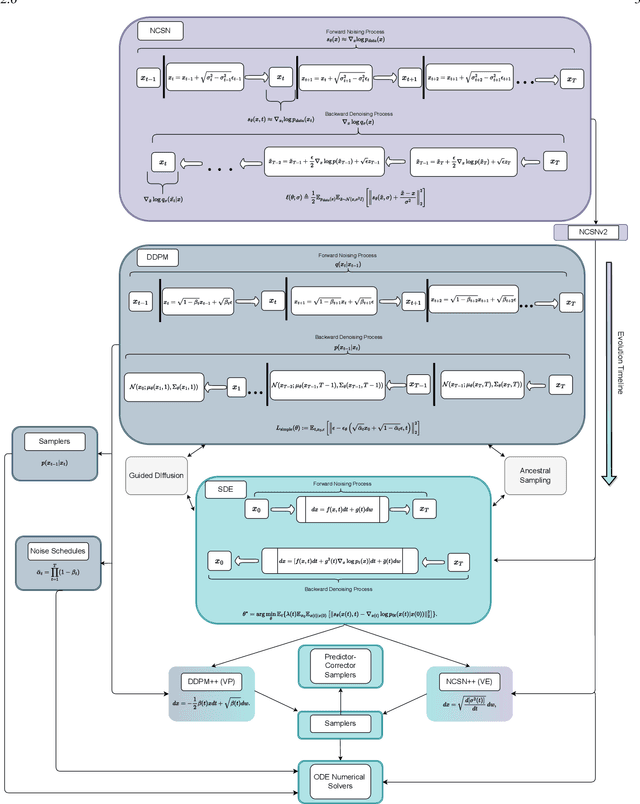

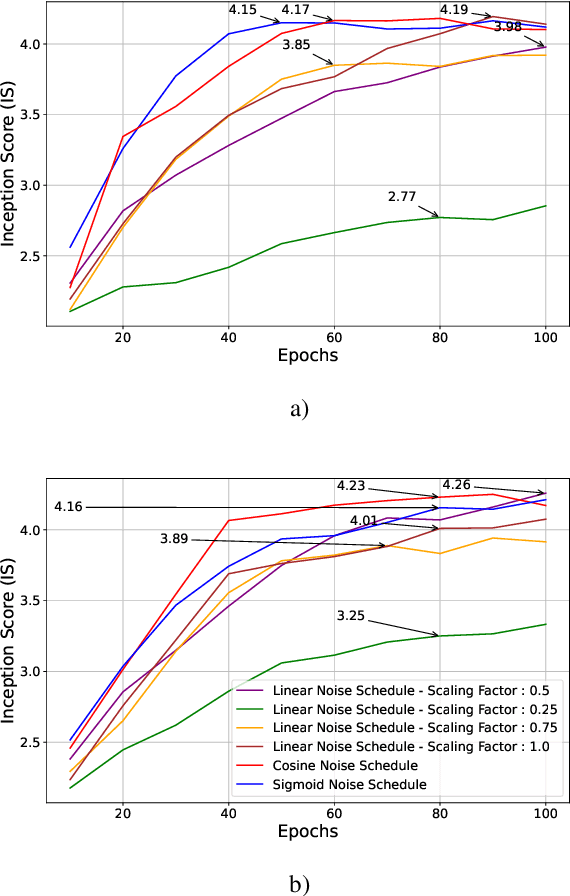

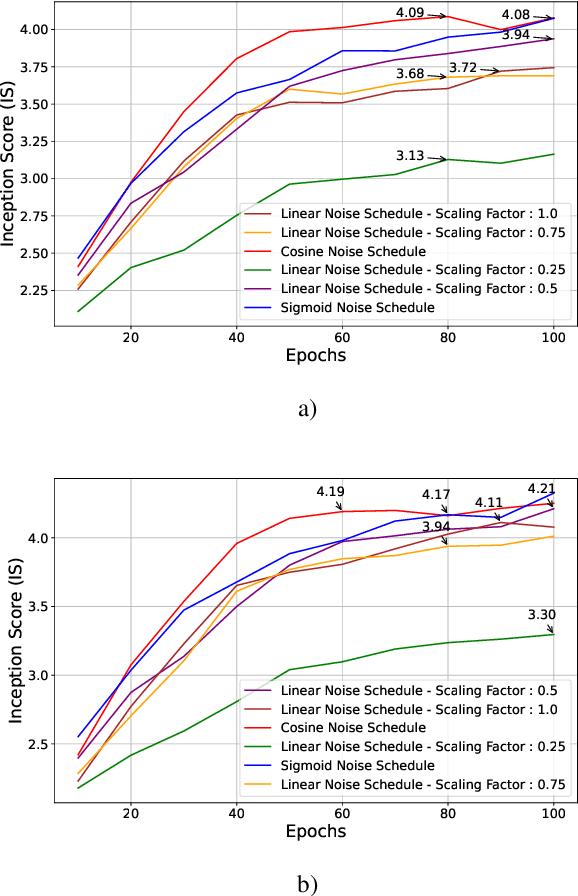

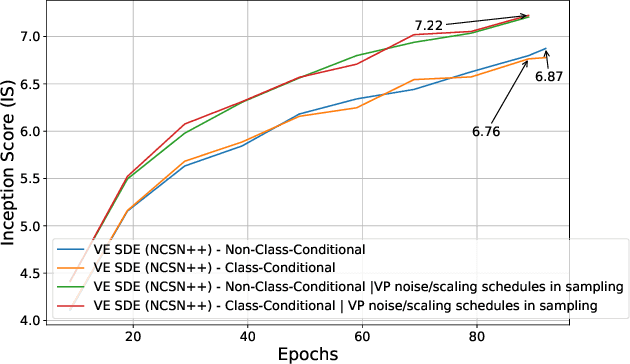

Abstract: Through Diffusion Models (DMs), we have made significant advances in generating high-quality images. Our exploration of these models delves deeply into their core operational principles by systematically investigating key aspects across various DM architectures: i) noise schedules, ii) samplers, and iii) guidance. Our comprehensive examination of these models sheds light on their hidden fundamental mechanisms, revealing the concealed foundational elements that are essential for their effectiveness. Our analyses emphasize the hidden key factors that determine model performance, offering insights that contribute to the advancement of DMs. Past findings show that the configuration of noise schedules, samplers, and guidance is vital to the quality of generated images; however, models reach a stable level of quality across different configurations at a remarkably similar point, revealing that the decisive factors for optimal performance predominantly reside in the diffusion process dynamics and the structural design of the model's network, rather than the specifics of configuration details. Our comparative analysis reveals that Denoising Diffusion Probabilistic Model (DDPM)-based diffusion dynamics consistently outperform the Noise Conditioned Score Network (NCSN)-based ones, not only when evaluated in their original forms but also when made continuous through Stochastic Differential Equation (SDE)-based implementations.
