Kamil Saigol

Safe end-to-end imitation learning for model predictive control

Oct 14, 2018

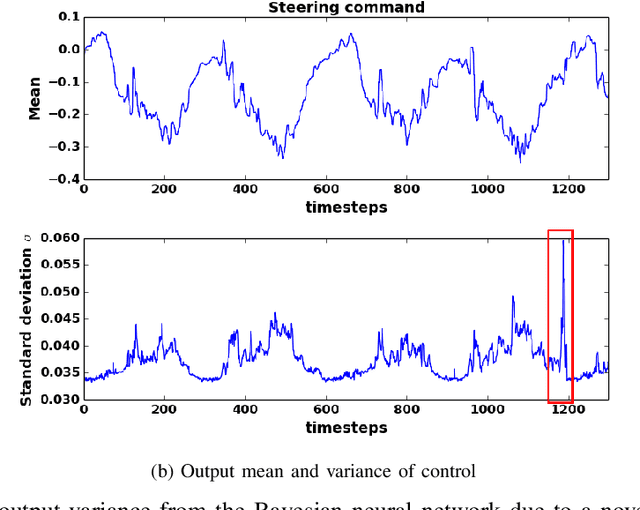

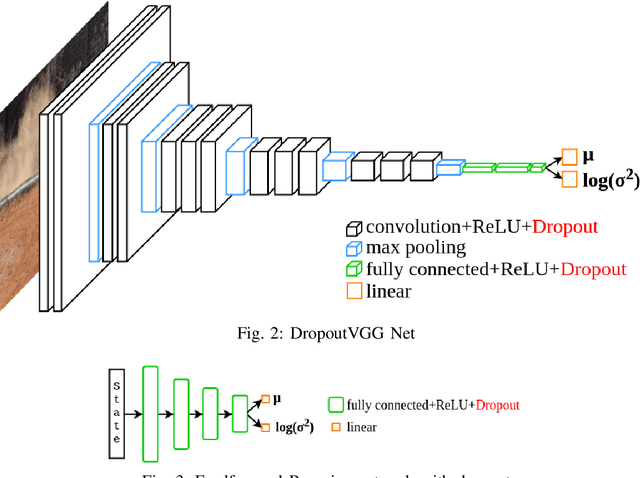

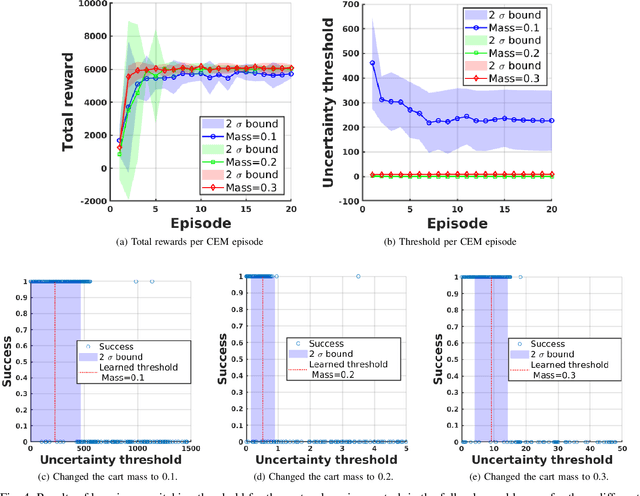

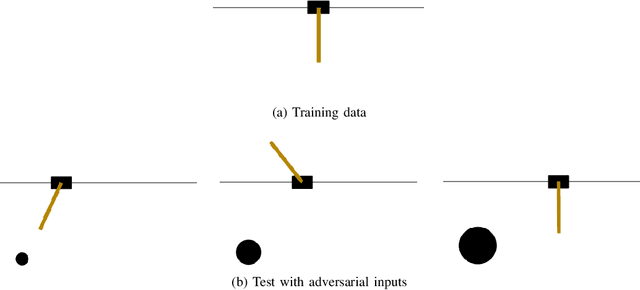

Abstract:We propose the use of Bayesian networks, which provide both a mean value and an uncertainty estimate as output, to enhance the safety of learned control policies under circumstances in which a test-time input differs significantly from the training set. Our algorithm combines reinforcement learning and end-to-end imitation learning to simultaneously learn a control policy as well as a threshold over the predictive uncertainty of the learned model, with no hand-tuning required. Corrective action, such as a return of control to the model predictive controller or human expert, is taken when the uncertainty threshold is exceeded. We validate our method on fully-observable and vision-based partially-observable systems using cart-pole and autonomous driving simulations using deep convolutional Bayesian neural networks. We demonstrate that our method is robust to uncertainty resulting from varying system dynamics as well as from partial state observability.

Agile Off-Road Autonomous Driving Using End-to-End Deep Imitation Learning

Sep 10, 2018

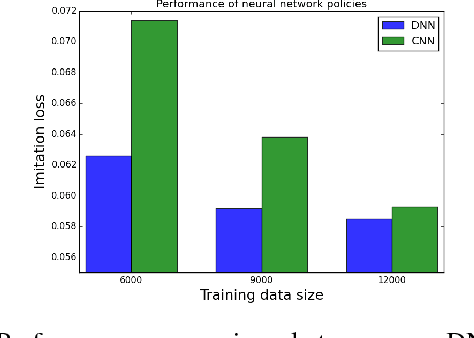

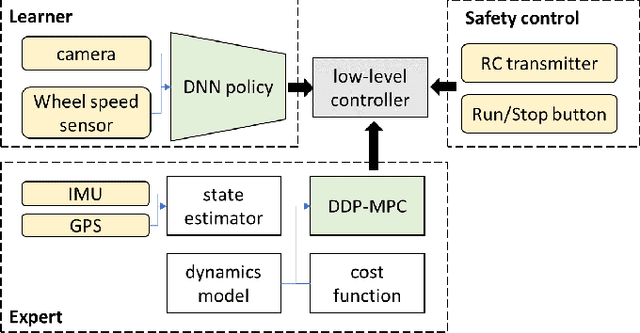

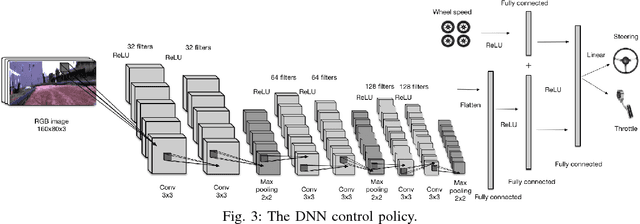

Abstract:We present an end-to-end imitation learning system for agile, off-road autonomous driving using only low-cost on-board sensors. By imitating a model predictive controller equipped with advanced sensors, we train a deep neural network control policy to map raw, high-dimensional observations to continuous steering and throttle commands. Compared with recent approaches to similar tasks, our method requires neither state estimation nor on-the-fly planning to navigate the vehicle. Our approach relies on, and experimentally validates, recent imitation learning theory. Empirically, we show that policies trained with online imitation learning overcome well-known challenges related to covariate shift and generalize better than policies trained with batch imitation learning. Built on these insights, our autonomous driving system demonstrates successful high-speed off-road driving, matching the state-of-the-art performance.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge