Kai Hwang

Unsupervised Anomaly Detection for Tabular Data Using Noise Evaluation

Dec 16, 2024

Abstract: Unsupervised anomaly detection (UAD) plays an important role in modern data analytics and it is crucial to provide simple yet effective and guaranteed UAD algorithms for real applications. In this paper, we present a novel UAD method for tabular data by evaluating how much noise is in the data. Specifically, we propose to learn a deep neural network from the clean (normal) training dataset and a noisy dataset, where the latter is generated by adding highly diverse noises to the clean data. The neural network can learn a reliable decision boundary between normal data and anomalous data when the diversity of the generated noisy data is sufficiently high so that the hard abnormal samples lie in the noisy region. Importantly, we provide theoretical guarantees, proving that the proposed method can detect anomalous data successfully, although the method does not utilize any real anomalous data in the training stage. Extensive experiments on more than 60 benchmark datasets demonstrate the effectiveness of the proposed method in comparison to 12 UAD baselines. Our method obtains a 92.27% AUC score and a 1.68 ranking score on average. Moreover, compared to the state-of-the-art UAD methods, our method is easier to implement.
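
The clean-versus-noisy training idea in this abstract can be illustrated with a small sketch. This is not the authors' implementation: the abstract does not specify the noise family, the network, or the loss, so the Gaussian noise at several scales, the logistic-regression scorer on quadratic features (standing in for the deep network), and every function name below are assumptions made only for illustration.

```python
import numpy as np

def make_noisy(X, rng, scales=(0.5, 1.0, 2.0, 4.0)):
    """Corrupt each row with Gaussian noise at a randomly chosen scale.
    The noise family and the scales are assumptions, not taken from the paper."""
    s = rng.choice(scales, size=(X.shape[0], 1))
    return X + s * rng.standard_normal(X.shape)

def features(X):
    # Quadratic features let a linear model draw a closed boundary around the clean data.
    return np.hstack([X, X ** 2])

def train_scorer(X_clean, rng, epochs=500, lr=0.1):
    """Fit logistic regression with label 0 = clean and label 1 = noisy.
    A simple stand-in for the deep network mentioned in the abstract."""
    X_noisy = make_noisy(X_clean, rng)
    F = features(np.vstack([X_clean, X_noisy]))
    y = np.concatenate([np.zeros(len(X_clean)), np.ones(len(X_noisy))])
    mu, sd = F.mean(axis=0), F.std(axis=0) + 1e-8
    F = (F - mu) / sd
    w, b = np.zeros(F.shape[1]), 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(F @ w + b)))
        g = p - y                      # gradient of the log loss w.r.t. the logits
        w -= lr * F.T @ g / len(y)
        b -= lr * g.mean()

    def score(X):
        # Higher score = the sample looks more like noise, i.e. more anomalous.
        Z = (features(X) - mu) / sd
        return 1.0 / (1.0 + np.exp(-(Z @ w + b)))

    return score

rng = np.random.default_rng(0)
X_train = 0.5 * rng.standard_normal((500, 4))          # synthetic "normal" data
score = train_scorer(X_train, rng)
normal_test = 0.5 * rng.standard_normal((200, 4))
anomalies = 0.5 * rng.standard_normal((200, 4)) + 3.0   # synthetic shifted anomalies
```

No real anomalies are used during fitting; the synthetic shifted points are scored only afterwards, mirroring the setting the abstract describes.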

Rethinking the Effectiveness of Graph Classification Datasets in Benchmarks for Assessing GNNs

Jul 06, 2024

Abstract: Graph classification benchmarks, vital for assessing and developing graph neural networks (GNNs), have recently been scrutinized, as simple methods like MLPs have demonstrated comparable performance. This leads to an important question: Do these benchmarks effectively distinguish the advancements of GNNs over other methodologies? If so, how do we quantitatively measure this effectiveness? In response, we first propose an empirical protocol based on a fair benchmarking framework to investigate the performance discrepancy between simple methods and GNNs. We further propose a novel metric to quantify the dataset effectiveness by considering both dataset complexity and model performance. To the best of our knowledge, our work is the first to thoroughly study and provide an explicit definition for dataset effectiveness in the graph learning area. Through testing across 16 real-world datasets, we found our metric to align with existing studies and intuitive assumptions. Finally, we explore the causes behind the low effectiveness of certain datasets by investigating the correlation between intrinsic graph properties and class labels, and we developed a novel technique supporting the correlation-controllable synthetic dataset generation. Our findings shed light on the current understanding of benchmark datasets, and our new platform could fuel the future evolution of graph classification benchmarks.

Adaptive Graph Convolution Networks for Traffic Flow Forecasting

Jul 07, 2023

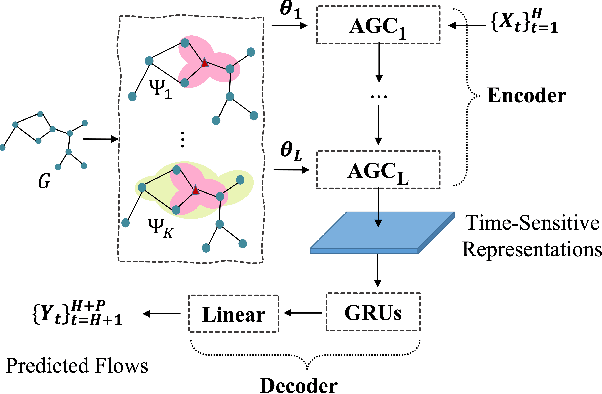

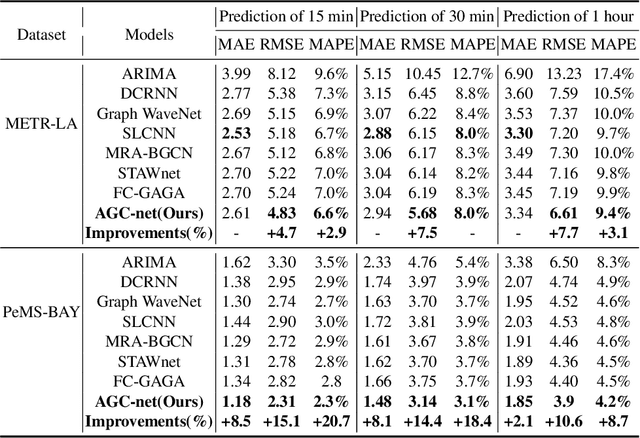

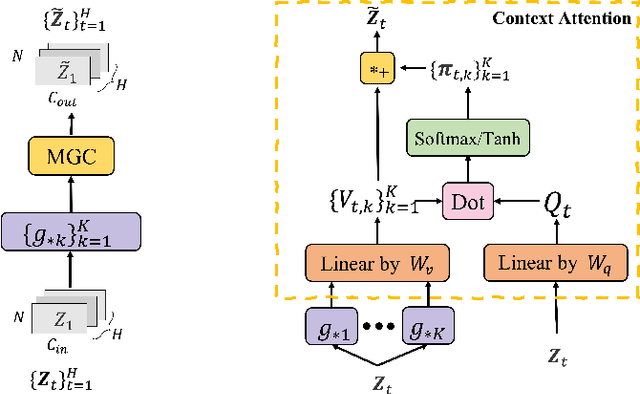

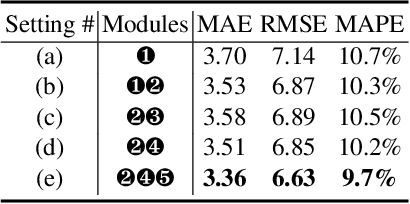

Abstract: Traffic flow forecasting is a highly challenging task due to the dynamic spatial-temporal road conditions. Graph neural networks (GNNs) have been widely applied to this task. However, most of these GNNs ignore the effects of time-varying road conditions due to the fixed range of the convolution receptive field. In this paper, we propose a novel Adaptive Graph Convolution Network (AGC-net) to address this issue in GNNs. The AGC-net is constructed from the Adaptive Graph Convolution (AGC), which is based on a novel context attention mechanism consisting of a set of graph wavelets with various learnable scales. The AGC transforms the spatial graph representations into time-sensitive features considering the temporal context. Moreover, a shifted graph convolution kernel is designed to enhance the AGC, which attempts to correct the deviations caused by inaccurate topology. Experimental results on two public traffic datasets demonstrate the effectiveness of the AGC-net, which significantly outperforms other baseline models. Code is available at: https://github.com/zhengdaoli/AGC-net
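
As a rough illustration of "a set of graph wavelets with various scales" mixed by attention weights, the sketch below uses heat-kernel operators exp(-sL) on an undirected graph. The abstract does not give the wavelet form or the attention design, so the heat kernel, the softmax mixing, and the names `heat_wavelets` and `adaptive_conv` are assumptions, and in a trained model the scales and logits would be learned rather than fixed arrays.

```python
import numpy as np

def heat_wavelets(A, scales):
    """Return one heat-kernel operator exp(-s L) per scale s, with L = D - A.
    The heat kernel is an assumed wavelet choice, not taken from the paper."""
    L = np.diag(A.sum(axis=1)) - A
    lam, U = np.linalg.eigh(L)          # L is symmetric for an undirected graph
    return [U @ np.diag(np.exp(-s * lam)) @ U.T for s in scales]

def adaptive_conv(A, X, scales, attn_logits):
    """Mix the multi-scale wavelet responses with softmax weights.
    The softmax is a stand-in for the context attention mechanism."""
    w = np.exp(attn_logits - np.max(attn_logits))
    w = w / w.sum()
    return sum(wi * (Psi @ X) for wi, Psi in zip(w, heat_wavelets(A, scales)))

# Toy example: a 4-node path graph with one-hot node features.
A = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
X = np.eye(4)
out = adaptive_conv(A, X, scales=[0.0, 0.5, 2.0], attn_logits=np.zeros(3))
```

Small scales keep each node's signal local, while larger scales diffuse it further along the graph, so shifting attention weight between scales changes the effective receptive field.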

DeepNetQoE: Self-adaptive QoE Optimization Framework of Deep Networks

Jul 17, 2020

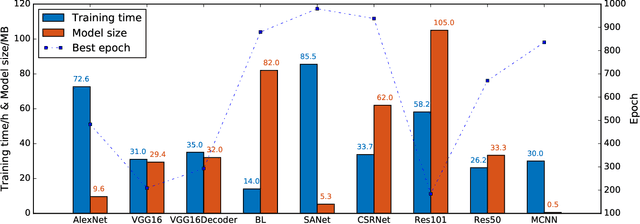

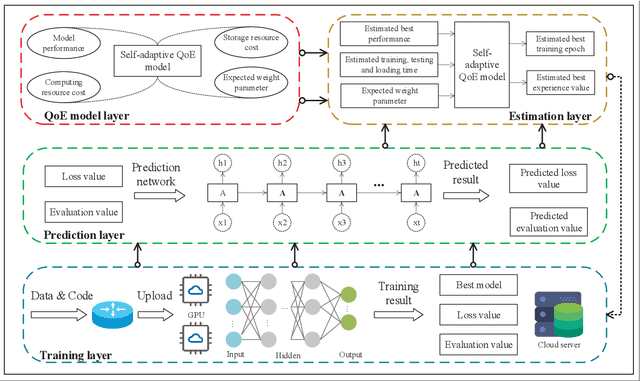

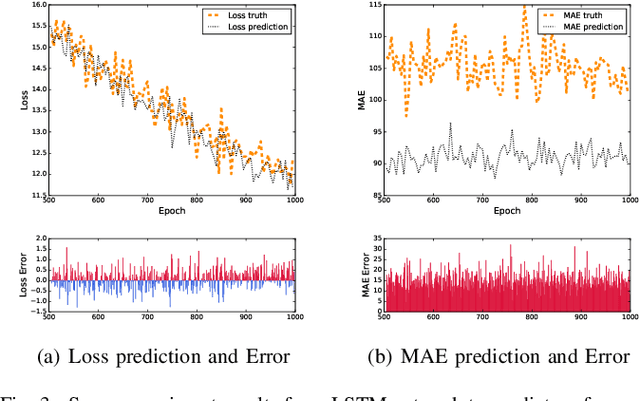

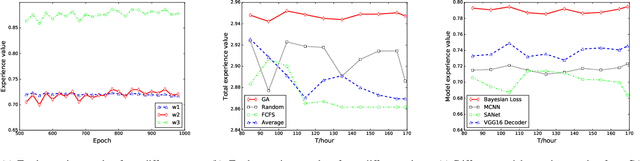

Abstract: Future advances in deep learning and its impact on the development of artificial intelligence (AI) in all fields depend heavily on data size and computational power. Sacrificing massive computing resources in exchange for better precision rates of the network model is an approach accepted by many researchers. This leads to huge computing consumption, and satisfactory results cannot always be expected when computing resources are limited. Therefore, it is necessary to find a balance between resources and model performance to achieve satisfactory results. This article proposes a self-adaptive quality of experience (QoE) framework, DeepNetQoE, to guide the training of deep networks. A self-adaptive QoE model is set up that relates the model's accuracy to the computing resources required for training, which allows the experience value of the model to improve. To maximize the experience value when computing resources are limited, a resource allocation model and solutions need to be established. In addition, we carry out experiments based on four network models to analyze the experience values with respect to the crowd counting example. Experimental results show that the proposed DeepNetQoE is capable of adaptively obtaining a high experience value according to user needs and therefore guiding users to determine the computational resources allocated to the network models.
