Justin Dulay

Using Human Perception to Regularize Transfer Learning

Nov 15, 2022

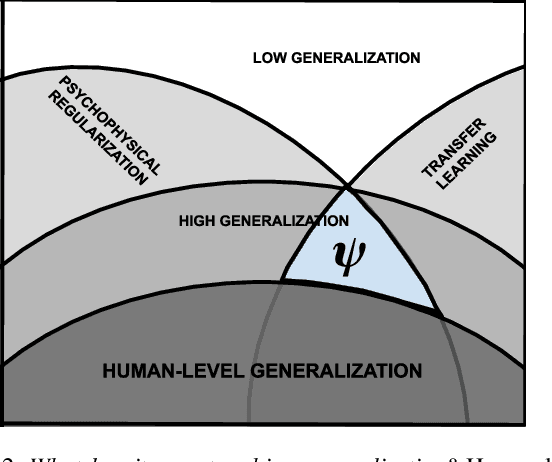

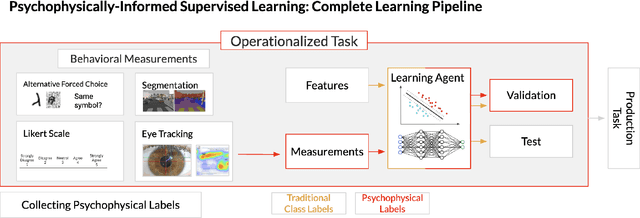

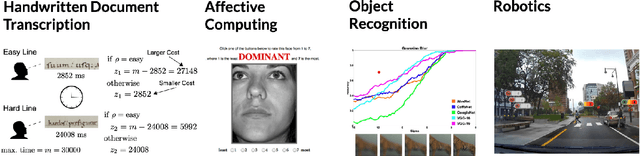

Abstract: Recent trends in the machine learning community show that models with fidelity toward human perceptual measurements perform strongly on vision tasks. Likewise, human behavioral measurements have been used to regularize model performance. But can we transfer latent knowledge gained from this across different learning objectives? In this work, we introduce PERCEP-TL (Perceptual Transfer Learning), a methodology for improving transfer learning with the regularization power of psychophysical labels in models. We demonstrate which models are affected the most by perceptual transfer learning and find that models with high behavioral fidelity, including vision transformers, improve the most from this regularization, by as much as 1.9% Top@1 accuracy points. These findings suggest that biologically inspired learning agents can benefit from human behavioral measurements as regularizers and psychophysical learned representations can be transferred to independent evaluation tasks.

Measuring Human Perception to Improve Open Set Recognition

Sep 11, 2022

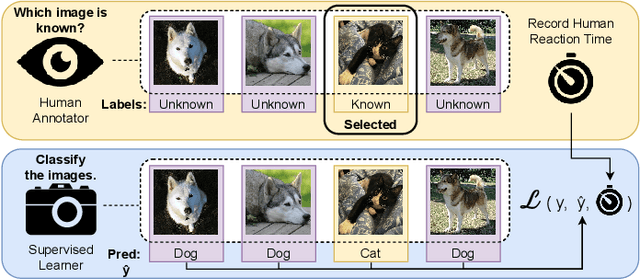

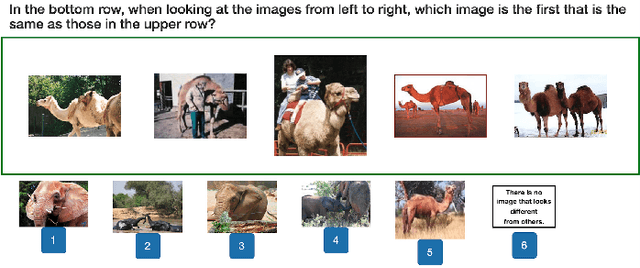

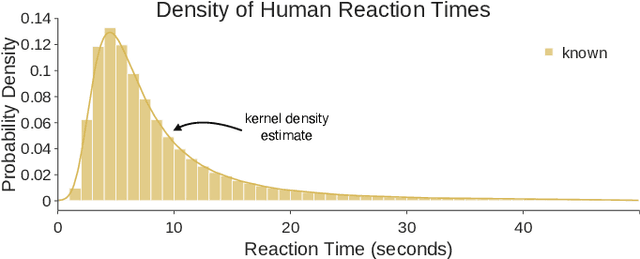

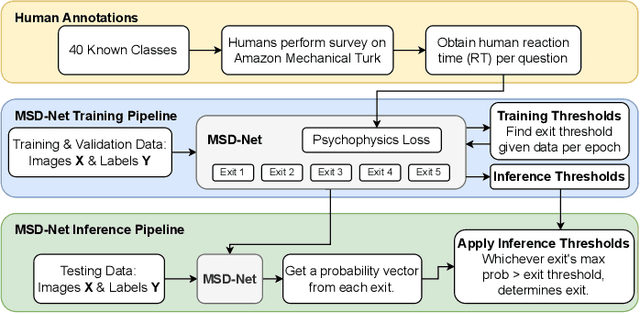

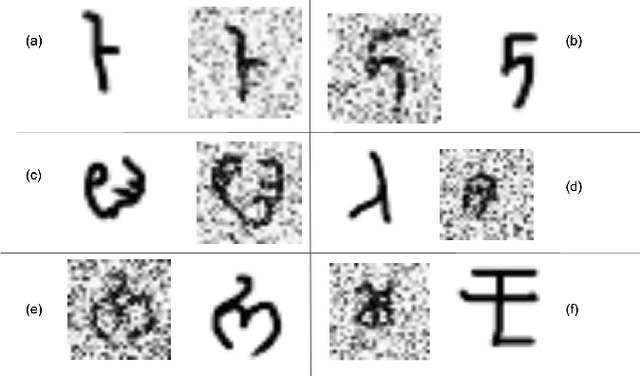

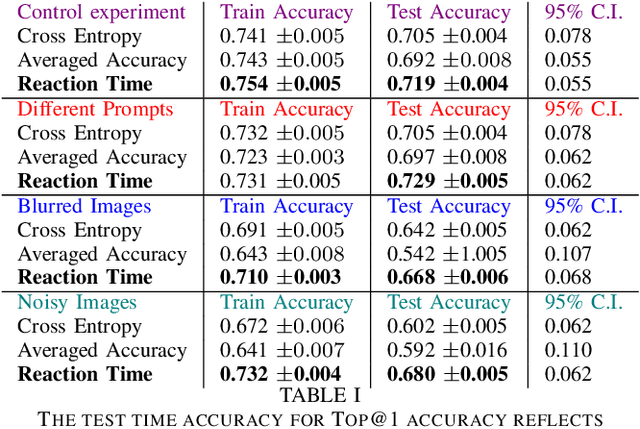

Abstract: The human ability to recognize when an object is known or novel currently outperforms all open set recognition algorithms. Human perception as measured by the methods and procedures of visual psychophysics from psychology can provide an additional data stream for managing novelty in visual recognition tasks in computer vision. For instance, measured reaction time from human subjects can offer insight as to whether a known class sample may be confused with a novel one. In this work, we designed and performed a large-scale behavioral experiment that collected over 200,000 human reaction time measurements associated with object recognition. The data collected indicated reaction time varies meaningfully across objects at the sample level. We therefore designed a new psychophysical loss function that enforces consistency with human behavior in deep networks which exhibit variable reaction time for different images. As in biological vision, this approach allows us to achieve good open set recognition performance in regimes with limited labeled training data. Through experiments using data from ImageNet, significant improvement is observed when training Multi-Scale DenseNets with this new formulation: models trained with our loss function significantly improved top-1 validation accuracy by 7%, top-1 test accuracy on known samples by 18%, and top-1 test accuracy on unknown samples by 33%. We compared our method to 10 open set recognition methods from the literature, which were all outperformed on multiple metrics.

Guiding Machine Perception with Psychophysics

Jul 05, 2022

Abstract: Gustav Fechner's 1860 delineation of psychophysics, the measurement of sensation in relation to its stimulus, is widely considered to be the advent of modern psychological science. In psychophysics, a researcher parametrically varies some aspects of a stimulus, and measures the resulting changes in a human subject's experience of that stimulus; doing so gives insight into the determining relationship between a sensation and the physical input that evoked it. This approach is used heavily in perceptual domains, including signal detection, threshold measurement, and ideal observer analysis. Scientific fields like vision science have always leaned heavily on the methods and procedures of psychophysics, but there is now growing appreciation of them by machine learning researchers, sparked by widening overlap between biological and artificial perception \cite{rojas2011automatic, scheirer2014perceptual,escalera2014chalearn,zhang2018agil, grieggs2021measuring}. Machine perception that is guided by behavioral measurements, as opposed to guidance restricted to arbitrarily assigned human labels, has significant potential to fuel further progress in artificial intelligence.

Multi-resolution Outlier Pooling for Sorghum Classification

Jun 22, 2021

Abstract: Automated high-throughput plant phenotyping involves leveraging sensors, such as RGB, thermal and hyperspectral cameras (among others), to make large-scale and rapid measurements of the physical properties of plants for the purpose of better understanding the difference between crops and facilitating rapid plant breeding programs. One of the most basic phenotyping tasks is to determine the cultivar, or species, in a particular sensor product. This simple phenotype can be used to detect errors in planting and to learn the most differentiating features between cultivars. It is also a challenging visual recognition task, as a large number of highly related crops are grown simultaneously, leading to a classification problem with low inter-class variance. In this paper, we introduce the Sorghum-100 dataset, a large dataset of RGB imagery of sorghum captured by a state-of-the-art gantry system, a multi-resolution network architecture that learns both global and fine-grained features on the crops, and a new global pooling strategy called Dynamic Outlier Pooling which outperforms standard global pooling strategies on this task.
