Using Human Perception to Regularize Transfer Learning


Nov 15, 2022

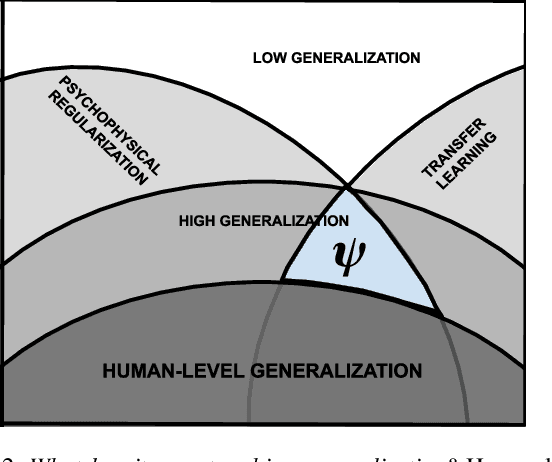

Recent trends in the machine learning community show that models whose behavior closely matches human perceptual measurements perform strongly on vision tasks. Likewise, human behavioral measurements have been used to regularize model training. But can the latent knowledge gained from such regularization be transferred across different learning objectives? In this work, we introduce PERCEP-TL (Perceptual Transfer Learning), a methodology that improves transfer learning by using psychophysical labels as a regularizer. We show which models are affected most by perceptual transfer learning and find that models with high behavioral fidelity, including vision transformers, improve the most from this regularization, by as much as 1.9 percentage points of top-1 accuracy. These findings suggest that biologically inspired learning agents can benefit from human behavioral measurements as regularizers, and that representations learned from psychophysical data can be transferred to independent evaluation tasks.
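To make "psychophysical labels as a regularizer" concrete, here is a minimal sketch of one possible form such a regularizer could take. The abstract does not give PERCEP-TL's loss, so everything here is an assumption: the function name `psychophysical_loss`, the use of per-sample human reaction times, and the penalty `1 + alpha * rt / mean(rt)` are hypothetical illustrations, not the authors' formulation. The idea shown is that per-sample cross-entropy is scaled up for images humans found harder (longer reaction times).

```python
import numpy as np

def softmax_cross_entropy(logits, labels):
    """Per-sample cross-entropy computed with a numerically stable log-softmax."""
    logits = np.asarray(logits, dtype=float)
    labels = np.asarray(labels)
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels]

def psychophysical_loss(logits, labels, reaction_times, alpha=1.0):
    """Hypothetical psychophysically weighted loss (assumed form, not PERCEP-TL's).

    Each sample's cross-entropy is multiplied by 1 + alpha * rt / mean(rt),
    so samples with longer human reaction times contribute more to the loss.
    alpha = 0 recovers plain mean cross-entropy.
    """
    ce = softmax_cross_entropy(logits, labels)
    rts = np.asarray(reaction_times, dtype=float)
    penalty = 1.0 + alpha * rts / rts.mean()
    return float(np.mean(ce * penalty))
```

In a transfer-learning setting, a loss like this would be used while fine-tuning a pretrained backbone on psychophysically annotated data, before evaluating on a separate task; `alpha` controls how strongly the human measurements shape training.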
