Sonia Poltoratski

Guiding Machine Perception with Psychophysics

Jul 05, 2022

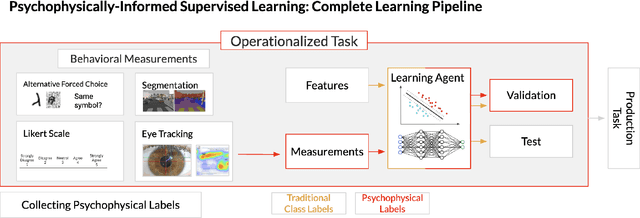

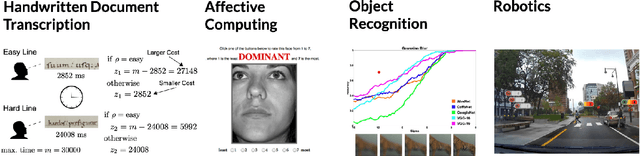

Abstract: Gustav Fechner's 1860 delineation of psychophysics, the measurement of sensation in relation to its stimulus, is widely considered to be the advent of modern psychological science. In psychophysics, a researcher parametrically varies some aspects of a stimulus, and measures the resulting changes in a human subject's experience of that stimulus; doing so gives insight into the determining relationship between a sensation and the physical input that evoked it. This approach is used heavily in perceptual domains, including signal detection, threshold measurement, and ideal observer analysis. Scientific fields like vision science have always leaned heavily on the methods and procedures of psychophysics, but there is now growing appreciation of them by machine learning researchers, sparked by widening overlap between biological and artificial perception \cite{rojas2011automatic, scheirer2014perceptual, escalera2014chalearn, zhang2018agil, grieggs2021measuring}. Machine perception that is guided by behavioral measurements, as opposed to guidance restricted to arbitrarily assigned human labels, has significant potential to fuel further progress in artificial intelligence.
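As a rough illustration of the threshold measurement mentioned in the abstract, the sketch below models detection probability with a logistic psychometric function and reads off the stimulus intensity at which responses cross 50%. The logistic form, the function names (`psychometric`, `estimate_threshold`), and the simulated stimulus levels are all assumptions made for illustration; the abstract does not specify any particular model or procedure.

```python
import math


def psychometric(intensity, threshold, slope):
    """Hypothetical logistic psychometric function: P(detect) at a given intensity."""
    return 1.0 / (1.0 + math.exp(-slope * (intensity - threshold)))


def estimate_threshold(intensities, proportions, criterion=0.5):
    """Linearly interpolate the intensity where the response proportion
    first rises through `criterion`. Returns None if it never does."""
    pairs = list(zip(intensities, proportions))
    for (x0, p0), (x1, p1) in zip(pairs, pairs[1:]):
        if p0 <= criterion <= p1 and p1 > p0:
            return x0 + (criterion - p0) * (x1 - x0) / (p1 - p0)
    return None


# Parametrically vary the stimulus and record the (here: idealized,
# noise-free) proportion of "detected" responses at each level.
levels = [0.0, 1.0, 2.0, 3.0, 4.0]
proportions = [psychometric(x, threshold=2.0, slope=1.5) for x in levels]
estimated = estimate_threshold(levels, proportions)
```

In a real experiment the proportions would come from repeated trials with a human observer and would be noisy, so a fitted curve would normally replace the simple interpolation used here.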

Validation and generalization of pixel-wise relevance in convolutional neural networks trained for face classification

Jun 16, 2020

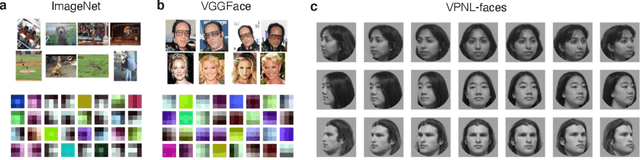

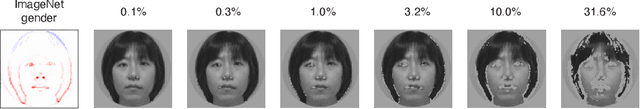

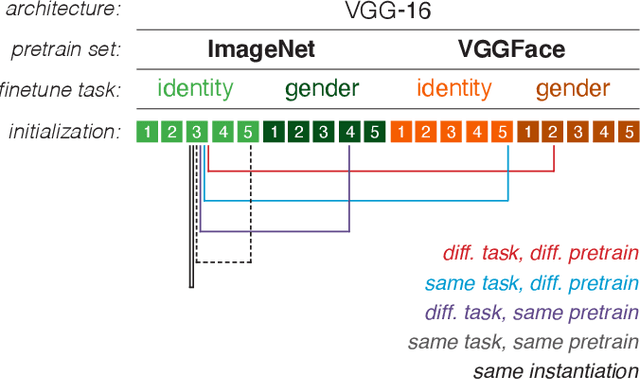

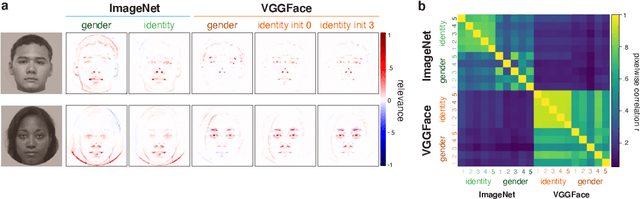

Abstract: The increased use of convolutional neural networks for face recognition in science, governance, and broader society has created an acute need for methods that can show how these 'black box' decisions are made. To be interpretable and useful to humans, such a method should convey a model's learned classification strategy in a way that is robust to random initializations or spurious correlations in input data. To this end, we applied the decompositional pixel-wise attribution method of layer-wise relevance propagation (LRP) to resolve the decisions of several classes of VGG-16 models trained for face recognition. We then quantified how these relevance measures vary with and generalize across key model parameters, such as the pretraining dataset (ImageNet or VGGFace), the finetuning task (gender or identity classification), and random initializations of model weights. Using relevance-based image masking, we find that relevance maps for face classification prove generally stable across random initializations, and can generalize across finetuning tasks. However, there is markedly less generalization across pretraining datasets, indicating that ImageNet- and VGGFace-trained models sample face information differently even as they achieve comparably high classification performance. Fine-grained analyses of relevance maps across models revealed asymmetries in generalization that point to specific benefits of choice parameters, and suggest that it may be possible to find an underlying set of important face image pixels that drive decisions across convolutional neural networks and tasks. Finally, we evaluated model decision weighting against human measures of similarity, providing a novel framework for interpreting face recognition decisions across human and machine.
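The idea of relevance-based image masking can be sketched as follows: given a per-pixel relevance map (for example, one produced by LRP), replace the most relevant pixels with a fill value and then check how a model's decision changes on the masked image. This is a minimal sketch under stated assumptions, not the authors' procedure; the function name `mask_top_relevance`, the choice to mask the top fraction of pixels, and the fill value are all hypothetical, and the abstract does not describe these details.

```python
def mask_top_relevance(image, relevance, fraction, fill=0.0):
    """Return a copy of `image` with the most relevant pixels replaced by `fill`.

    `image` and `relevance` are equally shaped 2D lists. Roughly `fraction`
    of the pixels are masked; ties at the cutoff value are all masked, so
    slightly more pixels may be affected when relevance values repeat.
    """
    flat = sorted((r for row in relevance for r in row), reverse=True)
    k = int(round(fraction * len(flat)))
    if k == 0:
        return [row[:] for row in image]
    cutoff = flat[k - 1]  # smallest relevance value that still gets masked
    return [
        [fill if r >= cutoff else px for px, r in zip(img_row, rel_row)]
        for img_row, rel_row in zip(image, relevance)
    ]


# Toy 2x2 "image" and relevance map (illustrative values only).
image = [[1.0, 2.0], [3.0, 4.0]]
relevance = [[0.1, 0.9], [0.4, 0.2]]
masked = mask_top_relevance(image, relevance, fraction=0.5)
```

Comparing a model's accuracy on images masked with its own relevance map against images masked with another model's map is one way to ask whether relevance generalizes across models, in the spirit of the comparison the abstract describes.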
