Justin Bui

GaSpCT: Gaussian Splatting for Novel CT Projection View Synthesis

Apr 04, 2024

Abstract: We present GaSpCT, a novel view synthesis and 3D scene representation method used to generate novel projection views for Computed Tomography (CT) scans. We adapt the Gaussian Splatting framework to enable novel view synthesis in CT based on limited sets of 2D image projections and without the need for Structure from Motion (SfM) methodologies. As a result, we reduce the total scanning duration and the radiation dose the patient receives during the scan. We adapted the loss function to our use case by encouraging a stronger background and foreground distinction using two sparsity-promoting regularizers: a beta loss and a total variation (TV) loss. We also initialize the Gaussian locations across the 3D space using a uniform prior distribution over where the brain is expected to be positioned within the field of view. We evaluate the performance of our model using brain CT scans from the Parkinson's Progression Markers Initiative (PPMI) dataset and demonstrate that the rendered novel views closely match the original projection views of the simulated scan, and perform better than other implicit 3D scene representation methodologies. Furthermore, we empirically observe reduced training time compared to neural network based image synthesis for sparse-view CT image reconstruction. Finally, the memory requirements of the Gaussian Splatting representations are reduced by 17% compared to the equivalent voxel grid image representations.
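As an illustration of the total variation regularizer mentioned in the abstract, the following is a minimal sketch of an anisotropic TV penalty on a 2D rendered view. The abstract does not state the exact formulation or weighting used in GaSpCT, so the function name `total_variation` and the anisotropic (sum of absolute neighbor differences) form are assumptions made here for illustration only.

```python
def total_variation(image):
    """Anisotropic total variation of a 2D image given as a list of rows.

    Hypothetical helper: sums absolute differences between each pixel and
    its neighbor below and its neighbor to the right. The exact TV form
    used by the paper is not given in the abstract.
    """
    rows = len(image)
    cols = len(image[0]) if rows else 0
    tv = 0.0
    for r in range(rows):
        for c in range(cols):
            if r + 1 < rows:  # vertical neighbor
                tv += abs(image[r + 1][c] - image[r][c])
            if c + 1 < cols:  # horizontal neighbor
                tv += abs(image[r][c + 1] - image[r][c])
    return tv


flat = [[0.0, 0.0], [0.0, 0.0]]  # uniform background, no edges
edge = [[0.0, 1.0], [0.0, 1.0]]  # one sharp vertical edge
print(total_variation(flat), total_variation(edge))
```

A uniform image incurs no penalty, while every intensity jump adds to the loss. This is why a TV term tends to favor piecewise-smooth renderings with a clean background.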

Cascade Watchdog: A Multi-tiered Adversarial Guard for Outlier Detection

Aug 20, 2021

Abstract: The identification of out-of-distribution content is critical to the successful implementation of neural networks. Watchdog techniques have been developed to support the detection of these inputs, but their performance can be limited by the amount of available data. Generative adversarial networks (GANs) have displayed numerous capabilities, including the ability to generate facsimiles with excellent accuracy. This paper presents and empirically evaluates a multi-tiered watchdog, developed using GAN-generated data, for improved out-of-distribution detection. The cascade watchdog uses adversarial training to increase the amount of available data similar to the out-of-distribution elements that are more difficult to detect. Then, a specialized second guard is added in sequential order. The results show a solid and significant improvement in the detection of the most challenging out-of-distribution inputs while preserving an extremely low false positive rate.

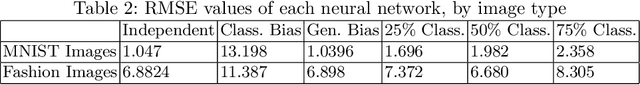

Classification of Common Waveforms Including a Watchdog for Unknown Signals

Aug 16, 2021

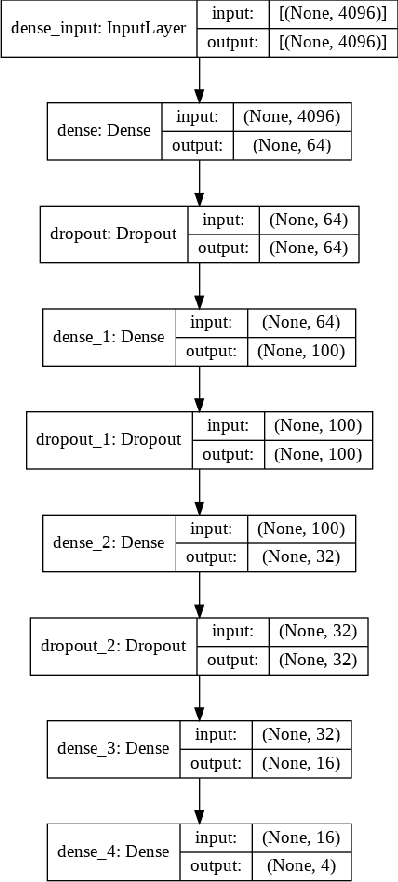

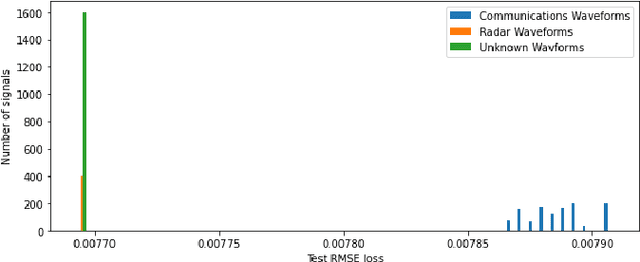

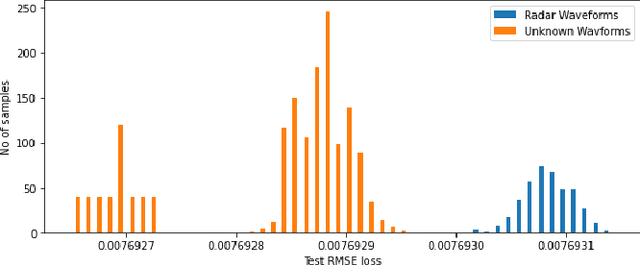

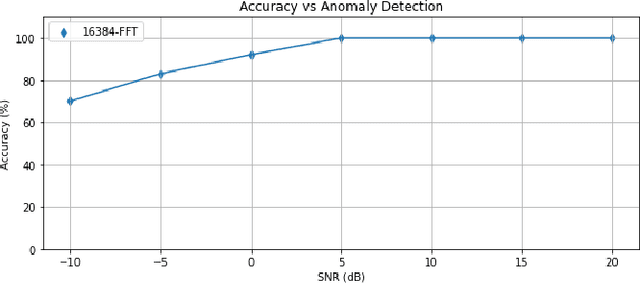

Abstract: In this paper, we examine the use of a deep multi-layer perceptron model architecture to classify received signal samples as coming from one of four common waveforms used in communication and radar networks: Single Carrier (SC), Single-Carrier Frequency Division Multiple Access (SC-FDMA), Orthogonal Frequency Division Multiplexing (OFDM), and Linear Frequency Modulation (LFM). Synchronization of the signals is not needed, as we assume there is an unknown and uncompensated time and frequency offset. An autoencoder with a deep CNN architecture is also examined to create a new fifth classification category for an unknown waveform type. This is accomplished by calculating minimum and maximum threshold values from the root mean square error (RMSE) of the radar and communication waveforms. The classifier and autoencoder work together to monitor a spectrum area, identifying the common waveforms inside the area of operation and detecting unknown waveforms. Results from testing showed the classifier had a 100% classification rate above 0 dB, with accuracy of 83.2% and 94.7% at -10 dB and -5 dB, respectively, with signal impairments present. Results for the anomaly detector showed 85.3% accuracy at 0 dB and 100% at SNR greater than 0 dB with signal impairments present when using a high-value Fast Fourier Transform (FFT) size. Detection accuracy declines as additional noise is introduced to the signals, with 78.1% at -5 dB and 56.5% at -10 dB. However, these low rates can potentially be mitigated by using even higher FFT sizes, as also shown in our results.
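The RMSE thresholding step described in the abstract can be sketched as follows. This is a hedged illustration, not the authors' implementation: the helper names `rmse`, `fit_thresholds`, and `is_unknown` are hypothetical, the error values are made up for the example, and taking the plain minimum and maximum of the known-waveform errors is an assumption about how the thresholds are derived.

```python
import math


def rmse(original, reconstructed):
    """Root mean square error between a signal and its autoencoder reconstruction."""
    squared = [(a - b) ** 2 for a, b in zip(original, reconstructed)]
    return math.sqrt(sum(squared) / len(squared))


def fit_thresholds(known_errors):
    """Assumed rule: bounds are the min and max RMSE seen on known waveforms."""
    return min(known_errors), max(known_errors)


def is_unknown(error, thresholds):
    """Flag a sample as the 'unknown' class when its RMSE falls outside the bounds."""
    low, high = thresholds
    return error < low or error > high


# Illustrative reconstruction errors for known waveforms (made-up values).
thresholds = fit_thresholds([0.10, 0.15, 0.12])
print(is_unknown(0.12, thresholds), is_unknown(0.50, thresholds))
```

In a pipeline of this shape, a sample flagged by `is_unknown` would be assigned to the fifth category instead of being passed to the four-class classifier.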

The application of artificial intelligence in software engineering: a review challenging conventional wisdom

Aug 03, 2021

Abstract: The field of artificial intelligence (AI) is witnessing a recent upsurge in research, tools development, and deployment of applications. Multiple software companies are shifting their focus to developing intelligent systems, and many others are deploying AI paradigms in their existing processes. In parallel, the academic research community is injecting AI paradigms to provide solutions to traditional engineering problems. Similarly, AI has evidently proved useful to software engineering (SE). When one observes the SE phases (requirements, design, development, testing, release, and maintenance), it becomes clear that multiple AI paradigms (such as neural networks, machine learning, knowledge-based systems, and natural language processing) could be applied to improve the process and eliminate many of the major challenges that the SE field has been facing. This survey chapter is a review of the most commonplace methods of AI applied to SE. The review covers methods from 1975 to 2017: 46 major AI-driven methods are found for the requirements phase, 19 for design, 15 for development, 68 for testing, and 15 for release and maintenance. Furthermore, the purpose of this chapter is threefold: firstly, to answer the following questions: is there sufficient intelligence in the SE lifecycle? What does applying AI to SE entail? Secondly, to measure, formulize, and evaluate the overlap of SE phases and AI disciplines. Lastly, this chapter aims to pose serious questions that challenge the current conventional wisdom (i.e., status quo) of the state of the art, craft a call for action, and redefine the path forward.

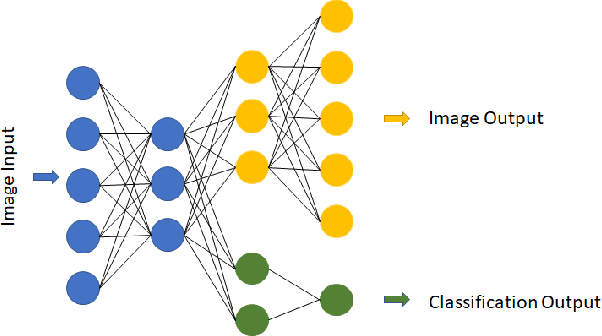

A Proof of Concept Neural Network Watchdog using a Hybrid Generative Classifier For Optimized Outlier Detection

Feb 28, 2021

Abstract: With the continuous development of tools such as TensorFlow and PyTorch, neural networks are becoming easier to develop and train. With the expansion of these tools, however, neural networks have also become more of a black box. A neural network trained to classify fruit may classify a picture of a giraffe as a banana. A neural network watchdog may be implemented to identify such out-of-distribution inputs, allowing a classifier to disregard such data. By building a hybrid generator/classifier network, we can easily implement a watchdog while improving training and evaluation efficiency.

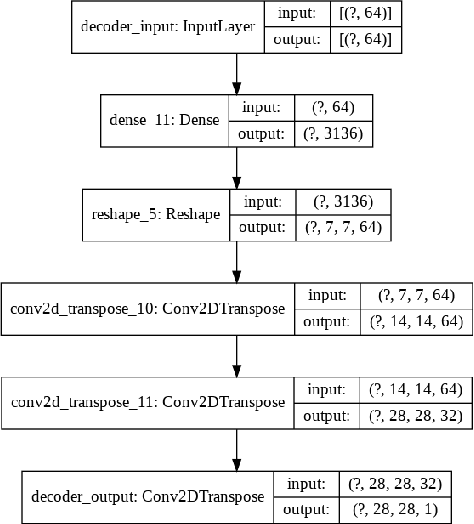

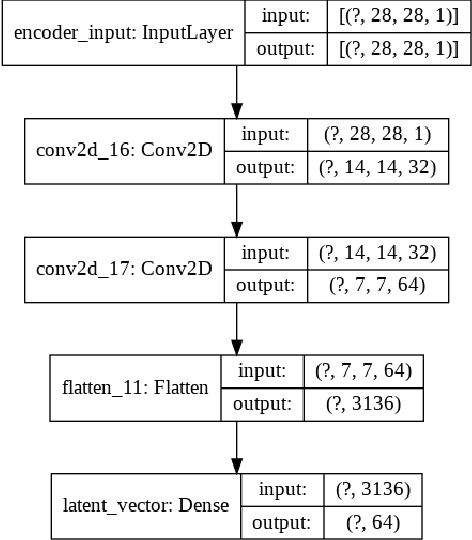

Autoencoder Watchdog Outlier Detection for Classifiers

Oct 24, 2020

Abstract: Neural networks have often been described as black boxes. A generic neural network trained to differentiate between kittens and puppies will classify a picture of a kumquat as a kitten or a puppy. An autoencoder watchdog screens trained classifier/regression machine input candidates before processing, e.g., to first test whether the neural network input is a puppy or a kitten. Preliminary results are presented using convolutional neural networks and convolutional autoencoder watchdogs using MNIST images.
