Feras A. Batarseh

A Review of Cybersecurity Incidents in the Food and Agriculture Sector

Mar 12, 2024

Abstract:The increasing utilization of emerging technologies in the Food & Agriculture (FA) sector has heightened the need for security to minimize cyber risks. Considering this aspect, this manuscript reviews disclosed and documented cybersecurity incidents in the FA sector. For this purpose, thirty cybersecurity incidents were identified, which took place between July 2011 and April 2023. The details of these incidents are reported from multiple sources such as: the private industry and flash notifications generated by the Federal Bureau of Investigation (FBI), internal reports from the affected organizations, and available media sources. Considering the available information, a brief description of the security threat, ransom amount, and impact on the organization are discussed for each incident. This review reports an increased frequency of cybersecurity threats to the FA sector. To minimize these cyber risks, popular cybersecurity frameworks and recent agriculture-specific cybersecurity solutions are also discussed. Further, the need for AI assurance in the FA sector is explained, and the Farmer-Centered AI (FCAI) framework is proposed. The main aim of the FCAI framework is to support farmers in decision-making for agricultural production, by incorporating AI assurance. Lastly, the effects of the reported cyber incidents on other critical infrastructures, food security, and the economy are noted, along with specifying the open issues for future development.

ExClaim: Explainable Neural Claim Verification Using Rationalization

Jan 21, 2023

Abstract:With the advent of deep learning, text generation language models have improved dramatically, with text at a similar level as human-written text. This can lead to rampant misinformation because content can now be created cheaply and distributed quickly. Automated claim verification methods exist to validate claims, but they lack foundational data and often use mainstream news as evidence sources that are strongly biased towards a specific agenda. Current claim verification methods use deep neural network models and complex algorithms for a high classification accuracy, but this comes at the expense of model explainability. The models are black boxes, and their decision-making process and the steps they took to arrive at a final prediction are obfuscated from the user. We introduce a novel claim verification approach, namely: ExClaim, that attempts to provide an explainable claim verification system with foundational evidence. Inspired by the legal system, ExClaim leverages rationalization to provide a verdict for the claim and justifies the verdict through a natural language explanation (rationale) to describe the model's decision-making process. ExClaim treats the verdict classification task as a question-answer problem and achieves a performance of 0.93 F1 score. It also provides subtask explanations to justify the intermediate outcomes. Statistical and Explainable AI (XAI) evaluations are conducted to ensure valid and trustworthy outcomes. Ensuring claim verification systems are assured, rational, and explainable is an essential step toward improving Human-AI trust and the accessibility of black-box systems.

Rationalization for Explainable NLP: A Survey

Jan 21, 2023

Abstract:Recent advances in deep learning have improved the performance of many Natural Language Processing (NLP) tasks such as translation, question-answering, and text classification. However, this improvement comes at the expense of model explainability. Black-box models make it difficult to understand the internals of a system and the process it takes to arrive at an output. Numerical (LIME, Shapley) and visualization (saliency heatmap) explainability techniques are helpful; however, they are insufficient because they require specialized knowledge. These factors led rationalization to emerge as a more accessible explainable technique in NLP. Rationalization justifies a model's output by providing a natural language explanation (rationale). Recent improvements in natural language generation have made rationalization an attractive technique because it is intuitive, human-comprehensible, and accessible to non-technical users. Since rationalization is a relatively new field, it is disorganized. As the first survey, rationalization literature in NLP from 2007-2022 is analyzed. This survey presents available methods, explainable evaluations, code, and datasets used across various NLP tasks that use rationalization. Further, a new subfield in Explainable AI (XAI), namely, Rational AI (RAI), is introduced to advance the current state of rationalization. A discussion on observed insights, challenges, and future directions is provided to point to promising research opportunities.

Proceedings of AAAI 2022 Fall Symposium: The Role of AI in Responding to Climate Challenges

Jan 06, 2023

Abstract:Climate change is one of the most pressing challenges of our time, requiring rapid action across society. As artificial intelligence tools (AI) are rapidly deployed, it is therefore crucial to understand how they will impact climate action. On the one hand, AI can support applications in climate change mitigation (reducing or preventing greenhouse gas emissions), adaptation (preparing for the effects of a changing climate), and climate science. These applications have implications in areas ranging as widely as energy, agriculture, and finance. At the same time, AI is used in many ways that hinder climate action (e.g., by accelerating the use of greenhouse gas-emitting fossil fuels). In addition, AI technologies have a carbon and energy footprint themselves. This symposium brought together participants from across academia, industry, government, and civil society to explore these intersections of AI with climate change, as well as how each of these sectors can contribute to solutions.

AI Assurance using Causal Inference: Application to Public Policy

Dec 01, 2021

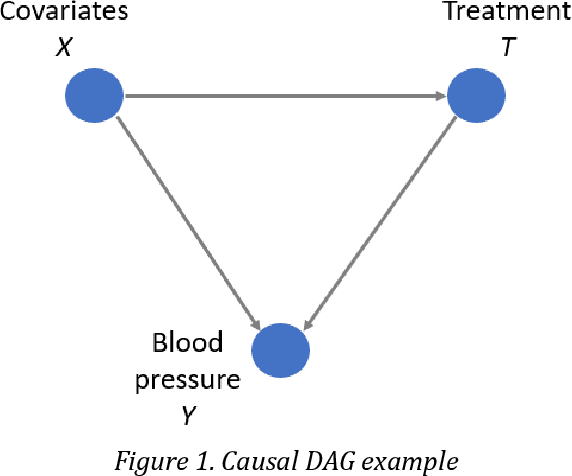

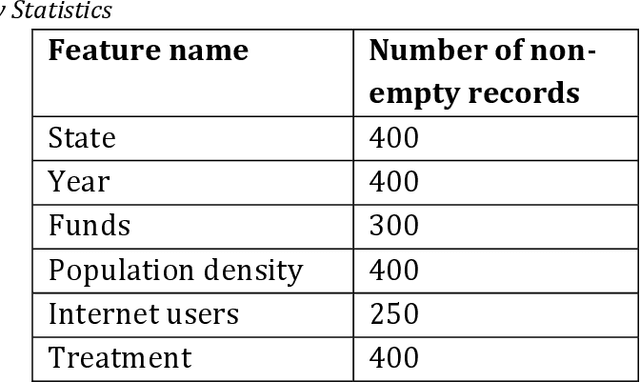

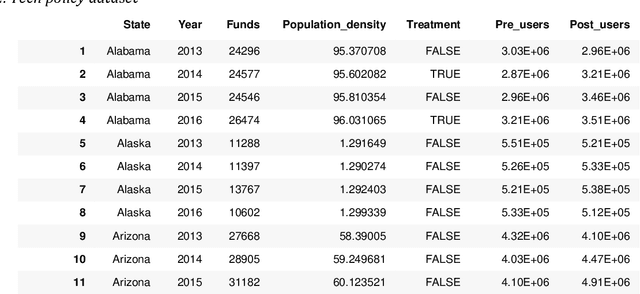

Abstract:Developing and implementing AI-based solutions help state and federal government agencies, research institutions, and commercial companies enhance decision-making processes, automate chain operations, and reduce the consumption of natural and human resources. At the same time, most AI approaches used in practice can only be represented as "black boxes" and suffer from the lack of transparency. This can eventually lead to unexpected outcomes and undermine trust in such systems. Therefore, it is crucial not only to develop effective and robust AI systems, but to make sure their internal processes are explainable and fair. Our goal in this chapter is to introduce the topic of designing assurance methods for AI systems with high-impact decisions using the example of the technology sector of the US economy. We explain how these fields would benefit from revealing cause-effect relationships between key metrics in the dataset by providing the causal experiment on technology economics dataset. Several causal inference approaches and AI assurance techniques are reviewed and the transformation of the data into a graph-structured dataset is demonstrated.

Outlier Detection using AI: A Survey

Dec 01, 2021

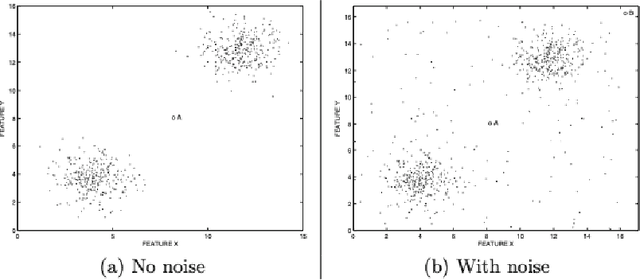

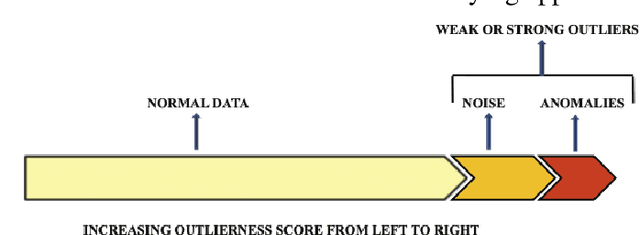

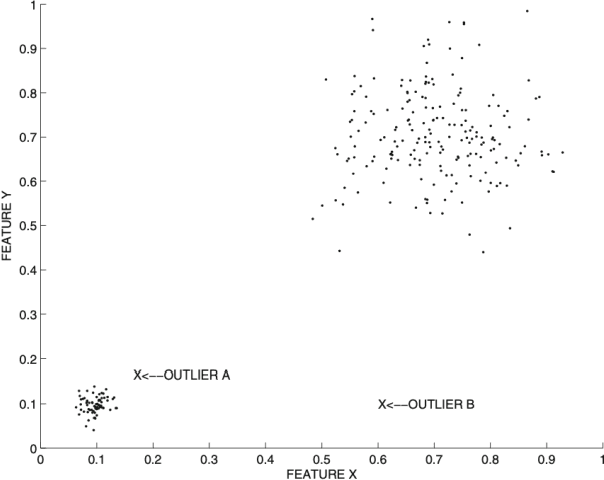

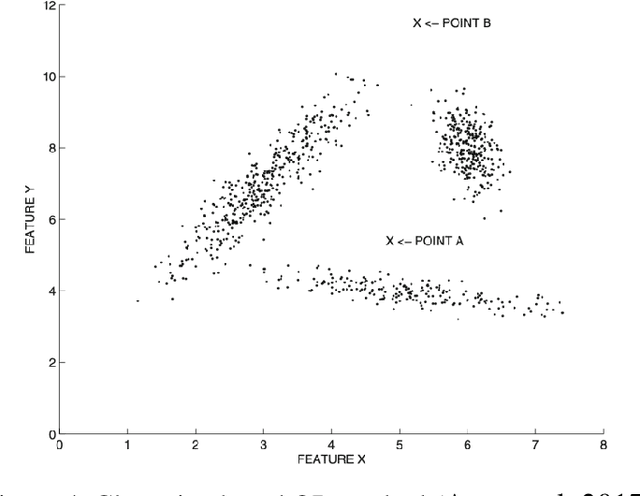

Abstract:An outlier is an event or observation that is defined as an unusual activity, intrusion, or a suspicious data point that lies at an irregular distance from a population. The definition of an outlier event, however, is subjective and depends on the application and the domain (Energy, Health, Wireless Network, etc.). It is important to detect outlier events as carefully as possible to avoid infrastructure failures because anomalous events can cause minor to severe damage to infrastructure. For instance, an attack on a cyber-physical system such as a microgrid may initiate voltage or frequency instability, thereby damaging a smart inverter, which is very expensive to repair. Unusual activities in microgrids can be mechanical faults, behavior changes in the system, human or instrument errors, or a malicious attack. Accordingly, and due to its variability, Outlier Detection (OD) is an ever-growing research field. In this chapter, we discuss the progress of OD methods using AI techniques. For that, the fundamental concepts of each OD model are introduced via multiple categories. A broad range of OD methods is grouped into six major categories: Statistical-based, Distance-based, Density-based, Clustering-based, Learning-based, and Ensemble methods. For every category, we discuss recent state-of-the-art approaches, their application areas, and performances. After that, a brief discussion regarding the advantages, disadvantages, and challenges of each technique is provided with recommendations on future research directions. This survey aims to guide the reader to better understand recent progress of OD methods for the assurance of AI.

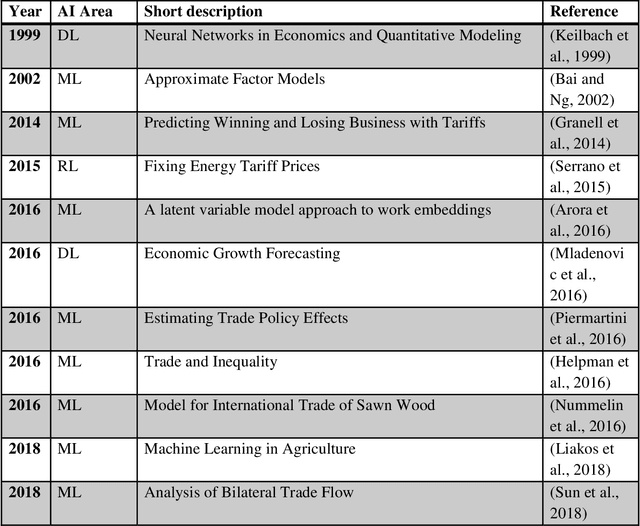

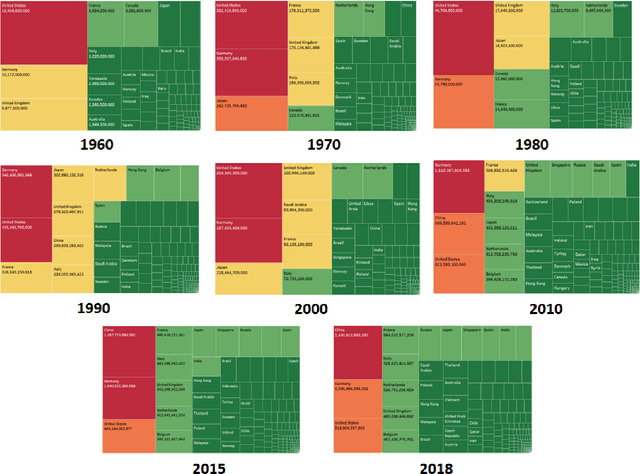

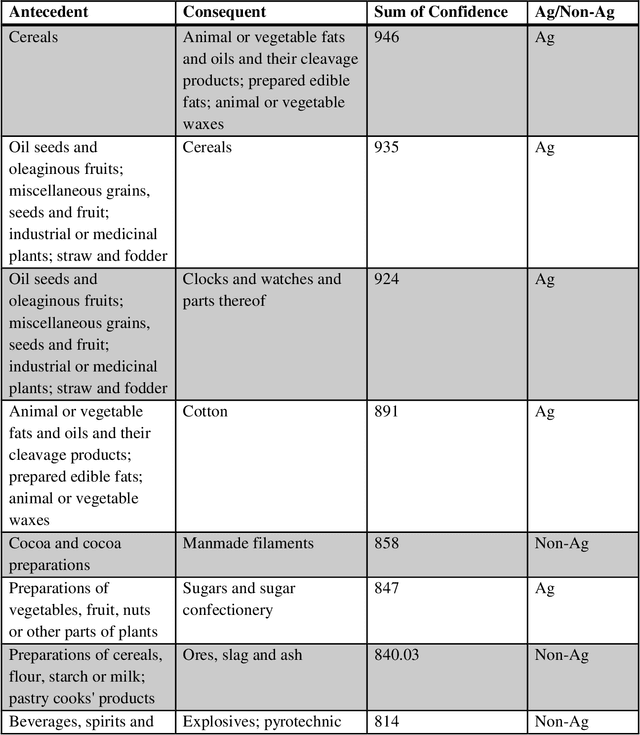

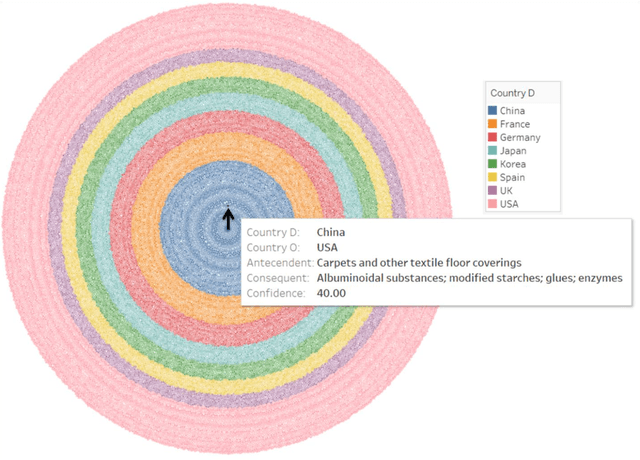

Public Policymaking for International Agricultural Trade using Association Rules and Ensemble Machine Learning

Nov 15, 2021

Abstract:International economics has a long history of improving our understanding of factors causing trade, and the consequences of free flow of goods and services across countries. The recent shocks to the free trade regime, especially trade disputes among major economies, as well as black swan events, such as trade wars and pandemics, raise the need for improved predictions to inform policy decisions. AI methods are allowing economists to solve such prediction problems in new ways. In this manuscript, we present novel methods that predict and associate food and agricultural commodities traded internationally. Association Rules (AR) analysis has been deployed successfully for economic scenarios at the consumer or store level, such as for market basket analysis. In our work however, we present analysis of imports and exports associations and their effects on commodity trade flows. Moreover, Ensemble Machine Learning methods are developed to provide improved agricultural trade predictions, outlier events' implications, and quantitative pointers to policy makers.

* Paper published at Elsevier's Journal of Machine Learning with Applications https://www.sciencedirect.com/science/article/pii/S2666827021000232

A Survey on AI Assurance

Nov 15, 2021

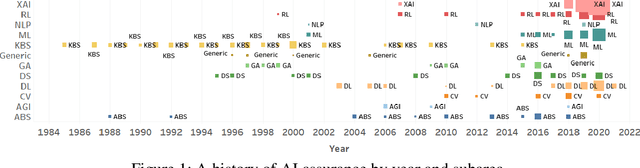

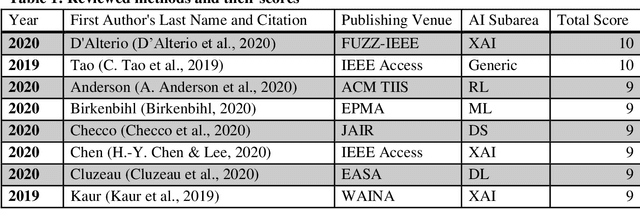

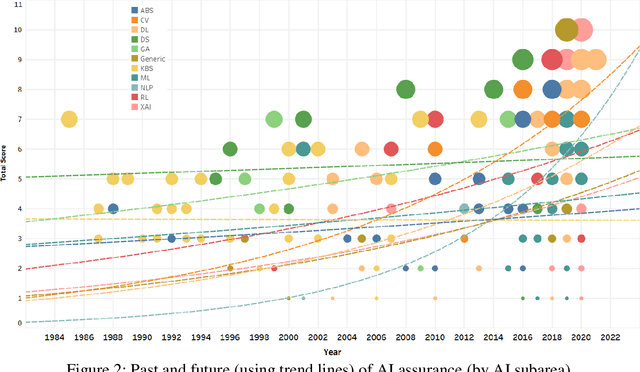

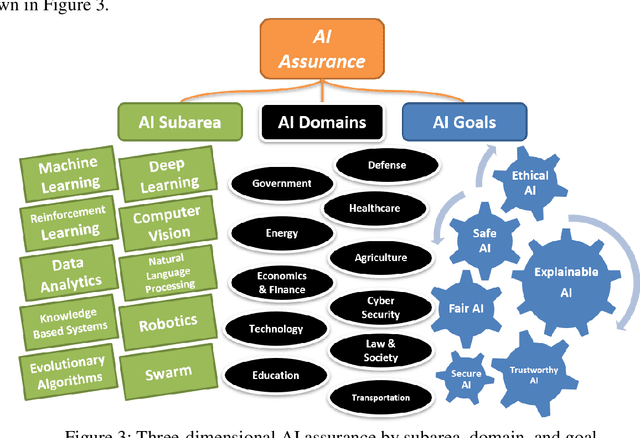

Abstract:Artificial Intelligence (AI) algorithms are increasingly providing decision making and operational support across multiple domains. AI includes a wide library of algorithms for different problems. One important notion for the adoption of AI algorithms into operational decision process is the concept of assurance. The literature on assurance, unfortunately, conceals its outcomes within a tangled landscape of conflicting approaches, driven by contradicting motivations, assumptions, and intuitions. Accordingly, albeit a rising and novel area, this manuscript provides a systematic review of research works that are relevant to AI assurance, between years 1985 - 2021, and aims to provide a structured alternative to the landscape. A new AI assurance definition is adopted and presented and assurance methods are contrasted and tabulated. Additionally, a ten-metric scoring system is developed and introduced to evaluate and compare existing methods. Lastly, in this manuscript, we provide foundational insights, discussions, future directions, a roadmap, and applicable recommendations for the development and deployment of AI assurance.

* This paper is published at Springer's Journal of Big Data

Measuring Outcomes in Healthcare Economics using Artificial Intelligence: with Application to Resource Management

Nov 15, 2021

Abstract:The quality of service in healthcare is constantly challenged by outlier events such as pandemics (e.g., Covid-19) and natural disasters (such as hurricanes and earthquakes). In most cases, such events lead to critical uncertainties in decision making, as well as in multiple medical and economic aspects at a hospital. External (geographic) or internal (medical and managerial) factors lead to shifts in planning and budgeting and, most importantly, reduce confidence in conventional processes. In some cases, support from other hospitals proves necessary, which exacerbates the planning aspect. This manuscript presents three data-driven methods that provide indicators to help healthcare managers organize their economics and identify the optimal plan for resource allocation and sharing. Conventional decision-making methods fall short in recommending validated policies for managers. Using reinforcement learning, genetic algorithms, traveling salesman, and clustering, we experimented with different healthcare variables and presented tools and outcomes that could be applied at health institutes. Experiments are performed; the results are recorded, evaluated, and presented.

* This paper is published at Cambridge University Press Journal of Data & Policy

DeepAg: Deep Learning Approach for Measuring the Effects of Outlier Events on Agricultural Production and Policy

Nov 06, 2021

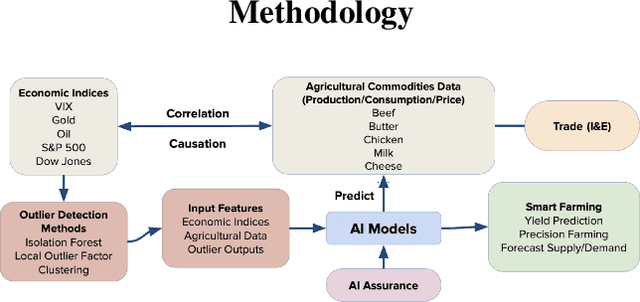

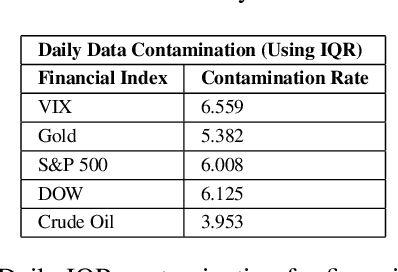

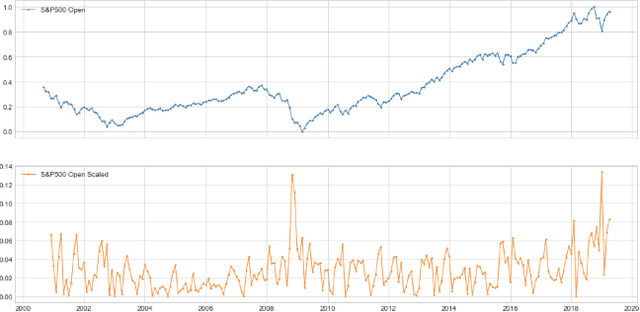

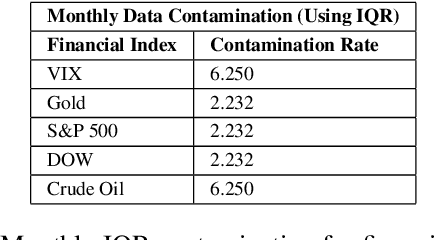

Abstract:Quantitative metrics that measure the global economy's equilibrium have strong and interdependent relationships with the agricultural supply chain and international trade flows. Sudden shocks in these processes caused by outlier events such as trade wars, pandemics, or weather can have complex effects on the global economy. In this paper, we propose a novel framework, namely: DeepAg, that employs econometrics and measures the effects of outlier events detection using Deep Learning (DL) to determine relationships between commonplace financial indices (such as the DowJones), and the production values of agricultural commodities (such as Cheese and Milk). We employed a DL technique called Long Short-Term Memory (LSTM) networks successfully to predict commodity production with high accuracy and also present five popular models (regression and boosting) as baselines to measure the effects of outlier events. The results indicate that DeepAg with outliers' considerations (using Isolation Forests) outperforms baseline models, as well as the same model without outliers detection. Outlier events make a considerable impact when predicting commodity production with respect to financial indices. Moreover, we present the implications of DeepAg on public policy, provide insights for policymakers and farmers, and for operational decisions in the agricultural ecosystem. Data are collected, models developed, and the results are recorded and presented.
