Robert J. Marks II

Changepoint Detection for Real-Time Spectrum Sharing Radar

Jun 30, 2022

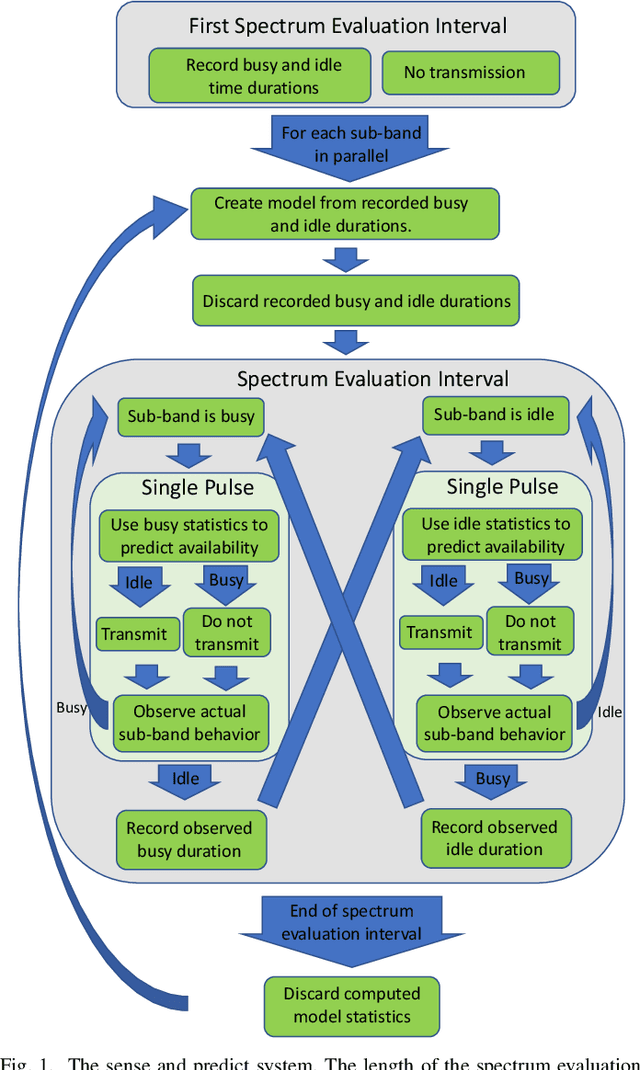

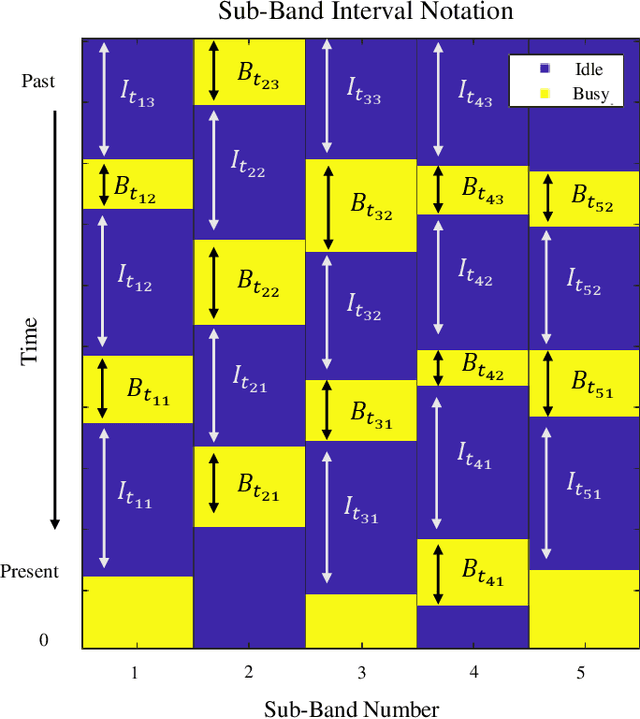

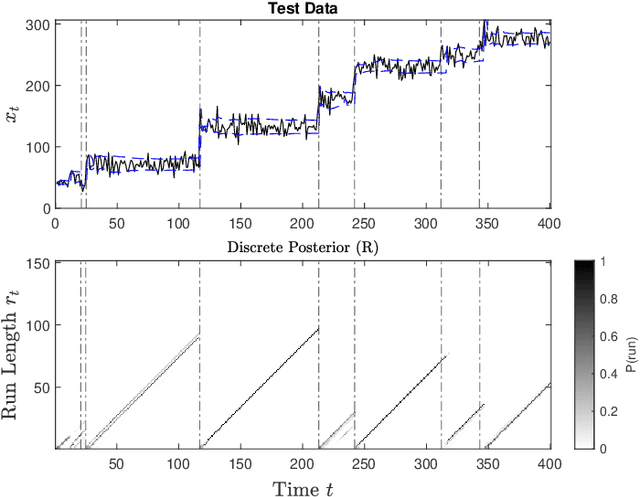

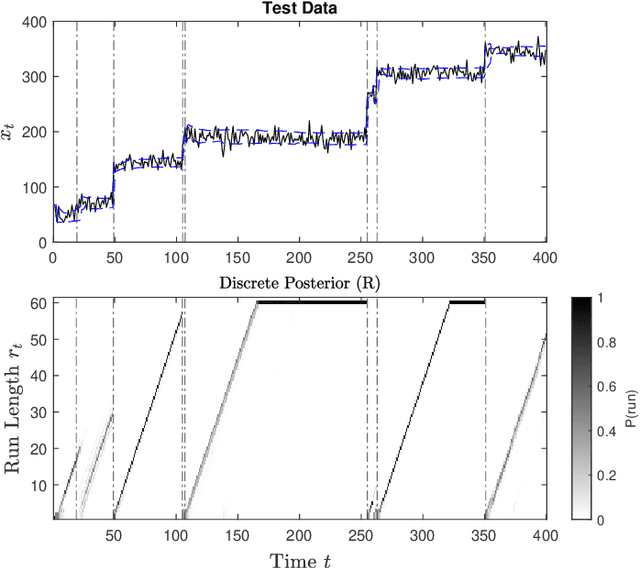

Abstract: Radar must adapt to changing environments, and we propose changepoint detection as a method to do so. In a world of increasingly congested radio frequencies, radars must adapt to avoid interference. Many radar systems employ the prediction action cycle to proactively determine transmission mode while spectrum sharing. This method constructs and implements a model of the environment to predict unused frequencies, and then transmits in this predicted availability. For these selection strategies, performance depends directly on the quality of the underlying environmental models. To keep up with a changing environment, these models can employ changepoint detection. Changepoint detection is the identification of sudden changes, or changepoints, in the distribution from which data is drawn. This information allows the models to discard "garbage" data from a previous distribution, which has no relation to the current state of the environment. In this work, Bayesian online changepoint detection (BOCD) is applied to the sense-and-predict algorithm to increase the accuracy of its models and improve its performance. In the context of spectrum sharing, these changepoints represent interferers leaving and entering the spectral environment. The addition of changepoint detection allows for dynamic and robust spectrum sharing even as interference patterns change dramatically. BOCD is especially advantageous because it enables online changepoint detection, allowing models to be updated continuously as data are collected. This strategy can also be applied to many other predictive algorithms that create models in a changing environment.
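As a rough illustration of the online changepoint idea described in this abstract, the sketch below implements a generic run-length recursion in the style of Bayesian online changepoint detection. It is not the paper's implementation: the Gaussian observation model with known variance, the constant hazard rate, and every name and parameter (`bocd_gaussian`, `hazard`, `mu0`, `var0`, `obs_var`) are assumptions chosen for brevity.

```python
import math


def bocd_gaussian(data, hazard=0.01, mu0=0.0, var0=10.0, obs_var=1.0):
    """Return the most probable run length after each observation.

    Assumptions (not from the paper): Gaussian data with known variance
    `obs_var`, a Gaussian prior N(mu0, var0) on the mean of each segment,
    and a constant changepoint probability `hazard` per step.
    """
    probs = [1.0]   # posterior over run lengths, index = run length
    means = [mu0]   # posterior mean of the segment mean, per run length
    vars_ = [var0]  # posterior variance of the segment mean, per run length
    map_run_lengths = []

    for x in data:
        # Predictive density of x under each run-length hypothesis.
        pred = []
        for m, v in zip(means, vars_):
            s = v + obs_var
            pred.append(math.exp(-0.5 * (x - m) ** 2 / s) / math.sqrt(2 * math.pi * s))

        # Either the current run grows by one, or a changepoint resets it.
        growth = [p * q * (1.0 - hazard) for p, q in zip(probs, pred)]
        change = sum(p * q * hazard for p, q in zip(probs, pred))
        new_probs = [change] + growth
        total = sum(new_probs)
        probs = [p / total for p in new_probs]

        # Conjugate update of each hypothesis; run length 0 restarts at the prior.
        new_means, new_vars = [mu0], [var0]
        for m, v in zip(means, vars_):
            post_var = 1.0 / (1.0 / v + 1.0 / obs_var)
            new_means.append(post_var * (m / v + x / obs_var))
            new_vars.append(post_var)
        means, vars_ = new_means, new_vars

        map_run_lengths.append(max(range(len(probs)), key=probs.__getitem__))

    return map_run_lengths


# Toy stream: the level jumps from 0 to 6 at index 50, standing in for an
# interferer entering the band.
stream = [0.0] * 50 + [6.0] * 50
run_lengths = bocd_gaussian(stream)
```

When the most probable run length collapses to a small value, a model in this spirit could discard the samples that precede the detected changepoint and refit on the current segment only.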

Dilated POCS: Minimax Convex Optimization

Jun 09, 2022

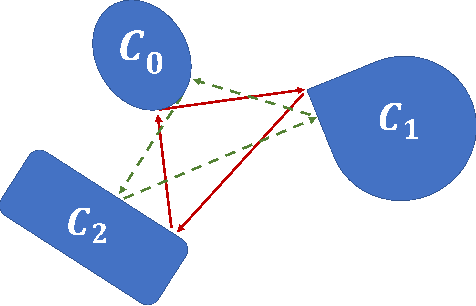

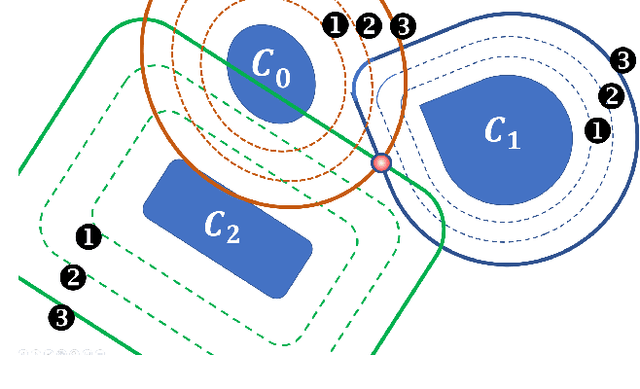

Abstract: Alternating projection onto convex sets (POCS) provides an iterative procedure to find a signal that satisfies two or more convex constraints when the sets intersect. For nonintersecting constraints, the method of simultaneous projections produces a minimum mean square error (MSE) solution. In certain cases, a minimax solution is more desirable. Generating a minimax solution is possible using dilated POCS (D-POCS). The minimax solution uses morphological dilation of nonintersecting signal convex constraints. The sets are progressively dilated to the point where there is intersection at a minimax solution. Examples are given contrasting the MSE and minimax solutions in the problem of tomographic reconstruction of images. Lastly, morphological erosion of signal sets is suggested as a method to shrink the overlap when sets intersect at more than one point.
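The basic alternating-projection step that this abstract builds on can be sketched for two intersecting convex sets in the plane. The example covers only the intersecting case, not the dilated variant; the two sets (a unit disk and the half-plane x >= 0.5), the starting point, and all function names are illustrative assumptions.

```python
import math


def project_disk(p, center=(0.0, 0.0), radius=1.0):
    """Project point p onto a closed disk (a convex set)."""
    dx, dy = p[0] - center[0], p[1] - center[1]
    dist = math.hypot(dx, dy)
    if dist <= radius:
        return p  # already inside the set
    scale = radius / dist
    return (center[0] + dx * scale, center[1] + dy * scale)


def project_halfplane(p, x_min=0.5):
    """Project point p onto the half-plane {(x, y) : x >= x_min}."""
    return (max(p[0], x_min), p[1])


def alternating_pocs(p, iterations=100):
    """Alternate the two projections, starting from p."""
    for _ in range(iterations):
        p = project_halfplane(project_disk(p))
    return p


# The result should lie in both sets, since they intersect.
solution = alternating_pocs((-3.0, 2.0))
```

For a disk, morphological dilation by a disk-shaped structuring element of radius r simply enlarges the radius by r, so in this toy setting a dilated set could be modelled by passing a larger `radius` to `project_disk`.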

Generatively Augmented Neural Network Watchdog for Image Classification Networks

Sep 07, 2021

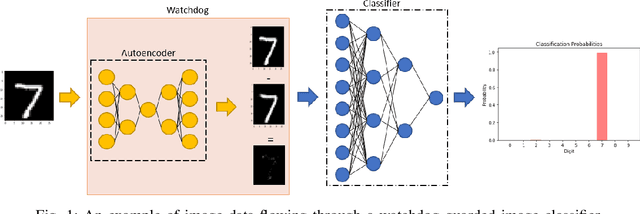

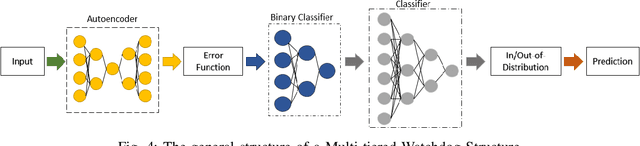

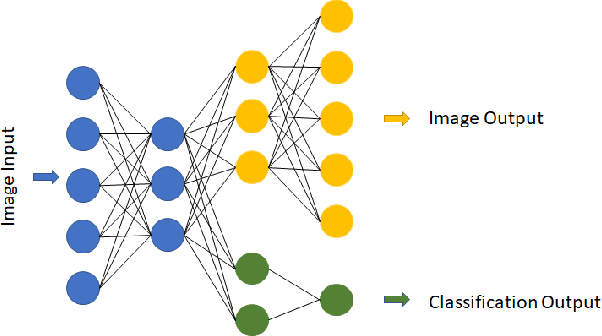

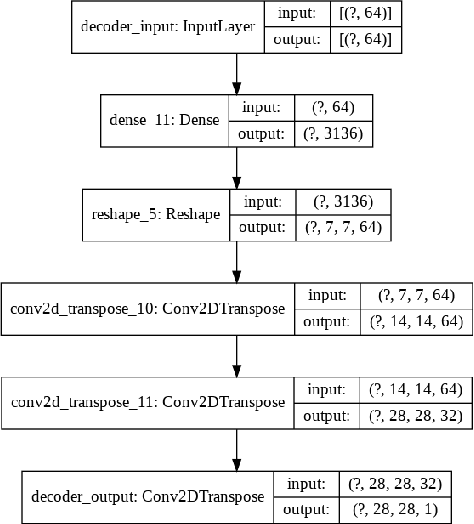

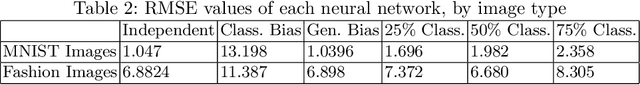

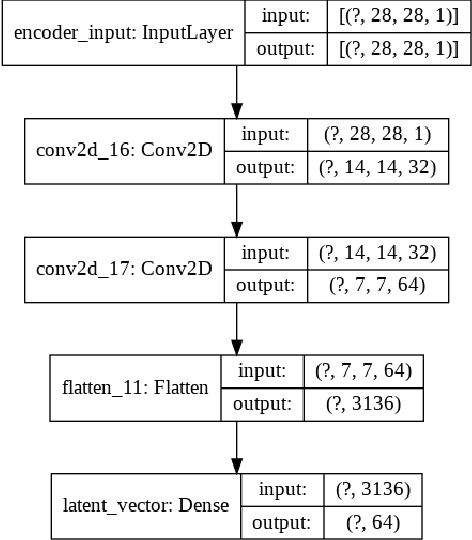

Abstract: The identification of out-of-distribution data is vital to the deployment of classification networks. For example, a generic neural network that has been trained to differentiate between images of dogs and cats can only classify an input as either a dog or a cat. If a picture of a car or a kumquat were to be supplied to this classifier, the result would still be either a dog or a cat. In order to mitigate this, techniques such as the neural network watchdog have been developed. The compression of the image input into the latent layer of the autoencoder defines the region of in-distribution in the image space. This in-distribution set of input data has a corresponding boundary in the image space. The watchdog assesses whether inputs are inside or outside this boundary. This paper demonstrates how to sharpen this boundary using generative network training data augmentation, thereby improving the discrimination and overall performance of the watchdog.

Classification of Common Waveforms Including a Watchdog for Unknown Signals

Aug 16, 2021

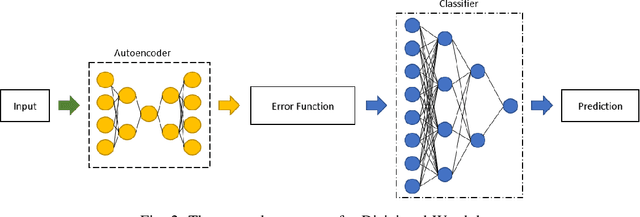

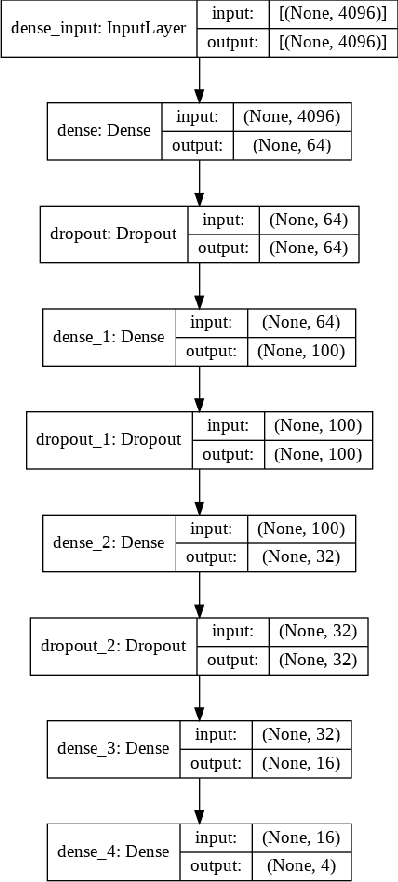

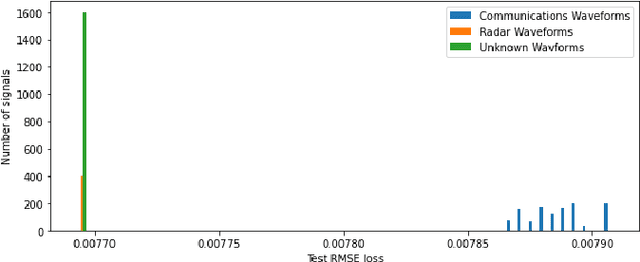

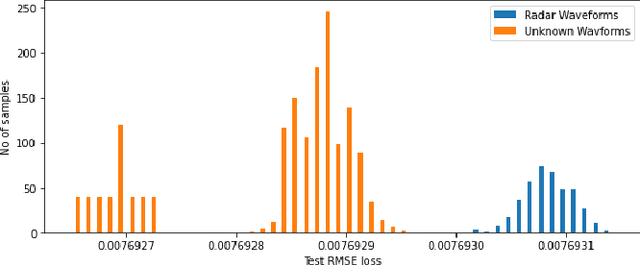

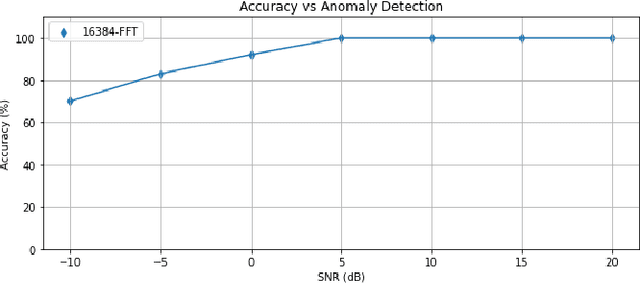

Abstract: In this paper, we examine the use of a deep multi-layer perceptron model architecture to classify received signal samples as coming from one of four common waveforms used in communication and radar networks: Single Carrier (SC), Single-Carrier Frequency Division Multiple Access (SC-FDMA), Orthogonal Frequency Division Multiplexing (OFDM), and Linear Frequency Modulation (LFM). Synchronization of the signals is not needed as we assume there is an unknown and uncompensated time and frequency offset. An autoencoder with a deep CNN architecture is also examined to create a new fifth classification category of an unknown waveform type. This is accomplished by calculating minimum and maximum threshold values from the root mean square error (RMSE) of the radar and communication waveforms. The classifier and autoencoder work together to monitor a spectrum area to identify the common waveforms inside the area of operation along with detecting unknown waveforms. Results from testing showed the classifier had a 100% classification rate above 0 dB, with accuracy of 83.2% and 94.7% at -10 dB and -5 dB, respectively, with signal impairments present. Results for the anomaly detector showed 85.3% accuracy at 0 dB and 100% at SNR greater than 0 dB with signal impairments present when using a large Fast Fourier Transform (FFT) size. Accurate detection rates decline as additional noise is introduced to the signals, with 78.1% at -5 dB and 56.5% at -10 dB. However, these low rates can potentially be mitigated by using even larger FFT sizes, as also shown in our results.
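The RMSE thresholding described in this abstract can be illustrated with a small sketch. It assumes, as a simplification not confirmed by the abstract, that the thresholds are just the smallest and largest reconstruction RMSE observed on known waveforms; the autoencoder itself is omitted, and the function names and RMSE values are hypothetical.

```python
import math


def rmse(signal, reconstruction):
    """Root mean square error between an input and its reconstruction."""
    squared = [(a - b) ** 2 for a, b in zip(signal, reconstruction)]
    return math.sqrt(sum(squared) / len(squared))


def fit_thresholds(known_rmses):
    """Assumed rule: take the min and max RMSE seen on known waveforms."""
    return min(known_rmses), max(known_rmses)


def is_unknown(value, thresholds):
    """Flag a sample whose reconstruction RMSE falls outside the thresholds."""
    low, high = thresholds
    return not (low <= value <= high)


# Hypothetical RMSE values for known waveforms after autoencoder reconstruction.
thresholds = fit_thresholds([0.10, 0.15, 0.20])
flag_far = is_unknown(0.50, thresholds)     # far outside the known range
flag_inside = is_unknown(0.12, thresholds)  # inside the known range
```

In a pipeline of this kind, samples flagged as unknown would be assigned to the fifth category, and all others would be passed to the four-class classifier.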

A Proof of Concept Neural Network Watchdog using a Hybrid Generative Classifier For Optimized Outlier Detection

Feb 28, 2021

Abstract: With the continuous development of tools such as TensorFlow and PyTorch, neural networks are becoming easier to develop and train. With the expansion of these tools, however, neural networks have also become more of a black box. A neural network trained to classify fruit may classify a picture of a giraffe as a banana. A neural network watchdog may be implemented to identify such out-of-distribution inputs, allowing a classifier to disregard such data. By building a hybrid generator/classifier network, we can easily implement a watchdog while improving training and evaluation efficiency.
