Glauco A. Amigo

Generatively Augmented Neural Network Watchdog for Image Classification Networks

Sep 07, 2021

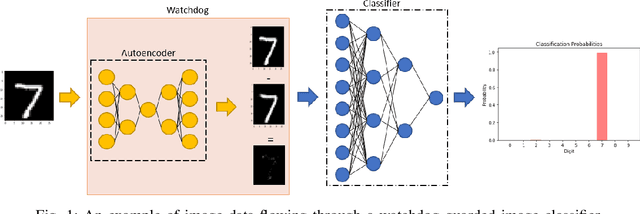

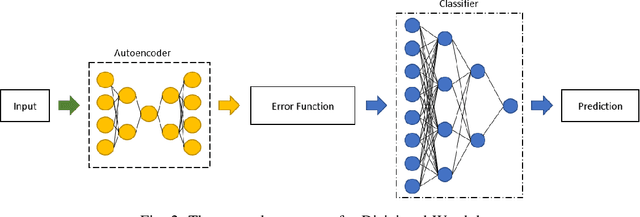

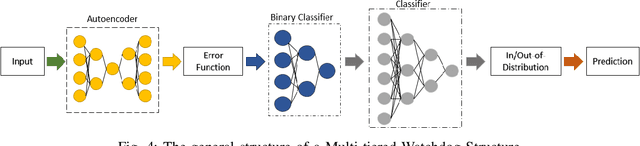

Abstract: The identification of out-of-distribution data is vital to the deployment of classification networks. For example, a generic neural network trained to differentiate between images of dogs and cats can only classify an input as either a dog or a cat. If a picture of a car or a kumquat were supplied to this classifier, the result would still be either a dog or a cat. To mitigate this, techniques such as the neural network watchdog have been developed. The watchdog relies on an autoencoder: the compression of the image input into the autoencoder's latent layer defines the in-distribution region of the image space. This in-distribution set of input data has a corresponding boundary in the image space, and the watchdog assesses whether inputs lie inside or outside this boundary. This paper demonstrates how to sharpen this boundary using generative network training data augmentation, thereby improving the discrimination and overall performance of the watchdog.
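To make the watchdog idea concrete, the following is a minimal sketch rather than the paper's implementation. It assumes a toy two-dimensional "image space", a one-dimensional linear autoencoder (the principal axis of the training data) in place of a neural autoencoder, and a watchdog that accepts an input when its reconstruction error falls below a threshold taken from the training data. The abstract does not spell out the scoring rule or the threshold, so both are assumptions, and all function names are hypothetical. The generative augmentation step is not shown.

```python
import math
import random


def fit_linear_autoencoder(points):
    """Fit a 1-D linear 'autoencoder' (mean + principal axis) to 2-D points."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    sxx = sum((p[0] - mx) ** 2 for p in points) / n
    syy = sum((p[1] - my) ** 2 for p in points) / n
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points) / n
    # Closed-form angle of the major axis of a 2x2 covariance matrix.
    theta = 0.5 * math.atan2(2 * sxy, sxx - syy)
    return (mx, my), (math.cos(theta), math.sin(theta))


def reconstruction_error(model, p):
    """Encode p to a 1-D latent code, decode it, and return the distance to p."""
    (mx, my), (ux, uy) = model
    dx, dy = p[0] - mx, p[1] - my
    z = dx * ux + dy * uy                # encode: projection onto the axis
    rx, ry = mx + z * ux, my + z * uy    # decode: back to the input space
    return math.hypot(p[0] - rx, p[1] - ry)


def fit_threshold(model, points, quantile=0.99):
    """Assumed rule: use a high quantile of training errors as the boundary."""
    errs = sorted(reconstruction_error(model, p) for p in points)
    return errs[min(len(errs) - 1, int(quantile * len(errs)))]


def watchdog_accepts(model, threshold, p):
    """True if p is treated as in-distribution, False if flagged as outside."""
    return reconstruction_error(model, p) <= threshold


# Synthetic in-distribution data: points scattered closely around y = 2x.
random.seed(0)
train = []
for _ in range(500):
    t = random.uniform(-1, 1)
    train.append((t, 2 * t + random.gauss(0, 0.05)))

model = fit_linear_autoencoder(train)
threshold = fit_threshold(model, train)

print(watchdog_accepts(model, threshold, (0.5, 1.0)))    # point on the line
print(watchdog_accepts(model, threshold, (1.0, -2.0)))   # point far from it
```

In this sketch the threshold plays the role of the in-distribution boundary: a tighter threshold rejects more inputs, a looser one passes more of them to the classifier. The abstract's contribution, sharpening that boundary with generatively produced training data, would change how the autoencoder is trained rather than how the check itself is applied.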
