Junyoung Byun

Safeguarding Privacy of Retrieval Data against Membership Inference Attacks: Is This Query Too Close to Home?

May 28, 2025

Abstract:Retrieval-augmented generation (RAG) mitigates the hallucination problem in large language models (LLMs) and has proven effective for specific, personalized applications. However, passing private retrieved documents directly to LLMs introduces vulnerability to membership inference attacks (MIAs), which try to determine whether the target datum exists in the private external database or not. Based on the insight that MIA queries typically exhibit high similarity to only one target document, we introduce Mirabel, a similarity-based MIA detection framework designed for the RAG system. With the proposed Mirabel, we show that simple detect-and-hide strategies can successfully obfuscate attackers, maintain data utility, and remain system-agnostic. We experimentally prove its detection and defense against various state-of-the-art MIA methods and its adaptability to existing private RAG systems.
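
The detect-and-hide idea can be illustrated with a minimal sketch. The abstract does not spell out Mirabel's actual detection statistic, so the gap test between the best and second-best similarity, the `gap_threshold` value, and both function names below are hypothetical stand-ins chosen only to show the "high similarity to only one document" intuition.

```python
from typing import List


def looks_like_mia(similarities: List[float], gap_threshold: float = 0.3) -> bool:
    """Flag a query whose best match stands far above its second-best match.

    Assumption: a large top-1 / top-2 gap is used here as a simple proxy for
    "high similarity to only one target document".
    """
    if len(similarities) < 2:
        return False
    ranked = sorted(similarities, reverse=True)
    return (ranked[0] - ranked[1]) > gap_threshold


def detect_and_hide(docs: List[str], similarities: List[float],
                    gap_threshold: float = 0.3) -> List[str]:
    """Return the documents to pass to the LLM, hiding the suspected target."""
    if not looks_like_mia(similarities, gap_threshold):
        return list(docs)
    # Hide only the single document the query is suspiciously close to.
    top = max(range(len(docs)), key=lambda i: similarities[i])
    return [d for i, d in enumerate(docs) if i != top]


# A query that matches one document far better than the rest is treated as suspicious.
suspicious = detect_and_hide(["a", "b", "c"], [0.95, 0.40, 0.35])
# A query with evenly spread similarities passes through unchanged.
benign = detect_and_hide(["a", "b", "c"], [0.60, 0.55, 0.50])
```

Because the check only looks at retrieval similarities, a filter of this shape sits between the retriever and the LLM and does not depend on either component's internals.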

Leveraging Programmatically Generated Synthetic Data for Differentially Private Diffusion Training

Dec 13, 2024

Abstract:Programmatically generated synthetic data has been used in differentially private training for classification to enhance performance without privacy leakage. However, as the synthetic data is generated from a random process, the distributions of the real data and the synthetic data are distinguishable and difficult to transfer. Therefore, the model trained with the synthetic data generates unrealistic random images, raising challenges to adapt the synthetic data for generative models. In this work, we propose DP-SynGen, which leverages programmatically generated synthetic data in diffusion models to address this challenge. By exploiting the three stages of diffusion models (coarse, context, and cleaning), we identify stages where synthetic data can be effectively utilized. We theoretically and empirically verified that cleaning and coarse stages can be trained without private data, replacing them with synthetic data to reduce the privacy budget. The experimental results show that DP-SynGen improves the quality of generative data by mitigating the negative impact of privacy-induced noise on the generation process.

Introducing Competition to Boost the Transferability of Targeted Adversarial Examples through Clean Feature Mixup

May 24, 2023

Abstract:Deep neural networks are widely known to be susceptible to adversarial examples, which can cause incorrect predictions through subtle input modifications. These adversarial examples tend to be transferable between models, but targeted attacks still have lower attack success rates due to significant variations in decision boundaries. To enhance the transferability of targeted adversarial examples, we propose introducing competition into the optimization process. Our idea is to craft adversarial perturbations in the presence of two new types of competitor noises: adversarial perturbations towards different target classes and friendly perturbations towards the correct class. With these competitors, even if an adversarial example deceives a network to extract specific features leading to the target class, this disturbance can be suppressed by other competitors. Therefore, within this competition, adversarial examples should take different attack strategies by leveraging more diverse features to overwhelm their interference, which improves their transferability to different models. Considering the computational complexity, we efficiently simulate various interference from these two types of competitors in feature space by randomly mixing up stored clean features in the model inference, and we name this method Clean Feature Mixup (CFM). Our extensive experimental results on the ImageNet-Compatible and CIFAR-10 datasets show that the proposed method outperforms the existing baselines with a clear margin. Our code is available at https://github.com/dreamflake/CFM.

Improving the Utility of Differentially Private Clustering through Dynamical Processing

Apr 27, 2023

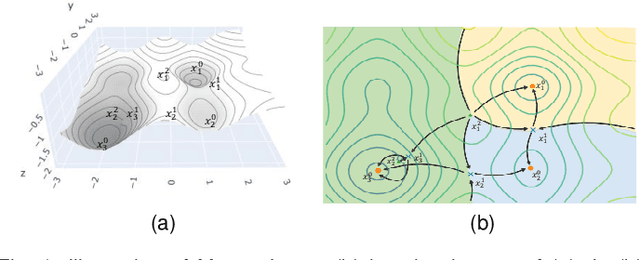

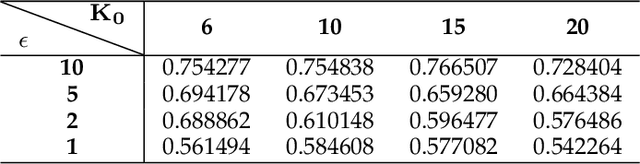

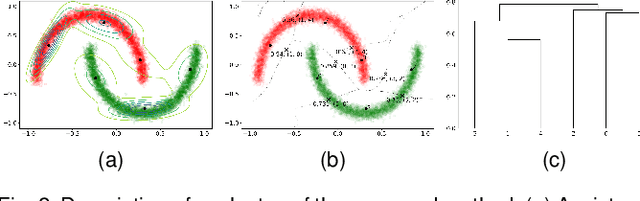

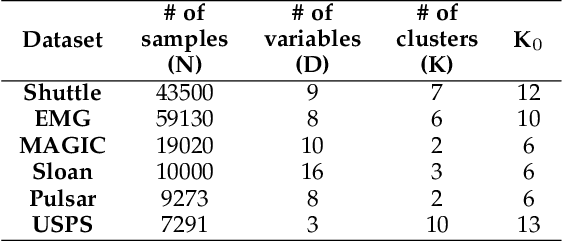

Abstract:This study aims to alleviate the trade-off between utility and privacy in the task of differentially private clustering. Existing works focus on simple clustering methods, which show poor clustering performance for non-convex clusters. By utilizing Morse theory, we hierarchically connect the Gaussian sub-clusters to fit complex cluster distributions. Because differentially private sub-clusters are obtained through the existing methods, the proposed method causes little or no additional privacy loss. We provide a theoretical background that implies that the proposed method is inductive and can achieve any desired number of clusters. Experiments on various datasets show that our framework achieves better clustering performance at the same privacy level, compared to the existing methods.

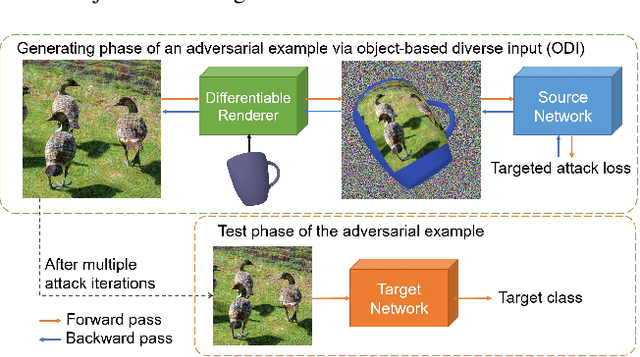

Improving the Transferability of Targeted Adversarial Examples through Object-Based Diverse Input

Mar 17, 2022

Abstract:The transferability of adversarial examples allows the deception of black-box models, and transfer-based targeted attacks have attracted a lot of interest due to their practical applicability. To maximize the transfer success rate, adversarial examples should avoid overfitting to the source model, and image augmentation is one of the primary approaches for this. However, prior works utilize simple image transformations such as resizing, which limits input diversity. To tackle this limitation, we propose the object-based diverse input (ODI) method that draws an adversarial image on a 3D object and induces the rendered image to be classified as the target class. Our motivation comes from humans' superior perception of an image printed on a 3D object. If the image is clear enough, humans can recognize the image content in a variety of viewing conditions. Likewise, if an adversarial example looks like the target class to the model, the model should also classify the rendered image of the 3D object as the target class. The ODI method effectively diversifies the input by leveraging an ensemble of multiple source objects and randomizing viewing conditions. In our experimental results on the ImageNet-Compatible dataset, this method boosts the average targeted attack success rate from 28.3% to 47.0% compared to the state-of-the-art methods. We also demonstrate the applicability of the ODI method to adversarial examples on the face verification task and its superior performance improvement. Our code is available at https://github.com/dreamflake/ODI.

Geometrically Adaptive Dictionary Attack on Face Recognition

Nov 08, 2021

Abstract:CNN-based face recognition models have brought remarkable performance improvement, but they are vulnerable to adversarial perturbations. Recent studies have shown that adversaries can fool the models even if they can only access the models' hard-label output. However, since many queries are needed to find imperceptible adversarial noise, reducing the number of queries is crucial for these attacks. In this paper, we point out two limitations of existing decision-based black-box attacks. We observe that they waste queries for background noise optimization, and they do not take advantage of adversarial perturbations generated for other images. We exploit 3D face alignment to overcome these limitations and propose a general strategy for query-efficient black-box attacks on face recognition named Geometrically Adaptive Dictionary Attack (GADA). Our core idea is to create an adversarial perturbation in the UV texture map and project it onto the face in the image. It greatly improves query efficiency by limiting the perturbation search space to the facial area and effectively recycling previous perturbations. We apply the GADA strategy to two existing attack methods and show overwhelming performance improvement in the experiments on the LFW and CPLFW datasets. Furthermore, we also present a novel attack strategy that can circumvent query similarity-based stateful detection that identifies the process of query-based black-box attacks.

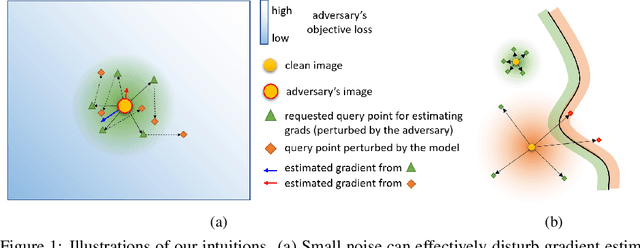

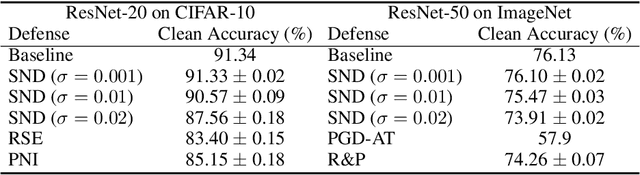

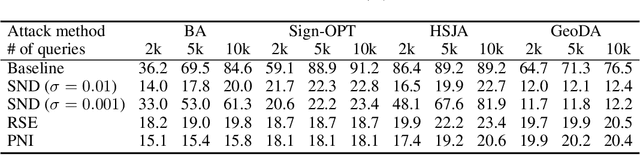

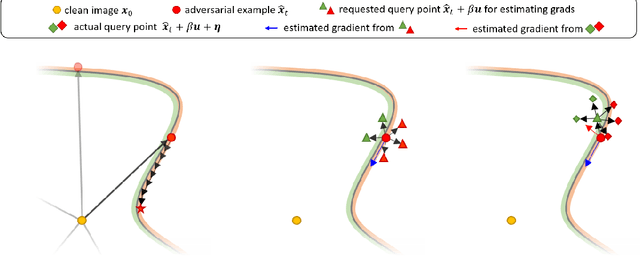

Small Input Noise is Enough to Defend Against Query-based Black-box Attacks

Jan 13, 2021

Abstract:While deep neural networks show unprecedented performance in various tasks, the vulnerability to adversarial examples hinders their deployment in safety-critical systems. Many studies have shown that attacks are also possible even in a black-box setting where an adversary cannot access the target model's internal information. Most black-box attacks are based on queries, each of which obtains the target model's output for an input, and many recent studies focus on reducing the number of required queries. In this paper, we pay attention to an implicit assumption of these attacks that the target model's output exactly corresponds to the query input. If some randomness is introduced into the model to break this assumption, query-based attacks may have tremendous difficulty in both gradient estimation and local search, which are the core of their attack process. From this motivation, we observe even a small additive input noise can neutralize most query-based attacks and name this simple yet effective approach Small Noise Defense (SND). We analyze how SND can defend against query-based black-box attacks and demonstrate its effectiveness against eight different state-of-the-art attacks with CIFAR-10 and ImageNet datasets. Even with strong defense ability, SND almost maintains the original clean accuracy and computational speed. SND is readily applicable to pre-trained models by adding only one line of code at the inference stage, so we hope that it will be used as a baseline of defense against query-based black-box attacks in the future.
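
The "one line of code at the inference stage" can be pictured with a small sketch, written here in plain Python rather than a deep learning framework. The noise scale `sigma=0.01`, the helper names, and the toy linear model are all illustrative assumptions, not values or code from the paper.

```python
import random
from typing import Callable, List, Optional


def add_small_noise(x: List[float], sigma: float = 0.01,
                    rng: Optional[random.Random] = None) -> List[float]:
    """Add independent Gaussian noise to every input element.

    sigma is a hypothetical value; it should be small enough that clean
    accuracy is essentially unchanged.
    """
    source = rng if rng is not None else random
    return [v + source.gauss(0.0, sigma) for v in x]


def defended_predict(model: Callable[[List[float]], float], x: List[float],
                     sigma: float = 0.01,
                     rng: Optional[random.Random] = None) -> float:
    """Wrap inference so the model never sees the exact query input."""
    return model(add_small_noise(x, sigma, rng))


def toy_model(x: List[float]) -> float:
    """Stand-in for a real network: a fixed linear score."""
    return sum(w * v for w, v in zip([0.5, -1.0, 2.0], x))


rng = random.Random(0)
query = [0.2, 0.4, 0.6]
first = defended_predict(toy_model, query, rng=rng)
second = defended_predict(toy_model, query, rng=rng)
# The same query now yields slightly different outputs on each call, which
# breaks the attacker's assumption that outputs correspond exactly to inputs.
```

An attacker estimating gradients by finite differences compares outputs for inputs that differ by a tiny step, so output variation of comparable size from the injected noise makes those estimates unreliable.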

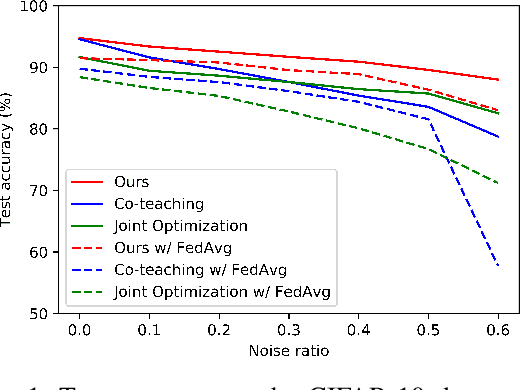

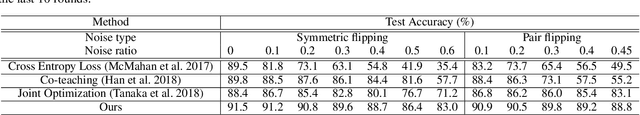

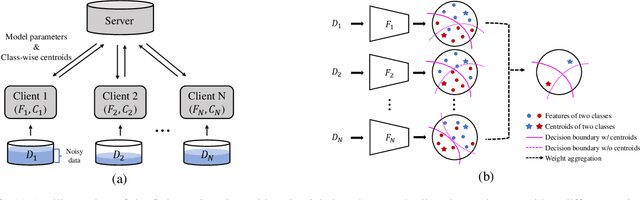

Robust Federated Learning with Noisy Labels

Dec 03, 2020

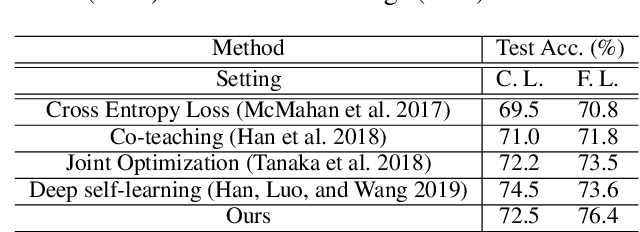

Abstract:Federated learning is a paradigm that enables local devices to jointly train a server model while keeping the data decentralized and private. In federated learning, since local data are collected by clients, it is hardly guaranteed that the data are correctly annotated. Although a lot of studies have been conducted to train networks that are robust to these noisy data in a centralized setting, these algorithms still suffer from noisy labels in federated learning. Compared to the centralized setting, clients' data can have different noise distributions due to variations in their labeling systems or background knowledge of users. As a result, local models form inconsistent decision boundaries and their weights severely diverge from each other, which are serious problems in federated learning. To solve these problems, we introduce a novel federated learning scheme in which the server cooperates with local models to maintain consistent decision boundaries by interchanging class-wise centroids. These centroids are central features of local data on each device, which are aligned by the server every communication round. Updating local models with the aligned centroids helps to form consistent decision boundaries among local models, although the noise distributions in clients' data are different from each other. To improve local model performance, we introduce a novel approach to select confident samples that are used for updating the model with given labels. Furthermore, we propose a global-guided pseudo-labeling method to update labels of unconfident samples by exploiting the global model. Our experimental results on the noisy CIFAR-10 dataset and the Clothing1M dataset show that our approach is noticeably effective in federated learning with noisy labels.
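
As a rough illustration of exchanging class-wise centroids, the sketch below computes per-class mean feature vectors on each client and combines them on the server. The abstract does not say how the server aligns centroids, so the unweighted average used here, along with the function names, is an assumption made purely for illustration.

```python
from collections import defaultdict
from typing import Dict, List


def class_centroids(features: List[List[float]],
                    labels: List[int]) -> Dict[int, List[float]]:
    """Client side: mean feature vector for each class present locally."""
    sums: Dict[int, List[float]] = {}
    counts: Dict[int, int] = defaultdict(int)
    for feat, label in zip(features, labels):
        if label not in sums:
            sums[label] = [0.0] * len(feat)
        sums[label] = [s + v for s, v in zip(sums[label], feat)]
        counts[label] += 1
    return {c: [s / counts[c] for s in sums[c]] for c in sums}


def aggregate_centroids(
        client_centroids: List[Dict[int, List[float]]]) -> Dict[int, List[float]]:
    """Server side (assumed rule): unweighted mean over clients reporting a class."""
    grouped: Dict[int, List[List[float]]] = defaultdict(list)
    for centroids in client_centroids:
        for c, vec in centroids.items():
            grouped[c].append(vec)
    return {c: [sum(col) / len(vecs) for col in zip(*vecs)]
            for c, vecs in grouped.items()}


# Two clients with different local data; the second client also holds class 1.
client_a = class_centroids([[0.0, 0.0], [2.0, 2.0]], [0, 0])
client_b = class_centroids([[3.0, 5.0], [4.0, 0.0]], [0, 1])
global_centroids = aggregate_centroids([client_a, client_b])
```

Each client would then receive `global_centroids` back and use them as shared reference points during its local update, which is the step that encourages consistent decision boundaries across clients.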

BitNet: Learning-Based Bit-Depth Expansion

Oct 10, 2019

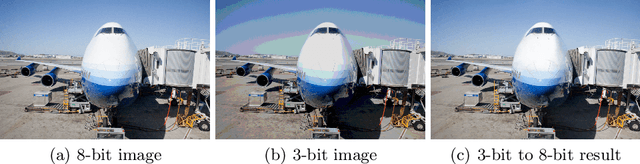

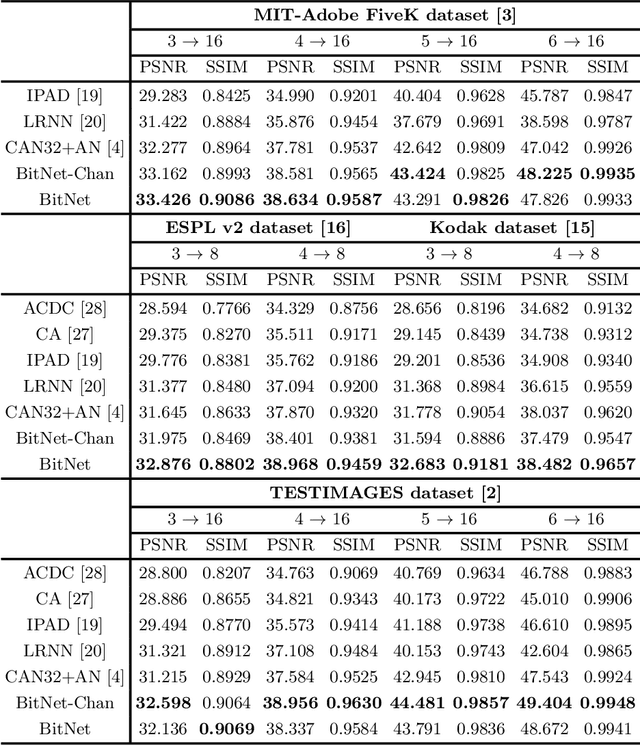

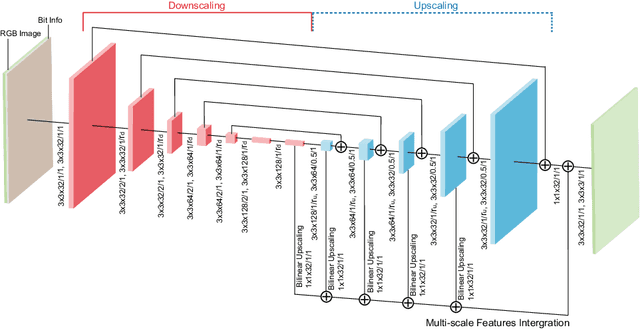

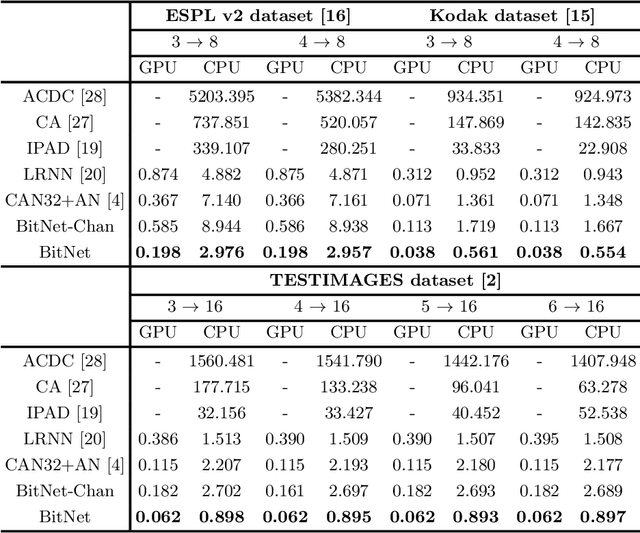

Abstract:Bit-depth is the number of bits for each color channel of a pixel in an image. Although many modern displays support unprecedented higher bit-depth to show more realistic and natural colors with a high dynamic range, most media sources are still in bit-depth of 8 or lower. Since insufficient bit-depth may generate annoying false contours or lose detailed visual appearance, bit-depth expansion (BDE) from low bit-depth (LBD) images to high bit-depth (HBD) images becomes more and more important. In this paper, we adopt a learning-based approach for BDE and propose a novel CNN-based bit-depth expansion network (BitNet) that can effectively remove false contours and restore visual details at the same time. We have carefully designed our BitNet based on an encoder-decoder architecture with dilated convolutions and a novel multi-scale feature integration. We have performed various experiments with four different datasets including MIT-Adobe FiveK, Kodak, ESPL v2, and TESTIMAGES, and our proposed BitNet has achieved state-of-the-art performance in terms of PSNR and SSIM among other existing BDE methods and famous CNN-based image processing networks. Unlike previous methods that separately process each color channel, we treat all RGB channels at once and have greatly improved color restoration. In addition, our network has shown the fastest computational speed in near real-time.
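
To make the bit-depth definition and the false-contour problem concrete, here is a short sketch of reducing a channel value to a lower bit-depth and naively expanding it again by zero padding. This is a simple baseline for illustration, not BitNet; the function names are hypothetical.

```python
from typing import List


def reduce_bit_depth(value: int, src_bits: int, dst_bits: int) -> int:
    """Quantize a channel value by dropping the least significant bits."""
    return value >> (src_bits - dst_bits)


def expand_zero_padding(value: int, src_bits: int, dst_bits: int) -> int:
    """Naive expansion: shift left and fill the new low bits with zeros."""
    return value << (dst_bits - src_bits)


# A smooth 8-bit gradient with 256 distinct levels.
gradient: List[int] = list(range(256))

# Store it at 4 bits per channel, then expand back to 8 bits.
low = [reduce_bit_depth(v, 8, 4) for v in gradient]
restored = [expand_zero_padding(v, 4, 8) for v in low]

# Only 16 distinct levels survive, so the smooth ramp becomes visible steps.
distinct_levels = len(set(restored))
```

The collapsed levels are what appear as false contours in smooth regions; a learning-based expansion aims to predict plausible values for the missing low bits instead of filling them with zeros.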
