Junwoo Chang

Group-Invariant Unsupervised Skill Discovery: Symmetry-aware Skill Representations for Generalizable Behavior

Jan 20, 2026

Abstract:Unsupervised skill discovery aims to acquire behavior primitives that improve exploration and accelerate downstream task learning. However, existing approaches often ignore the geometric symmetries of physical environments, leading to redundant behaviors and sample inefficiency. To address this, we introduce Group-Invariant Skill Discovery (GISD), a framework that explicitly embeds group structure into the skill discovery objective. Our approach is grounded in a theoretical guarantee: we prove that in group-symmetric environments, the standard Wasserstein dependency measure admits a globally optimal solution comprised of an equivariant policy and a group-invariant scoring function. Motivated by this, we formulate the Group-Invariant Wasserstein dependency measure, which restricts the optimization to this symmetry-aware subspace without loss of optimality. Practically, we parameterize the scoring function using a group Fourier representation and define the intrinsic reward via the alignment of equivariant latent features, ensuring that the discovered skills generalize systematically under group transformations. Experiments on state-based and pixel-based locomotion benchmarks demonstrate that GISD achieves broader state-space coverage and improved efficiency in downstream task learning compared to a strong baseline.

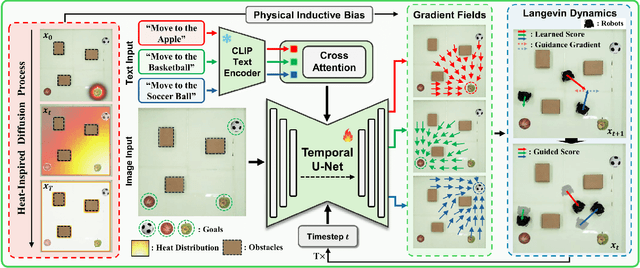

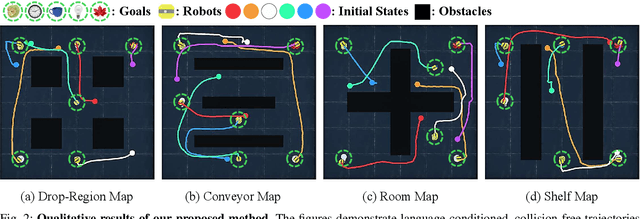

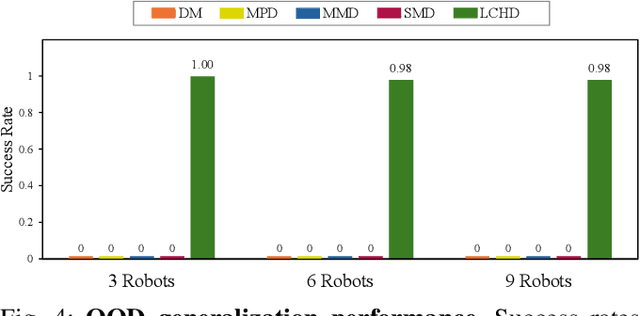

Multi-Robot Motion Planning from Vision and Language using Heat-Inspired Diffusion

Dec 15, 2025

Abstract:Diffusion models have recently emerged as powerful tools for robot motion planning by capturing the multi-modal distribution of feasible trajectories. However, their extension to multi-robot settings with flexible, language-conditioned task specifications remains limited. Furthermore, current diffusion-based approaches incur high computational cost during inference and struggle with generalization because they require explicit construction of environment representations and lack mechanisms for reasoning about geometric reachability. To address these limitations, we present Language-Conditioned Heat-Inspired Diffusion (LCHD), an end-to-end vision-based framework that generates language-conditioned, collision-free trajectories. LCHD integrates CLIP-based semantic priors with a collision-avoiding diffusion kernel serving as a physical inductive bias that enables the planner to interpret language commands strictly within the reachable workspace. This naturally handles out-of-distribution scenarios -- in terms of reachability -- by guiding robots toward accessible alternatives that match the semantic intent, while eliminating the need for explicit obstacle information at inference time. Extensive evaluations on diverse real-world-inspired maps, along with real-robot experiments, show that LCHD consistently outperforms prior diffusion-based planners in success rate, while reducing planning latency.

Symmetry-Aware Steering of Equivariant Diffusion Policies: Benefits and Limits

Dec 12, 2025

Abstract:Equivariant diffusion policies (EDPs) combine the generative expressivity of diffusion models with the strong generalization and sample efficiency afforded by geometric symmetries. While steering these policies with reinforcement learning (RL) offers a promising mechanism for fine-tuning beyond demonstration data, directly applying standard (non-equivariant) RL can be sample-inefficient and unstable, as it ignores the symmetries that EDPs are designed to exploit. In this paper, we theoretically establish that the diffusion process of an EDP is equivariant, which in turn induces a group-invariant latent-noise MDP that is well-suited for equivariant diffusion steering. Building on this theory, we introduce a principled symmetry-aware steering framework and compare standard, equivariant, and approximately equivariant RL strategies through comprehensive experiments across tasks with varying degrees of symmetry. While we identify the practical boundaries of strict equivariance under symmetry breaking, we show that exploiting symmetry during the steering process yields substantial benefits: enhancing sample efficiency, preventing value divergence, and achieving strong policy improvements even when EDPs are trained from extremely limited demonstrations.

SE(3)-Equivariant Robot Learning and Control: A Tutorial Survey

Mar 12, 2025

Abstract:Recent advances in deep learning and Transformers have driven major breakthroughs in robotics by employing techniques such as imitation learning, reinforcement learning, and LLM-based multimodal perception and decision-making. However, conventional deep learning and Transformer models often struggle to process data with inherent symmetries and invariances, typically relying on large datasets or extensive data augmentation. Equivariant neural networks overcome these limitations by explicitly integrating symmetry and invariance into their architectures, leading to improved efficiency and generalization. This tutorial survey reviews a wide range of equivariant deep learning and control methods for robotics, from classic to state-of-the-art, with a focus on SE(3)-equivariant models that leverage the natural 3D rotational and translational symmetries in visual robotic manipulation and control design. Using unified mathematical notation, we begin by reviewing key concepts from group theory, along with matrix Lie groups and Lie algebras. We then introduce foundational group-equivariant neural network design and show how the group-equivariance can be obtained through their structure. Next, we discuss the applications of SE(3)-equivariant neural networks in robotics in terms of imitation learning and reinforcement learning. The SE(3)-equivariant control design is also reviewed from the perspective of geometric control. Finally, we highlight the challenges and future directions of equivariant methods in developing more robust, sample-efficient, and multi-modal real-world robotic systems.

Denoising Heat-inspired Diffusion with Insulators for Collision Free Motion Planning

Oct 19, 2023

Abstract:Diffusion models have risen as a powerful tool in robotics due to their flexibility and multi-modality. While some of these methods effectively address complex problems, they often depend heavily on inference-time obstacle detection and require additional equipment. Addressing these challenges, we present a method that, during inference time, simultaneously generates only reachable goals and plans motions that avoid obstacles, all from a single visual input. Central to our approach is the novel use of a collision-avoiding diffusion kernel for training. Through evaluations against behavior-cloning and classical diffusion models, our framework has proven its robustness. It is particularly effective in multi-modal environments, navigating toward goals and avoiding unreachable ones blocked by obstacles, while ensuring collision avoidance.

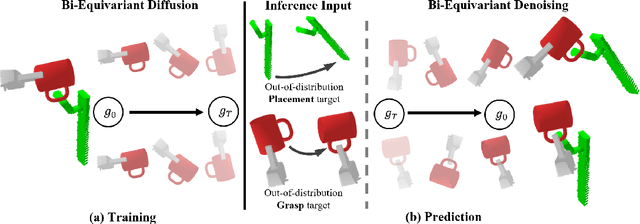

Diffusion-EDFs: Bi-equivariant Denoising Generative Modeling on SE(3) for Visual Robotic Manipulation

Sep 07, 2023

Abstract:Recent studies have verified that equivariant methods can significantly improve the data efficiency, generalizability, and robustness in robot learning. Meanwhile, denoising diffusion-based generative modeling has recently gained significant attention as a promising approach for robotic manipulation learning from demonstrations with stochastic behaviors. In this paper, we present Diffusion-EDFs, a novel approach that incorporates spatial roto-translation equivariance, i.e., SE(3)-equivariance to diffusion generative modeling. By integrating SE(3)-equivariance into our model architectures, we demonstrate that our proposed method exhibits remarkable data efficiency, requiring only 5 to 10 task demonstrations for effective end-to-end training. Furthermore, our approach showcases superior generalizability compared to previous diffusion-based manipulation methods.
