Jun he

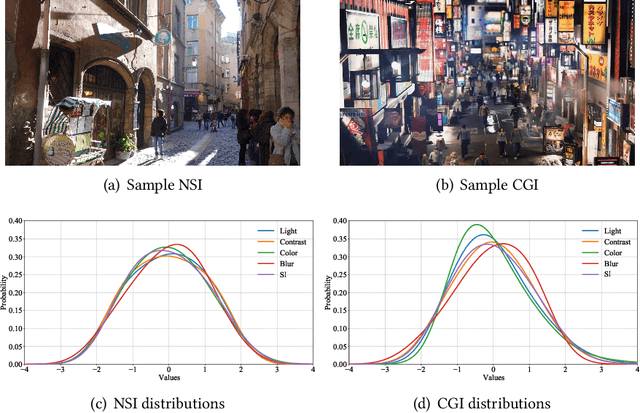

Subjective and Objective Quality Assessment for in-the-Wild Computer Graphics Images

Mar 14, 2023

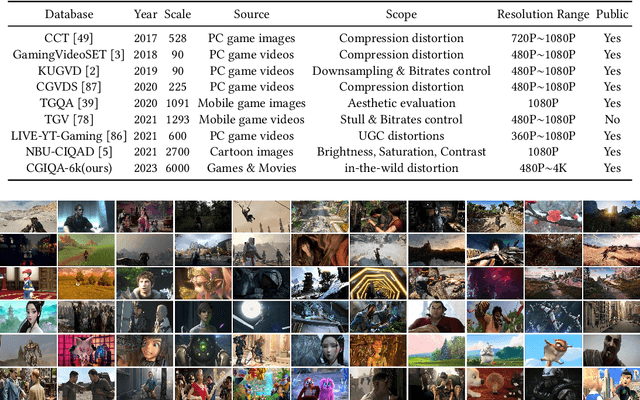

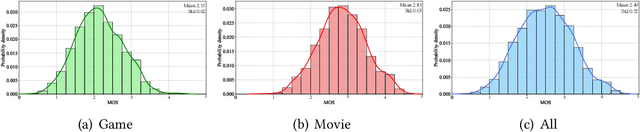

Abstract: Computer graphics images (CGIs) are artificially generated by computer programs and are widely viewed in scenarios such as games and streaming media. In practice, the quality of CGIs consistently suffers from poor rendering during production and from inevitable compression artifacts during transmission in multimedia applications. However, few works have addressed the challenge of computer graphics image quality assessment (CGIQA). Most image quality assessment (IQA) metrics are developed for natural scene images (NSIs) and validated on databases of NSIs with synthetic distortions, so they are not suitable for in-the-wild CGIs. To bridge the gap between evaluating the quality of NSIs and CGIs, we construct a large-scale in-the-wild CGIQA database consisting of 6,000 CGIs (CGIQA-6k) and carry out a subjective experiment in a well-controlled laboratory environment to obtain accurate perceptual ratings of the CGIs. We then propose an effective deep learning-based no-reference (NR) IQA model that uses a multi-stage feature fusion strategy and a multi-stage channel attention mechanism. The major motivation of the proposed model is to make full use of inter-channel information from low-level to high-level features, since CGIs have apparent patterns as well as rich interactive semantic content. Experimental results show that the proposed method outperforms all other state-of-the-art NR IQA methods on the constructed CGIQA-6k database and on other CGIQA-related databases. The database and the code will be released to facilitate further research.
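The abstract does not give architectural details, so the following is only a minimal illustrative sketch of what channel attention combined with multi-stage feature fusion can look like in general. It is not the authors' model. It assumes a squeeze-and-excitation style gate, and the names `global_average_pool`, `channel_attention`, `fuse_stages`, and the `weights` matrices are hypothetical stand-ins for learned components.

```python
import math


def global_average_pool(feature_map):
    """Squeeze a [C][H][W] feature map to one mean value per channel."""
    pooled = []
    for channel in feature_map:
        values = [v for row in channel for v in row]
        pooled.append(sum(values) / len(values))
    return pooled


def channel_attention(feature_map, weights):
    """Rescale each channel by a sigmoid gate derived from pooled statistics.

    `weights` is a hypothetical C x C matrix standing in for learned
    parameters; a real model would learn it during training.
    """
    pooled = global_average_pool(feature_map)
    gates = []
    for row in weights:
        score = sum(w * p for w, p in zip(row, pooled))
        gates.append(1.0 / (1.0 + math.exp(-score)))
    return [
        [[v * gate for v in row] for row in channel]
        for channel, gate in zip(feature_map, gates)
    ]


def fuse_stages(stage_maps, stage_weights):
    """Apply attention at every stage, pool, and concatenate the results."""
    fused = []
    for feature_map, weights in zip(stage_maps, stage_weights):
        attended = channel_attention(feature_map, weights)
        fused.extend(global_average_pool(attended))
    return fused


# Toy input: a low-level stage (2 channels, 2x2) and a high-level stage
# (1 channel, 1x1). All values are made up for illustration.
low_stage = [[[1.0, 1.0], [1.0, 1.0]], [[2.0, 2.0], [2.0, 2.0]]]
high_stage = [[[4.0]]]
descriptor = fuse_stages(
    [low_stage, high_stage],
    [[[1.0, 0.0], [0.0, 1.0]], [[0.0]]],
)
```

In this sketch the fused `descriptor` holds one value per channel across both stages; a quality regressor (not shown) would map such a descriptor to a predicted score.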
