Jun Gong

HICD: Hallucination-Inducing via Attention Dispersion for Contrastive Decoding to Mitigate Hallucinations in Large Language Models

Mar 17, 2025

Abstract:Large Language Models (LLMs) often generate hallucinations, producing outputs that are contextually inaccurate or factually incorrect. We introduce HICD, a novel method designed to induce hallucinations for contrastive decoding to mitigate hallucinations. Unlike existing contrastive decoding methods, HICD selects attention heads crucial to the model's prediction as inducing heads, then induces hallucinations by dispersing attention of these inducing heads and compares the hallucinated outputs with the original outputs to obtain the final result. Our approach significantly improves performance on tasks requiring contextual faithfulness, such as context completion, reading comprehension, and question answering. It also improves factuality in tasks requiring accurate knowledge recall. We demonstrate that our inducing heads selection and attention dispersion method leads to more "contrast-effective" hallucinations for contrastive decoding, outperforming other hallucination-inducing methods. Our findings provide a promising strategy for reducing hallucinations by inducing hallucinations in a controlled manner, enhancing the performance of LLMs in a wide range of tasks.
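The comparison step described in this abstract can be illustrated with a generic contrastive-decoding sketch. This is not the HICD implementation: the scoring rule `(1 + alpha) * log p_orig - alpha * log p_induced`, the plausibility cutoff `beta`, and the function names are assumptions borrowed from common contrastive-decoding formulations, and the logits are made-up toy values standing in for the original model and the hallucination-induced model.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax over a list of logits."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def contrastive_scores(orig_logits, induced_logits, alpha=1.0, beta=0.1):
    """Score tokens by contrasting original and induced distributions.

    alpha and beta are hypothetical hyperparameters, not values from the paper.
    Tokens whose original probability is below beta * (max original probability)
    are masked out so that implausible tokens cannot win the contrast.
    """
    lp_orig = log_softmax(orig_logits)
    lp_ind = log_softmax(induced_logits)
    cutoff = max(lp_orig) + math.log(beta)
    return [
        (1 + alpha) * lo - alpha * li if lo >= cutoff else float("-inf")
        for lo, li in zip(lp_orig, lp_ind)
    ]


# Toy next-token logits over a three-token vocabulary (illustrative only).
orig = [2.0, 1.8, -1.0]      # original model slightly prefers token 0
induced = [2.5, 0.5, -1.0]   # induced model strongly prefers token 0

scores = contrastive_scores(orig, induced)
next_token = max(range(len(scores)), key=scores.__getitem__)
```

In this toy case the token that the induced model favors is penalized, so the contrast selects token 1 even though greedy decoding on the original logits would pick token 0.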

Hybrid Beamforming Design for RSMA-assisted mmWave Integrated Sensing and Communications

Jun 07, 2024

Abstract:Integrated sensing and communications (ISAC) has been considered one of the new paradigms for sixth-generation (6G) wireless networks. In the millimeter-wave (mmWave) ISAC system, hybrid beamforming (HBF) is considered an emerging technology to exploit the limited number of radio frequency (RF) chains in order to reduce the system hardware cost and power consumption. However, the HBF structure reduces the spatial degrees of freedom for the ISAC system, which further leads to increased interference between multiple users and between users and radar sensing. To solve the above problem, rate split multiple access (RSMA), which is a flexible and robust interference management strategy, is considered. We investigate the joint common rate allocation and HBF design problem for the HBF-based RSMA-assisted mmWave ISAC scheme. We propose the penalty dual decomposition (PDD) method coupled with the weighted mean squared error (WMMSE) minimization method to solve this high-dimensional non-convex problem, which converges to the Karush-Kuhn-Tucker (KKT) point of the original problem. Then, we extend the proposed algorithm to the HBF design based on finite-resolution phase shifters (PSs) to further improve the energy efficiency of the system. Simulation results demonstrate the effectiveness of the proposed algorithm and show that the RSMA-ISAC scheme outperforms other benchmark schemes.

Advancing Location-Invariant and Device-Agnostic Motion Activity Recognition on Wearable Devices

Feb 06, 2024

Abstract:Wearable sensors have permeated into people's lives, ushering impactful applications in interactive systems and activity recognition. However, practitioners face significant obstacles when dealing with sensing heterogeneities, requiring custom models for different platforms. In this paper, we conduct a comprehensive evaluation of the generalizability of motion models across sensor locations. Our analysis highlights this challenge and identifies key on-body locations for building location-invariant models that can be integrated on any device. For this, we introduce the largest multi-location activity dataset (N=50, 200 cumulative hours), which we make publicly available. We also present deployable on-device motion models reaching 91.41% frame-level F1-score from a single model irrespective of sensor placements. Lastly, we investigate cross-location data synthesis, aiming to alleviate the laborious data collection tasks by synthesizing data in one location given data from another. These contributions advance our vision of low-barrier, location-invariant activity recognition systems, catalyzing research in HCI and ubiquitous computing.

Noise-to-Norm Reconstruction for Industrial Anomaly Detection and Localization

Jul 06, 2023

Abstract:Anomaly detection has a wide range of applications and is especially important in industrial quality inspection. Currently, many top-performing anomaly-detection models rely on feature-embedding methods. However, these methods do not perform well on datasets with large variations in object locations. Reconstruction-based methods use reconstruction errors to detect anomalies without considering positional differences between samples. In this study, a reconstruction-based method using the noise-to-norm paradigm is proposed, which avoids the invariant reconstruction of anomalous regions. Our reconstruction network is based on M-net and incorporates multiscale fusion and residual attention modules to enable end-to-end anomaly detection and localization. Experiments demonstrate that the method is effective in reconstructing anomalous regions into normal patterns and achieving accurate anomaly detection and localization. On the MPDD and VisA datasets, our proposed method achieved more competitive results than the latest methods, and it set a new state-of-the-art standard on the MPDD dataset.

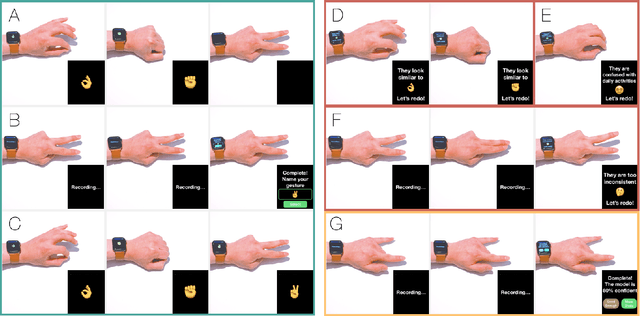

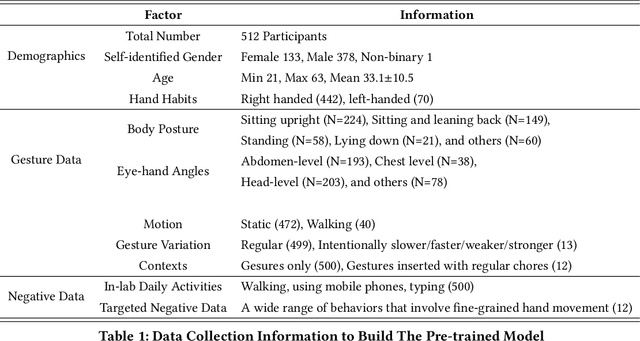

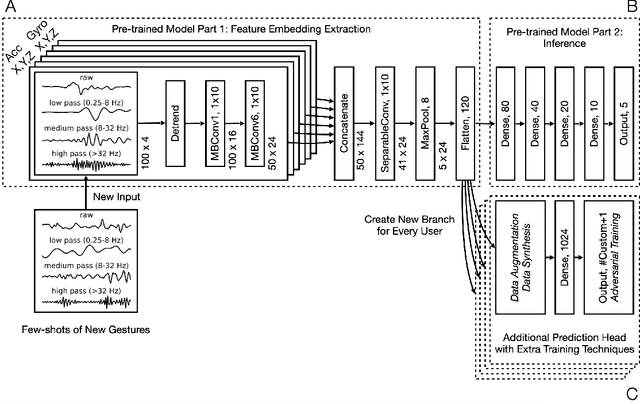

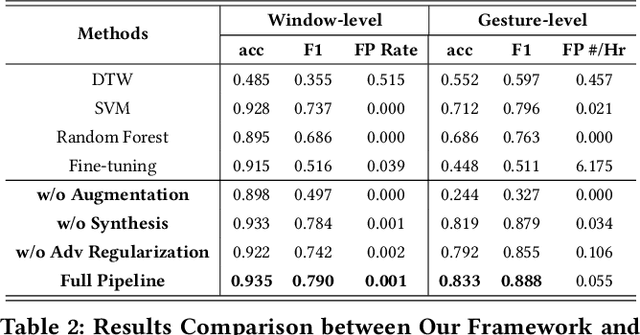

Enabling hand gesture customization on wrist-worn devices

Mar 29, 2022

Abstract:We present a framework for gesture customization requiring minimal examples from users, all without degrading the performance of existing gesture sets. To achieve this, we first deployed a large-scale study (N=500+) to collect data and train an accelerometer-gyroscope recognition model with a cross-user accuracy of 95.7% and a false-positive rate of 0.6 per hour when tested on everyday non-gesture data. Next, we design a few-shot learning framework which derives a lightweight model from our pre-trained model, enabling knowledge transfer without performance degradation. We validate our approach through a user study (N=20) examining on-device customization from 12 new gestures, resulting in an average accuracy of 55.3%, 83.1%, and 87.2% on using one, three, or five shots when adding a new gesture, while maintaining the same recognition accuracy and false-positive rate from the pre-existing gesture set. We further evaluate the usability of our real-time implementation with a user experience study (N=20). Our results highlight the effectiveness, learnability, and usability of our customization framework. Our approach paves the way for a future where users are no longer bound to pre-existing gestures, freeing them to creatively introduce new gestures tailored to their preferences and abilities.
