Juan Miguel Valverde

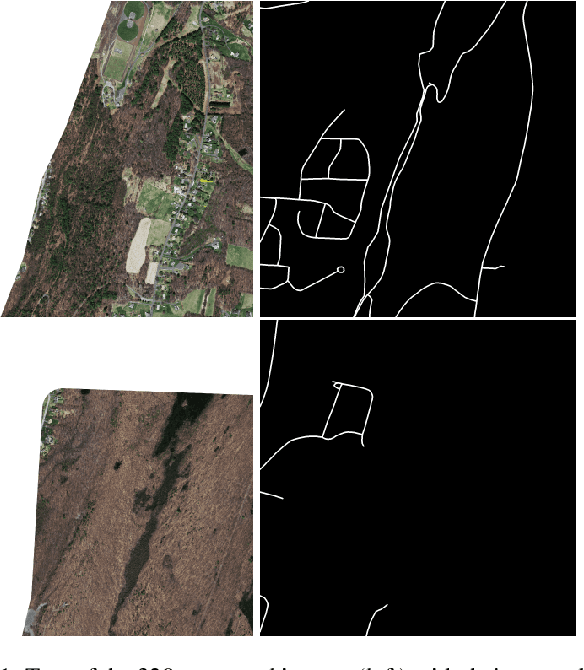

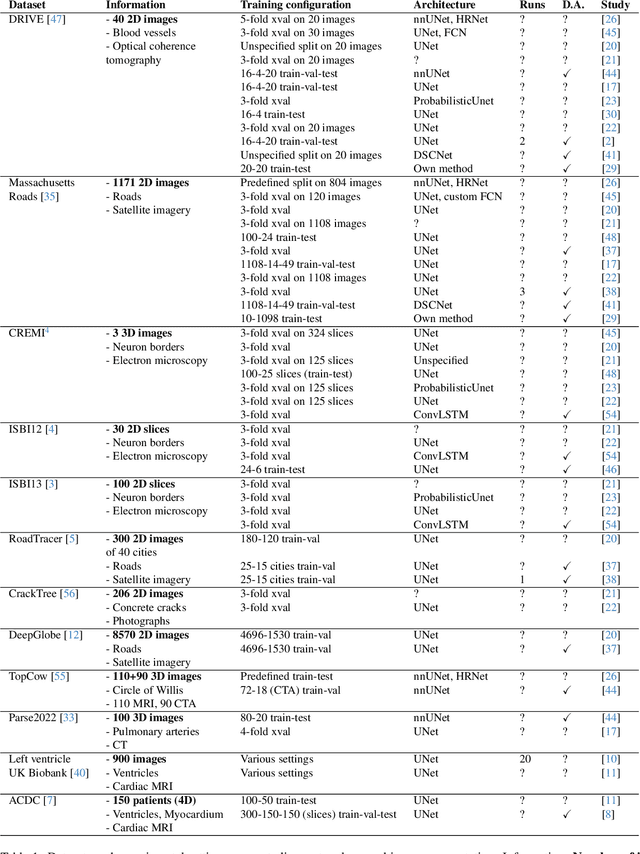

Disconnect to Connect: A Data Augmentation Method for Improving Topology Accuracy in Image Segmentation

Mar 07, 2025

Abstract:Accurate segmentation of thin, tubular structures (e.g., blood vessels) is challenging for deep neural networks. These networks classify individual pixels, and even minor misclassifications can break the thin connections within these structures. Existing methods for improving topology accuracy, such as topology loss functions, rely on very precise, topologically-accurate training labels, which are difficult to obtain. This is because annotating images, especially 3D images, is extremely laborious and time-consuming. Low image resolution and contrast further complicate the annotation by causing tubular structures to appear disconnected. We present CoLeTra, a data augmentation strategy that integrates into models the prior knowledge that structures that appear broken are actually connected. This is achieved by creating images with the appearance of disconnected structures while maintaining the original labels. Our extensive experiments, involving different architectures, loss functions, and datasets, demonstrate that CoLeTra leads to topologically more accurate segmentations while often improving the Dice coefficient and Hausdorff distance. CoLeTra's hyper-parameters are intuitive to tune, and our sensitivity analysis shows that CoLeTra is robust to changes in these hyper-parameters. We also release a dataset specifically suited for image segmentation methods with a focus on topology accuracy. CoLeTra's code can be found at https://github.com/jmlipman/CoLeTra.
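The idea of creating images that look disconnected while keeping the original labels can be illustrated with a minimal NumPy sketch. This is not the released CoLeTra implementation: the function name `disconnect_augment`, the parameters `n_breaks` and `half_size`, the random choice of break locations, and the use of the median background intensity as fill value are all assumptions made for illustration.

```python
import numpy as np

def disconnect_augment(image, label, n_breaks=3, half_size=2, rng=None):
    """Paint small background-like patches over labeled structures.

    The label is returned unchanged, so a model trained on the pair is
    asked to predict a connected structure where the image looks broken.
    """
    rng = np.random.default_rng() if rng is None else rng
    out = image.astype(float)  # astype returns a copy; the input stays intact
    foreground = np.argwhere(label > 0)
    if len(foreground) == 0:
        return out, label
    # Assumption: the median background intensity is an adequate fill value.
    background = image[label == 0]
    fill = float(np.median(background)) if background.size else 0.0
    n_picks = min(n_breaks, len(foreground))
    picks = rng.choice(len(foreground), size=n_picks, replace=False)
    for r, c in foreground[picks]:
        r0, r1 = max(r - half_size, 0), r + half_size + 1
        c0, c1 = max(c - half_size, 0), c + half_size + 1
        out[r0:r1, c0:c1] = fill  # overwrite a square patch around the pixel
    return out, label

# Toy example: a one-pixel-wide horizontal line and its label.
image = np.zeros((16, 16))
image[8, :] = 1.0
label = (image > 0).astype(np.uint8)
aug_image, aug_label = disconnect_augment(
    image, label, rng=np.random.default_rng(0)
)
```

In the toy example the augmented image has gaps along the line, while `aug_label` still marks the full line as foreground.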

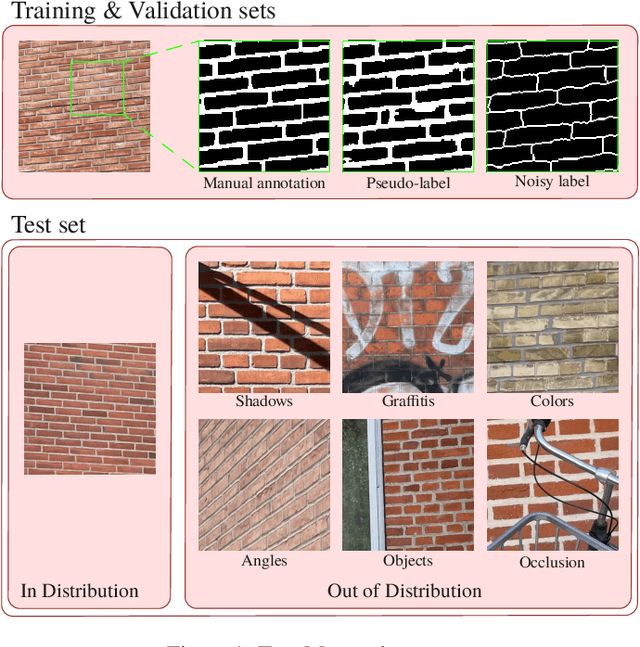

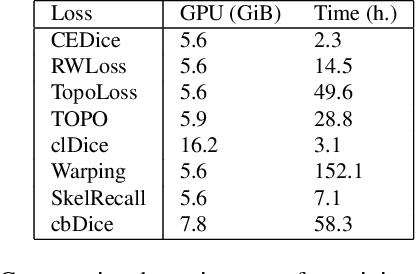

TopoMortar: A dataset to evaluate image segmentation methods focused on topology accuracy

Mar 05, 2025

Abstract:We present TopoMortar, a brick wall dataset that is the first dataset specifically designed to evaluate topology-focused image segmentation methods, such as topology loss functions. TopoMortar makes it possible to investigate, in two ways, whether methods incorporate prior topological knowledge. First, by eliminating challenges seen in real-world data, such as small training sets, noisy labels, and out-of-distribution test-set images, that, as we show, impact the effectiveness of topology losses. Second, by allowing topology accuracy to be assessed across dataset challenges within the same dataset, isolating dataset-related effects from the effect of incorporating prior topological knowledge. In these two experiments, it is deliberately difficult to improve topology accuracy without actually using topology information, so an improvement in topology accuracy can be attributed to the incorporation of prior topological knowledge. To this end, TopoMortar includes three types of labels (accurate, noisy, pseudo-labels), two fixed training sets (large and small), and in-distribution and out-of-distribution test-set images. We compared eight loss functions on TopoMortar, and we found that clDice achieved the most topologically accurate segmentations, Skeleton Recall loss performed best particularly with noisy labels, and the relative advantage of the other loss functions depended on the experimental setting. Additionally, we show that simple methods, such as data augmentation and self-distillation, can elevate Cross entropy Dice loss to surpass most topology loss functions, and that those simple methods can enhance topology loss functions as well. clDice and Skeleton Recall loss, both skeletonization-based loss functions, were also the fastest to train, making this type of loss function a promising research direction. TopoMortar and our code can be found at https://github.com/jmlipman/TopoMortar

Sauron U-Net: Simple automated redundancy elimination in medical image segmentation via filter pruning

Sep 27, 2022

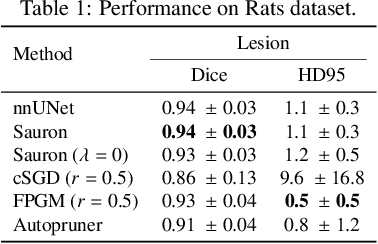

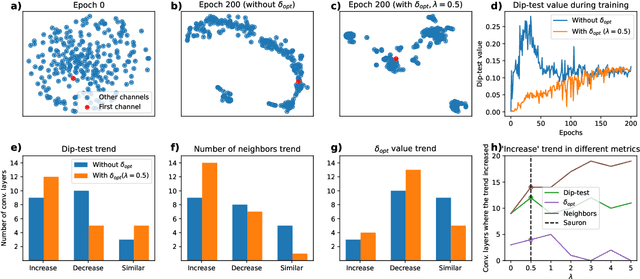

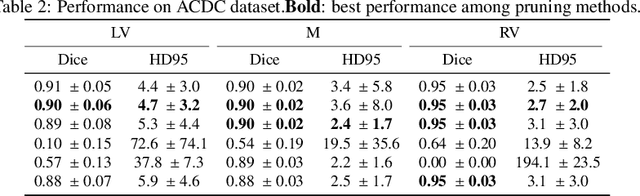

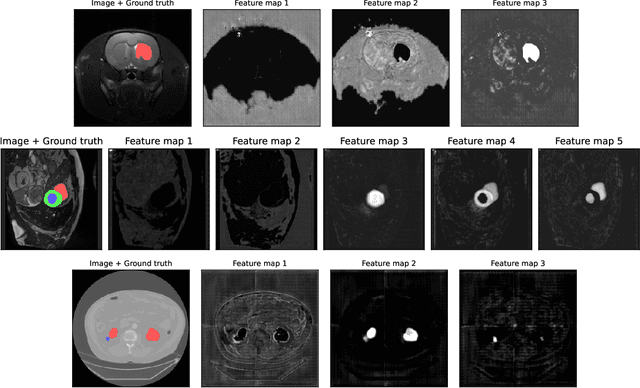

Abstract:We present Sauron, a filter pruning method that eliminates redundant feature maps by discarding the corresponding filters with automatically-adjusted layer-specific thresholds. Furthermore, Sauron minimizes a regularization term that, as we show with various metrics, promotes the formation of feature-map clusters. In contrast to most filter pruning methods, Sauron is single-phase, similar to typical neural network optimization, requiring fewer hyperparameters and design decisions. Additionally, unlike other cluster-based approaches, our method does not require pre-selecting the number of clusters, which is non-trivial to determine and varies across layers. We evaluated Sauron and three state-of-the-art filter pruning methods on three medical image segmentation tasks. This is an area where filter pruning has received little attention and where it can help build efficient models for medical-grade computers that cannot use cloud services due to privacy considerations. Sauron achieved models with higher performance and pruning rate than the competing pruning methods. Additionally, since Sauron removes filters during training, its optimization accelerated over time. Finally, we show that the feature maps of a Sauron-pruned model were highly interpretable. The Sauron code is publicly available at https://github.com/jmlipman/SauronUNet.

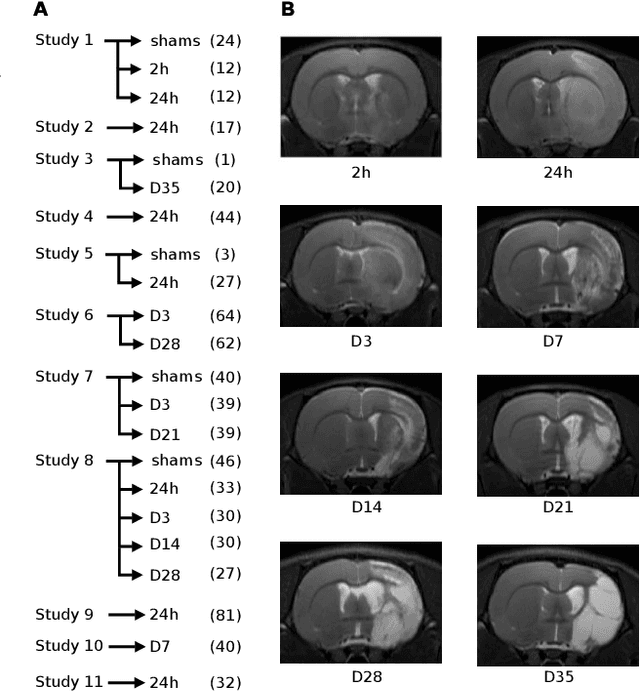

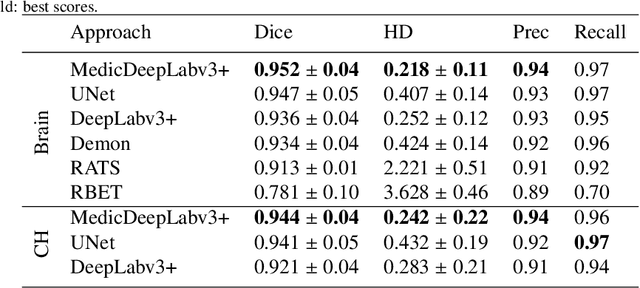

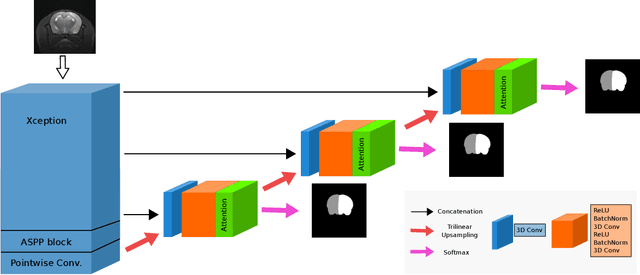

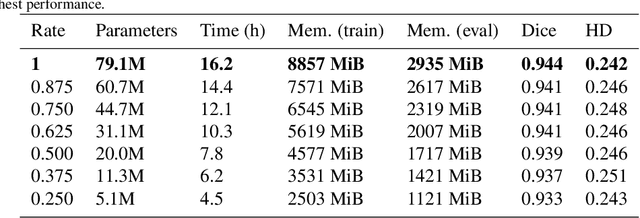

Automatic hemisphere segmentation in rodent MRI with lesions

Aug 04, 2021

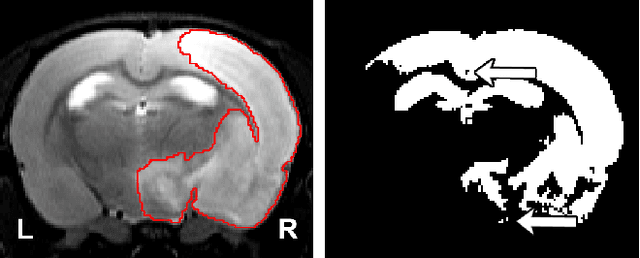

Abstract:We present MedicDeepLabv3+, a convolutional neural network that is the first completely automatic method to segment brain hemispheres in magnetic resonance (MR) images of rodents with lesions. MedicDeepLabv3+ improves the state-of-the-art DeepLabv3+ with an advanced decoder, incorporating spatial attention layers and additional skip connections that, as we show in our experiments, lead to more precise segmentations. MedicDeepLabv3+ requires no MR image preprocessing, such as bias-field correction or registration to a template, produces segmentations in less than a second, and its GPU memory requirements can be adjusted based on the available resources. Using a large dataset of 723 MR rat brain images, we evaluated our MedicDeepLabv3+, two state-of-the-art convolutional neural networks (DeepLabv3+, UNet) and three approaches that were specifically designed for skull-stripping rodent MR images (Demon, RATS and RBET). In our experiments, MedicDeepLabv3+ outperformed the other methods, yielding an average Dice coefficient of 0.952 and 0.944 in the brain and contralateral hemisphere regions. Additionally, we show that despite limiting the GPU memory and the training data to only three images, our MedicDeepLabv3+ also provided satisfactory segmentations. In conclusion, our method, publicly available at https://github.com/jmlipman/MedicDeepLabv3Plus, yielded excellent results in multiple scenarios, demonstrating its capability to reduce human workload in rodent neuroimaging studies.
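For readers unfamiliar with the metric reported above, the Dice coefficient between a predicted and a reference binary mask is twice the overlap divided by the sum of the two mask sizes. The sketch below is a generic NumPy version, not code from the MedicDeepLabv3+ repository; the function name and the convention of returning 1.0 for two empty masks are assumptions.

```python
import numpy as np

def dice_coefficient(pred, target):
    """Dice = 2 * |pred AND target| / (|pred| + |target|) for binary masks."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    denom = pred.sum() + target.sum()
    if denom == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return float(2.0 * np.logical_and(pred, target).sum() / denom)

# Toy masks: 3 foreground pixels each, 2 of them shared.
pred = [[1, 1, 0],
        [0, 1, 0]]
target = [[1, 0, 0],
          [0, 1, 1]]
score = dice_coefficient(pred, target)  # 2 * 2 / (3 + 3) = 0.666...
```

A value of 1 means perfect overlap and 0 means no overlap, so the reported averages of 0.952 and 0.944 indicate near-complete agreement with the reference masks.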

Region-wise Loss for Biomedical Image Segmentation

Aug 03, 2021

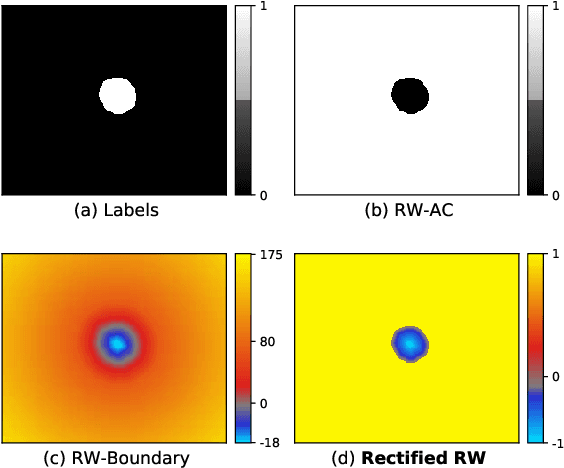

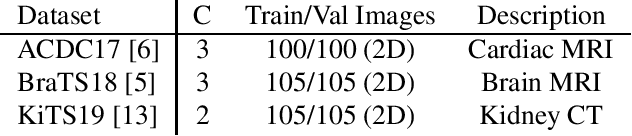

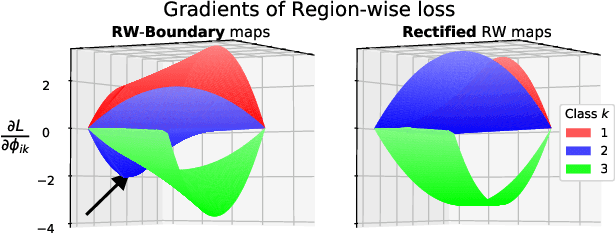

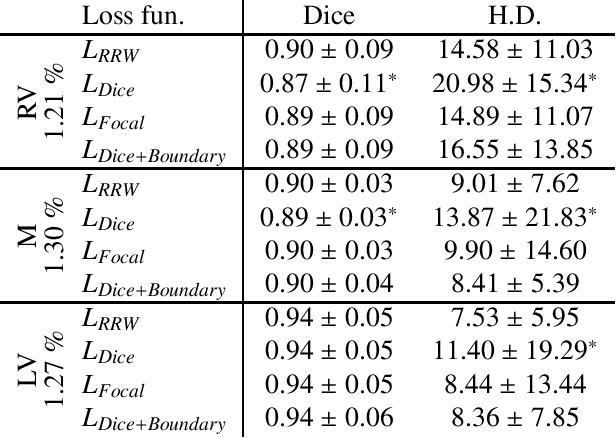

Abstract:We propose Region-wise (RW) loss for biomedical image segmentation. Region-wise loss is versatile, can simultaneously account for class imbalance and pixel importance, and can be easily implemented as the pixel-wise multiplication between the softmax output and a RW map. We show that, under the proposed Region-wise loss framework, certain loss functions, such as Active Contour and Boundary loss, can be reformulated similarly with appropriate RW maps, thus revealing their underlying similarities and a new perspective to understand these loss functions. We investigate the observed optimization instability caused by certain RW maps, such as Boundary loss distance maps, and we introduce a mathematically-grounded principle to avoid such instability. This principle provides excellent adaptability to any dataset and practically ensures convergence without extra regularization terms or optimization tricks. Following this principle, we propose a simple version of boundary distance maps called rectified RW maps that, as we demonstrate in our experiments, achieve state-of-the-art performance with similar or better Dice coefficients and Hausdorff distances than Dice, Focal, and Boundary losses in three distinct segmentation tasks. We quantify the optimization instability caused by Boundary loss distance maps, and we empirically show that our rectified RW maps are stable to optimize. The code to run all our experiments is publicly available at: https://github.com/jmlipman/RegionWiseLoss.
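The description of the loss as a pixel-wise multiplication between the softmax output and a RW map can be sketched in a few lines of NumPy. This is an illustration, not the code from the RegionWiseLoss repository: the per-pixel averaging and the toy RW map (penalty 1 for every wrong class, 0 for the true class) are assumptions, and the toy map is not the rectified RW map proposed in the paper.

```python
import numpy as np

def softmax(logits, axis=0):
    """Numerically stable softmax along the class axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def region_wise_loss(logits, rw_map):
    """Multiply softmax probabilities by a RW map of the same shape.

    logits, rw_map: arrays of shape (classes, height, width).
    Averaging over pixels is an assumption made for this sketch.
    """
    probs = softmax(logits, axis=0)
    n_pixels = logits.shape[1] * logits.shape[2]
    return float((probs * rw_map).sum() / n_pixels)

# Toy 2-class problem on a 2x2 image.
labels = np.array([[0, 1],
                   [1, 0]])
one_hot = np.stack([labels == 0, labels == 1]).astype(float)
toy_rw_map = 1.0 - one_hot  # hypothetical map: penalize wrong classes only

uniform_logits = np.zeros((2, 2, 2))
confident_logits = 20.0 * (2.0 * one_hot - 1.0)  # strongly favors the labels

loss_uniform = region_wise_loss(uniform_logits, toy_rw_map)      # 0.5
loss_confident = region_wise_loss(confident_logits, toy_rw_map)  # close to 0
```

With this toy map the loss equals the average probability mass assigned to wrong classes. Other maps, such as distance-based ones, change how much each pixel and class contributes without changing the multiplication itself.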

Transfer Learning in Magnetic Resonance Brain Imaging: a Systematic Review

Feb 02, 2021

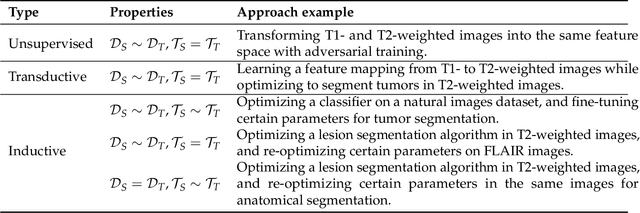

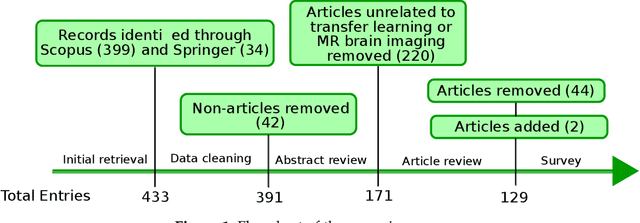

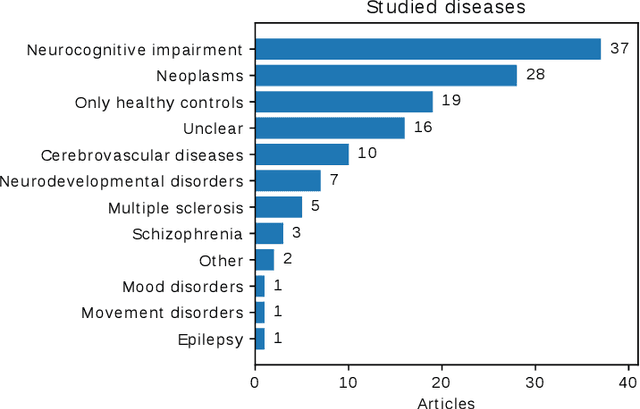

Abstract:Transfer learning refers to machine learning techniques that focus on acquiring knowledge from related tasks to improve generalization in the tasks of interest. In MRI, transfer learning is important for developing strategies that address the variation in MR images. Additionally, transfer learning is beneficial for reusing machine learning models that were trained on related tasks for the task of interest. Our goal is to identify research directions, knowledge gaps, applications, and widely used strategies among the transfer learning approaches applied in MR brain imaging. We performed a systematic literature search for articles that applied transfer learning to MR brain imaging. We screened 433 studies and we categorized and extracted relevant information, including task type, application, and machine learning methods. Furthermore, we closely examined brain MRI-specific transfer learning approaches and other methods that tackled privacy, unseen target domains, and unlabeled data. We found 129 articles that applied transfer learning to brain MRI tasks. The most frequent applications were dementia-related classification tasks and brain tumor segmentation. A majority of articles utilized transfer learning on convolutional neural networks (CNNs). Only a few approaches were clearly brain MRI-specific or considered privacy issues, unseen target domains, or unlabeled data. We proposed a new categorization to group specific, widely-used approaches. There is an increasing interest in transfer learning within brain MRI. Public datasets have contributed to the popularity of Alzheimer's diagnostics/prognostics and tumor segmentation. Likewise, the availability of pretrained CNNs has promoted their utilization. Finally, the majority of the surveyed studies did not examine in detail the interpretation of their strategies after applying transfer learning, and did not compare to other approaches.

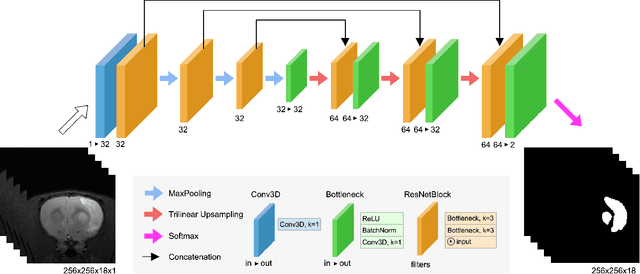

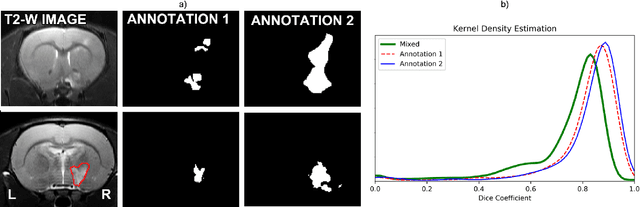

RatLesNetv2: A Fully Convolutional Network for Rodent Brain Lesion Segmentation

Jan 24, 2020

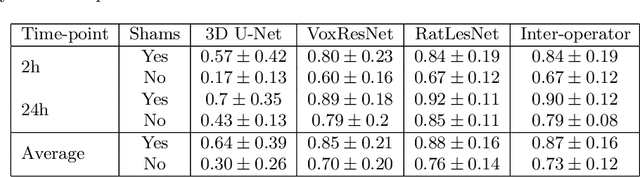

Abstract:Segmentation of rodent brain lesions on magnetic resonance images (MRIs) is a time-consuming task with high inter- and intra-operator variability due to its subjective nature. We present a three-dimensional fully convolutional neural network (ConvNet) named RatLesNetv2 for segmenting rodent brain lesions. We compare its performance with other ConvNets on an unusually large and heterogeneous data set composed of 916 T2-weighted rat brain scans at nine different lesion stages. RatLesNetv2 obtained similar or higher Dice coefficients than the other ConvNets and it produced much more realistic and compact segmentations with notably fewer holes and lower Hausdorff distance. RatLesNetv2-derived segmentations also exceeded inter-rater agreement Dice coefficients. Additionally, we show that training on disparate ground truths leads to significantly different segmentations, and we study RatLesNetv2 generalization capability when optimizing for training sets of different sizes. RatLesNetv2 is publicly available at https://github.com/jmlipman/RatLesNetv2.
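The Hausdorff distance mentioned above measures the largest distance from any point of one segmentation to its nearest point in the other, taken in both directions. The following is a brute-force NumPy sketch over all foreground pixels, assuming unit pixel spacing. It is not taken from the RatLesNetv2 code, and evaluations in practice often restrict the computation to boundary voxels or use a percentile variant.

```python
import numpy as np

def hausdorff_distance(mask_a, mask_b):
    """Symmetric Hausdorff distance between the foregrounds of two masks."""
    a = np.argwhere(np.asarray(mask_a, dtype=bool))
    b = np.argwhere(np.asarray(mask_b, dtype=bool))
    if len(a) == 0 or len(b) == 0:
        raise ValueError("both masks need at least one foreground pixel")
    # Pairwise Euclidean distances, shape (len(a), len(b)).
    dists = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    a_to_b = dists.min(axis=1).max()  # farthest point of a from b
    b_to_a = dists.min(axis=0).max()  # farthest point of b from a
    return float(max(a_to_b, b_to_a))

# Toy masks: mask_b contains one extra pixel at offset (3, 4) from mask_a.
mask_a = np.zeros((5, 5), dtype=bool)
mask_a[0, 0] = True
mask_b = mask_a.copy()
mask_b[3, 4] = True
distance = hausdorff_distance(mask_a, mask_b)  # sqrt(3**2 + 4**2) = 5.0
```

Because a single stray pixel determines the result, the metric is sensitive to small isolated errors, which is why it complements the Dice coefficient.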

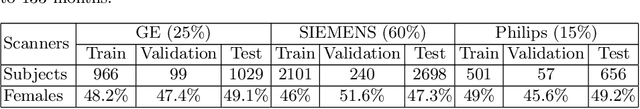

Predicting intelligence based on cortical WM/GM contrast, cortical thickness and volumetry

Sep 09, 2019

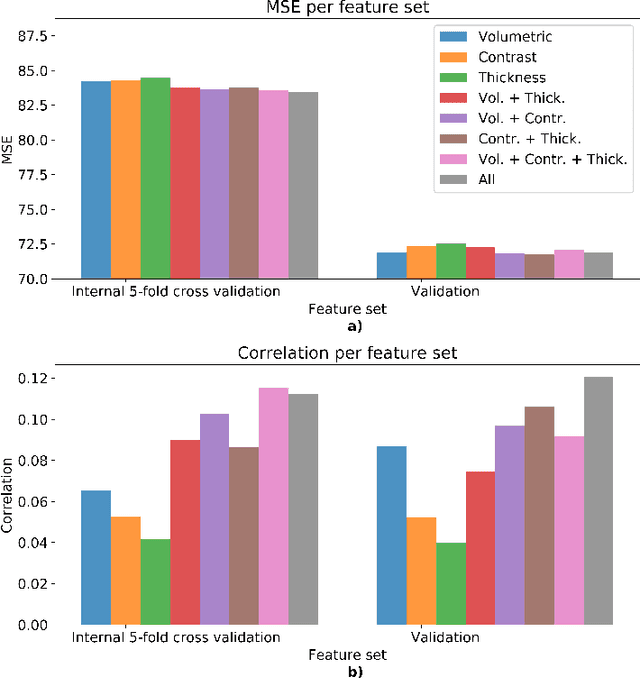

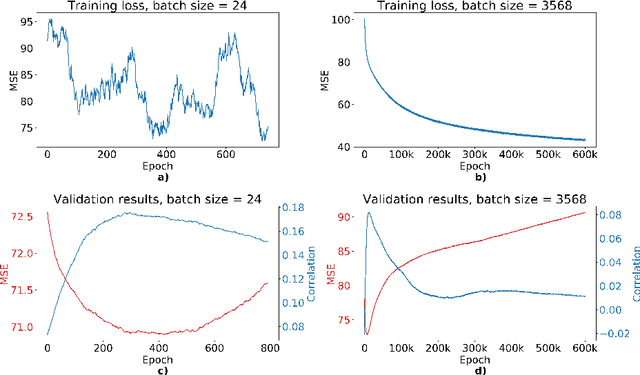

Abstract:We propose a four-layer fully-connected neural network (FNN) for predicting fluid intelligence scores from T1-weighted MR images for the ABCD-challenge. In addition to the volumes of brain structures, the FNN uses cortical WM/GM contrast and cortical thickness at 78 cortical regions. These last two measurements were derived from the T1-weighted MR images using cortical surfaces produced by the CIVET pipeline. The age and gender of the subjects and the scanner manufacturer are also used as features for the learning algorithm. This yielded 283 features provided to the FNN with two hidden layers of 20 and 15 nodes. The method was applied to the data from the ABCD study. Trained with a training set of 3736 subjects, the proposed method achieved an MSE of 71.596 and a correlation of 0.151 in the validation set of 415 subjects. For the final submission, the model was trained with 3568 subjects and it achieved an MSE of 94.0270 in the test set comprising 4383 subjects.
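The network shape described above (283 input features, hidden layers of 20 and 15 nodes, one output score) and the two reported metrics can be sketched with NumPy. Only the layer sizes come from the abstract. The ReLU activation, the weight initialization, and the use of Pearson correlation are assumptions, and the sketch shows an untrained forward pass rather than the authors' model.

```python
import numpy as np

# Input, two hidden layers, and one output, as stated in the abstract.
LAYER_SIZES = [283, 20, 15, 1]

def init_params(rng):
    """Random weights and zero biases (the initialization is an assumption)."""
    return [
        (rng.normal(0.0, 0.1, size=(n_in, n_out)), np.zeros(n_out))
        for n_in, n_out in zip(LAYER_SIZES[:-1], LAYER_SIZES[1:])
    ]

def forward(params, x):
    """Forward pass; ReLU on the hidden layers is an assumption."""
    for i, (weights, bias) in enumerate(params):
        x = x @ weights + bias
        if i < len(params) - 1:
            x = np.maximum(x, 0.0)
    return x[:, 0]  # one predicted score per subject

def mse(pred, target):
    pred, target = np.asarray(pred, float), np.asarray(target, float)
    return float(np.mean((pred - target) ** 2))

def pearson(pred, target):
    return float(np.corrcoef(pred, target)[0, 1])

rng = np.random.default_rng(0)
params = init_params(rng)
features = rng.normal(size=(5, 283))  # 5 hypothetical subjects
scores = forward(params, features)
n_params = sum(w.size + b.size for w, b in params)  # 5680 + 315 + 16 = 6011
```

With these layer sizes the model has 6011 trainable parameters, a small number relative to the 3736 training subjects.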

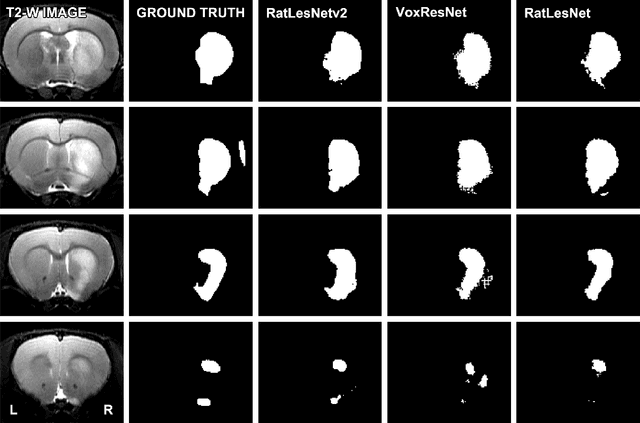

Automatic Rodent Brain MRI Lesion Segmentation with Fully Convolutional Networks

Aug 23, 2019

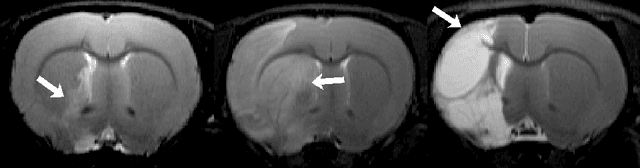

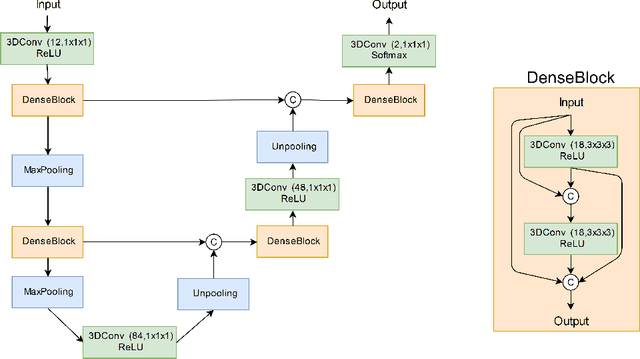

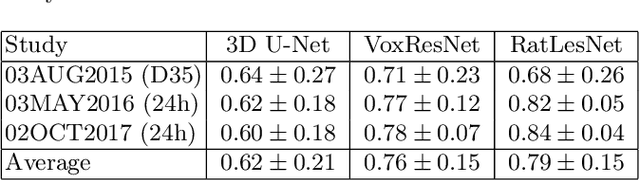

Abstract:Manual segmentation of rodent brain lesions from magnetic resonance images (MRIs) is an arduous, time-consuming and subjective task that is highly important in pre-clinical research. Several automatic methods have been developed for various human brain MRI segmentation tasks, but little research has targeted automatic rodent lesion segmentation. The existing tools for performing automatic lesion segmentation in rodents are constrained by strict assumptions about the data. Deep learning has been successfully used for medical image segmentation. However, there has not been any deep learning approach specifically designed for tackling rodent brain lesion segmentation. In this work, we propose a novel Fully Convolutional Network (FCN), RatLesNet, for the aforementioned task. Our dataset consists of 131 T2-weighted rat brain scans from 4 different studies in which ischemic stroke was induced by transient middle cerebral artery occlusion. We compare our method with two other 3D FCNs originally developed for anatomical segmentation (VoxResNet and 3D-U-Net) with 5-fold cross-validation on a single study and a generalization test, where the training was done on a single study and testing on the three remaining studies. The labels generated by our method were quantitatively and qualitatively better than the predictions of the compared methods. The average Dice coefficient achieved in the 5-fold cross-validation experiment with the proposed approach was 0.88, between 3.7% and 38% higher than the compared architectures. The presented architecture also outperformed the other FCNs at generalizing to different studies, achieving an average Dice coefficient of 0.79.
