Joseph Geumlek

Privacy Amplification by Mixing and Diffusion Mechanisms

May 29, 2019

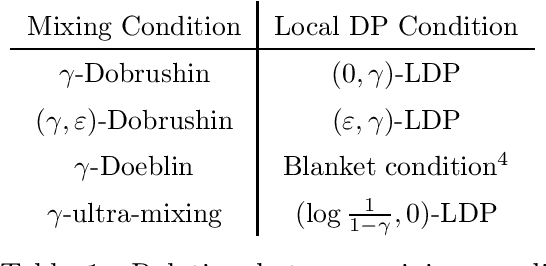

Abstract: A fundamental result in differential privacy states that the privacy guarantees of a mechanism are preserved by any post-processing of its output. In this paper we investigate under what conditions stochastic post-processing can amplify the privacy of a mechanism. By interpreting post-processing as the application of a Markov operator, we first give a series of amplification results in terms of uniform mixing properties of the Markov process defined by said operator. Next we provide amplification bounds in terms of coupling arguments which can be applied in cases where uniform mixing is not available. Finally, we introduce a new family of mechanisms based on diffusion processes which are closed under post-processing, and analyze their privacy via a novel heat flow argument. As applications, we show that the rate of "privacy amplification by iteration" in Noisy SGD introduced by Feldman et al. [FOCS'18] admits an exponential improvement in the strongly convex case, and propose a simple mechanism based on the Ornstein-Uhlenbeck process which has better mean squared error than the Gaussian mechanism when releasing a bounded function of the data.

Rényi Differential Privacy Mechanisms for Posterior Sampling

Oct 02, 2017

Abstract: Using a recently proposed privacy definition of Rényi Differential Privacy (RDP), we re-examine the inherent privacy of releasing a single sample from a posterior distribution. We exploit the impact of the prior distribution in mitigating the influence of individual data points. In particular, we focus on sampling from an exponential family and specific generalized linear models, such as logistic regression. We propose novel RDP mechanisms as well as offering a new RDP analysis for an existing method in order to add value to the RDP framework. Each method is capable of achieving arbitrary RDP privacy guarantees, and we offer experimental results of their efficacy.

On the Theory and Practice of Privacy-Preserving Bayesian Data Analysis

Jun 09, 2016

Abstract: Bayesian inference has great promise for the privacy-preserving analysis of sensitive data, as posterior sampling automatically preserves differential privacy, an algorithmic notion of data privacy, under certain conditions (Dimitrakakis et al., 2014; Wang et al., 2015). While this one posterior sample (OPS) approach elegantly provides privacy "for free," it is data inefficient in the sense of asymptotic relative efficiency (ARE). We show that a simple alternative based on the Laplace mechanism, the workhorse of differential privacy, is as asymptotically efficient as non-private posterior inference, under general assumptions. This technique also has practical advantages including efficient use of the privacy budget for MCMC. We demonstrate the practicality of our approach on a time-series analysis of sensitive military records from the Afghanistan and Iraq wars disclosed by the Wikileaks organization.

* Updated to match the accepted UAI version. Generalized the ARE result and included a more detailed proof. Improved some figures, etc.
