Privacy Amplification by Mixing and Diffusion Mechanisms

May 29, 2019

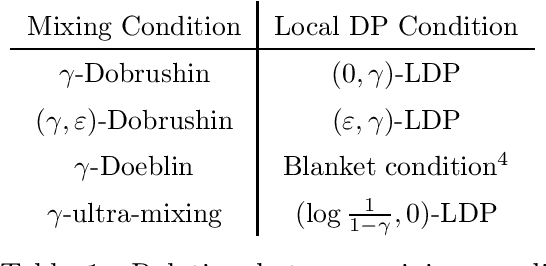

A fundamental result in differential privacy states that the privacy guarantees of a mechanism are preserved by any post-processing of its output. In this paper we investigate under what conditions stochastic post-processing can amplify the privacy of a mechanism. By interpreting post-processing as the application of a Markov operator, we first give a series of amplification results in terms of uniform mixing properties of the Markov process defined by said operator. Next we provide amplification bounds in terms of coupling arguments which can be applied in cases where uniform mixing is not available. Finally, we introduce a new family of mechanisms based on diffusion processes which are closed under post-processing, and analyze their privacy via a novel heat flow argument. As applications, we show that the rate of "privacy amplification by iteration" in Noisy SGD introduced by Feldman et al. [FOCS'18] admits an exponential improvement in the strongly convex case, and propose a simple mechanism based on the Ornstein-Uhlenbeck process which has better mean squared error than the Gaussian mechanism when releasing a bounded function of the data.
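To make the last application more concrete, here is a minimal sketch of what releasing a value through an Ornstein-Uhlenbeck process could look like. The abstract does not give the mechanism's parametrization, so everything here is an assumption: the process is taken to be the standard SDE dX = -theta X dt + sqrt(2) rho dW, the names `theta`, `rho`, `t`, `ou_transition_params` and `ou_release` are hypothetical, and no privacy calibration is attempted. The sketch only uses the well-known Gaussian transition law of that SDE: started at x, the state at time t has mean exp(-theta t) x and variance (rho^2 / theta)(1 - exp(-2 theta t)).

```python
import math
import random


def ou_transition_params(x, theta, rho, t):
    """Mean and variance of X_t given X_0 = x for the assumed SDE
    dX = -theta * X dt + sqrt(2) * rho dW (illustrative parametrization)."""
    decay = math.exp(-theta * t)
    mean = decay * x                                 # value is shrunk toward 0
    var = (rho ** 2 / theta) * (1.0 - decay ** 2)    # noise variance saturates at rho^2 / theta
    return mean, var


def ou_release(x, theta, rho, t, rng):
    """Draw one noisy output by sampling the OU transition law exactly."""
    mean, var = ou_transition_params(x, theta, rho, t)
    return rng.gauss(mean, math.sqrt(var))


# Hypothetical usage: release the value 1.0 many times and look at the average.
rng = random.Random(0)
samples = [ou_release(1.0, theta=1.0, rho=1.0, t=0.5, rng=rng) for _ in range(20000)]
empirical_mean = sum(samples) / len(samples)
```

Under this parametrization the variance tends to 2 rho^2 t as theta goes to 0, which is plain additive Gaussian noise; for theta > 0 the output is both contracted and perturbed. How theta, rho and t should be chosen to meet a given privacy level, and how that compares with the Gaussian mechanism in mean squared error, is the subject of the paper and is not reproduced here.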