Josef Taher

Multispectral airborne laser scanning for tree species classification: a benchmark of machine learning and deep learning algorithms

Apr 19, 2025

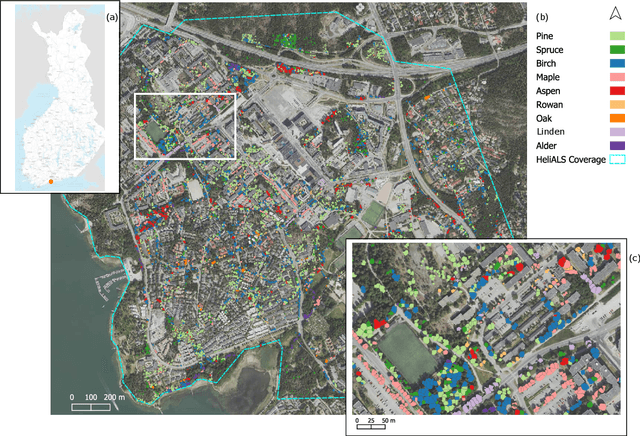

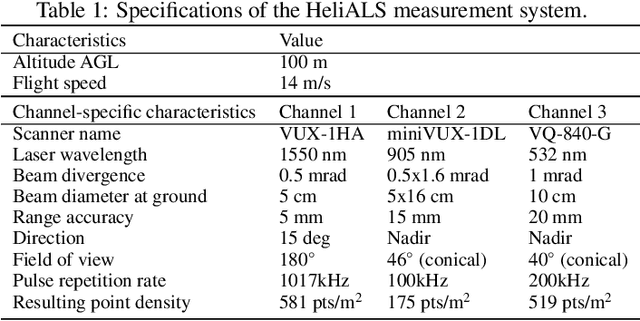

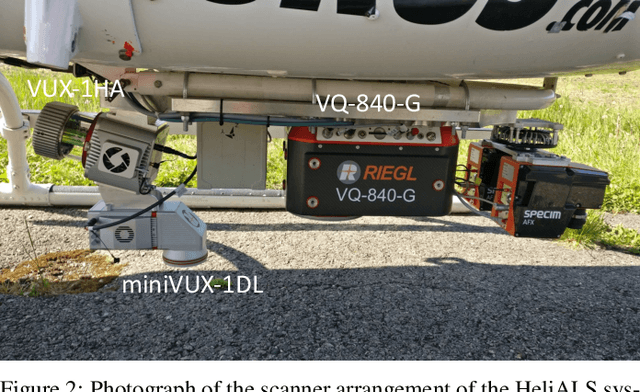

Abstract: Climate-smart and biodiversity-preserving forestry demands precise information on forest resources, extending to the individual tree level. Multispectral airborne laser scanning (ALS) has shown promise in automated point cloud processing and tree segmentation, but challenges remain in identifying rare tree species and leveraging deep learning techniques. This study addresses these gaps by conducting a comprehensive benchmark of machine learning and deep learning methods for tree species classification. For the study, we collected high-density multispectral ALS data (>1000 pts/m$^2$) at three wavelengths using the FGI-developed HeliALS system, complemented by existing Optech Titan data (35 pts/m$^2$), to evaluate the species classification accuracy of various algorithms in a test site located in Southern Finland. Based on 5261 test segments, our findings demonstrate that point-based deep learning methods, particularly a point transformer model, outperformed traditional machine learning and image-based deep learning approaches on high-density multispectral point clouds. For the high-density ALS dataset, a point transformer model provided the best performance, reaching an overall (macro-average) accuracy of 87.9% (74.5%) with a training set of 1065 segments and 92.0% (85.1%) with 5000 training segments. The best image-based deep learning method, DetailView, reached an overall (macro-average) accuracy of 84.3% (63.9%), whereas a random forest (RF) classifier achieved an overall (macro-average) accuracy of 83.2% (61.3%). Importantly, the overall classification accuracy of the point transformer model on the HeliALS data increased from 73.0% with no spectral information to 84.7% with single-channel reflectance, and to 87.9% with spectral information from all three channels.
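
The gap between the overall and macro-average figures above reflects class imbalance: rare species count little toward overall accuracy but weigh equally in a macro average. The following is a minimal sketch of the two metrics, assuming that the macro average is the unweighted mean of per-class accuracies (the abstract does not spell out its definition). The function name and the toy labels are hypothetical and are not data from the study.

```python
from collections import defaultdict


def overall_and_macro_accuracy(y_true, y_pred):
    """Return (overall accuracy, macro-average accuracy) for two label lists."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("label lists must be non-empty and equally long")
    correct = defaultdict(int)  # correctly classified segments per true class
    total = defaultdict(int)    # number of segments per true class
    for t, p in zip(y_true, y_pred):
        total[t] += 1
        if t == p:
            correct[t] += 1
    # Overall accuracy: share of all segments that were classified correctly.
    overall = sum(correct.values()) / len(y_true)
    # Macro average (assumed definition): mean of per-class accuracies,
    # so every class has the same weight regardless of its size.
    macro = sum(correct[c] / total[c] for c in total) / len(total)
    return overall, macro


# Toy example with invented labels: 8 common and 2 rare tree segments.
y_true = ["pine"] * 8 + ["aspen"] * 2
y_pred = ["pine"] * 8 + ["pine", "aspen"]
overall, macro = overall_and_macro_accuracy(y_true, y_pred)
print(f"overall={overall:.2f}, macro={macro:.2f}")
```

In this toy case a single misclassified rare segment lowers the macro average far more than the overall accuracy, which is the same pattern as in the reported figures.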

Unsupervised deep learning for semantic segmentation of multispectral LiDAR forest point clouds

Feb 10, 2025

Abstract: Point clouds captured with laser scanning systems from forest environments can be utilized in a wide variety of applications within forestry and plant ecology, such as the estimation of tree stem attributes, leaf angle distribution, and above-ground biomass. However, effectively utilizing the data in such tasks requires the semantic segmentation of the data into wood and foliage points, also known as leaf-wood separation. The traditional approach to leaf-wood separation has been geometry- and radiometry-based unsupervised algorithms, which tend to perform poorly on data captured with airborne laser scanning (ALS) systems, even with a high point density. While recent machine and deep learning approaches achieve great results even on sparse point clouds, they require manually labeled training data, which is often extremely laborious to produce. Multispectral (MS) information has been demonstrated to have potential for improving the accuracy of leaf-wood separation, but quantitative assessment of its effects has been lacking. This study proposes a fully unsupervised deep learning method, GrowSP-ForMS, which is specifically designed for leaf-wood separation of high-density MS ALS point clouds and based on the GrowSP architecture. GrowSP-ForMS achieved a mean accuracy of 84.3% and a mean intersection over union (mIoU) of 69.6% on our MS test set, outperforming the unsupervised reference methods by a significant margin. When compared to supervised deep learning methods, our model performed similarly to the slightly older PointNet architecture but was outclassed by more recent approaches. Finally, two ablation studies were conducted, which demonstrated that our proposed changes increased the test set mIoU of GrowSP-ForMS by 29.4 percentage points (pp) in comparison to the original GrowSP model and that utilizing MS data improved the mIoU by 5.6 pp from the monospectral case.
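
For readers unfamiliar with the mIoU metric quoted above, here is a minimal sketch of how it is commonly computed for a two-class (foliage/wood) point labelling. It uses the standard per-class definition IoU = TP / (TP + FP + FN) averaged over classes. The function names and the toy labels are hypothetical, and the evaluation code of the study may differ.

```python
def per_class_iou(y_true, y_pred, classes):
    """Return {class: IoU}, skipping classes absent from both label lists."""
    ious = {}
    for c in classes:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        union = tp + fp + fn
        if union:  # IoU is undefined when the class never occurs
            ious[c] = tp / union
    return ious


def mean_iou(y_true, y_pred, classes):
    """Unweighted mean of the per-class IoU values."""
    ious = per_class_iou(y_true, y_pred, classes)
    return sum(ious.values()) / len(ious)


# Toy example with invented point labels: F = foliage, W = wood.
y_true = list("FFFFFFWWWW")
y_pred = list("FFFFFWWWWF")
ious = per_class_iou(y_true, y_pred, ["F", "W"])
miou = mean_iou(y_true, y_pred, ["F", "W"])
print(ious, miou)
```

Because each class contributes equally, a weak result on the smaller wood class pulls the mIoU down even when most points are foliage and are labelled correctly.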

Unsupervised semantic segmentation of urban high-density multispectral point clouds

Oct 24, 2024

Abstract: The availability of highly accurate urban airborne laser scanning (ALS) data will increase rapidly in the future, especially as acquisition costs decrease, for example through the use of drones. Current challenges in data processing are related to the limited spectral information and low point density of most ALS datasets. Another challenge will be the growing need for annotated training data, frequently produced by manual processes, to enable semantic interpretation of point clouds. This study proposes to semantically segment new high-density (1200 points per square metre on average) multispectral ALS data with an unsupervised ground-aware deep clustering method, GroupSP, inspired by the unsupervised GrowSP algorithm. GroupSP divides the scene into superpoints as a preprocessing step. The neural network is trained iteratively by grouping the superpoints and using the grouping assignments as pseudo-labels. The predictions for the unseen data are given by over-segmenting the test set and mapping the predicted classes into ground truth classes manually or with automated majority voting. GroupSP obtained an overall accuracy (oAcc) of 97% and a mean intersection over union (mIoU) of 80%. When compared to other unsupervised semantic segmentation methods, GroupSP outperformed GrowSP and non-deep K-means. However, a supervised random forest classifier outperformed GroupSP. The labelling efforts in GroupSP can be minimal; it was shown that GroupSP can semantically segment seven urban classes (building, high vegetation, low vegetation, asphalt, rock, football field, and gravel) with an oAcc of 95% and an mIoU of 75% using only 0.004% of the available annotated points in the mapping assignment. Finally, the multispectral information was examined; adding each new spectral channel improved the mIoU. Additionally, echo deviation was valuable, especially when distinguishing ground-level classes.
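
The mapping step described above, where predicted classes are assigned to ground truth classes by majority voting over a small set of annotated points, can be sketched roughly as follows. This is an illustrative sketch only, not the GroupSP implementation: the function name, the use of `None` for unannotated points, and the toy data are all assumptions.

```python
from collections import Counter, defaultdict


def majority_vote_mapping(cluster_ids, labels):
    """Map each predicted cluster id to the most common ground-truth label
    among its annotated points. Unannotated points carry the label None."""
    votes = defaultdict(Counter)
    for cid, lab in zip(cluster_ids, labels):
        if lab is not None:
            votes[cid][lab] += 1
    return {cid: counter.most_common(1)[0][0] for cid, counter in votes.items()}


# Toy example: 8 points in 3 predicted clusters, only some of them annotated.
cluster_ids = [0, 0, 0, 1, 1, 2, 2, 2]
labels = ["building", None, "building", "asphalt", None,
          "gravel", "asphalt", "gravel"]

mapping = majority_vote_mapping(cluster_ids, labels)
# Every point, annotated or not, inherits the label voted for its cluster.
predicted = [mapping.get(cid) for cid in cluster_ids]
print(mapping)
print(predicted)
```

Only the annotated points vote, which is why a very small labelled fraction can suffice as long as each cluster contains at least a few of them.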

Towards High-Definition Maps: a Framework Leveraging Semantic Segmentation to Improve NDT Map Compression and Descriptivity

Jan 10, 2023

Abstract: High-Definition (HD) maps are needed for robust navigation of autonomous vehicles, but their size is limited by on-board storage capacity. To solve this, we propose a novel framework, Environment-Aware Normal Distributions Transform (EA-NDT), that significantly improves compression of the standard NDT map representation. The compressed representation of EA-NDT is based on semantic-aided clustering of point clouds, resulting in more optimal cells compared to the grid cells of standard NDT. To evaluate EA-NDT, we present an open-source implementation that extracts planar and cylindrical primitive features from a point cloud and further divides them into smaller cells to represent the data as an EA-NDT HD map. We collected an open suburban environment dataset and evaluated the EA-NDT HD map representation against the standard NDT representation. Compared to the standard NDT, EA-NDT achieved consistently at least 1.5x higher map compression while maintaining the same descriptive capability. Moreover, we showed that EA-NDT is capable of producing maps with a significantly higher descriptivity score when using the same number of cells as the standard NDT.
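
As background for the comparison above, the standard NDT representation stores one Gaussian (a mean and a covariance matrix) per grid cell instead of the raw points. The sketch below illustrates only that grid-based baseline, not EA-NDT's semantic-aided clustering. The function name, the cell size, and the two-point minimum per cell are assumptions chosen for illustration; practical implementations typically require more points per cell.

```python
import math
from collections import defaultdict


def ndt_cells(points, cell_size):
    """Group 3D points into cubic grid cells and fit a Gaussian per cell.

    Returns {cell index: (mean, covariance)} with the mean as a list of 3
    floats and the covariance as a 3x3 nested list (sample covariance).
    """
    buckets = defaultdict(list)
    for p in points:
        key = tuple(math.floor(c / cell_size) for c in p)
        buckets[key].append(p)

    cells = {}
    for key, pts in buckets.items():
        n = len(pts)
        if n < 2:
            continue  # assumed threshold: a covariance needs at least two points
        mean = [sum(p[i] for p in pts) / n for i in range(3)]
        cov = [[sum((p[i] - mean[i]) * (p[j] - mean[j]) for p in pts) / (n - 1)
                for j in range(3)]
               for i in range(3)]
        cells[key] = (mean, cov)
    return cells


# Toy point cloud (invented coordinates in metres): three points fall into
# cell (0, 0, 0), and one isolated point falls into cell (1, 0, 0).
points = [(0.1, 0.1, 0.0), (0.3, 0.1, 0.0), (0.2, 0.4, 0.0), (1.5, 0.2, 0.0)]
cells = ndt_cells(points, cell_size=1.0)
mean, cov = cells[(0, 0, 0)]
print(mean, cov[0][0])
```

Each cell costs a fixed number of values (3 for the mean plus 6 unique covariance entries) regardless of how many points it summarizes, so fewer, better-fitting cells translate directly into a smaller map.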

Leveraging Road Area Semantic Segmentation with Auxiliary Steering Task

Dec 19, 2022

Abstract: Robustness of different pattern recognition methods is one of the key challenges in autonomous driving, especially when driving in a wide variety of road environments and weather conditions, such as gravel roads and snowfall. Although one can collect data from these adverse conditions using cars equipped with sensors, it is quite tedious to annotate the data for training. In this work, we address this limitation and propose a CNN-based method that can leverage the steering wheel angle information to improve the road area semantic segmentation. As the steering wheel angle data can be easily acquired with the associated images, one could improve the accuracy of road area semantic segmentation by collecting data in new road environments without manual data annotation. We demonstrate the effectiveness of the proposed approach on two challenging data sets for autonomous driving and show that when the steering task is used in our segmentation model training, it leads to a 0.1-2.9% gain in the road area mIoU (mean Intersection over Union) compared to the corresponding reference transfer learning model.

* 11 pages, 4 figures (Supplementary material 6 pages, 3 figures). Author's accepted version of the contribution included in proceedings of the 21st International Conference on Image Analysis and Processing (ICIAP), 2022

Multimodal End-to-End Learning for Autonomous Steering in Adverse Road and Weather Conditions

Oct 28, 2020

Abstract: Autonomous driving is challenging in adverse road and weather conditions in which there might not be lane lines, the road might be covered in snow, and the visibility might be poor. We extend the previous work on end-to-end learning for autonomous steering to operate in these adverse real-life conditions with multimodal data. We collected 28 hours of driving data in several road and weather conditions and trained convolutional neural networks to predict the car steering wheel angle from front-facing color camera images and lidar range and reflectance data. We compared the performance of the CNN models based on the different modalities, and our results show that the lidar modality improves the performance of different multimodal sensor-fusion models. We also performed on-road tests with different models, and they support this observation.
