José-Ramón Cano

Semi-Supervised Constrained Clustering: An In-Depth Overview, Ranked Taxonomy and Future Research Directions

Feb 28, 2023

Abstract: Clustering is a well-known unsupervised machine learning approach capable of automatically grouping discrete sets of instances with similar characteristics. Constrained clustering is a semi-supervised extension to this process that can be used when expert knowledge is available to indicate constraints that can be exploited. Well-known examples of such constraints are must-link (indicating that two instances belong to the same group) and cannot-link (two instances definitely do not belong together). The research area of constrained clustering has grown significantly over the years with a large variety of new algorithms and more advanced types of constraints being proposed. However, no unifying overview is available to easily understand the wide variety of available methods, constraints and benchmarks. To remedy this, this study presents the background of constrained clustering in detail and provides a novel ranked taxonomy of the types of constraints that can be used in constrained clustering. In addition, it focuses on instance-level pairwise constraints, and gives an overview of their applications and historical context. It then presents a statistical analysis covering 307 constrained clustering methods, categorizes them according to their features, and provides a ranking score indicating which methods have the most potential based on their popularity and validation quality. Finally, based upon this analysis, potential pitfalls and future research directions are provided.
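The two pairwise constraint types named in the abstract can be illustrated with a minimal sketch. It only counts how many must-link and cannot-link constraints a given cluster assignment breaks; the function name `count_violations` and the toy data are assumptions made for illustration and are not taken from the paper.

```python
from typing import Sequence, Tuple

Pair = Tuple[int, int]  # indices of two instances


def count_violations(
    labels: Sequence[int],
    must_link: Sequence[Pair],
    cannot_link: Sequence[Pair],
) -> int:
    """Count the pairwise constraints broken by a cluster assignment.

    Hypothetical helper for illustration only.
    """
    # A must-link pair is violated when the two instances are in different clusters.
    broken_ml = sum(1 for i, j in must_link if labels[i] != labels[j])
    # A cannot-link pair is violated when the two instances share a cluster.
    broken_cl = sum(1 for i, j in cannot_link if labels[i] == labels[j])
    return broken_ml + broken_cl


# Toy example: four instances assigned to two clusters.
labels = [0, 0, 1, 1]
must_link = [(0, 1), (1, 2)]    # (1, 2) is violated
cannot_link = [(0, 3), (2, 3)]  # (2, 3) is violated
print(count_violations(labels, must_link, cannot_link))
```

A count like this is one simple way to compare assignments against expert constraints; the methods surveyed in the paper use the constraints in many different ways that this sketch does not cover.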

Semi-supervised Clustering with Two Types of Background Knowledge: Fusing Pairwise Constraints and Monotonicity Constraints

Feb 25, 2023

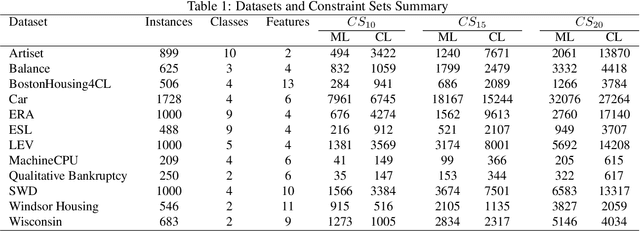

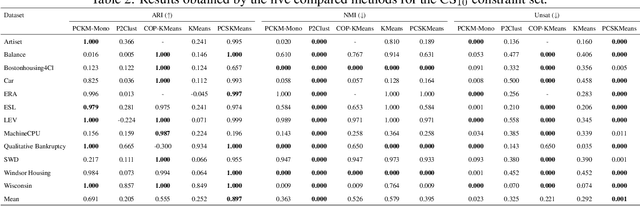

Abstract: This study addresses the problem of performing clustering in the presence of two types of background knowledge: pairwise constraints and monotonicity constraints. To achieve this, the formal framework for clustering under monotonicity constraints is first defined, resulting in a specific distance measure. Pairwise constraints are then integrated by designing an objective function that combines the proposed distance measure and a pairwise constraint-based penalty term, in order to fuse both types of information. This objective function can be optimized with an EM optimization scheme. The proposed method is the first approach to this problem, as it is the first method designed to work with both types of background knowledge. Our proposal is tested on a variety of benchmark datasets and in a real-world case study.

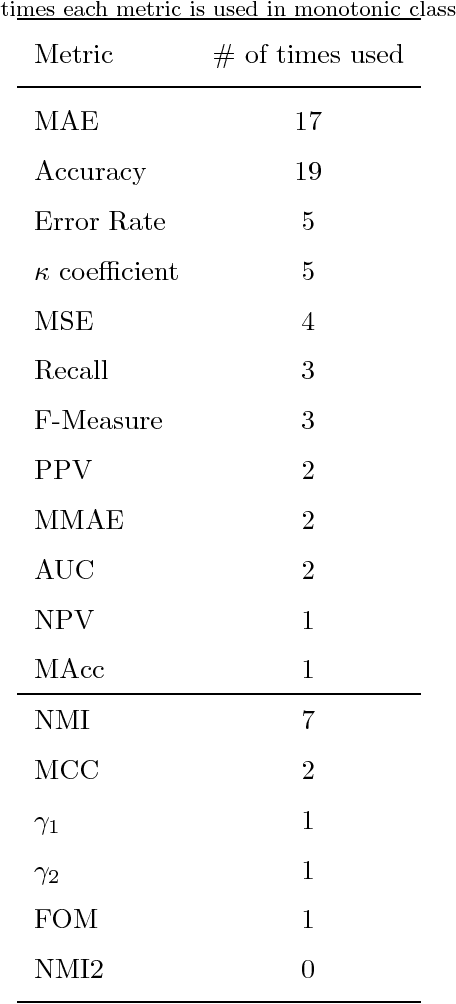

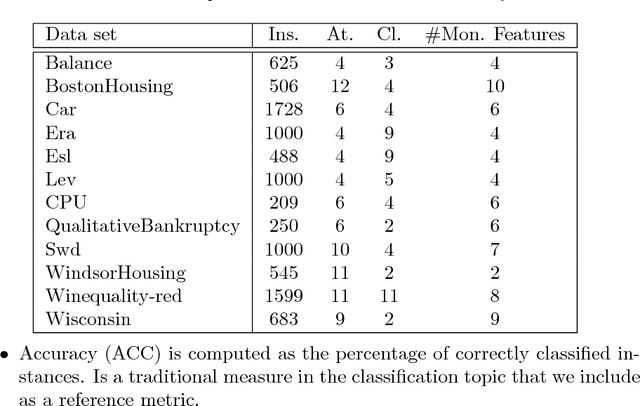

Monotonic classification: an overview on algorithms, performance measures and data sets

Nov 17, 2018

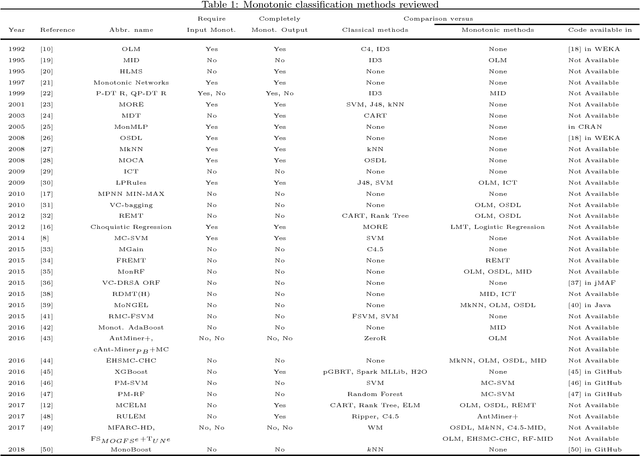

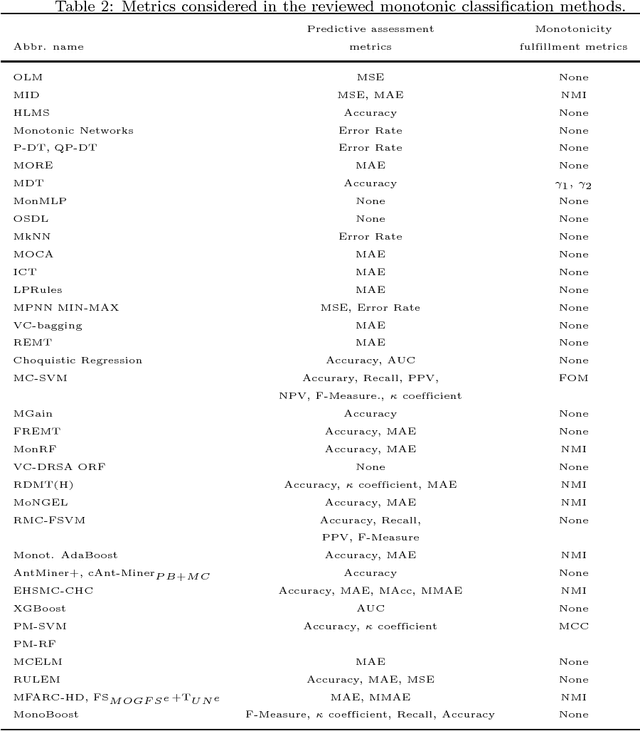

Abstract: Currently, knowledge discovery in databases is an essential step to identify valid, novel and useful patterns for decision making. There are many real-world scenarios, such as bankruptcy prediction, option pricing or medical diagnosis, where the classification models to be learned need to fulfil restrictions of monotonicity (i.e. the target class label should not decrease when input attribute values increase). For instance, it is rational to assume that a higher debt ratio of a company should never result in a lower level of bankruptcy risk. Consequently, there is a growing interest from the data mining research community concerning monotonic predictive models. This paper aims to present an overview of the literature in the field, analyzing existing techniques and proposing a taxonomy of the algorithms based on the type of model generated. For each method, we review the quality metrics considered in the evaluation and the different data sets and monotonic problems used in the analysis. In this way, this paper serves as an overview of the research on monotonic classification in the specialized literature and can be used as a functional guide to the field.
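The monotonicity restriction described in the abstract can be checked directly on a labelled data set. The sketch below lists the pairs of instances that break it, assuming that every attribute is expected to have a non-decreasing effect on the class label; the function names and the toy data are hypothetical and are not taken from the paper.

```python
from itertools import permutations
from typing import List, Sequence, Tuple


def leq(a: Sequence[float], b: Sequence[float]) -> bool:
    """True when every attribute of a is less than or equal to that of b."""
    return all(x <= y for x, y in zip(a, b))


def monotonicity_violations(
    X: Sequence[Sequence[float]], y: Sequence[int]
) -> List[Tuple[int, int]]:
    """Return ordered pairs (i, j) where X[i] <= X[j] but y[i] > y[j].

    Hypothetical helper: a pair is reported when the attributes do not
    decrease from i to j, yet the class label does.
    """
    return [
        (i, j)
        for i, j in permutations(range(len(X)), 2)
        if leq(X[i], X[j]) and y[i] > y[j]
    ]


# Toy example: one attribute (say, a debt ratio) and an ordinal risk label.
X = [(0.2,), (0.5,), (0.8,)]
y = [1, 0, 2]
print(monotonicity_violations(X, y))
```

Here the second instance has a higher attribute value than the first but a lower label, so the pair `(0, 1)` is reported. The check compares all pairs and is therefore quadratic in the number of instances.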

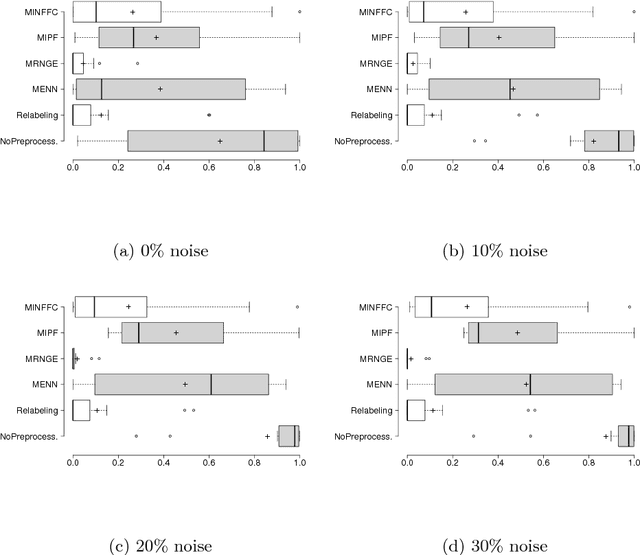

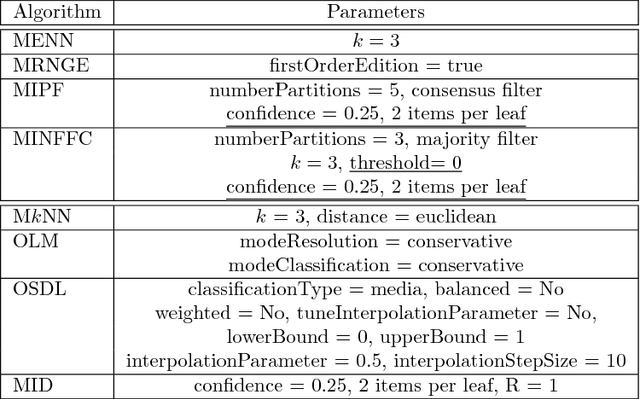

Label Noise Filtering Techniques to Improve Monotonic Classification

Oct 21, 2018

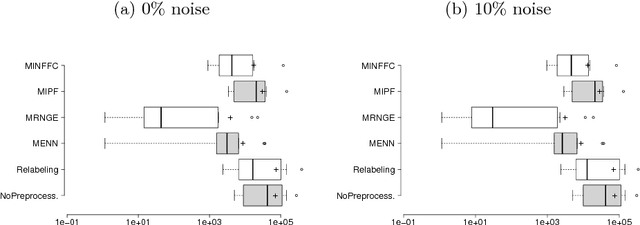

Abstract: Monotonic ordinal classification has attracted growing interest from researchers and practitioners in the machine learning community in recent years. In real applications, problems with monotonicity constraints are very frequent. To construct predictive monotone models for those problems, many classifiers require as input a data set satisfying the monotonicity relationships among all samples. Changing the class labels of the data set (relabelling) is useful for this. Relabelling is assumed to be an important building block for the construction of monotone classifiers and has been shown to improve predictive performance. In this paper, we address the construction of monotone datasets by treating as noise the cases that do not meet the monotonicity restrictions. For the first time in the specialized literature, we propose the use of noise filtering algorithms in a preprocessing stage with a double goal: to increase both the monotonicity index of the models and the accuracy of the predictions for different monotonic classifiers. The experiments are performed over 12 datasets coming from classification and regression problems and show that our scheme improves the prediction capabilities of the monotonic classifiers compared with applying them to the original and relabeled datasets. In addition, we include an analysis of the noise filtering process in the particular case of wine quality classification to understand its effect on the predictive models generated.
