Jonathan Grizou

Self-Calibrating BCIs: Ranking and Recovery of Mental Targets Without Labels

Jun 11, 2025

Abstract:We consider the problem of recovering a mental target (e.g., an image of a face) that a participant has in mind from paired EEG (i.e., brain responses) and image (i.e., perceived faces) data collected during interactive sessions without access to labeled information. The problem has been previously explored with labeled data but not via self-calibration, where labeled data is unavailable. Here, we present the first framework and an algorithm, CURSOR, that learns to recover unknown mental targets without access to labeled data or pre-trained decoders. Our experiments on naturalistic images of faces demonstrate that CURSOR can (1) predict image similarity scores that correlate with human perceptual judgments without any label information, (2) use these scores to rank stimuli against an unknown mental target, and (3) generate new stimuli indistinguishable from the unknown mental target (validated via a user study, N=53).

Curio: A Cost-Effective Solution for Robotics Education

May 24, 2025

Abstract:Student engagement is one of the key challenges in robotics and artificial intelligence (AI) education. Tangible learning approaches, such as educational robots, provide an effective way to enhance engagement and learning by offering real-world applications to bridge the gap between theory and practice. However, existing platforms often face barriers such as high cost or limited capabilities. In this paper, we present Curio, a cost-effective, smartphone-integrated robotics platform designed to lower the entry barrier to robotics and AI education. With a retail price below $50, Curio is more affordable than similar platforms. By leveraging smartphones, Curio eliminates the need for onboard processing units, dedicated cameras, and additional sensors while maintaining the ability to perform AI-based tasks. To evaluate the impact of Curio on student engagement, we conducted a case study with 20 participants, in which we examined usability, engagement, and the potential for integration into AI and robotics education. The results indicate high engagement and motivation levels across all participants. Additionally, 95% of participants reported an improvement in their understanding of robotics. Findings suggest that using a robotic system such as Curio can enhance engagement and hands-on learning in robotics and AI education. All resources and projects with Curio are available at trycurio.com.

IFTT-PIN: A Self-Calibrating PIN-Entry Method

Jul 02, 2024

Abstract:Personalising an interface to the needs and preferences of a user often incurs additional interaction steps. In this paper, we demonstrate a novel method that enables the personalising of an interface without the need for explicit calibration procedures, via a process we call self-calibration. A second-order effect of self-calibration is that an outside observer cannot easily infer what a user is trying to achieve because they cannot interpret the user's actions. To explore this security angle, we developed IFTT-PIN (If This Then PIN) as the first self-calibrating PIN-entry method. When using IFTT-PIN, users are free to choose any button for any meaning without ever explicitly communicating their choice to the machine. IFTT-PIN infers both the user's PIN and their preferred button mapping at the same time. This paper presents the concept, implementation, and interactive demonstrations of IFTT-PIN, as well as an evaluation against shoulder surfing attacks. Our study (N=24) shows that adding self-calibration to an existing PIN-entry method decreased the PIN attack decoding rate by a factor of ca. 8.5, a statistically significant decrease (p=1.1e-9), while decreasing the PIN entry encoding rate by a factor of only ca. 1.4 (p=0.02), leading to a positive security-usability trade-off. IFTT-PIN's entry rate significantly improved 21 days after first exposure to the method (p=3.6e-6), suggesting self-calibrating interfaces are memorable despite using an initially undefined user interface. Self-calibration methods might lead to novel opportunities for interaction that are more inclusive and versatile, a potentially interesting challenge for the community. A short introductory video is available at https://youtu.be/pP5sfniNRns.

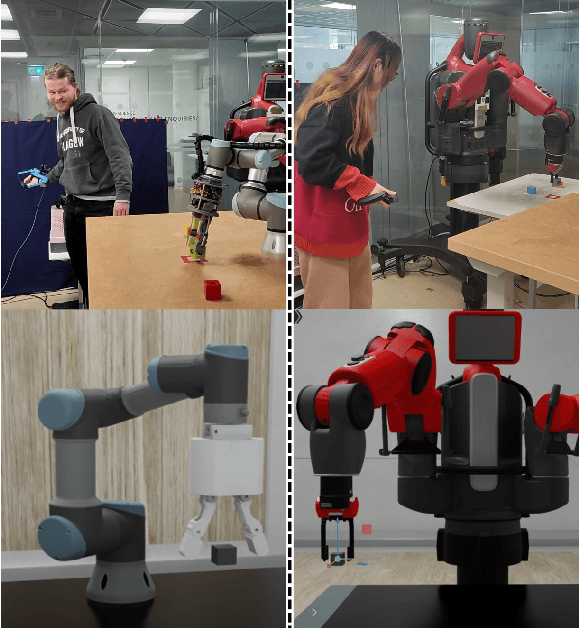

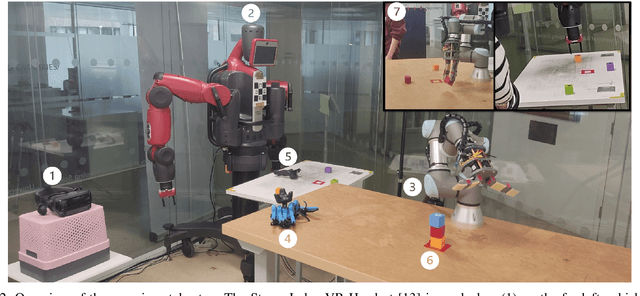

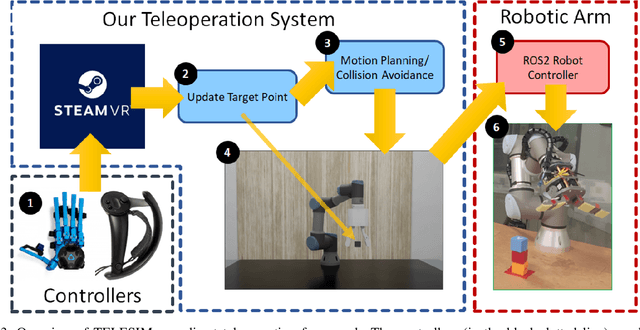

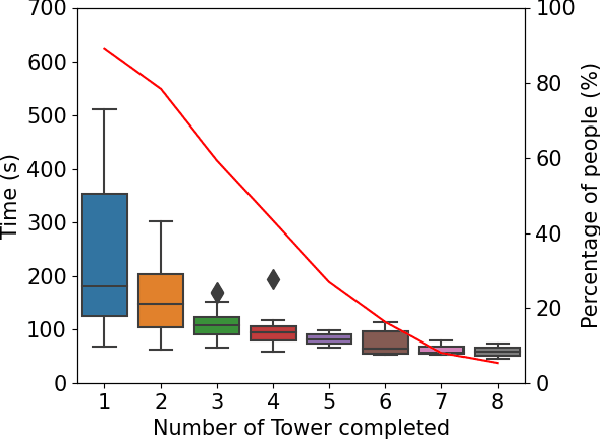

TELESIM: A Modular and Plug-and-Play Framework for Robotic Arm Teleoperation using a Digital Twin

Sep 20, 2023

Abstract:We present TELESIM, a modular and plug-and-play framework for direct teleoperation of a robotic arm using a digital twin as the interface between the user and the robotic system. We tested TELESIM by performing a user survey with 37 participants on two different robots using two different control modalities: a virtual reality controller and a finger mapping hardware controller using different grasping systems. Users were asked to teleoperate the robot to pick and place 3 cubes in a tower and to repeat this task as many times as possible in 10 minutes, with only 5 minutes of training beforehand. Our experimental results show that most users succeeded in building at least one tower of 3 cubes regardless of the control modality or robot used, demonstrating the user-friendliness of TELESIM.

Interactive introduction to self-calibrating interfaces

Dec 12, 2022

Abstract:This interactive paper aims to provide an intuitive understanding of the self-calibrating interface paradigm. Under this paradigm, you can choose how to use an interface which can adapt to your preferences on the fly. We introduce a PIN entering task and gradually release constraints, moving from a pre-calibrated interface to a self-calibrating interface while increasing the complexity of input modalities from buttons, to points on a map, to sketches, and finally to spoken words. This is not a traditional research paper with a hypothesis and experimental results to support claims; the research supporting this work has already been done and we refer to it extensively in the later sections. Instead, our aim is to walk you through an intriguing interaction paradigm in small logical steps with supporting illustrations, interactive demonstrations, and videos to reinforce your learning. We designed this paper for the enjoyment of curious minds of any background; it is written in plain English, and no prior knowledge is necessary. All demos are available online at openvault.jgrizou.com and linked individually in the paper.

IFTT-PIN: A PIN-Entry Method Leveraging the Self-Calibration Paradigm

May 26, 2022

Abstract:IFTT-PIN is a self-calibrating version of the PIN-entry method introduced in Roth et al. (2004) [1]. In [1], digits are split into two sets, each of which is assigned a color. To communicate their digit, users press the button whose color matches the color assigned to their digit, which can thus be identified by elimination after a few iterations. IFTT-PIN uses the same principle but does not pre-assign colors to each button. Instead, users are free to choose which button to use for each color. The button-to-color mapping only exists in the user's mind and is never directly communicated to the interface. In other words, IFTT-PIN infers both the user's PIN and their preferred button-to-color mapping at the same time, a process called self-calibration. In this paper, we present online interactive demonstrations of IFTT-PIN with and without self-calibration and introduce the key concepts and assumptions making self-calibration possible. IFTT-PIN can be tested at https://jgrizou.github.io/IFTT-PIN/ with a video introduction available at https://youtu.be/5I1ibPJdLHM. We review related work in the field of brain-computer interfaces and further propose self-calibration as a novel approach to protect users against shoulder surfing attacks. Finally, we introduce a vault cracking challenge as a test of usability and security that was informally tested at our institute. With IFTT-PIN, we wish to demonstrate a new interactive experience where users can decide actively and on-the-fly how to use an interface. The self-calibration paradigm might lead to novel opportunities for interaction in other applications or domains. We hope this work will inspire the community to invent them.
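
The elimination principle described for the pre-calibrated method in [1] can be illustrated with a minimal Python sketch. This is not the authors' implementation: the function name `identify_digit`, the color labels `"A"` and `"B"`, and the choice to split the remaining candidates evenly at each round are all assumptions made for illustration. Here the machine knows which button stands for which color, so each press removes every candidate digit shown in the other color.

```python
import random

def identify_digit(secret, rng):
    """Identify one digit by elimination (hypothetical sketch, not the authors' code).

    Each round, the remaining candidates are split into two color sets.
    The simulated user presses the button whose color matches their digit,
    and every candidate shown in the other color is discarded.
    """
    candidates = list(range(10))
    rounds = 0
    while len(candidates) > 1:
        rng.shuffle(candidates)
        half = len(candidates) // 2
        # Assumption: color "A" for the first half, color "B" for the rest.
        color_a = set(candidates[:half])
        # Simulated user: press the button matching the color of the secret digit.
        pressed = "A" if secret in color_a else "B"
        # Keep only the candidates whose color matches the pressed button.
        candidates = [d for d in candidates if (d in color_a) == (pressed == "A")]
        rounds += 1
    return candidates[0], rounds

if __name__ == "__main__":
    digit, rounds = identify_digit(7, random.Random(0))
    print(digit, rounds)
```

Because the candidates are roughly halved at every round in this sketch, a single digit is isolated in at most four rounds.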

Metaversal Learning Environments: Measuring, predicting and improving interpersonal effectiveness

May 05, 2022

Abstract:Experiential learning has been known to be an engaging and effective modality for personal and professional development. The Metaverse provides ample opportunities for the creation of environments in which such experiential learning can occur. In this work, we introduce a novel architecture that combines artificial intelligence and virtual reality to create a highly immersive and efficient learning experience using avatars. The framework allows us to measure the interpersonal effectiveness of an individual interacting with the avatar. We first present a small pilot study and its results, which were used to enhance the framework. We then present a larger study using the enhanced framework to measure, assess, and predict the interpersonal effectiveness of individuals interacting with an avatar. Results reveal that individuals with deficits in their interpersonal effectiveness show a significant improvement in performance after multiple interactions with an avatar. The results also reveal that individuals interact naturally with avatars within this framework and exhibit behavioral traits similar to those they would show in the real world. We use this as a basis to analyze the underlying audio and video data streams of individuals during these interactions. Finally, we extract relevant features from these data and present a machine-learning based approach to predict interpersonal effectiveness during human-avatar conversation. We conclude by discussing the implications of these findings for building beneficial applications for the real world.

IFTT-PIN: Demonstrating the Self-Calibration Paradigm on a PIN-Entry Task

Apr 05, 2022

Abstract:We demonstrate IFTT-PIN, a self-calibrating version of the PIN-entry method introduced in Roth et al. (2004) [1]. In [1], digits are split into two sets, each of which is assigned a color. To communicate their digit, users press the button whose color matches the color assigned to their digit, which can be identified by elimination after a few iterations. IFTT-PIN uses the same principle but does not pre-assign colors to each button. Instead, users are free to choose which button to use for each color. IFTT-PIN infers both the user's PIN and their preferred button-to-color mapping at the same time, a process called self-calibration. Different versions of IFTT-PIN can be tested at https://jgrizou.github.io/IFTT-PIN/ and a video introduction is available at https://youtu.be/5I1ibPJdLHM.
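
One way to picture how a digit and a button-to-color mapping could be inferred together is a consistency check, sketched below in Python. This is a simplified illustration and not the published algorithm: it assumes the user always presses the same button for a given color, and the names `self_calibrating_entry`, `user_mapping`, the buttons `"left"` and `"right"`, and the colors `"A"` and `"B"` are hypothetical. For every candidate digit, the machine records which color each pressed button would have to mean if that candidate were the true digit. A candidate is discarded as soon as one button would have to mean two different colors.

```python
import random

def self_calibrating_entry(secret, user_mapping, rng, max_rounds=100):
    """Infer a digit and a button-to-color mapping together (hypothetical sketch).

    user_mapping (color -> button) is known only to the simulated user.
    """
    # One hypothesis per candidate digit: the button -> color mapping it implies.
    hypotheses = {d: {} for d in range(10)}
    for round_no in range(1, max_rounds + 1):
        digits = list(range(10))
        rng.shuffle(digits)
        # Randomly color five digits "A" and the other five "B".
        color_of = {d: ("A" if i < 5 else "B") for i, d in enumerate(digits)}
        # The user presses their own button for the color of their digit.
        button = user_mapping[color_of[secret]]
        for d in list(hypotheses):
            implied = color_of[d]  # what `button` must mean if d were the digit
            if hypotheses[d].setdefault(button, implied) != implied:
                del hypotheses[d]  # the button would need two meanings
        if len(hypotheses) == 1:
            digit, mapping = next(iter(hypotheses.items()))
            return digit, mapping, round_no
    raise RuntimeError("no unique digit identified within max_rounds")

if __name__ == "__main__":
    mapping = {"A": "left", "B": "right"}  # the user's private choice
    print(self_calibrating_entry(3, mapping, random.Random(1)))
```

The true digit is never discarded because the simulated user is consistent, whereas a wrong digit only survives as long as its color happens to agree with the presses observed so far. The returned mapping may be partial if only one button was pressed before the digit became unique.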

The Open Vault Challenge -- Learning how to build calibration-free interactive systems by cracking the code of a vault

Jun 06, 2019

Abstract:This demo takes the form of a challenge to the IJCAI community. A physical vault, secured by a 4-digit code, will be placed in the demo area. The author will publicly open the vault by entering the code on a touch-based interface, as many times as requested. The challenge to the IJCAI participants will be to crack the code, open the vault, and collect its content. The interface is based on previous work on calibration-free interactive systems that enable a user to start instructing a machine without the machine knowing how to interpret the user's actions beforehand. The intent and the behavior of the human are simultaneously learned by the machine. An online demo and videos are available for readers to participate in the challenge. An additional interface using vocal commands will be revealed on the demo day, demonstrating the scalability of our approach to continuous input signals.

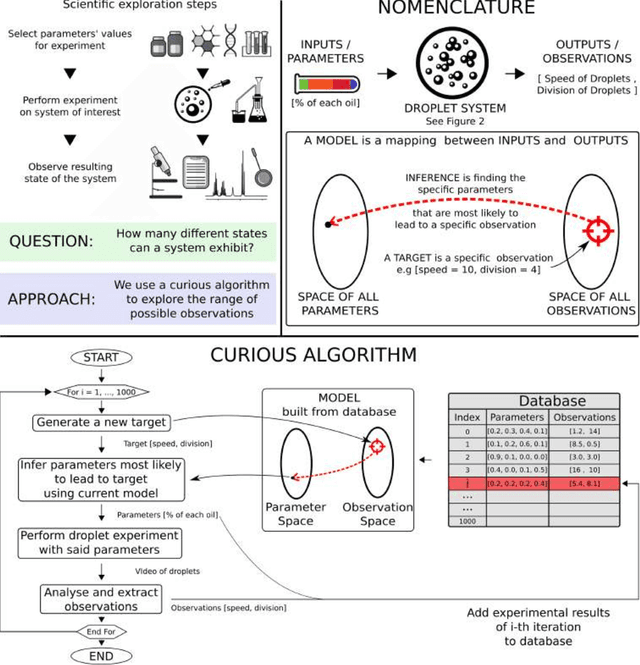

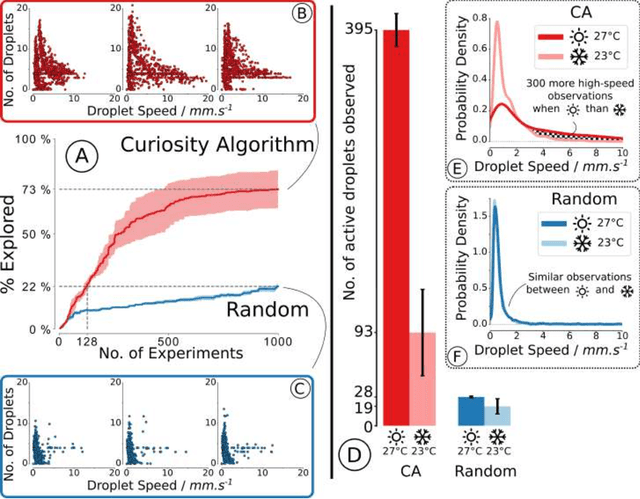

Exploration of Self-Propelling Droplets Using a Curiosity Driven Robotic Assistant

Apr 22, 2019

Abstract:We describe a chemical robotic assistant equipped with a curiosity algorithm (CA) that can efficiently explore the states a complex chemical system can exhibit. The CA-robot is designed to explore formulations in an open-ended way with no explicit optimization target. By applying the CA-robot to the study of self-propelling multicomponent oil-in-water droplets, we are able to observe an order of magnitude more variety of droplet behaviours than is possible with a random parameter search given the same budget. We demonstrate that the CA-robot enabled the discovery of a sudden and highly specific response of droplets to slight temperature changes. Six modes of self-propelled droplet motion were identified and classified using a time-temperature phase diagram and probed using a variety of techniques including NMR. This work illustrates how target-free search can significantly increase the rate of unpredictable observations leading to new discoveries with potential applications in formulation chemistry.
